PAKDD 2019 Slides Download | Keynote Talk by Professor Jennifer Neville

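PAKDD is one of the longest-running and leading international conferences in data mining. It provides an international forum where researchers and industry practitioners share new ideas, original research results, and practical development experience in KDD-related fields.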

The 23rd PAKDD conference, PAKDD 2019, was held in Macau from April 15 to 17, and AI Yanxishe (AI 研习社) livestreamed it from the venue.

Watch the videos: https://ai.yanxishe.com/page/meeting/71

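We have also set up a WeChat group for students interested in data mining. Add our assistant on WeChat and she will invite you to join the group chat.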

To give back to our community, we obtained the slides from some of the speakers. Click [Read the original] to get the download link.

Keynote Speaker: Jennifer Neville

Talk Title

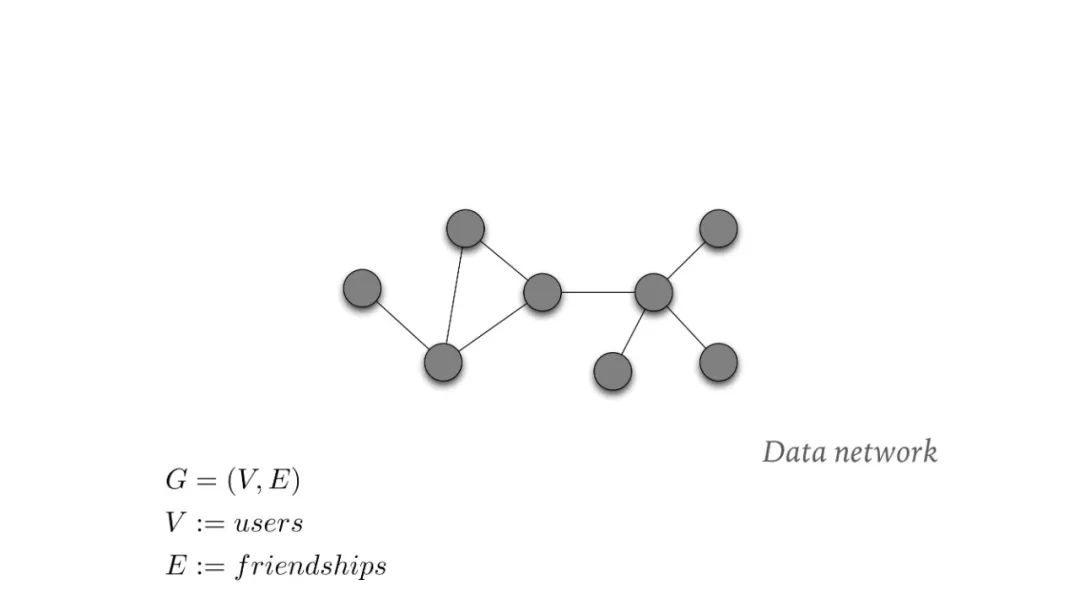

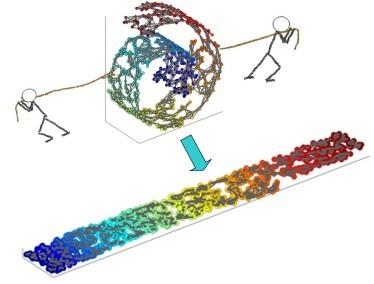

Towards Relational AI -- the good, the bad, and the ugly of learning over networks

Speaker Bio

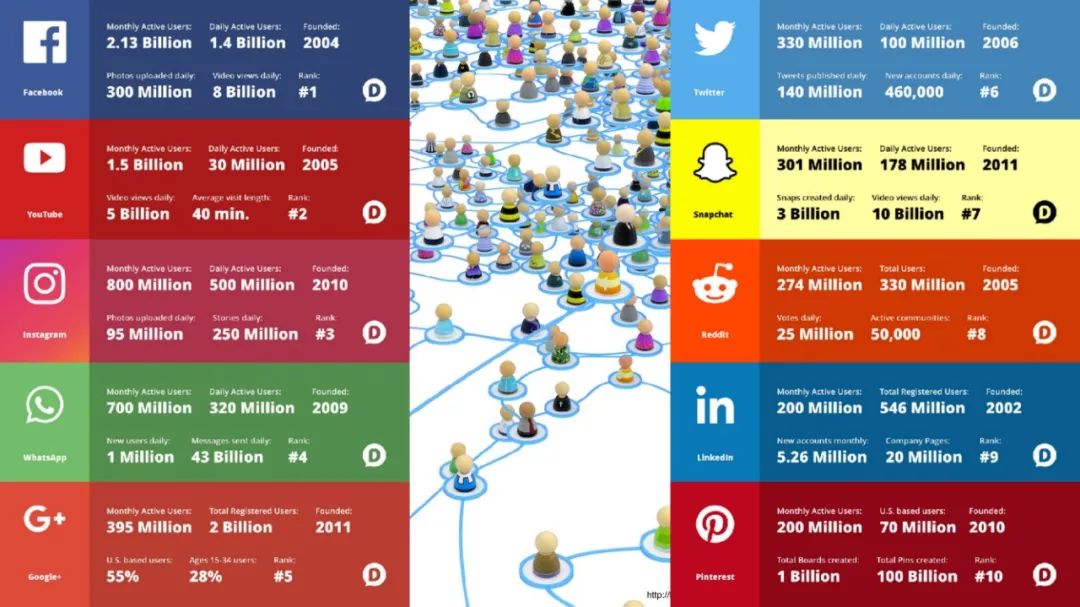

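Jennifer Neville is an associate professor of Computer Science and Statistics at Purdue University. She received her PhD from the University of Massachusetts Amherst in 2006. She is the program committee chair of the 19th SIAM International Conference on Data Mining (SDM), served on the AAAI Executive Council from 2015 to 2018, and was program committee chair of the ACM International Conference on Web Search and Data Mining (WSDM) in 2016. In 2012 she was selected as a member of the DARPA Computer Science Study Group (CSSG). She has published more than 100 peer-reviewed papers with over 7,500 citations. Her research focuses on developing machine learning and artificial intelligence techniques for complex real-world problems in relational domains such as social networks, information networks, and physical networks.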

Talk Overview

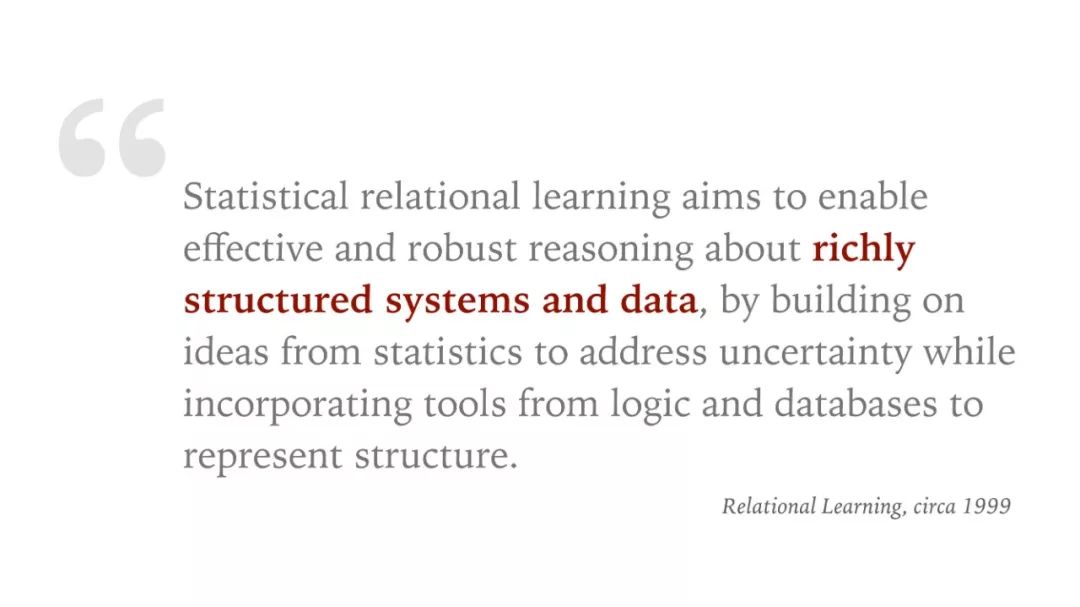

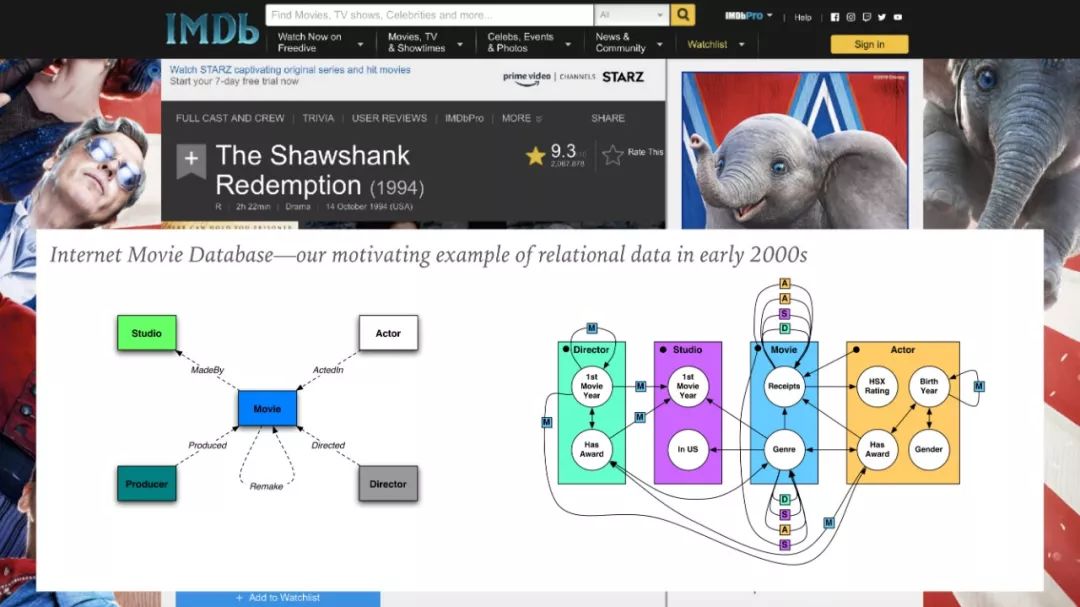

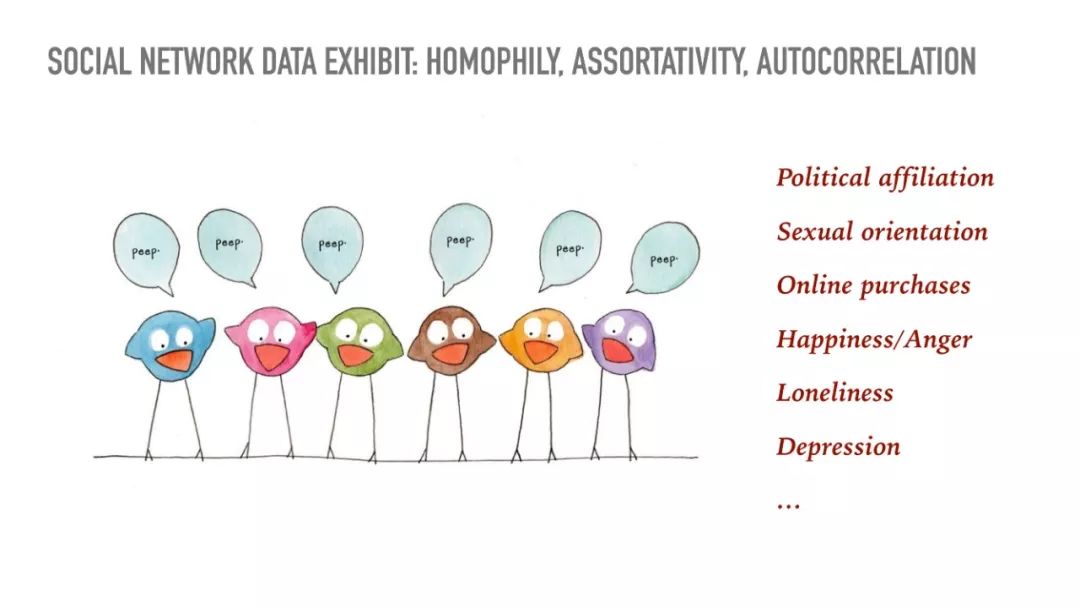

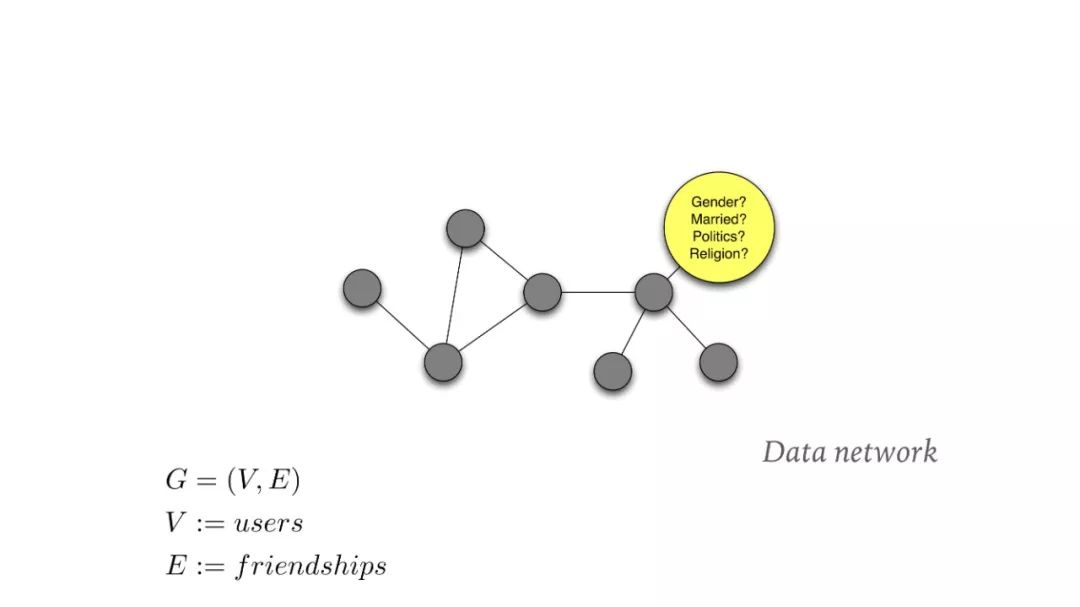

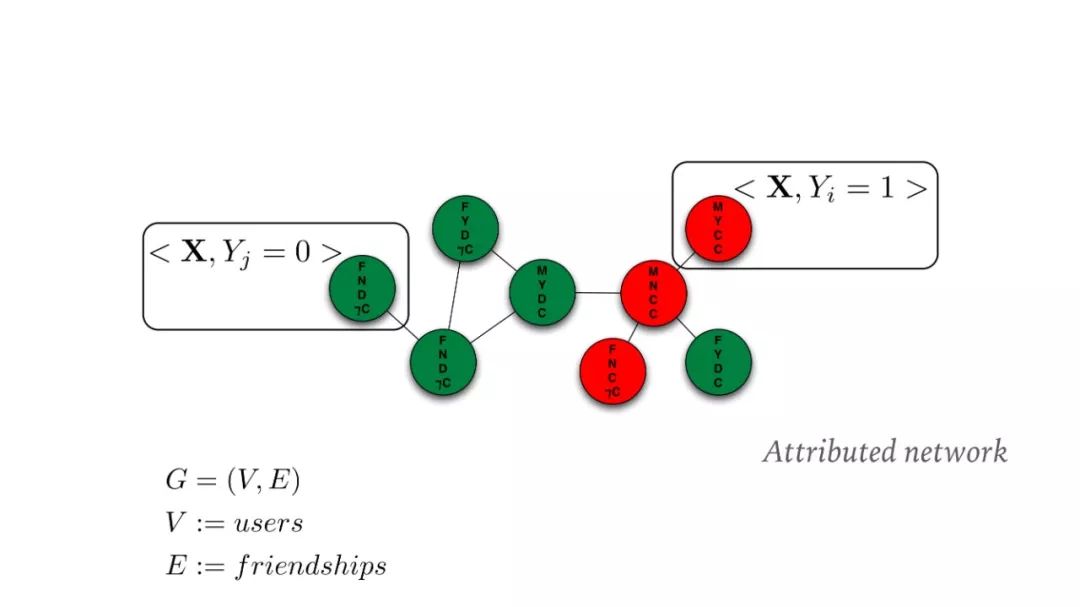

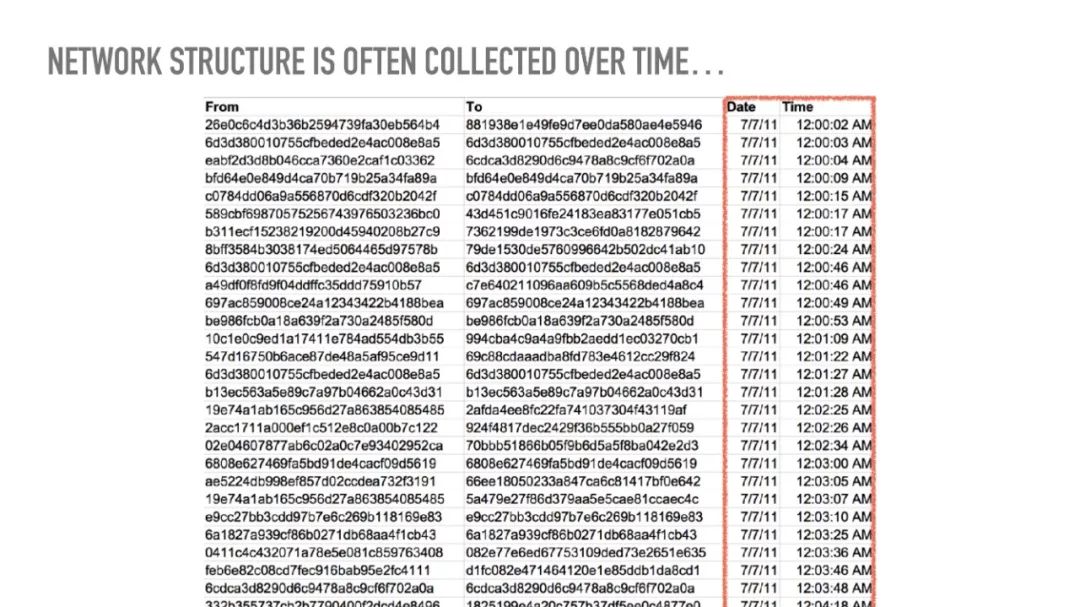

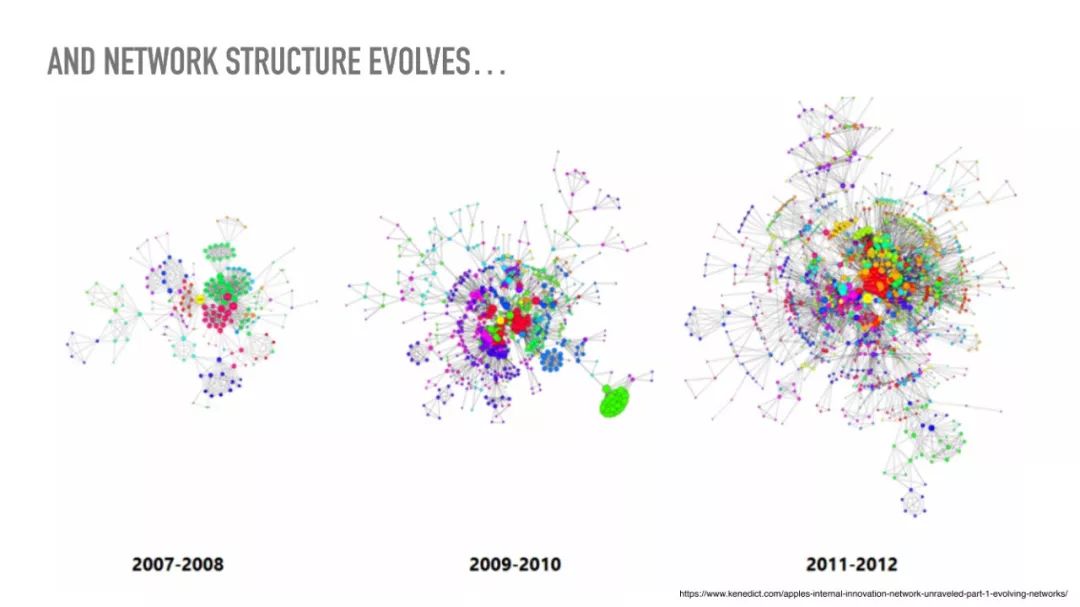

In the last 20 years, there has been a great deal of research on machine learning methods for graphs, networks, and other types of relational data. By moving beyond the independence assumptions of more traditional ML methods, relational models are now able to successfully exploit the additional information that is often observed in relationships among entities. Specifically, network models can use relational information to improve predictions about user interests, behavior, and interactions, particularly when individual data is sparse. The tradeoff, however, is that the heterogeneity, partial observability, and interdependence of large-scale network data raise several algorithmic and statistical challenges that make it difficult to develop efficient and unbiased methods. In this talk, I will discuss these issues while surveying several general approaches used for relational learning in large-scale social and information networks. In addition, to reflect on the move toward pervasive use of these models in personalized online systems, I will discuss potential implications for privacy, the polarization of communities, and the spread of misinformation.

Slide Screenshots

Note: These materials are provided for personal study and reference only. Do not use them for commercial purposes.


Click [Read the original] to go to the download page.