[Preview] CAS PhD Student: The Development and Current State of Transfer Learning | Open Class

Background

▼

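Riding the machine learning boom of recent years, transfer learning has become one of the hottest research directions today. At NIPS 2016, machine learning expert Andrew Ng said that "transfer learning will be the driving force behind the next wave of machine learning."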

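Transfer learning emphasizes transferring knowledge across different domains to accomplish tasks that are hard for traditional machine learning. For example, traditional machine learning relies on large amounts of labeled data to train a model; when labeled data is scarce, it struggles to produce a model that generalizes well. In that situation, transfer learning can draw on knowledge from other related domains to help train a model that generalizes better. Transfer learning is an important means of addressing the fundamental problem that labeled data is hard to obtain, and an important approach for advancing research on unsupervised learning in the future.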

Suggested pre-reading

"A Survey on Transfer Learning"

Paper: http://ieeexplore.ieee.org/abstract/document/5288526/

A major assumption in many machine learning and data mining algorithms is that the training and future data must be in the same feature space and have the same distribution. However, in many real-world applications, this assumption may not hold. For example, we sometimes have a classification task in one domain of interest, but we only have sufficient training data in another domain of interest, where the latter data may be in a different feature space or follow a different data distribution. In such cases, knowledge transfer, if done successfully, would greatly improve the performance of learning by avoiding much expensive data-labeling efforts. In recent years, transfer learning has emerged as a new learning framework to address this problem. This survey focuses on categorizing and reviewing the current progress on transfer learning for classification, regression, and clustering problems. In this survey, we discuss the relationship between transfer learning and other related machine learning techniques such as domain adaptation, multitask learning and sample selection bias, as well as covariate shift. We also explore some potential future issues in transfer learning research.

《小王爱迁移》 ("Xiao Wang Loves Transfer", a Zhihu column)

Article series: https://zhuanlan.zhihu.com/wjdml

Talk outline

▼

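1. What is transfer learning?

2. Why use transfer learning?

3. Basic categories of transfer learning

4. Representative transfer learning algorithms

5. Combining deep learning with transfer learning

6. Recent advances in transfer learning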

Talk topic

▼

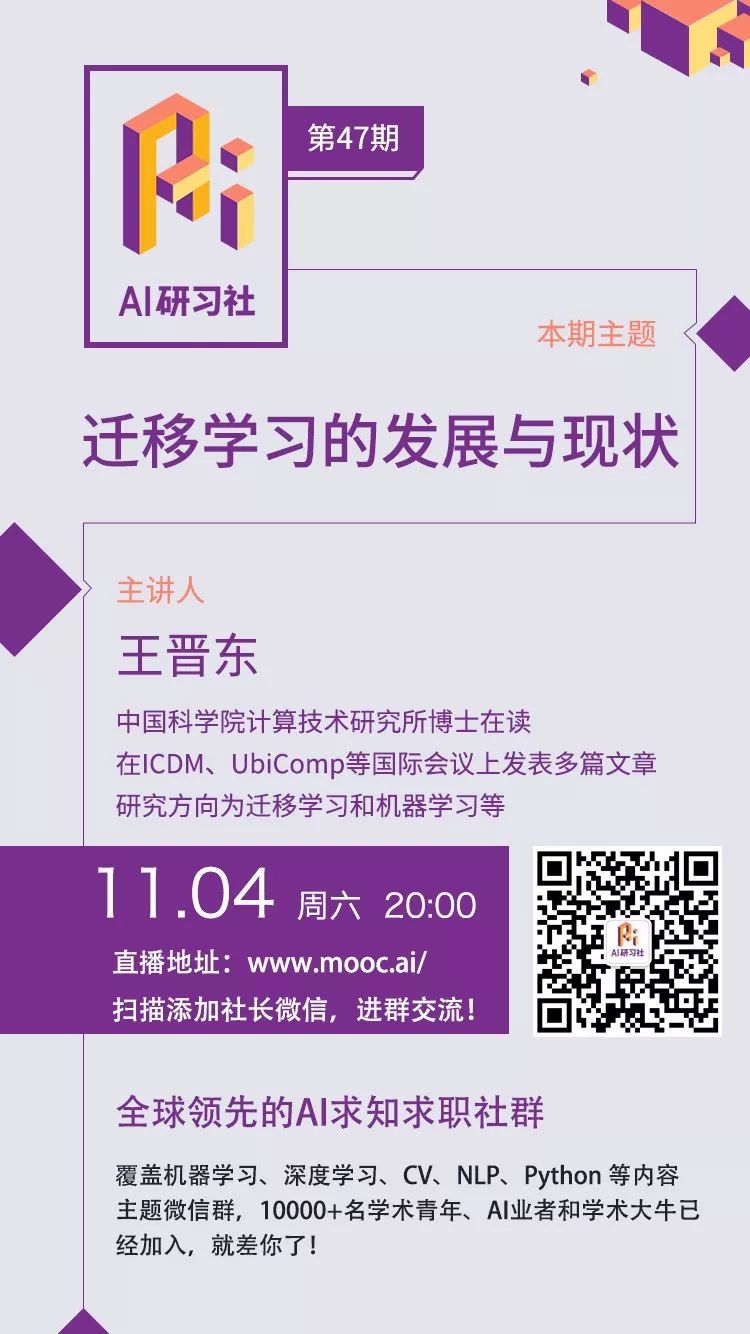

The Development and Current State of Transfer Learning

About the speaker

▼

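Wang Jindong (王晋东) is currently pursuing a PhD at the Institute of Computing Technology, Chinese Academy of Sciences, where his research focuses on transfer learning and machine learning. He has published multiple papers at leading international conferences such as ICDM and UbiComp. He is also an active machine learning contributor on knowledge-sharing communities such as Zhihu (Zhihu username: 王晋东不在家). In addition, he has started several machine learning resource repositories on GitHub and founded a machine learning group with participants from more than 120 universities and research institutes, reflecting his enthusiasm for sharing knowledge. Personal homepage: http://jd92.wang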

Time

▼

Saturday, November 4, 20:00 Beijing time

How to join

▼

Scan the QR code on the poster to add the community host on WeChat, and include the note "王晋东"

