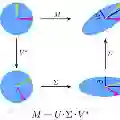

Large language models (LLMs) have demonstrated impressive capabilities in a wide range of downstream natural language processing tasks. Nevertheless, their considerable sizes and memory demands hinder practical deployment, underscoring the importance of developing efficient compression strategies. Singular value decomposition (SVD) decomposes a matrix into orthogonal components, enabling efficient low-rank approximation. This is particularly suitable for LLM compression, where weight matrices often exhibit significant redundancy. However, current SVD-based methods neglect the residual matrix from truncation, resulting in significant truncation loss. Additionally, compressing all layers of the model results in severe performance degradation. To overcome these limitations, we propose ResSVD, a new post-training SVD-based LLM compression method. Specifically, we leverage the residual matrix generated during the truncation process to reduce truncation loss. Moreover, under a fixed overall compression ratio, we selectively compress the last few layers of the model, which mitigates error propagation and significantly improves the performance of compressed models. Comprehensive evaluations of ResSVD on diverse LLM families and multiple benchmark datasets indicate that ResSVD consistently achieves superior performance over existing counterpart methods, demonstrating its practical effectiveness.

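To make the two ideas in the abstract concrete, here is a minimal NumPy sketch of rank truncation, the residual matrix it leaves behind, and the arithmetic of compressing only some layers under a fixed overall ratio. It is an illustration under stated assumptions, not the ResSVD algorithm: the abstract does not specify how the residual is used, so the two-step split (`r1`, `r2`), the helper names `truncated_svd` and `per_layer_ratio`, the random test matrix, and the definition of compression ratio as the fraction of parameters removed are all hypothetical choices made for this example.

```python
import numpy as np

def truncated_svd(W, rank):
    """Return factors (A, B) such that A @ B is the best rank-`rank` approximation of W."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    A = U[:, :rank] * s[:rank]   # scale each kept left singular vector by its singular value
    B = Vt[:rank, :]
    return A, B

def per_layer_ratio(overall, n_layers, n_compressed):
    """Ratio each compressed layer needs so the whole model reaches `overall`.

    Assumes equal-sized layers and ratio = fraction of parameters removed
    (an assumption of this sketch, not a definition taken from the paper).
    """
    return overall * n_layers / n_compressed

# Synthetic "weight matrix": approximately low-rank plus small noise (illustrative only).
rng = np.random.default_rng(0)
m, n = 64, 48
W = rng.standard_normal((m, 8)) @ rng.standard_normal((8, n))
W += 0.1 * rng.standard_normal((m, n))

# Step 1: plain truncation to rank r1 and the residual it discards.
r1, r2 = 6, 4
A1, B1 = truncated_svd(W, r1)
residual = W - A1 @ B1
loss_r1 = np.linalg.norm(residual)   # Frobenius norm of the truncation error

# Step 2: approximate the residual itself with a rank-r2 term and add it back.
A2, B2 = truncated_svd(residual, r2)
A = np.hstack([A1, A2])              # shape (m, r1 + r2)
B = np.vstack([B1, B2])              # shape (r1 + r2, n)
loss_total = np.linalg.norm(W - A @ B)

# Storage cost of the factorized form versus the dense matrix.
params_dense = m * n
params_lowrank = (r1 + r2) * (m + n)

print(f"loss after rank-{r1} truncation: {loss_r1:.4f}")
print(f"loss after adding rank-{r2} residual term: {loss_total:.4f}")
print(f"parameters: dense={params_dense}, low-rank={params_lowrank}")
print(f"per-layer ratio for 20% overall on 16 of 32 layers: {per_layer_ratio(0.2, 32, 16):.2f}")
```

Two caveats on reading this sketch. First, for a plain SVD the two-step construction is equivalent to a single rank-`(r1 + r2)` truncation, since the residual's leading singular components are the next singular components of `W`; a residual step can only add something beyond that when the first truncation is not the plain one used here. Second, `per_layer_ratio` shows the trade-off behind selective compression: leaving some layers untouched means the compressed layers must each be compressed more aggressively to meet the same overall budget.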