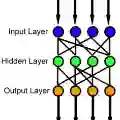

We derive approximation error bounds that hold simultaneously for a function and all of its derivatives up to any prescribed order. The bounds cover elementary functions, including multivariate polynomials, the exponential function, and the reciprocal function, and are achieved by feedforward neural networks with the Gaussian Error Linear Unit (GELU) activation. For each approximator we also report the network size, the weight magnitudes, and the behavior at infinity. The analysis begins with a constructive approximation of multiplication, for which we prove that a single approximator satisfies the error bounds simultaneously over domains of increasing size. Building on this result, we obtain approximation guarantees for division and the exponential function, and we ensure that all higher-order derivatives of the resulting approximators remain globally bounded.
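To make the multiplication step concrete, the following is a minimal sketch of one standard way to approximate a product with GELU units. It is an illustration under stated assumptions, not necessarily the construction used in the paper. It relies on two known facts: GELU(t) + GELU(-t) = t·erf(t/√2) ≈ √(2/π)·t² for small t, and the polarization identity xy = ((x+y)² − (x−y)²)/4. The names `approx_square`, `approx_product`, and the scale parameter `h` are hypothetical.

```python
import math


def gelu(x: float) -> float:
    """Exact GELU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


# Second derivative of GELU at zero: 2 * phi(0) = sqrt(2 / pi).
GELU_SECOND_DERIV_AT_ZERO = math.sqrt(2.0 / math.pi)


def approx_square(x: float, h: float = 1e-3) -> float:
    """Approximate x**2 with two GELU units (hypothetical helper).

    Uses the symmetric second difference around zero; GELU(0) = 0,
    so the usual "- 2 * gelu(0)" term vanishes. The leading error
    term is -(h**2) * x**4 / 6, so it grows with the domain size.
    """
    return (gelu(h * x) + gelu(-h * x)) / (h * h * GELU_SECOND_DERIV_AT_ZERO)


def approx_product(x: float, y: float, h: float = 1e-3) -> float:
    """Approximate x * y with four GELU units via polarization."""
    return 0.25 * (approx_square(x + y, h) - approx_square(x - y, h))


if __name__ == "__main__":
    for x, y in [(2.0, 3.0), (-1.5, 4.0), (0.1, 0.2)]:
        print(x, y, approx_product(x, y), x * y)
```

The input scale `h` trades accuracy on a fixed domain against weight magnitude: the outer weight scales like 1/h², which is why the network size and weight magnitudes are tracked alongside the error. This sketch checks function values only; it does not verify the derivative bounds or the behavior at infinity that the abstract describes.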
