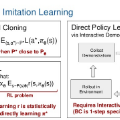

Active imitation learning (AIL) combats covariate shift by querying an expert during training. However, expert action labeling often dominates the cost, especially in GPU-intensive simulators, human-in-the-loop settings, and robot fleets that revisit near-duplicate states. We present Conformalized Rejection Sampling for Active Imitation Learning (CRSAIL), a querying rule that requests an expert action only when the visited state is under-represented in the expert-labeled dataset. CRSAIL scores state novelty by the distance to the $K$-th nearest expert state and sets a single global threshold via conformal prediction. This threshold is the empirical $(1-\alpha)$ quantile of on-policy calibration scores, providing a distribution-free calibration rule that links $\alpha$ to the expected query rate and makes $\alpha$ a task-agnostic tuning knob. This state-space querying strategy is robust to outliers and, unlike safety-gate-based AIL, can be run without real-time expert takeovers: we roll out full trajectories (episodes) with the learner and only afterward query the expert on a subset of visited states. Evaluated on MuJoCo robotics tasks, CRSAIL matches or exceeds expert-level reward while reducing total expert queries by up to 96% vs. DAgger and up to 65% vs. prior AIL methods, with empirical robustness to $\alpha$ and $K$, easing deployment on novel systems with unknown dynamics.
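The querying rule described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names (`kth_nn_distance`, `conformal_threshold`, `select_queries`), the Euclidean metric, the brute-force neighbor search, and the finite-sample rank $\lceil (n+1)(1-\alpha) \rceil$ (a common split-conformal convention) are all assumptions made for illustration.

```python
import numpy as np


def kth_nn_distance(states, expert_states, k):
    """Novelty score: distance from each state to its k-th nearest expert state.

    Assumes Euclidean distance and brute-force search (illustrative only).
    """
    diffs = states[:, None, :] - expert_states[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)  # shape (n_states, n_expert)
    # np.partition places the k-th smallest value at index k - 1 in each row.
    return np.partition(dists, k - 1, axis=1)[:, k - 1]


def conformal_threshold(calib_scores, alpha):
    """Empirical (1 - alpha) quantile of the calibration scores.

    Uses the rank ceil((n + 1) * (1 - alpha)), clipped to [1, n]; both the
    correction and the clipping are assumptions of this sketch.
    """
    scores = np.sort(np.asarray(calib_scores, dtype=float))
    n = scores.shape[0]
    rank = int(np.ceil((n + 1) * (1 - alpha)))
    rank = min(max(rank, 1), n)
    return scores[rank - 1]


def select_queries(visited_states, expert_states, threshold, k):
    """Boolean mask of visited states whose novelty score exceeds the threshold."""
    scores = kth_nn_distance(visited_states, expert_states, k)
    return scores > threshold


# Toy usage: four expert states on a unit square, K = 2.
expert = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
calib_scores = np.arange(1, 11, dtype=float)      # stand-in calibration scores
tau = conformal_threshold(calib_scores, alpha=0.2)
visited = np.array([[0.0, 0.0], [10.0, 10.0]])    # states from a finished rollout
mask = select_queries(visited, expert, tau, k=2)  # only the far state is flagged
```

In this sketch the mask is computed after the rollout has finished, mirroring the post-hoc querying described above. If the calibration scores are exchangeable with the scores of newly visited states, roughly an $\alpha$ fraction of those states would be expected to exceed the threshold.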
