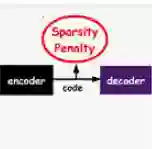

Optimal control and sequential decision making are central to many complex tasks. Optimal control over a sequence of natural images is a first step towards understanding the role of vision in control. Here, we formalize this problem as a reinforcement learning task and derive general conditions under which an image contains enough information to implement an optimal policy. We show that reinforcement learning provides a computationally efficient method for finding optimal policies when natural images are encoded into "efficient" image representations. We demonstrate this with a new reinforcement learning benchmark that scales easily to large numbers of states and long horizons. In particular, by representing each image as an overcomplete sparse code, we efficiently solve an optimal control task that is orders of magnitude larger than those solvable with complete codes, and we provide a theoretical justification for this behaviour. This work also demonstrates that deep learning is not necessary for efficient optimal control with natural images.
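The abstract does not say which sparse-coding algorithm or reinforcement learning method is used, so the following is only a minimal sketch of the general idea under stated assumptions: an image is encoded as an overcomplete sparse code (more dictionary atoms than pixels) and a linear Q-function is learned directly on that code, with no deep network. ISTA (iterative soft thresholding) with a random unit-norm dictionary and tabular-style linear Q-learning are swapped in for illustration; all function names, sizes, and parameters (`sparse_code`, `lam`, `alpha`, `gamma`, the 4-pixel "image") are hypothetical, not the authors' implementation.

```python
import math
import random


def make_dictionary(n_pixels, n_atoms, seed=0):
    """Hypothetical random dictionary of unit-norm atoms; overcomplete when n_atoms > n_pixels."""
    rng = random.Random(seed)
    atoms = []
    for _ in range(n_atoms):
        v = [rng.gauss(0.0, 1.0) for _ in range(n_pixels)]
        norm = math.sqrt(sum(c * c for c in v))
        atoms.append([c / norm for c in v])
    return atoms  # atoms[k][i] = pixel i of atom k


def reconstruct(D, a):
    """Compute D a, i.e. the weighted sum of atoms."""
    return [sum(a[k] * D[k][i] for k in range(len(D))) for i in range(len(D[0]))]


def lasso_objective(x, D, a, lam):
    """0.5 * ||x - D a||^2 + lam * ||a||_1."""
    r = [xi - yi for xi, yi in zip(x, reconstruct(D, a))]
    return 0.5 * sum(c * c for c in r) + lam * sum(abs(c) for c in a)


def soft_threshold(z, t):
    """Proximal operator of t * |z|: shrink z towards zero by t."""
    return math.copysign(max(abs(z) - t, 0.0), z)


def sparse_code(x, D, lam=0.1, n_iter=200):
    """ISTA: proximal gradient descent on the lasso objective above."""
    # With unit-norm atoms, ||D||_2^2 <= ||D||_F^2 = len(D), so this step size
    # satisfies step <= 1/L and each ISTA step cannot increase the objective.
    step = 1.0 / len(D)
    a = [0.0] * len(D)
    for _ in range(n_iter):
        r = [xi - yi for xi, yi in zip(x, reconstruct(D, a))]  # residual x - D a
        corr = [sum(D[k][i] * r[i] for i in range(len(x))) for k in range(len(D))]  # D^T r
        a = [soft_threshold(a[k] + step * corr[k], step * lam) for k in range(len(D))]
    return a


def q_values(W, phi):
    """Linear Q-function: one weight vector per action."""
    return [sum(w * f for w, f in zip(W[u], phi)) for u in range(len(W))]


def q_learning_update(W, phi, action, reward, phi_next, alpha=0.1, gamma=0.9, terminal=False):
    """One Q-learning step on features phi; updates W in place and returns the TD error."""
    target = reward if terminal else reward + gamma * max(q_values(W, phi_next))
    td_error = target - q_values(W, phi)[action]
    W[action] = [w + alpha * td_error * f for w, f in zip(W[action], phi)]
    return td_error


# Usage with hypothetical sizes: a 4-pixel "image", a 2x overcomplete code, 2 actions.
D = make_dictionary(n_pixels=4, n_atoms=8)
x = [0.9, -0.2, 0.4, 0.1]
phi = sparse_code(x, D, lam=0.1)
W = [[0.0] * len(D) for _ in range(2)]
td = q_learning_update(W, phi, action=0, reward=1.0, phi_next=phi, terminal=True)
print(sum(1 for c in phi if c == 0.0), "of", len(phi), "code entries are exactly zero")
```

The L1 penalty `lam` controls how sparse the code is; because the Q-function is linear in the code, each update only touches the weights of the few atoms that are active for the current image, which is one plausible reading of why overcomplete sparse codes can make large tasks tractable without deep learning.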
