System Performance Analysis: From Beginner to Advanced

I Basics

1 The resource perspective

USE

I find it solves about 80% of server issues with 5% of the effort.

-

Utilization (U): as a percent over a time interval, e.g., "one disk is running at 90% utilization". In most cases it is reasonable to assume that high utilization may hurt performance.

-

Saturation (S): as a queue length, e.g., "the CPUs have an average run queue length of four". It reflects how intense the contention for the resource is.

-

Errors (E): as scalar counts, e.g., "this network interface has had fifty late collisions". Errors are relatively straightforward to interpret.

CPU

-

Utilization. CPU utilization

-

Saturation. This can be the load average, run queue length, scheduling latency, etc.

top - 17:13:49 up 83 days, 23:10, 1 user, load average: 433.52, 422.54, 438.70
Tasks: 2765 total, 23 running, 1621 sleeping, 0 stopped, 34 zombie
%Cpu(s): 23.4 us, 9.5 sy, 0.0 ni, 65.5 id, 0.7 wa, 0.0 hi, 1.0 si, 0.0 st

-

us, sy, ni - CPU utilization of un-niced user, kernel, and niced user time respectively

-

id, wa - fraction of time idle and in I/O wait. I/O wait is essentially a form of idle; the difference is that there are tasks on that CPU waiting for I/O

-

hi, si - fraction of time in hardirq and softirq

-

st - time stolen from this VM by the hypervisor, e.g., because of overcommitment (todo: docker)
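top derives these percentages from cumulative tick counters on the aggregate `cpu` line of /proc/stat: take two snapshots, subtract, and divide each state's delta by the total delta. The sketch below assumes the first eight fields are user, nice, system, idle, iowait, irq, softirq, steal (guest time is already folded into user/nice, so it is left out to avoid double counting); the struct and function names are illustrative, not part of any tool.

```c
#include <stdio.h>
#include <string.h>

/* Field order of the aggregate "cpu" line in /proc/stat. */
enum { US, NI, SY, ID, WA, HI, SI, ST, NSTATES };

/* Cumulative tick counters (USER_HZ units) for one snapshot. */
struct cpu_ticks {
    unsigned long long t[NSTATES];
};

/* Parse the aggregate "cpu " line; per-CPU "cpuN" lines are rejected.
 * Returns 0 on success, -1 otherwise. */
int parse_cpu_line(const char *line, struct cpu_ticks *out)
{
    memset(out, 0, sizeof(*out));
    if (strncmp(line, "cpu ", 4) != 0)
        return -1;
    int n = sscanf(line + 4, "%llu %llu %llu %llu %llu %llu %llu %llu",
                   &out->t[US], &out->t[NI], &out->t[SY], &out->t[ID],
                   &out->t[WA], &out->t[HI], &out->t[SI], &out->t[ST]);
    return n == NSTATES ? 0 : -1;
}

/* Percentage of all ticks spent in `state` between snapshots a and b. */
double cpu_pct(const struct cpu_ticks *a, const struct cpu_ticks *b, int state)
{
    unsigned long long total = 0;
    for (int i = 0; i < NSTATES; i++)
        total += b->t[i] - a->t[i];
    if (total == 0)
        return 0.0;
    return 100.0 * (double)(b->t[state] - a->t[state]) / (double)total;
}
```

In practice you would read the first line of /proc/stat, sleep for the sampling interval, read it again, and call cpu_pct() for each state.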

load average: 433.52, 422.54, 438.70

-

run - corresponds to procs_running in /proc/stat, i.e., the number of runnable tasks

-

blk - corresponds to procs_blocked in /proc/stat, i.e., the number of tasks blocked on I/O

#dstat -tp
----system---- ---procs---
     time     |run blk new
07-03 17:56:50|204 1.0 202
07-03 17:56:51|212   0 238
07-03 17:56:52|346 1.0 266
07-03 17:56:53|279 5.0 262
07-03 17:56:54|435 7.0 177
07-03 17:56:55|442 3.0 251
07-03 17:56:56|792 8.0 419
07-03 17:56:57|504  16 152
07-03 17:56:58|547 3.0 156
07-03 17:56:59|606 2.0 212
07-03 17:57:00|770   0 186

Memory

-

Utilization. Memory utilization

-

Saturation. Here we mainly look at how efficient memory reclaim is

-

total - MemTotal for the Mem row (SwapTotal for the Swap row). MemTotal is usually slightly smaller than the actual physical memory

-

free - unused memory. Linux tends to cache as many pages as it can to improve performance, so free alone cannot tell you whether memory is running short

-

buff/cache - system caches; there is usually no need to strictly distinguish buffers from cache

-

available - an estimate of the physical memory still available

-

used - 等于total - free - buffers - cache

-

Swap - not configured on this machine

#free -g
              total        used        free      shared  buff/cache   available
Mem:            503         193           7           2         301         301
Swap:             0           0           0

For more detail, read /proc/meminfo directly:

#cat /proc/meminfo
MemTotal:       527624224 kB
MemFree:          8177852 kB
MemAvailable:   316023388 kB
Buffers:         23920716 kB
Cached:         275403332 kB
SwapCached:             0 kB
Active:          59079772 kB
Inactive:       431064908 kB
Active(anon):     1593580 kB
Inactive(anon): 191649352 kB
Active(file):    57486192 kB
Inactive(file): 239415556 kB
Unevictable:       249700 kB
Mlocked:           249700 kB
SwapTotal:              0 kB
SwapFree:               0 kB
[...]
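The used column of free can be recomputed from /proc/meminfo using the formula given above, used = total - free - buffers - cache. This is a minimal sketch that assumes, as the procps-ng free(1) man page describes, that "cache" means Cached + SReclaimable; the helper names are illustrative. All values stay in kB, as the kernel reports them.

```c
#include <stdio.h>
#include <string.h>

/* Return the value (kB) of `key` from /proc/meminfo-formatted text, or -1. */
long long meminfo_get(const char *text, const char *key)
{
    size_t klen = strlen(key);
    const char *p = text;
    while (p && *p) {
        /* Match "Key:" only at the start of a line (so "Cached" != "SwapCached"). */
        if (strncmp(p, key, klen) == 0 && p[klen] == ':') {
            long long v;
            return sscanf(p + klen + 1, "%lld", &v) == 1 ? v : -1;
        }
        p = strchr(p, '\n');
        if (p)
            p++;
    }
    return -1;
}

/* used = MemTotal - MemFree - Buffers - (Cached + SReclaimable), in kB. */
long long meminfo_used_kb(const char *text)
{
    const char *keys[] = { "MemTotal", "MemFree", "Buffers", "Cached", "SReclaimable" };
    long long v[5];
    for (int i = 0; i < 5; i++) {
        v[i] = meminfo_get(text, keys[i]);
        if (v[i] < 0)
            return -1;  /* missing field */
    }
    return v[0] - v[1] - v[2] - (v[3] + v[4]);
}
```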

-

pgscank/pgscand - pages scanned per second by kswapd and by direct reclaim, respectively

-

pgsteal - pages reclaimed per second

-

%vmeff - pgsteal/(pgscank+pgscand), expressed as a percentage

#sar -B 1

11:00:16 AM  pgscank/s pgscand/s pgsteal/s    %vmeff
11:00:17 AM       0.00      0.00   3591.00      0.00
11:00:18 AM       0.00      0.00  10313.00      0.00
11:00:19 AM       0.00      0.00   8452.00      0.00
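For reference, a minimal sketch of the %vmeff arithmetic. It assumes sar reports 0 when nothing was scanned, which is consistent with the output above (nonzero pgsteal/s, zero scan rates, %vmeff 0.00); the function name is illustrative.

```c
/* %vmeff: pages reclaimed per page scanned, as a percentage.
 * All arguments are per-second rates over the same interval.
 * Returns 0.0 when nothing was scanned, consistent with the sar output above. */
double vmeff_pct(double pgsteal, double pgscank, double pgscand)
{
    double scanned = pgscank + pgscand;
    if (scanned == 0.0)
        return 0.0;
    return 100.0 * pgsteal / scanned;
}
```

A value near 100% means almost every scanned page could be reclaimed; a low value means reclaim is working hard for little gain. Pages being stolen with nothing scanned, as above, is itself a hint that the counters do not tell the whole story.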

I/O

-

Utilization. Storage device utilization: the fraction of time the device spends handling I/O requests

-

Saturation. Queue length

-

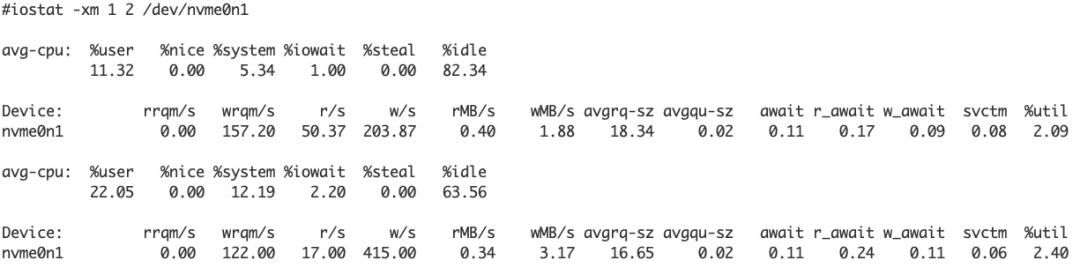

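%util - utilization. Note that even at 100% util the device may still have performance headroom, especially since modern SSDs process requests concurrently internally. As an analogy, a hotel with 10 rooms shows 100% util as long as one room is occupied every day.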

-

svctm - removed in newer versions of iostat

-

await/r_await/w_await - I/O latency, including time spent queueing

-

avgrq-sz - average request size; request service time depends on size, though not necessarily linearly

-

avgqu-sz - average queue size (average number of outstanding requests), useful for telling whether requests are backing up

-

rMB/s, wMB/s, r/s, w/s - self-explanatory throughput and IOPS
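These columns are derived from deltas of the cumulative counters in /proc/diskstats between two snapshots taken `interval_ms` apart. The sketch below uses the commonly documented formulas (field meanings as in the kernel's iostats documentation); the struct and field names are illustrative, and newer sysstat versions may differ in details such as discard and flush accounting.

```c
/* Deltas of /proc/diskstats counters between two snapshots. */
struct disk_delta {
    unsigned long long rd_ios, wr_ios;         /* reads/writes completed */
    unsigned long long rd_sectors, wr_sectors; /* 512-byte sectors transferred */
    unsigned long long rd_ticks, wr_ticks;     /* ms spent on reads/writes */
    unsigned long long io_ticks;               /* ms with at least one I/O in flight */
    unsigned long long time_in_queue;          /* weighted ms: summed over in-flight I/Os */
};

struct disk_stats {
    double util_pct;  /* %util */
    double await_ms;  /* await, including queueing time */
    double avgqu_sz;  /* average number of in-flight requests */
    double avgrq_sz;  /* average request size, in sectors */
};

struct disk_stats disk_compute(const struct disk_delta *d, double interval_ms)
{
    struct disk_stats s = {0};
    unsigned long long ios = d->rd_ios + d->wr_ios;

    /* util only asks "was anything in flight?", not "how many?" */
    s.util_pct = 100.0 * (double)d->io_ticks / interval_ms;
    s.avgqu_sz = (double)d->time_in_queue / interval_ms;
    if (ios > 0) {
        s.await_ms = (double)(d->rd_ticks + d->wr_ticks) / (double)ios;
        s.avgrq_sz = (double)(d->rd_sectors + d->wr_sectors) / (double)ios;
    }
    return s;
}
```

The hotel analogy corresponds to util_pct = 100 with avgqu_sz = 1: the device is never idle, yet a device with internal parallelism could serve several such requests at once.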

Resource granularity

Memory shows a similar picture. Run numastat -m:

                          Node 0          Node 1          Node 2          Node 3
                 --------------- --------------- --------------- ---------------
MemTotal                31511.92        32255.18        32255.18        32255.18
MemFree                  2738.79          131.89          806.50        10352.02
MemUsed                 28773.12        32123.29        31448.69        21903.16
Active                   7580.58          419.80         9597.45         5780.64
Inactive                17081.27        26844.28        19806.99        13504.79
Active(anon)                6.63            0.93            2.08            5.64
Inactive(anon)          12635.75        25560.53        12754.29         9053.80
Active(file)             7573.95          418.87         9595.37         5775.00
Inactive(file)           4445.52         1283.75         7052.70         4450.98

mkdir /sys/fs/cgroup/cpuset/overloaded
echo 0-1 > /sys/fs/cgroup/cpuset/overloaded/cpuset.cpus
echo 0 > /sys/fs/cgroup/cpuset/overloaded/cpuset.mems
echo $$ > /sys/fs/cgroup/cpuset/overloaded/tasks
for i in {0..1023}; do /tmp/busy & done

#uptime
 14:10:54 up 6 days, 18:52, 10 users, load average: 920.92, 411.61, 166.95

2 The application perspective

-

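What matters is how much of the resources the application can actually use, not how much the system provides, and there can be a gap between the two. "The system" is a fuzzy notion, while the application itself is relatively concrete. Take the cpuset above as an example: the physical machine is a system, and the resources managed by the cpuset can also be called a system, but whether the application runs inside or outside the cpuset is well defined.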

-

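The application's demand for resources: however many resources the system has, performance will not improve if the application cannot use them. In other words, the system may be fine and the problem lies in the application itself.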

#pidstat -p `pgrep myserv` -t 1
15:47:05      UID      TGID       TID    %usr %system  %guest    %CPU   CPU  Command
15:47:06        0     71942         -  415.00    0.00    0.00  415.00    22  myserv
15:47:06        0         -     71942    0.00    0.00    0.00    0.00    22  |__myserv
...
15:47:06        0         -     72079    7.00   94.00    0.00  101.00    21  |__myserv
15:47:06        0         -     72080   10.00   90.00    0.00  100.00    19  |__myserv
15:47:06        0         -     72081    9.00   91.00    0.00  100.00    35  |__myserv
15:47:06        0         -     72082    5.00   95.00    0.00  100.00    29  |__myserv
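One concrete way to see the gap between what the system has and what the application can use is to compare the number of online CPUs with the CPUs in the process's affinity mask, which a cpuset restricts. A minimal Linux-specific sketch using sysconf(3) and sched_getaffinity(2); it assumes at most 1024 CPUs (the size of a static cpu_set_t) and ignores CFS bandwidth limits, which can cap CPU usage further.

```c
#define _GNU_SOURCE
#include <sched.h>
#include <unistd.h>

/* CPUs the kernel currently has online: what "the system" provides. */
long online_cpus(void)
{
    return sysconf(_SC_NPROCESSORS_ONLN);
}

/* CPUs this process may actually run on (restricted by cpuset/taskset).
 * Returns -1 on error, e.g. on machines with more than 1024 CPUs. */
int usable_cpus(void)
{
    cpu_set_t set;
    CPU_ZERO(&set);
    if (sched_getaffinity(0, sizeof(set), &set) != 0)
        return -1;
    return CPU_COUNT(&set);
}
```

Inside the overloaded cpuset from the experiment above, one would expect usable_cpus() to report 2 while online_cpus() still reports every CPU on the machine.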

3 Common commands

Basic commands

-

top - offers interactive and batch modes; run it without arguments for interactive mode and press h to see the available functions

-

ps - offers many options for viewing the state of tasks in the system, such as ps aux or ps -eLf; look up other options in the manual when needed

-

free - memory information

-

iostat - I/O performance

-

pidstat - per-process information, introduced above

-

mpstat - per-CPU utilization, softirq and hardirq counts, etc.

-

vmstat - virtual memory and various other system statistics

-

netstat - networking

-

dstat - CPU, disk, memory, network, and other statistics; among these stat commands, use whichever is most convenient

-

htop - introduced above

-

irqstat - convenient for observing interrupts

-

sar/tsar/ssar - collect and view historical system statistics; they also offer a real-time mode

largest=70

while :; do
    mem=$(ps -p `pidof mysqld` -o %mem | tail -1)
    imem=$(printf %.0f $mem)
    if [ $imem -gt $largest ]; then
        echo 'p malloc_stats_print(0,0,0)' | gdb --quiet -nx -p `pidof mysqld`
    fi
    sleep 10
done

perf

-

Find program hotspots by sampling

-

Use the hardware PMU to dig into the root cause of problems, especially when tuning for hardware-level optimizations

#perf list | grep Hardware
  branch-misses                                      [Hardware event]
  bus-cycles                                         [Hardware event]
  cache-misses                                       [Hardware event]
  cache-references                                   [Hardware event]
  cpu-cycles OR cycles                               [Hardware event]
  instructions                                       [Hardware event]
  L1-dcache-load-misses                              [Hardware cache event]
  L1-dcache-loads                                    [Hardware cache event]
  L1-dcache-store-misses                             [Hardware cache event]
  L1-dcache-stores                                   [Hardware cache event]
  L1-icache-load-misses                              [Hardware cache event]
  L1-icache-loads                                    [Hardware cache event]
  branch-load-misses                                 [Hardware cache event]
  branch-loads                                       [Hardware cache event]
  dTLB-load-misses                                   [Hardware cache event]
  iTLB-load-misses                                   [Hardware cache event]
  mem:<addr>[/len][:access]                          [Hardware breakpoint]

-

Use -e to specify one or more events of interest

-

Specify the sampling scope: per process (-p), per thread (-t), per CPU (-C), or system-wide (-a)

#perf stat -p 31925 sleep 1

Performance counter stats for process id '31925':

       2184.986720      task-clock (msec)         #    2.180 CPUs utilized
             3,210      context-switches          #    0.001 M/sec
               345      cpu-migrations            #    0.158 K/sec
                 0      page-faults               #    0.000 K/sec
     4,311,798,055      cycles                    #    1.973 GHz
   <not supported>      stalled-cycles-frontend
   <not supported>      stalled-cycles-backend
       409,465,681      instructions              #    0.09  insns per cycle
   <not supported>      branches
         8,680,257      branch-misses             #    0.00% of all branches

1.002506001 seconds time elapsed
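The annotations after `#` are simple ratios of the raw counters. A small sketch that reproduces them, assuming task-clock is in milliseconds and elapsed time in seconds as printed above; the function names are illustrative.

```c
/* "CPUs utilized": CPU time consumed divided by wall-clock time. */
double cpus_utilized(double task_clock_ms, double elapsed_s)
{
    return task_clock_ms / (elapsed_s * 1000.0);
}

/* Instructions per cycle (IPC); its inverse is cycles per instruction (CPI). */
double insns_per_cycle(double instructions, double cycles)
{
    return cycles > 0 ? instructions / cycles : 0.0;
}

/* Effective frequency in GHz: cycles per nanosecond of task-clock. */
double effective_ghz(double cycles, double task_clock_ms)
{
    return cycles / (task_clock_ms * 1e6);
}
```

Plugging in the numbers above gives about 2.18 CPUs, 0.095 IPC, and 1.97 GHz. An IPC below 0.1 means the threads retire fewer than one instruction every ten cycles, which is worth investigating on its own.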

#include <stddef.h>
#include <sys/time.h>

/* Spin on gettimeofday() for roughly `us` microseconds of wall-clock time. */
void busy(long us)
{
    struct timeval tv1, tv2;
    long delta = 0;

    gettimeofday(&tv1, NULL);
    do {
        gettimeofday(&tv2, NULL);
        delta = (tv2.tv_sec - tv1.tv_sec) * 1000000 + tv2.tv_usec - tv1.tv_usec;
    } while (delta < us);
}

void A() { busy(2000); }  /* ~2 ms */
void B() { busy(8000); }  /* ~8 ms */

int main()
{
    while (1) {
        A();
        B();
    }
    return 0;
}

Samples: 27K of event 'cycles', Event count (approx.): 14381317911
  Children      Self  Command  Shared Object      Symbol
+   99.99%     0.00%  a.out    [unknown]          [.] 0x0000fffffb925137
+   99.99%     0.00%  a.out    a.out              [.] _start
+   99.99%     0.00%  a.out    libc-2.17.so       [.] __libc_start_main
+   99.99%     0.00%  a.out    a.out              [.] main
+   99.06%    25.95%  a.out    a.out              [.] busy
+   79.98%     0.00%  a.out    a.out              [.] B
-   71.31%    71.31%  a.out    [vdso]             [.] __kernel_gettimeofday
     __kernel_gettimeofday
     - busy
        + 79.84% B
        + 20.16% A
+   20.01%     0.00%  a.out    a.out              [.] A
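Because A() and B() spin for 2 ms and 8 ms respectively, the expected split of time below main() is simple arithmetic, and both the Children column (B 79.98%, A 20.01%) and the callers of __kernel_gettimeofday (B 79.84%, A 20.16%) match it closely:

```latex
\frac{T_B}{T_A + T_B} = \frac{8000}{2000 + 8000} = 0.8,
\qquad
\frac{T_A}{T_A + T_B} = \frac{2000}{2000 + 8000} = 0.2
```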

strace

#strace -v perf record -g -e cycles ./a.out
perf_event_open({type=PERF_TYPE_HARDWARE, size=PERF_ATTR_SIZE_VER5, config=PERF_COUNT_HW_CPU_CYCLES, sample_freq=4000, sample_type=PERF_SAMPLE_IP|PERF_SAMPLE_TID|PERF_SAMPLE_TIME|PERF_SAMPLE_CALLCHAIN|PERF_SAMPLE_PERIOD, read_format=0, disabled=1, inherit=1, pinned=0, exclusive=0, exclusive_user=0, exclude_kernel=0, exclude_hv=0, exclude_idle=0, mmap=1, comm=1, freq=1, inherit_stat=0, enable_on_exec=1, task=1, watermark=0, precise_ip=0 /* arbitrary skid */, mmap_data=0, sample_id_all=1, exclude_host=0, exclude_guest=1, exclude_callchain_kernel=0, exclude_callchain_user=0, mmap2=1, comm_exec=1, use_clockid=0, context_switch=0, write_backward=0, namespaces=0, wakeup_events=0, config1=0, config2=0, sample_regs_user=0, sample_regs_intr=0, aux_watermark=0, sample_max_stack=0}, 51876, 25, -1, PERF_FLAG_FD_CLOEXEC) = 30
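The strace output shows that perf is built on the perf_event_open(2) system call. Below is a minimal counting (not sampling) sketch modeled on the example in the perf_event_open(2) man page; glibc provides no wrapper, hence syscall(2). It assumes hardware counters are accessible: many VMs and containers do not expose them, and perf_event_paranoid may block access, in which case the function returns -1. The function names are illustrative.

```c
#define _GNU_SOURCE
#include <linux/perf_event.h>
#include <string.h>
#include <sys/ioctl.h>
#include <sys/syscall.h>
#include <sys/types.h>
#include <unistd.h>

/* glibc has no wrapper for perf_event_open, so invoke it directly. */
static int perf_event_open(struct perf_event_attr *attr, pid_t pid, int cpu,
                           int group_fd, unsigned long flags)
{
    return (int)syscall(SYS_perf_event_open, attr, pid, cpu, group_fd, flags);
}

/* Count user-space instructions retired while spinning `iters` times.
 * Returns -1 if the counter cannot be opened or read. */
long long count_loop_instructions(long iters)
{
    struct perf_event_attr attr;
    memset(&attr, 0, sizeof(attr));
    attr.type = PERF_TYPE_HARDWARE;
    attr.size = sizeof(attr);
    attr.config = PERF_COUNT_HW_INSTRUCTIONS;
    attr.disabled = 1;       /* start stopped; enabled explicitly below */
    attr.exclude_kernel = 1; /* user space only, allowed at stricter paranoid levels */
    attr.exclude_hv = 1;

    /* pid = 0, cpu = -1: this thread, on whichever CPU it runs. */
    int fd = perf_event_open(&attr, 0, -1, -1, PERF_FLAG_FD_CLOEXEC);
    if (fd == -1)
        return -1;

    ioctl(fd, PERF_EVENT_IOC_RESET, 0);
    ioctl(fd, PERF_EVENT_IOC_ENABLE, 0);
    for (volatile long i = 0; i < iters; i++) {
        /* workload being measured */
    }
    ioctl(fd, PERF_EVENT_IOC_DISABLE, 0);

    long long count;
    if (read(fd, &count, sizeof(count)) != (ssize_t)sizeof(count))
        count = -1;
    close(fd);
    return count;
}
```

perf record differs mainly in setting sample_freq/sample_type and reading samples from an mmap ring buffer instead of a single read().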

blktrace

-

blktrace: collection

-

blkparse: processing

-

btt: a powerful analysis tool

-

btrace: a simple wrapper around blktrace/blkparse, equivalent to blktrace -d /dev/sda -o - | blkparse -i -

-

Timestamp, one of the key pieces of information for performance analysis

-

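event, column 6, corresponds to key points on the I/O path; see the relevant manual or the source code for the exact mapping. Understanding these points is an essential skill for debugging I/O performance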

-

I/O sector. The starting sector and size of the I/O request

sudo btrace /dev/sda
  8,0    0        1     0.000000000  1024  A  WS 302266328 + 8 <- (8,5) 79435736
  8,0    0        2     0.000001654  1024  Q  WS 302266328 + 8 [jbd2/sda5-8]
  8,0    0        3     0.000010042  1024  G  WS 302266328 + 8 [jbd2/sda5-8]
  8,0    0        4     0.000011605  1024  P   N [jbd2/sda5-8]
  8,0    0        5     0.000014993  1024  I  WS 302266328 + 8 [jbd2/sda5-8]
  8,0    0        0     0.000018026     0  m   N cfq1024SN / insert_request
  8,0    0        0     0.000019598     0  m   N cfq1024SN / add_to_rr
  8,0    0        6     0.000022546  1024  U   N [jbd2/sda5-8] 1

$ sudo blktrace -d /dev/sdb -w 5
$ blkparse sdb -d sdb.bin
$ btt -i sdb.bin
==================== All Devices ====================

            ALL           MIN           AVG           MAX           N
--------------- ------------- ------------- ------------- -----------

Q2Q               0.000000001   0.000014397   0.008275391      347303
Q2G               0.000000499   0.000071615   0.010518692      347298
S2G               0.000128160   0.002107990   0.010517875       11512
G2I               0.000000600   0.000001570   0.000040010      347298
I2D               0.000000395   0.000000929   0.000003743      347298
D2C               0.000116199   0.000144157   0.008443855      347288
Q2C               0.000118211   0.000218273   0.010678657      347288

==================== Device Overhead ====================

       DEV |       Q2G       G2I       Q2M       I2D       D2C
---------- | --------- --------- --------- --------- ---------
 (  8, 16) |  32.8106%   0.7191%   0.0000%   0.4256%  66.0447%
---------- | --------- --------- --------- --------- ---------
   Overall |  32.8106%   0.7191%   0.0000%   0.4256%  66.0447%
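The Device Overhead percentages can be reproduced from the All Devices table: weight each phase's average latency by its count N and divide by the Q2C total. This is a sketch of that arithmetic under the assumption that btt uses total (not average) times; with the numbers above it gives about 32.81% for Q2G and 66.04% for D2C, matching the table to within rounding of the printed averages. The function name is illustrative.

```c
/* Share of total Q2C time spent in one phase (Q2G, G2I, I2D, D2C, ...),
 * weighting each average latency by its sample count N. */
double phase_overhead_pct(double phase_avg, unsigned long phase_n,
                          double q2c_avg, unsigned long q2c_n)
{
    double q2c_total = q2c_avg * (double)q2c_n;
    if (q2c_total <= 0.0)
        return 0.0;
    return 100.0 * phase_avg * (double)phase_n / q2c_total;
}
```

Read this way, about two thirds of each request's life is spent in the driver and device (D2C), and roughly a third is spent before a request is even allocated (Q2G, which includes the S2G sleeps).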

II Advanced

1 University textbook

-

Part I: AN OVERVIEW OF PERFORMANCE EVALUATION

-

Part II: MEASUREMENT TECHNIQUES AND TOOLS

-

Part III: PROBABILITY THEORY AND STATISTICS

-

Part IV: EXPERIMENTAL DESIGN AND ANALYSIS

-

Part V: SIMULATION

-

Part VI: QUEUEING MODELS

2 Technical blogs

-

Reference [2] at the end is worth reading through when you have time. Broadly, it covers three areas:

-

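Performance analysis methodologies. Representative work: the USE method

-

Performance data collection. Representative work: the "big picture" tools diagrams

-

Performance data visualization. Representative work: flame graphs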

-

Link [3] at the end

-

Link [4] at the end

-

Link [5] at the end

3 Knowledge areas

Operating system

-

ECS performs better when bound to socket 0

-

MySQL performs better when bound to socket 1

-

At boot, physical memory is added to the buddy system socket 0 first, then socket 1

-

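Memory on socket 1 is therefore handed out first, so MySQL's memory ends up on socket 1. Machines in that particular cluster do not run other processes arbitrarily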

-

On the ECS host, the hugepages to be allocated exceed all of socket 1's memory, so the hugepages allocated later land on socket 0

-

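Hugepages are allocated last-in, first-out, so the first hugepages ECS receives come from socket 0. Because the machine's resources were not fully used, the memory of the few test ECS instances landed entirely on socket 0, which is why binding the ECS processes to socket 0 performed better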

Hardware knowledge

-

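On a new platform, many of an application's long-standing assumptions break and need to be re-adapted; otherwise performance may fall short of expectations. For example, on Intel, enabling or disabling NUMA makes little performance difference, but on other platforms it may be a different story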

-

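For a new platform to replace an old one, their performance has to be compared. Because platforms differ greatly and in many respects, benchmarks such as SPEC CPU can reflect overall compute performance only to a degree; in many cases each scenario still has to be tuned separately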

-

A new platform may also have bugs or undocumented features, and we need to work out how to deal with them

Data analysis

-

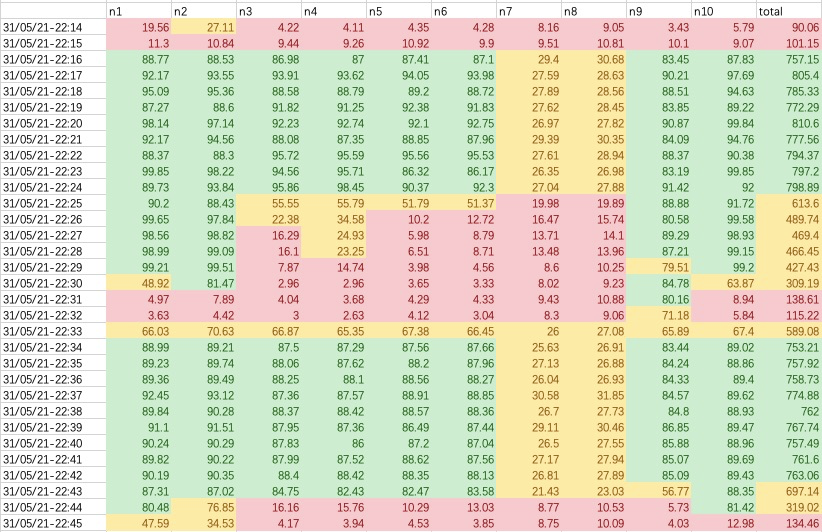

Data extraction. Use tools and scripting languages such as awk, sed, and perl to extract the data you need

-

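Data abstraction. Process the data from different angles and identify anomalies, e.g., how a single machine versus the whole cluster behaves, and which values to compute statistics for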

-

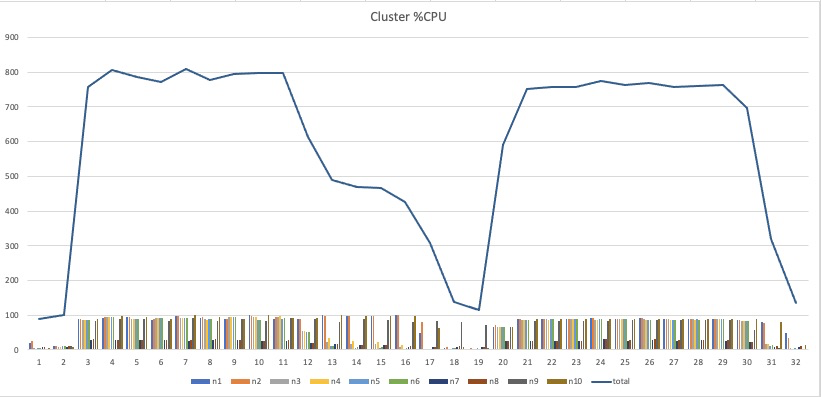

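Visualization. Visualization is a crucial data-processing skill: a picture is worth a thousand words, and flame graphs are the best example. Common plotting tools include gnuplot and Excel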

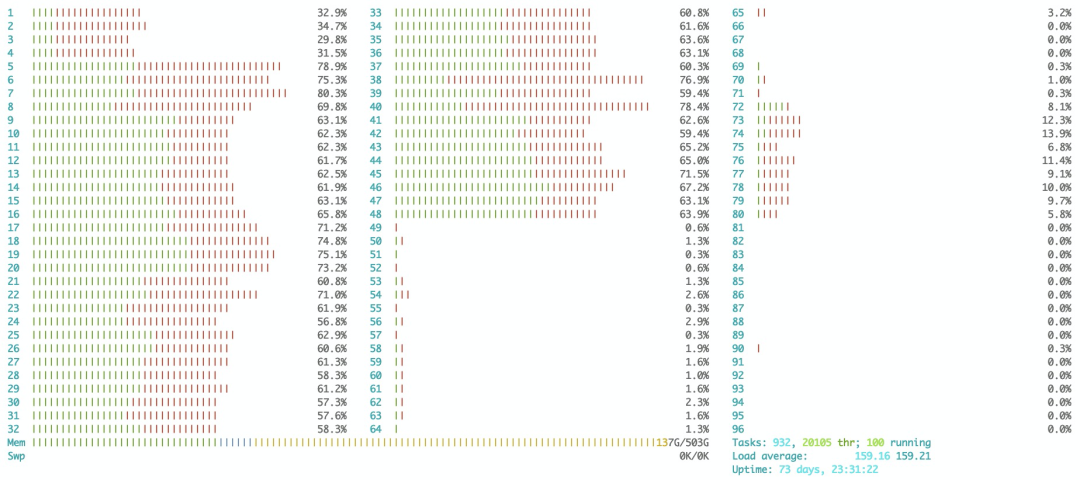

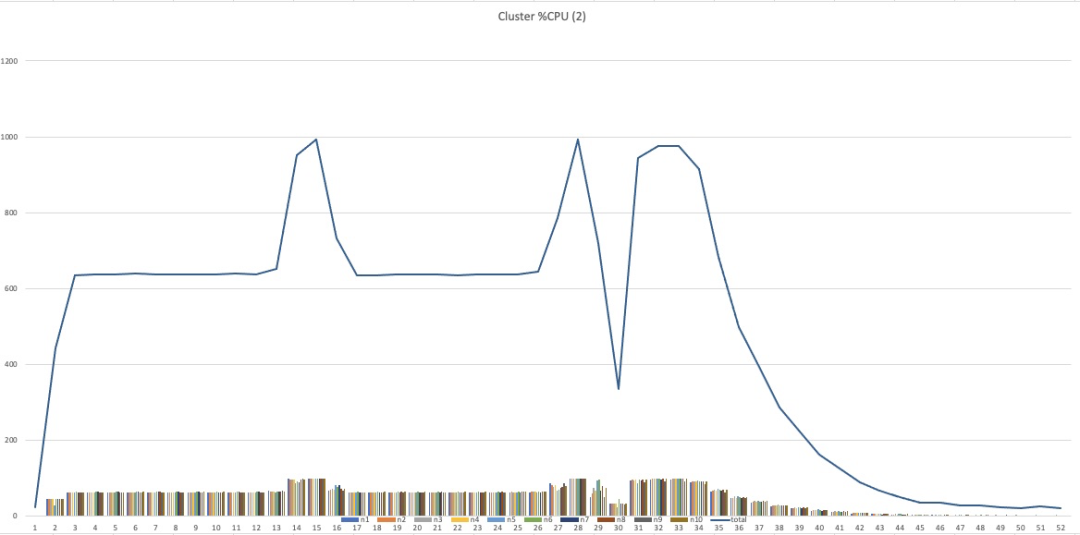

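Shown a different way, the pattern becomes even clearer, and it is easy to see how each phase behaves. For example, CPU utilization during the normal Map and Reduce phases is only 80%; does that match expectations? Also, the system is visibly idle when switching between Map and Reduce; could this be a potential optimization point?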

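With a baseline to compare against, the differences in behavior are visible at a glance; in particular, the huge long tail suggests room for further optimization.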

Benchmarking

lat_mem_rd -P 1 -N 1 10240 512

-

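Be cautious about other people's data until trust is established. First, you may not yet understand the area well enough yourself; second, a test report needs to provide enough information for others to judge it.

-

Trust your own data. You must trust yourself, but you choose to trust your own data because you have analyzed it thoroughly and soundly.

-

Trust other people's data. Once a chain of trust is established and you understand the area well enough, choose to trust it.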

4 More tools

ftrace

-

Who calls me. This only requires capturing the stack when the function executes, which many tools can do

-

Whom do I call. This is a fairly unique capability of ftrace

trace-cmd record -p function --func-stack -l generic_make_request dd if=/dev/zero of=file bs=4k count=1 oflag=direct

#trace-cmd report
cpus=128
              dd-11344 [104] 4148325.319997: function:             generic_make_request
              dd-11344 [104] 4148325.320002: kernel_stack:         <stack trace>
=> ftrace_graph_call (ffff00000809849c)
=> generic_make_request (ffff000008445b80)
=> submit_bio (ffff000008445f00)
=> __blockdev_direct_IO (ffff00000835a0a8)
=> ext4_direct_IO_write (ffff000001615ff8)
=> ext4_direct_IO (ffff0000016164c4)
=> generic_file_direct_write (ffff00000825c4e0)
=> __generic_file_write_iter (ffff00000825c684)
=> ext4_file_write_iter (ffff0000016013b8)
=> __vfs_write (ffff00000830c308)
=> vfs_write (ffff00000830c564)
=> ksys_write (ffff00000830c884)
=> __arm64_sys_write (ffff00000830c918)
=> el0_svc_common (ffff000008095f38)
=> el0_svc_handler (ffff0000080960b0)
=> el0_svc (ffff000008084088)
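trace-cmd is a front end to the tracefs control files. A rough sketch of roughly what `-p function --func-stack -l generic_make_request` sets up, written as plain file writes; it assumes tracefs is mounted at a directory you pass in (commonly /sys/kernel/tracing, or /sys/kernel/debug/tracing on older systems), requires root, and the helper names are illustrative. The filter is written before func_stack_trace is enabled so that stacks are only recorded for the filtered function.

```c
#include <stdio.h>

/* Write `val` to `<dir>/<file>`; returns 0 on success, -1 on failure. */
static int tracefs_write(const char *dir, const char *file, const char *val)
{
    char path[512];
    snprintf(path, sizeof(path), "%s/%s", dir, file);
    FILE *f = fopen(path, "w");
    if (!f)
        return -1;
    int ok = fputs(val, f) >= 0;
    if (fclose(f) != 0)  /* tracefs may reject the value only at flush time */
        ok = 0;
    return ok ? 0 : -1;
}

/* Trace `func` with the function tracer and record a kernel stack per hit.
 * Results can then be read from <dir>/trace. */
int setup_func_stack_trace(const char *dir, const char *func)
{
    if (tracefs_write(dir, "set_ftrace_filter", func) ||         /* like -l */
        tracefs_write(dir, "current_tracer", "function") ||      /* like -p function */
        tracefs_write(dir, "options/func_stack_trace", "1") ||   /* like --func-stack */
        tracefs_write(dir, "tracing_on", "1"))
        return -1;
    return 0;
}
```

Note that generic_make_request was renamed submit_bio_noacct in Linux 5.9, so on newer kernels the filter has to name that function instead.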

$ sudo trace-cmd record -p function_graph -g generic_make_request dd if=/dev/zero of=file bs=4k count=1 oflag=direct

This captures the entire call flow (the report output has been lightly tidied up):

$ trace-cmd report
  dd-22961             | generic_make_request() {
  dd-22961             |   generic_make_request_checks() {
  dd-22961   0.080 us  |     _cond_resched();
  dd-22961             |     create_task_io_context() {
  dd-22961   0.485 us  |       kmem_cache_alloc_node();
  dd-22961   0.042 us  |       _raw_spin_lock();
  dd-22961   0.039 us  |       _raw_spin_unlock();
  dd-22961   1.820 us  |     }
  dd-22961             |     blk_throtl_bio() {
  dd-22961   0.302 us  |       throtl_update_dispatch_stats();
  dd-22961   1.748 us  |     }
  dd-22961   6.110 us  |   }
  dd-22961             |   blk_queue_bio() {
  dd-22961   0.491 us  |     blk_queue_split();
  dd-22961   0.299 us  |     blk_queue_bounce();
  dd-22961   0.200 us  |     bio_integrity_enabled();
  dd-22961   0.183 us  |     blk_attempt_plug_merge();
  dd-22961   0.042 us  |     _raw_spin_lock_irq();
  dd-22961             |     elv_merge() {
  dd-22961   0.176 us  |       elv_rqhash_find.isra.9();
  dd-22961             |       deadline_merge() {
  dd-22961   0.108 us  |         elv_rb_find();
  dd-22961   0.852 us  |       }
  dd-22961   2.229 us  |     }
  dd-22961             |     get_request() {
  dd-22961   0.130 us  |       elv_may_queue();
  dd-22961             |       mempool_alloc() {
  dd-22961   0.040 us  |         _cond_resched();
  dd-22961             |         mempool_alloc_slab() {
  dd-22961   0.395 us  |           kmem_cache_alloc();
  dd-22961   0.744 us  |         }
  dd-22961   1.650 us  |       }
  dd-22961   0.334 us  |       blk_rq_init();
  dd-22961   0.055 us  |       elv_set_request();
  dd-22961   4.565 us  |     }
  dd-22961             |     init_request_from_bio() {
  dd-22961             |       blk_rq_bio_prep() {
  dd-22961             |         blk_recount_segments() {
  dd-22961   0.222 us  |           __blk_recalc_rq_segments();
  dd-22961   0.653 us  |         }
  dd-22961   1.141 us  |       }
  dd-22961   1.620 us  |     }
  dd-22961             |     blk_account_io_start() {
  dd-22961   0.137 us  |       disk_map_sector_rcu();
  dd-22961             |       part_round_stats() {
  dd-22961   0.195 us  |         part_round_stats_single();
  dd-22961   0.054 us  |         part_round_stats_single();
  dd-22961   0.955 us  |       }
  dd-22961   2.148 us  |     }
  dd-22961 + 15.847 us |   }
  dd-22961 + 23.642 us | }

uftrace

            [] | main() {
            [] |   A() {
   0.160 us [ 69439] |     busy();
   1.080 us [ 69439] |   } /* A */
            [] |   B() {
   0.050 us [ 69439] |     busy();
   0.240 us [ 69439] |   } /* B */
   1.720 us [ 69439] | } /* main */

BPF

-

tracepoint:kvm:kvm_mmio. The host traps the guest's MMIO accesses; inside the guest, a request is ultimately sent to the host by writing to this MMIO region

-

kprobe:kvm_set_msi. The vbd inside the guest uses MSI interrupts, which are ultimately injected through this function

-

Only the pid of this qemu-kvm process is of interest

-

The GPA of the vbd's MMIO region, which can be obtained inside the guest with lspci

-

userspace_pid of struct kvm: the qemu-kvm process that this struct kvm belongs to

-

msi.devid of struct kvm_kernel_irq_routing_entry: corresponds to the PCI device ID

#include <linux/kvm_host.h>

BEGIN {
    @qemu_pid = $1;
    @mmio_start = 0xa000a00000;
    @mmio_end = 0xa000a00000 + 16384;
    @devid = 1536;
}

tracepoint:kvm:kvm_mmio /pid == @qemu_pid/ {
    if (args->gpa >= @mmio_start && args->gpa < @mmio_end) {
        @start = nsecs;
    }
}

kprobe:kvm_set_msi {
    $e = (struct kvm_kernel_irq_routing_entry *)arg0;
    $kvm = (struct kvm *)arg1;
    if (@start > 0 && $kvm->userspace_pid == @qemu_pid && $e->msi.devid == @devid) {
        @dur = stats(nsecs - @start);
        @start = 0;
    }
}

interval:s:1 {
    print(@dur);
    clear(@dur);
}

@dur: count 598, average 1606320, total 960579533
@dur: count 543, average 1785906, total 969747196
@dur: count 644, average 1495419, total 963049914
@dur: count 624, average 1546575, total 965062935
@dur: count 645, average 1495250, total 964436299
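The bpftrace script is a small state machine: an MMIO write into the vbd's region starts a timer, and the next MSI injected for that device stops it. The same logic in C, as a sketch for reasoning about the script; the event functions are hypothetical stand-ins for the tracepoint and kprobe handlers, and the qemu pid filter is omitted. It makes one property explicit: only one request is tracked at a time, and a second MMIO write before the MSI simply restarts the timer.

```c
#include <stdint.h>

/* State for matching a vbd MMIO write with the MSI that completes it. */
struct mmio_msi_tracker {
    uint64_t mmio_start, mmio_end; /* [start, end) GPA range of the device */
    uint32_t devid;                /* MSI device id of the device */
    uint64_t start_ns;             /* 0 = no request in flight */
    uint64_t count, total_ns;      /* like bpftrace stats(): count and sum */
};

/* Stand-in for tracepoint:kvm:kvm_mmio. */
void on_mmio(struct mmio_msi_tracker *t, uint64_t gpa, uint64_t now_ns)
{
    if (gpa >= t->mmio_start && gpa < t->mmio_end)
        t->start_ns = now_ns; /* a later write overwrites an earlier one */
}

/* Stand-in for kprobe:kvm_set_msi. */
void on_msi(struct mmio_msi_tracker *t, uint32_t devid, uint64_t now_ns)
{
    if (t->start_ns > 0 && devid == t->devid) {
        t->count++;
        t->total_ns += now_ns - t->start_ns;
        t->start_ns = 0;
    }
}

/* Average latency, as in the "average" field of print(@dur). */
uint64_t tracker_avg_ns(const struct mmio_msi_tracker *t)
{
    return t->count ? t->total_ns / t->count : 0;
}
```

Since nsecs is in nanoseconds, the output above says that roughly 540 to 645 request/interrupt pairs were matched per second, each taking about 1.5 to 1.8 ms on average.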

5 Deeper understanding

 * This file contains the magic bits required to compute the global loadavg
 * figure. Its a silly number but people think its important. We go through
 * great pains to make it work on big machines and tickless kernels.

-

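For real-time observation, the runnable and I/O-blocked counts reported by vmstat/dstat are a better choice: loadavg is sampled only every 5 seconds, whereas vmstat can report at a finer granularity, and the loadavg algorithm can to some extent be regarded as lossy.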

-

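With sar/tsar and, say, a 10-minute collection interval, loadavg does cover a longer window and therefore carries more information than a single number sampled every 10 minutes, but we still need to ask how much it is really worth for debugging.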
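To see why loadavg is lossy, here is the exponential moving average the kernel applies every LOAD_FREQ (about 5 s): an 11-bit fixed-point update that blends each sample of active tasks (runnable plus uninterruptible) into the previous value. The constants and rounding follow calc_load() and include/linux/sched/loadavg.h as we understand them; the simulate_load() wrapper is our own illustration, not kernel code.

```c
#define FSHIFT  11               /* bits of fixed-point precision */
#define FIXED_1 (1UL << FSHIFT)  /* 1.0 in fixed point */
#define EXP_1   1884UL           /* 1/exp(5sec/1min) in fixed point */
#define EXP_5   2014UL           /* 1/exp(5sec/5min) */
#define EXP_15  2037UL           /* 1/exp(5sec/15min) */

/* One update: load = load * exp + active * (1 - exp), rounding up when rising. */
unsigned long calc_load(unsigned long load, unsigned long exp, unsigned long active)
{
    unsigned long newload = load * exp + active * (FIXED_1 - exp);
    if (active >= load)
        newload += FIXED_1 - 1;
    return newload / FIXED_1;
}

/* Start from 0 and feed `samples` 5-second samples of `nr_active` tasks. */
double simulate_load(unsigned long exp, unsigned long nr_active, int samples)
{
    unsigned long load = 0;
    for (int i = 0; i < samples; i++)
        load = calc_load(load, exp, nr_active * FIXED_1);
    return (double)load / FIXED_1;
}
```

With a single task that stays runnable, load1 reaches only about 0.63 after one minute (12 samples), the 1 - 1/e behavior of an average whose time constant is one minute; and whatever happens between two samples never enters the calculation at all.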

-

Find out when the load samples are taken

-

Have the test case skip exactly those sampling points

kprobe:calc_load_fold_active /cpu == 0/ { printf("%ld\n", nsecs / 1000000000);}

#include "kernel/sched/sched.h"

kprobe:calc_global_load_tick /cpu == 0/ {
    $rq = (struct rq *)arg0;
    @[$rq->calc_load_update] = count();
}

interval:s:5 {
    print(@);
    clear(@);
}

#./calc_load.bt -I /kernel-source
@[4465886482]: 61
@[4465887733]: 1189

@[4465887733]: 62
@[4465888984]: 1188

kprobe:id_nr_invalid /cpu == 0/ { printf("%ld\n", nsecs / 1000000000);}

while :; do
    sec=$(awk -F. '{print $1}' /proc/uptime)
    rem=$((sec % 5))
    if [ $rem -eq 2 ]; then
        # 1s after updating load
        break;
    fi
    sleep 0.1
done

for i in {0..63}; do
    ./busy 3 &  # run 3s
done
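Why the experiment works can be checked with a tiny model: load is sampled once per period, and a task is counted only if it is active at a sampling instant. The sketch below asks whether a burst [start, start + duration) covers any instant t with t % period == phase. The 5 s period and the 1 s phase (samples when uptime % 5 == 1) are assumptions read off the wait loop's comment; in reality the period is LOAD_FREQ = 5*HZ+1 ticks (1251 ticks at HZ=250, matching the bpftrace keys above), so the phase slowly drifts.

```c
#include <stdbool.h>

/* Does the half-open burst [start, start + duration) contain a sampling
 * instant t with t % period == phase? All values are whole seconds. */
bool burst_is_sampled(long start, long duration, long period, long phase)
{
    /* Latest sampling instant at or before `start`... */
    long first = start - ((start - phase) % period + period) % period;
    /* ...or, if it lies before the burst, the next one. */
    if (first < start)
        first += period;
    return first < start + duration;
}
```

A 3-second burst started at second 2 ends before the sample at second 6, so burst_is_sampled(2, 3, 5, 1) is false, while the same burst started at second 0 straddles the sample at second 1.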

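A large number of busy processes successfully escaped the load accounting, and one can imagine that tasks run by cron could do the same. While loadavg has its value, overall it has the following shortcomings: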

-

It is a system-level statistic, so its connection to any specific application is indirect

-

It is sampled, and the sampling interval (5 s) is long, so in some scenarios it does not reflect the real state of the system

-

Its averaging windows (1/5/15 minutes) are long, so it is slow to reflect what is happening right now

-

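Its semantics are somewhat unclear: it counts not only CPU load but also tasks in D state. This is not a big problem in itself and can be considered more of a feature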

-

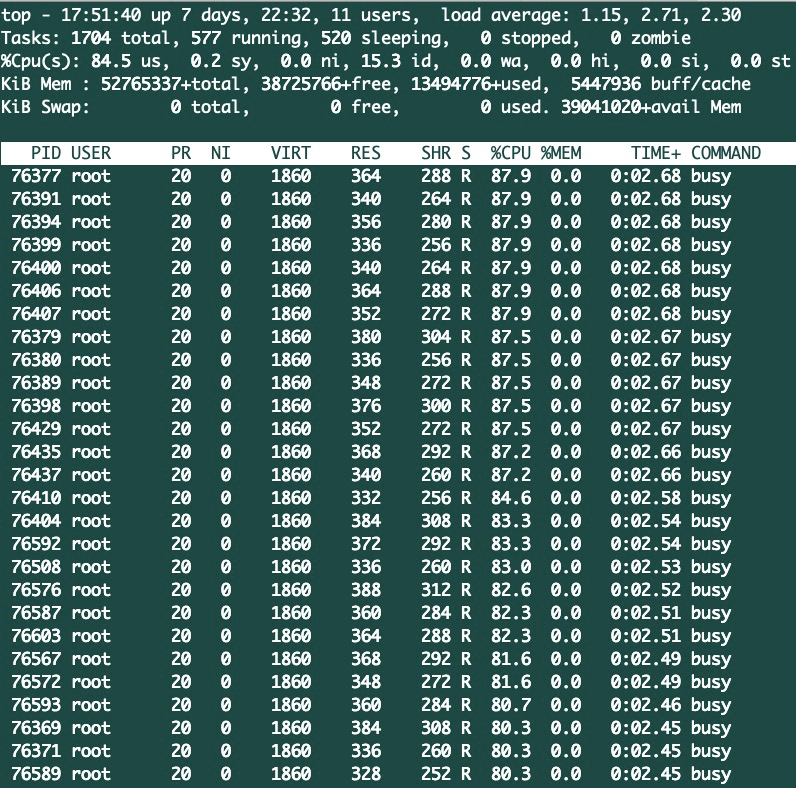

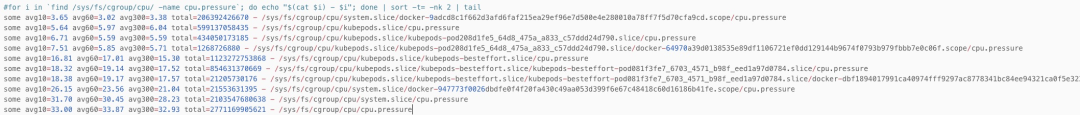

some - time during which some tasks cannot run because of a lack of the resource

-

full - time during which all tasks cannot run because of a lack of the resource; this case does not apply to CPU
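These some/full lines come from the PSI files (/proc/pressure/cpu, memory, io, and cpu.pressure and friends in cgroup v2). A minimal parsing sketch, assuming the documented line format `some avg10=0.00 avg60=0.00 avg300=0.00 total=0`, where the avgN values are percentages of wall time over 10/60/300 seconds and total is the cumulative stall time in microseconds; the struct and function names are illustrative.

```c
#include <stdio.h>
#include <string.h>

struct psi_line {
    char kind[5];                /* "some" or "full" */
    double avg10, avg60, avg300; /* % of wall time stalled */
    unsigned long long total_us; /* cumulative stall time, microseconds */
};

/* Parse one line of a PSI file; returns 0 on success, -1 otherwise. */
int parse_psi_line(const char *line, struct psi_line *out)
{
    int n = sscanf(line, "%4s avg10=%lf avg60=%lf avg300=%lf total=%llu",
                   out->kind, &out->avg10, &out->avg60, &out->avg300,
                   &out->total_us);
    if (n != 5)
        return -1;
    if (strcmp(out->kind, "some") != 0 && strcmp(out->kind, "full") != 0)
        return -1;
    return 0;
}
```

For time windows other than the three averages, differencing total_us between two reads gives the stall time over any interval you choose.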

This raises a few questions, which for reasons of space are not explored here.

-

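Why is the parent cgroup's avg smaller than the child cgroup's? Is it an implementation issue, or are there additional configuration parameters?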

-

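avg10 equals 33, i.e., for one third of the time some task could not run because no CPU was available. Given that system CPU utilization is only around 40%, how should we reasonably interpret and use this value?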

top - 09:55:41 up 127 days, 1:44, 1 user, load average: 111.70, 87.08, 79.41
Tasks: 3685 total, 21 running, 2977 sleeping, 1 stopped, 8 zombie
%Cpu(s): 27.3 us, 8.9 sy, 0.0 ni, 59.8 id, 0.1 wa, 0.0 hi, 4.0 si, 0.0 st

6 RTFSC

#sar -B 1

11:00:16 AM  pgscank/s pgscand/s pgsteal/s    %vmeff
11:00:17 AM       0.00      0.00   3591.00      0.00
11:00:18 AM       0.00      0.00  10313.00      0.00
11:00:19 AM       0.00      0.00   8452.00      0.00

-

pgscand: corresponds to the pgscan_direct field

-

pgscank: corresponds to the pgscan_kswapd field

-

pgsteal: corresponds to the fields starting with pgsteal_

(gdb) b read_vmstat_paging
(gdb) set follow-fork-mode child
(gdb) r
Breakpoint 1, read_vmstat_paging (st_paging=0x424f40) at rd_stats.c:751
751         if ((fp = fopen(VMSTAT, "r")) == NULL)
(gdb) n
754         st_paging->pgsteal = 0;
(gdb)
757         while (fgets(line, sizeof(line), fp) != NULL) {
(gdb)
759             if (!strncmp(line, "pgpgin ", 7)) {
(gdb)
763             else if (!strncmp(line, "pgpgout ", 8)) {
(gdb)
767             else if (!strncmp(line, "pgfault ", 8)) {
(gdb)
771             else if (!strncmp(line, "pgmajfault ", 11)) {
(gdb)
775             else if (!strncmp(line, "pgfree ", 7)) {
(gdb)
779             else if (!strncmp(line, "pgsteal_", 8)) {
(gdb)
784             else if (!strncmp(line, "pgscan_kswapd", 13)) {
(gdb)
789             else if (!strncmp(line, "pgscan_direct", 13)) {
(gdb)
757         while (fgets(line, sizeof(line), fp) != NULL) {
(gdb)

#grep pgsteal_ /proc/vmstat
pgsteal_kswapd 168563
pgsteal_direct 0
pgsteal_anon 0
pgsteal_file 978205

#grep pgscan_ /proc/vmstat
pgscan_kswapd 204242
pgscan_direct 0
pgscan_direct_throttle 0
pgscan_anon 0
pgscan_file 50583828

if (current_is_kswapd()) {
    if (!cgroup_reclaim(sc))
        __count_vm_events(PGSCAN_KSWAPD, nr_scanned);
    count_memcg_events(lruvec_memcg(lruvec), PGSCAN_KSWAPD,
                       nr_scanned);
} else {
    if (!cgroup_reclaim(sc))
        __count_vm_events(PGSCAN_DIRECT, nr_scanned);
    count_memcg_events(lruvec_memcg(lruvec), PGSCAN_DIRECT,
                       nr_scanned);
}
__count_vm_events(PGSCAN_ANON + file, nr_scanned);

-

sar reads the system-wide /proc/vmstat, but for reclaim inside a cgroup, pgscan_kswapd and pgscan_direct are only added to the cgroup's statistics, not to the system-level statistics

-

Likewise, for reclaim inside a cgroup, pgsteal_kswapd and pgsteal_direct are only added to the cgroup's own statistics

-

Note, however, that pgscan_anon, pgscan_file, pgsteal_anon, and pgsteal_file are added only to the system-level statistics

-

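sar reads pgscan_kswapd, pgscan_direct, and pgsteal_*, where the * also matches pgsteal_anon and pgsteal_file. So when reclaim happens inside a cgroup, sar sees pages being stolen (through pgsteal_file/pgsteal_anon) but none being scanned, which is exactly the nonzero pgsteal/s with zero pgscank/s, pgscand/s, and %vmeff in the output above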

#df -h .
Filesystem      Size  Used Avail Use% Mounted on
cgroup             0     0     0    - /sys/fs/cgroup/memory
#grep -c 'pgscan\|pgsteal' memory.stat
0
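Putting the kernel snippet and sar's parsing together explains the odd sar output. Below is a toy model of the accounting (not kernel code; the struct and function names are hypothetical). It models only the scan/steal counters discussed above and assumes, as the list above states, that pgsteal_kswapd/pgsteal_direct follow the same cgroup_reclaim() rule as the scan counters.

```c
#include <stdbool.h>

/* System-wide counters as they appear in /proc/vmstat (subset). */
struct vmstat_model {
    unsigned long pgscan_kswapd, pgscan_direct, pgscan_anon, pgscan_file;
    unsigned long pgsteal_kswapd, pgsteal_direct, pgsteal_anon, pgsteal_file;
};

/* Account one reclaim batch in the system-wide counters. */
void account_reclaim(struct vmstat_model *vs, bool kswapd, bool cgroup_reclaim,
                     bool file, unsigned long scanned, unsigned long stolen)
{
    if (!cgroup_reclaim) { /* per-reclaimer counters: global reclaim only */
        if (kswapd) {
            vs->pgscan_kswapd += scanned;
            vs->pgsteal_kswapd += stolen;
        } else {
            vs->pgscan_direct += scanned;
            vs->pgsteal_direct += stolen;
        }
    }
    /* per-type counters are always updated system-wide */
    if (file) {
        vs->pgscan_file += scanned;
        vs->pgsteal_file += stolen;
    } else {
        vs->pgscan_anon += scanned;
        vs->pgsteal_anon += stolen;
    }
}

/* What sar sums: pgscan_kswapd + pgscan_direct ... */
unsigned long sar_pgscan(const struct vmstat_model *vs)
{
    return vs->pgscan_kswapd + vs->pgscan_direct;
}

/* ... and every field matching the "pgsteal_" prefix. */
unsigned long sar_pgsteal(const struct vmstat_model *vs)
{
    return vs->pgsteal_kswapd + vs->pgsteal_direct +
           vs->pgsteal_anon + vs->pgsteal_file;
}
```

Feeding in kswapd reclaim of file pages inside a memcg yields zero scanned pages but a nonzero steal count, the same shape as the sar output above.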

7 Get hands-on

-

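Answer the questions you already have. Debugging and analysis is a continuous cycle of asking questions and verifying them; without getting hands-on you stay stuck at the first question. For example, if I want to know how physical memory is addressed on some platform and there is no documentation, the only way is to experiment myself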

-

Raise new questions. In debugging and analysis, having questions is not the problem; being unable to come up with any is

-

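Unexpected findings. Many discoveries are not planned; for example, you set out to analyze whether CPU frequency scaling can reduce power consumption, only to find that the system has been running at the lowest frequency all along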

-

Proficiency. Proficiency is efficiency

-

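Improve the product. Imagine how many latent problems a scan across every machine in the entire cloud environment (like a full health checkup) would uncover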

We're hiring

-

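Open positions: Go/Python/Java, infrastructure platform development, performance tuning, and more

-

Technical areas: compute, storage, networking, and more

-

Send your resume to: james.wf@alibaba-inc.com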

References

[1] https://www.cs.rice.edu/~johnmc/comp528/lecture-notes/

[2] https://brendangregg.com/

[3] http://dtrace.org/blogs/bmc/

[4] https://blog.stgolabs.net/

[5] https://lwn.net/

[6] https://github.com/namhyung/uftrace
