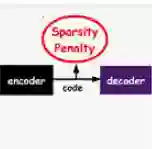

The rapid development of generative AI has transformed content creation, communication, and human development. However, this technology raises profound concerns in high-stakes domains, demanding rigorous methods to analyze and evaluate AI-generated content. Existing analysis methods often treat images as indivisible wholes, yet real-world AI failures typically manifest as specific visual patterns that can evade holistic detection and are better suited to granular, decomposed analysis. Here we introduce Language-Grounded Sparse Encoders (LanSE), a content analysis tool that decomposes images into interpretable visual patterns, each described in natural language. By combining interpretability modules with large multimodal models, LanSE automatically identifies visual patterns within a given data modality. Our method discovers more than 5,000 visual patterns with 93% human agreement, provides decomposed evaluation that outperforms existing methods, establishes the first systematic evaluation of physical plausibility, and extends to medical imaging. Because it extracts language-grounded patterns, the method can be naturally adapted to other fields such as biology and geography, and to other data modalities such as protein structures and time series, thereby advancing content analysis for generative AI.
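The abstract does not specify LanSE's architecture. As a rough illustration of the general idea of a sparse encoder whose latent units carry natural-language labels, the sketch below assumes a top-k sparse autoencoder applied to a precomputed image embedding. The class `SparseEncoderSketch`, the helpers `matvec` and `top_k`, the toy weights, and labels such as `"extra finger"` are all hypothetical and are not the paper's implementation.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply a (rows x cols) matrix, stored row-major, by a length-cols vector."""
    return [sum(w * a for w, a in zip(row, v)) for row in m]


def top_k(v: Sequence[float], k: int) -> Vector:
    """Keep the k largest entries of v and set every other entry to zero."""
    keep = set(sorted(range(len(v)), key=lambda i: v[i], reverse=True)[:k])
    return [a if i in keep else 0.0 for i, a in enumerate(v)]


class SparseEncoderSketch:
    """Hypothetical top-k sparse autoencoder over a precomputed image embedding.

    Assumption: each latent unit has already been given a natural-language
    label (for example, by showing a multimodal model the images that most
    strongly activate it); here the labels are simply passed in.
    """

    def __init__(self, w_enc: Matrix, b_enc: Vector, w_dec: Matrix,
                 b_dec: Vector, labels: List[str], k: int) -> None:
        if len(w_enc) != len(labels) or len(b_enc) != len(labels):
            raise ValueError("need one encoder row, bias, and label per latent")
        self.w_enc, self.b_enc = w_enc, b_enc  # shape: n_latents x embed_dim
        self.w_dec, self.b_dec = w_dec, b_dec  # shape: embed_dim x n_latents
        self.labels, self.k = labels, k

    def encode(self, x: Sequence[float]) -> Vector:
        # Subtract the decoder bias, project, apply ReLU, then keep only k units.
        centered = [a - b for a, b in zip(x, self.b_dec)]
        pre = [a + b for a, b in zip(matvec(self.w_enc, centered), self.b_enc)]
        return top_k([max(0.0, a) for a in pre], self.k)

    def decode(self, z: Sequence[float]) -> Vector:
        # Reconstruct the embedding as a weighted sum of decoder columns.
        return [a + b for a, b in zip(matvec(self.w_dec, z), self.b_dec)]

    def describe(self, x: Sequence[float]) -> List[Tuple[str, float]]:
        # Report the labels of the active latents, strongest first.
        z = self.encode(x)
        active = [(self.labels[i], a) for i, a in enumerate(z) if a > 0.0]
        return sorted(active, key=lambda p: p[1], reverse=True)


if __name__ == "__main__":
    # Toy 3-dimensional embedding with 4 hypothetically labeled latents.
    enc = SparseEncoderSketch(
        w_enc=[[1, 0, 0], [0, 1, 0], [0, 0, 1], [-1, -1, -1]],
        b_enc=[0.0, 0.0, 0.0, 0.0],
        w_dec=[[1, 0, 0, -1], [0, 1, 0, -1], [0, 0, 1, -1]],
        b_dec=[0.0, 0.0, 0.0],
        labels=["extra finger", "garbled text", "inconsistent shadow", "blurred face"],
        k=2,
    )
    x = [0.9, 0.1, 0.5]
    print(enc.describe(x))  # [('extra finger', 0.9), ('inconsistent shadow', 0.5)]
    print(enc.decode(enc.encode(x)))
```

Top-k sparsity is used here only because it makes the number of patterns reported per image explicit; an L1 penalty on the latent activations during training is a common alternative. Training the encoder and generating the labels are not shown.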