Read Together Weekly | ACL 2019 & NAACL 2019: A Salon on Relation Extraction from Text

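"Read Together Weekly" is a paper co-reading event launched by PaperWeekly. Each session features papers selected from top conferences and recent advances in natural language processing, computer vision, machine learning, and related fields. We also invite the papers' authors to attend in person for deeper, more substantive discussion.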

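We hope to give PaperWeekly readers a new way to read papers. We also want to offer a chance to meet peers and find a community, and an opportunity to talk face to face with authors of papers from top international conferences.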

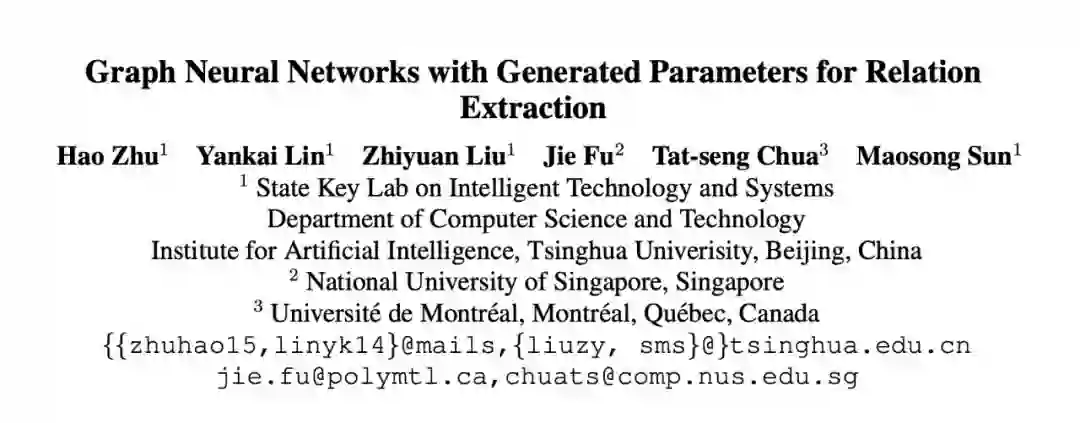

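At 2 p.m. on Sunday, June 30, Read Together Weekly will host Hao Zhu, an undergraduate in the Department of Computer Science and Technology at Tsinghua University. He will present his latest papers, published at ACL 2019 and NAACL 2019, two top conferences in natural language processing.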

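This session's theme is relation extraction from text. The author will approach it from two angles: measuring similarity between relations, and graph neural network methods. Anyone interested in relation extraction, natural language processing, or related topics is welcome to join the discussion in person.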

Hao Zhu

Undergraduate, Department of Computer Science and Technology, Tsinghua University

Hao Zhu is graduating from Tsinghua University with a bachelor's degree in computer science and will join CMU LTI as a Ph.D. student this fall. He feels fortunate to have worked with Zhiyuan Liu, Jason Eisner (JHU), Matt Gormley (CMU), and Tat-Seng Chua (NUS) during his undergraduate research.

His ultimate goal is to understand human intelligence. Guided by Feynman's famous quote, "What I cannot create, I do not understand," he works on teaching machine learning models to acquire human intelligence. More specifically, he is currently interested in teaching machines to speak human language and to perform human-level logical reasoning. Toward these goals, he strives to devise principled, computable, and effective approaches.

ACL 2019

Abstract: In this paper, we propose a novel graph neural network with generated parameters (GP-GNNs). The parameters in the propagation module, i.e., the transition matrices used in the message-passing procedure, are produced by a generator that takes natural language sentences as input. We evaluate GP-GNNs on relation extraction from text in both bag-level and instance-level settings. Experimental results on a human-annotated dataset and two distantly supervised datasets show that the multi-hop reasoning mechanism yields significant improvements. We also perform a qualitative analysis demonstrating that our model can discover more accurate relations through multi-hop relational reasoning. Code and data are released at https://github.com/thunlp/gp-gnn.
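The idea of transition matrices "produced by a generator" can be illustrated with a toy propagation step in plain Python. This is a minimal sketch under stated assumptions, not the released thunlp/gp-gnn code. It assumes the generator is a single linear layer that maps a sentence encoding to a flattened transition matrix. It also assumes the nonlinearity is tanh and uses random vectors in place of real sentence encodings. The names `generate_transition` and `propagate` are hypothetical.

```python
import math
import random

def generate_transition(sentence_vec, gen_weights, dim):
    # ASSUMPTION: the generator is one linear layer mapping a sentence encoding
    # to a flattened dim x dim transition matrix, reshaped row by row.
    flat = [sum(w * s for w, s in zip(row, sentence_vec)) for row in gen_weights]
    return [flat[r * dim:(r + 1) * dim] for r in range(dim)]

def matvec(matrix, vec):
    # Plain matrix-vector product.
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]

def propagate(hidden, edge_sentences, gen_weights, dim):
    # One propagation layer: node i sums tanh(A_ji h_j) over incoming edges (j, i),
    # where A_ji is generated from the sentence linking entities j and i.
    # ASSUMPTION: tanh as the nonlinearity.
    new_hidden = {}
    for node in hidden:
        acc = [0.0] * dim
        for (src, dst), sent_vec in edge_sentences.items():
            if dst != node:
                continue
            a = generate_transition(sent_vec, gen_weights, dim)
            message = [math.tanh(x) for x in matvec(a, hidden[src])]
            acc = [x + y for x, y in zip(acc, message)]
        new_hidden[node] = acc
    return new_hidden

if __name__ == "__main__":
    rng = random.Random(0)
    dim, sent_dim = 2, 3
    # Toy generator weights: one row per transition-matrix entry.
    gen_weights = [[rng.uniform(-1, 1) for _ in range(sent_dim)] for _ in range(dim * dim)]
    entities = ["e1", "e2", "e3"]
    hidden = {e: [rng.uniform(-1, 1) for _ in range(dim)] for e in entities}
    # Random stand-ins for sentence encodings, one per ordered entity pair.
    edges = {(a, b): [rng.uniform(-1, 1) for _ in range(sent_dim)]
             for a in entities for b in entities if a != b}
    for _ in range(2):  # two stacked layers allow two-hop reasoning
        hidden = propagate(hidden, edges, gen_weights, dim)
    print(hidden)
```

Stacking layers lets information travel along multi-hop paths. After two layers, each entity's state reflects entities two edges away, which is the intuition behind the multi-hop reasoning the abstract refers to.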

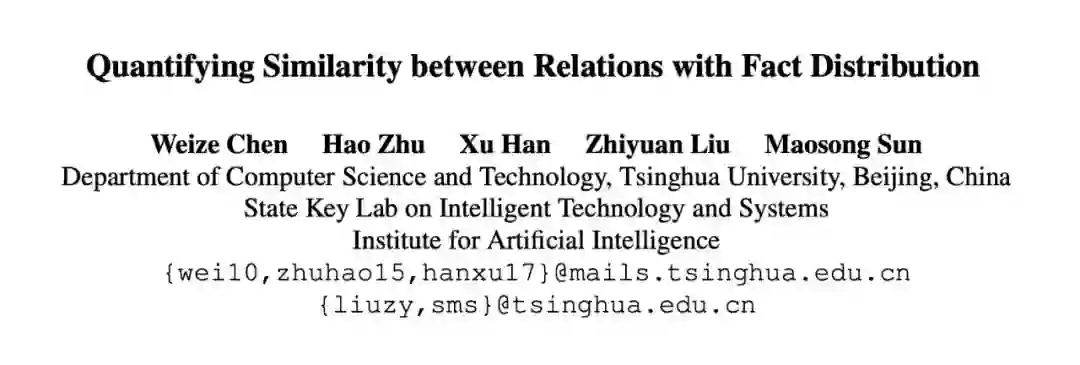

ACL 2019

Abstract: We introduce a conceptually simple and effective method to quantify the similarity between relations in knowledge bases. Specifically, our approach is based on the divergence between the conditional probability distributions over entity pairs. In this paper, these distributions are parameterized by a very simple neural network. Although computing the exact similarity is intractable, we provide a sampling-based method to get a good approximation.

We empirically show the outputs of our approach significantly correlate with human judgments. By applying our method to various tasks, we also find that (1) our approach could effectively detect redundant relations extracted by open information extraction (Open IE) models, that (2) even the most competitive models for relational classification still make mistakes among very similar relations, and that (3) our approach could be incorporated into negative sampling and softmax classification to alleviate these mistakes. The source code and experiment details of this paper can be obtained from https://github.com/thunlp/relation-similarity.
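The sampling-based approximation mentioned above rests on a standard identity. The KL divergence between two conditional distributions over entity pairs is an expectation under the first distribution, so it can be estimated by averaging log-ratios over samples. The sketch below is an illustration under stated assumptions, not the paper's code. The two toy distributions `P_R1` and `P_R2` are hypothetical stand-ins for $P(h, t \mid r_1)$ and $P(h, t \mid r_2)$, which the paper parameterizes with a neural network. The sketch also assumes `P_R2` gives nonzero probability to every pair `P_R1` can produce. How the paper turns divergences into a final similarity score is not reproduced here.

```python
import math
import random

# HYPOTHETICAL toy distributions over (head, tail) entity pairs for two relations.
P_R1 = {("Paris", "France"): 0.4, ("Berlin", "Germany"): 0.3,
        ("Rome", "Italy"): 0.2, ("Lyon", "France"): 0.1}
P_R2 = {pair: 0.25 for pair in P_R1}

def sampled_kl(p, q, num_samples=20000, seed=0):
    # Monte Carlo estimate of KL(p || q) = E_{x ~ p}[log p(x) - log q(x)].
    # ASSUMPTION: q(x) > 0 wherever p(x) > 0.
    rng = random.Random(seed)
    pairs = list(p)
    draws = rng.choices(pairs, weights=[p[x] for x in pairs], k=num_samples)
    return sum(math.log(p[x]) - math.log(q[x]) for x in draws) / num_samples

def exact_kl(p, q):
    # Exact KL divergence, feasible only because the toy support is tiny.
    return sum(px * (math.log(px) - math.log(q[x])) for x, px in p.items() if px > 0)

if __name__ == "__main__":
    print(sampled_kl(P_R1, P_R2), exact_kl(P_R1, P_R2))
```

With real knowledge bases, the space of entity pairs is far too large to sum over, which is why the abstract calls exact computation intractable. The sampled average only needs the ability to draw pairs from one relation's distribution and score them under both.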

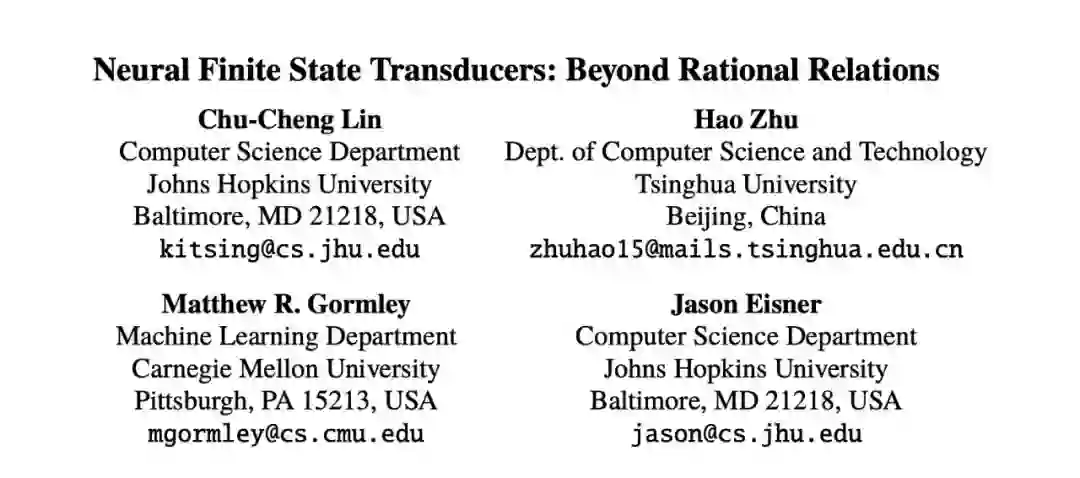

NAACL 2019

Abstract: We introduce neural finite state transducers (NFSTs), a family of string transduction models defining joint and conditional probability distributions over pairs of strings. The probability of a string pair is obtained by marginalizing over all its accepting paths in a finite state transducer. In contrast to ordinary weighted FSTs, however, each path is scored using an arbitrary function such as a recurrent neural network, which breaks the usual conditional independence assumption (Markov property). NFSTs are more powerful than previous finite-state models with neural features (Rastogi et al., 2016). We present training and inference algorithms for locally and globally normalized variants of NFSTs. In experiments on different transduction tasks, they compete favorably against seq2seq models while offering interpretable paths that correspond to hard monotonic alignments.
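Marginalizing over all accepting paths with a non-Markov scorer can be illustrated by brute force on tiny strings. This is a toy sketch, not the authors' training or inference algorithms. Its transducer has three hypothetical arc types: substitute/copy, delete, and insert. A hypothetical scorer, `history_score`, stands in for a recurrent neural network: each arc's score depends on the whole path prefix. Because of that dependence, the usual dynamic program over states does not apply, so the sketch enumerates every path. Enumeration grows exponentially with string length.

```python
import math

def accepting_paths(x, y):
    # Enumerate every edit path rewriting x into y using three HYPOTHETICAL arc types:
    # ("sub", a, b) reads a and writes b, ("del", a, "") reads only, ("ins", "", b) writes only.
    # Each path corresponds to one monotonic alignment of x and y.
    if not x and not y:
        return [[]]
    paths = []
    if x and y:
        paths += [[("sub", x[0], y[0])] + p for p in accepting_paths(x[1:], y[1:])]
    if x:
        paths += [[("del", x[0], "")] + p for p in accepting_paths(x[1:], y)]
    if y:
        paths += [[("ins", "", y[0])] + p for p in accepting_paths(x, y[1:])]
    return paths

def history_score(path):
    # HYPOTHETICAL stand-in for an RNN scorer: the penalty for an edit grows with
    # the number of earlier edits, so scores depend on the full prefix (non-Markov).
    total = 0.0
    for t, (op, a, b) in enumerate(path):
        prior_edits = sum(1 for prev in path[:t] if prev[0] != "sub" or prev[1] != prev[2])
        if op == "sub" and a == b:
            total += 1.0
        else:
            total -= 0.5 * (1 + prior_edits)
    return total

def unnormalized_weight(x, y, score=history_score):
    # Marginalize over all accepting paths: sum of exp(score(path)).
    return sum(math.exp(score(p)) for p in accepting_paths(x, y))

if __name__ == "__main__":
    print(len(accepting_paths("ab", "ab")), unnormalized_weight("ab", "ab"))
```

The locally and globally normalized variants in the abstract differ in how such path scores become probabilities. That normalization is omitted here, so the function returns only an unnormalized weight.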

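Time: Sunday, June 30, 14:00–16:00

Venue: Room 102, Beijing Academy of Artificial Intelligence

1-1 Zhongguancun South Street, Haidian District, Beijing

Zhongguancun Lingchuang Space (Information Valley)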

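1 / Long-press the QR code to register

2 / Join the NLP discussion group

Registration deadline: Saturday, June 29, 12:00

* Seating is limited. Successful registrants will receive a text message with an e-ticket QR code, so please keep an eye out for it.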

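Notes:

* If you cannot attend, please leave a message on the PaperWeekly WeChat official account at least 24 hours before the event starts. Use the format "Cancel registration + the phone number you registered with". Anyone who fails to show up without notice will not be eligible to register for future events.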

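Scan the QR code to follow PaperWeekly👇

PaperWeekly

Department of Computer Science and Technology, Tsinghua University

Beijing Academy of Artificial Intelligence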

You can now also find us on Zhihu

Search for "PaperWeekly" on the Zhihu home page

Click "Follow" to subscribe to our column

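About PaperWeekly

PaperWeekly is an academic platform that recommends, explains, discusses, and reports on cutting-edge AI research papers. If you work or do research in AI, tap "Discussion Group" in our WeChat official account, and our assistant will add you to a PaperWeekly discussion group.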

▽ 点击 | 阅读原文 | 立刻报名