Python Crawlers: Crawler Offense and Defense

WeChat official account: 计算机与网络安全 (Computer and Network Security)

ID: Computer-network

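For ordinary users, a web crawler is a handy tool: it makes it easy to pull the information you want from a website. For the website, however, crawlers consume a great deal of resources while generating no clicks and no advertising revenue. Some surveys reportedly attribute more than 60% of all web traffic to crawlers, so it is no wonder that site operators loathe them and want them gone as quickly as possible.

Site operators take all kinds of measures to keep crawlers out, and crawler authors respond in kind, giving their crawlers more features, more speed, and better concealment. In this contest between crawling and anti-crawling the lead keeps changing hands, and it remains to be seen who will win in the end.

1. Creating a basic crawler

We first write a small crawler, then assume various restrictions on the site's side and look at how each can be worked around, so that the crawler can be used freely. A site's restrictions are certainly not aimed at a crawler like ours, which makes only a handful of requests. Generally speaking, a crawler that makes fewer than 100 requests has nothing to worry about; the crawler here is purely for demonstration.

Taking the Meijutt (美剧天堂) site as an example, we use Scrapy to crawl the most recently updated American TV series. Go to the Scrapy working directory and create the meiju100 project by running: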

cd

cd code/scrapy

scrapy startproject meiju100

cd meiju100

scrapy genspider meiju100Spider meijutt.com

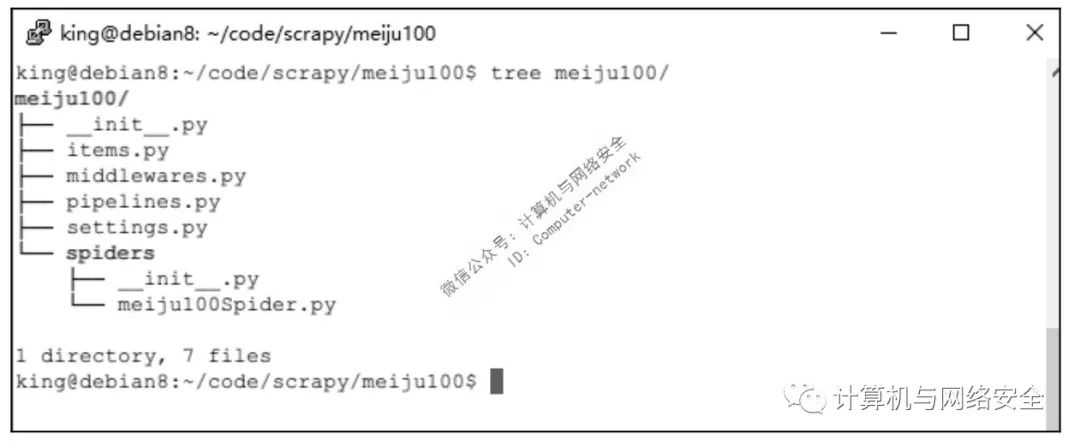

tree meiju100

The result is shown in Figure 1.

The modified items.py is as follows:

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # http://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class Meiju100Item(scrapy.Item):
        # Fields scraped for each series on the new100 page
        storyName = scrapy.Field()   # series title
        storyState = scrapy.Field()  # update status
        tvStation = scrapy.Field()   # broadcasting station (may be missing)
        updateTime = scrapy.Field()  # time of the last update

The modified meiju100Spider.py is as follows:

    # -*- coding: utf-8 -*-
    import scrapy
    from meiju100.items import Meiju100Item


    class Meiju100spiderSpider(scrapy.Spider):
        name = 'meiju100Spider'
        allowed_domains = ['meijutt.com']
        start_urls = ['http://meijutt.com/new100.html']

        def parse(self, response):
            # The numbered <div> in each <li> serves as the anchor;
            # the other fields are read relative to its parent.
            subSelector = response.xpath('//li/div[@class="lasted-num fn-left"]')
            items = []
            for sub in subSelector:
                item = Meiju100Item()
                item['storyName'] = sub.xpath('../h5/a/text()').extract()[0]
                # Try the text inside a nested <font> first, then the bare text.
                try:
                    item['storyState'] = sub.xpath('../span[@class="state1 new100state1"]/font/text()').extract()[0]
                except IndexError:
                    item['storyState'] = sub.xpath('../span[@class="state1 new100state1"]/text()').extract()[0]
                # Kept as a list because the station may be absent.
                item['tvStation'] = sub.xpath('../span[@class="mjtv"]/text()').extract()
                try:
                    item['updateTime'] = sub.xpath('../div[@class="lasted-time new100time fn-right"]/font/text()').extract()[0]
                except IndexError:
                    item['updateTime'] = sub.xpath('../div[@class="lasted-time new100time fn-right"]/text()').extract()[0]
                items.append(item)
            return items
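The paired try/except blocks in parse() fall back from a nested font element to the bare text of the parent element. The following is a minimal standard-library sketch of that fallback, not the spider itself: the markup in SAMPLE, the class name state, and the helper names state_text and parse_states are all made up for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup: the first entry wraps its status in <font>,
# the second carries the status as bare text.
SAMPLE = """
<ul>
  <li><h5><a>Show A</a></h5><span class="state"><font>S01E02</font></span></li>
  <li><h5><a>Show B</a></h5><span class="state">Finished</span></li>
</ul>
"""


def state_text(li):
    """Return the status text, preferring a nested <font> element."""
    span = li.find("span[@class='state']")
    font = span.find("font")
    return font.text if font is not None else span.text


def parse_states(markup):
    """Return (title, status) pairs for every <li> in the markup."""
    root = ET.fromstring(markup)
    return [(li.find("h5/a").text, state_text(li)) for li in root.findall("li")]


print(parse_states(SAMPLE))
```

Checking for the nested element explicitly avoids relying on an IndexError to steer control flow, at the cost of one extra lookup per entry.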

The modified pipelines.py is as follows:

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

    import time


    class Meiju100Pipeline:
        def process_item(self, item, spider):
            # One output file per day, named <YYYYMMDD>meiju.txt
            today = time.strftime('%Y%m%d', time.localtime())
            fileName = today + 'meiju.txt'
            # Append one tab-separated record per item, encoded as UTF-8.
            with open(fileName, 'a', encoding='utf-8') as fp:
                fp.write("%s \t" % item['storyName'])
                fp.write("%s \t" % item['storyState'])
                if len(item['tvStation']) == 0:
                    fp.write("unknown \t")
                else:
                    fp.write("%s \t" % item['tvStation'][0])
                fp.write("%s \n" % item['updateTime'])
            return item

The modified settings.py is as follows:

    # -*- coding: utf-8 -*-

    # Scrapy settings for meiju100 project
    #
    # For simplicity, this file contains only the most important settings by
    # default. All the other settings are documented here:
    #
    # http://doc.scrapy.org/en/latest/topics/settings.html
    #

    BOT_NAME = 'meiju100'

    SPIDER_MODULES = ['meiju100.spiders']
    NEWSPIDER_MODULE = 'meiju100.spiders'

    # Crawl responsibly by identifying yourself (and your website) on the User-Agent
    #USER_AGENT = 'meiju100 (+http://www.yourdomain.com)'


    ### user define
    # Enable the pipeline; the number sets its order among pipelines.
    ITEM_PIPELINES = {
        'meiju100.pipelines.Meiju100Pipeline': 10,
    }

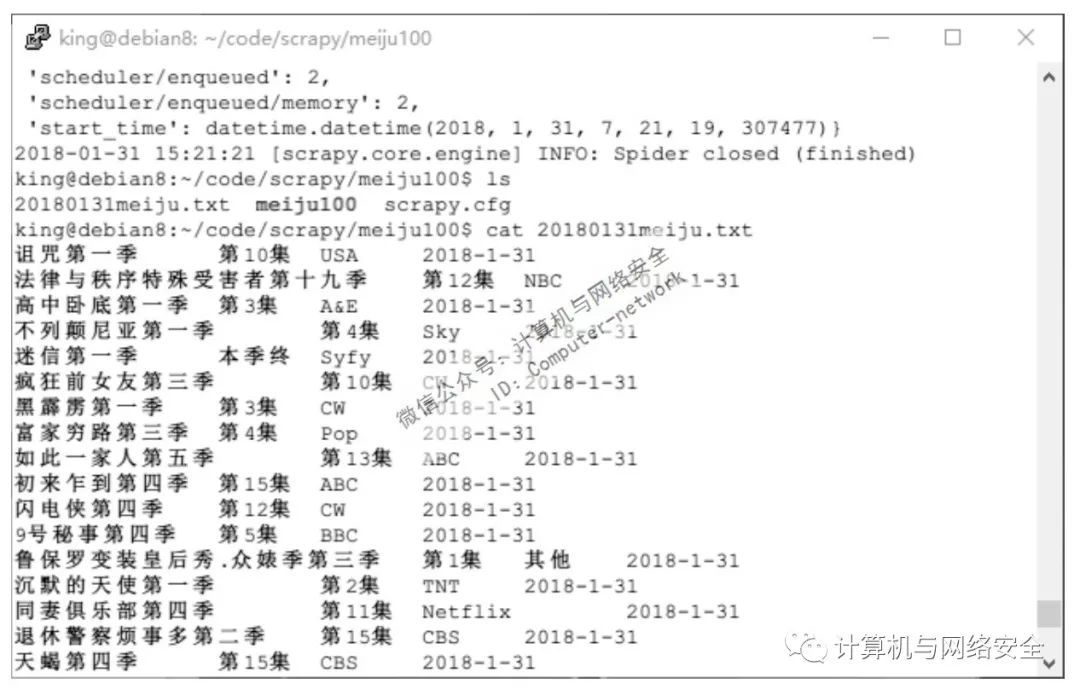

The crawler is now complete. Return to the root directory of the meiju100 project and run:

scrapy crawl meiju100Spider

cat *.txt

The result is shown in Figure 2.

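2. Getting around request-interval blocking

In Scrapy, the interval between two consecutive requests is controlled by the DOWNLOAD_DELAY setting. Anti-crawler measures aside, the smaller this value the better. But if DOWNLOAD_DELAY is set to 0.1, that is, one request to the site every 0.1 seconds, any administrator who so much as filters the logs will spot the crawler, and will not be amused at having their intelligence insulted this way.

If the results are not needed urgently and you would rather slip in quietly, set this value somewhat higher. Find this line in settings.py (line 30 in the author's file), remove the leading comment marker, and change the value: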

DOWNLOAD_DELAY=5

A request every 5 seconds is far less conspicuous. Unless the target site has very little traffic, requests at this rate are hard to notice.
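A perfectly regular 5-second rhythm can itself stand out in a log. Scrapy's RANDOMIZE_DOWNLOAD_DELAY setting, which is enabled by default, varies each wait between 0.5 and 1.5 times DOWNLOAD_DELAY. The sketch below only imitates that calculation with the standard library to show the resulting range; the helper name randomized_delay is hypothetical and is not part of Scrapy.

```python
import random

DOWNLOAD_DELAY = 5  # seconds, the value chosen in settings.py


def randomized_delay(base_delay, rng=random):
    """Return a wait between 0.5x and 1.5x of base_delay, in seconds."""
    return rng.uniform(0.5 * base_delay, 1.5 * base_delay)


# Five sample waits; each falls between 2.5 and 7.5 seconds.
delays = [randomized_delay(DOWNLOAD_DELAY) for _ in range(5)]
print(["%.2f" % d for d in delays])
```

With a base delay of 5 seconds the average request rate stays the same, but consecutive requests no longer arrive at exactly equal intervals.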

3. Getting around cookie-based blocking

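A site can use cookies to recognize that successive requests come from the same visitor. Disabling cookies in settings.py keeps the crawler from being tracked this way:

COOKIES_ENABLED=False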

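4. Getting around User-Agent blocking

The User-Agent is the browser's identity string; websites use it to determine the type of browser. Many sites refuse requests whose User-Agent does not meet certain criteria, which is why earlier Scrapy projects impersonated a browser when visiting a site. But what if the site treats any User-Agent that visits too frequently as the mark of a crawler and blacklists it?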

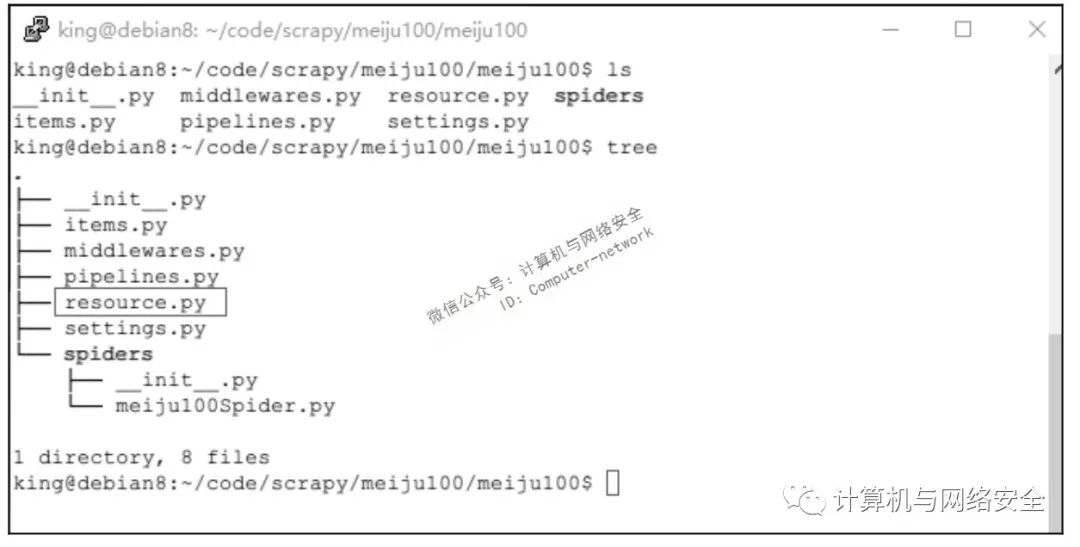

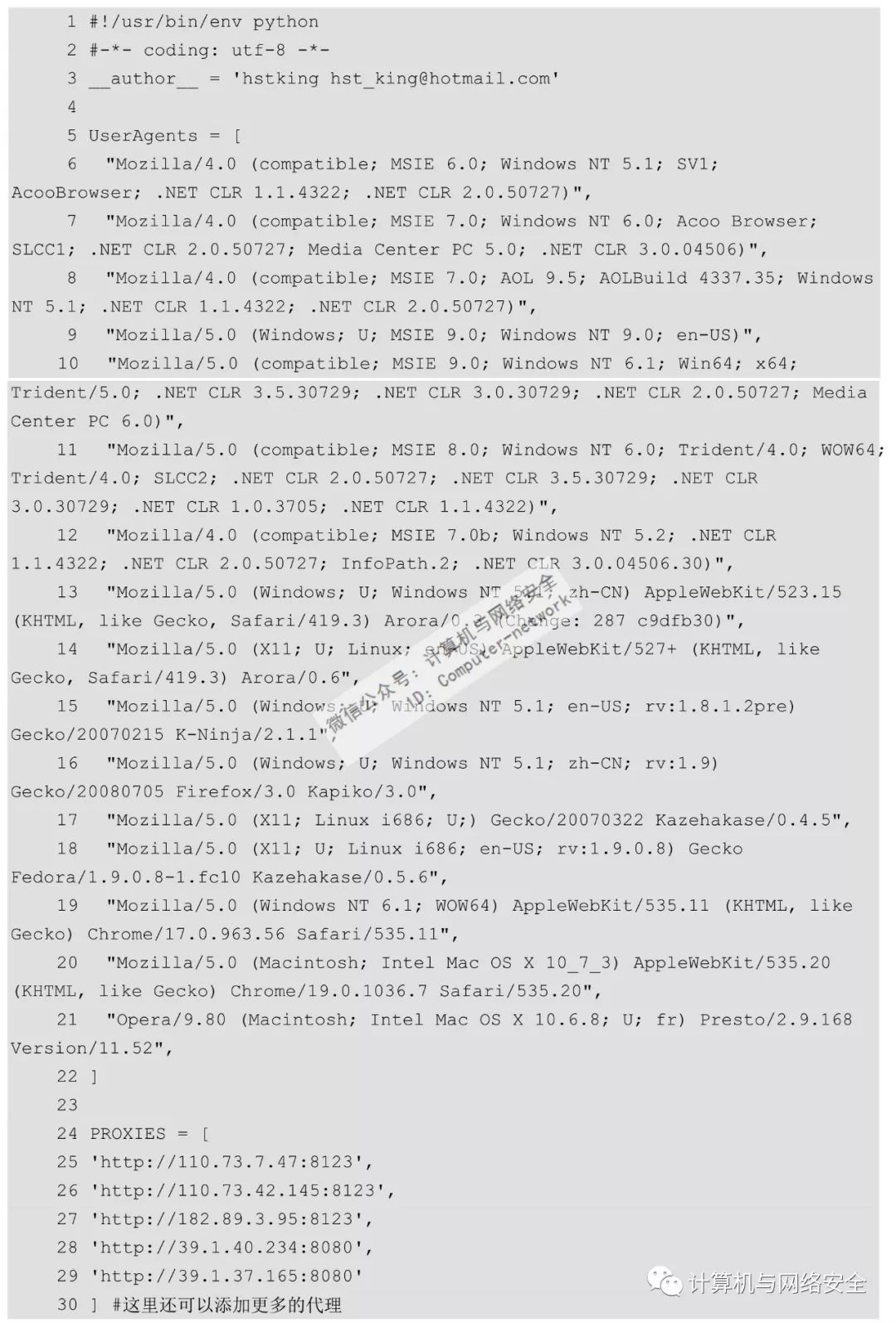

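This is easy to deal with as well: prepare a large pool of User-Agent strings, pick one at random, and switch to another after each use. Choose a few suitable browser User-Agent strings and put them in a resource file, resource.py, then copy resource.py into the same directory as settings.py, as shown in Figure 3.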

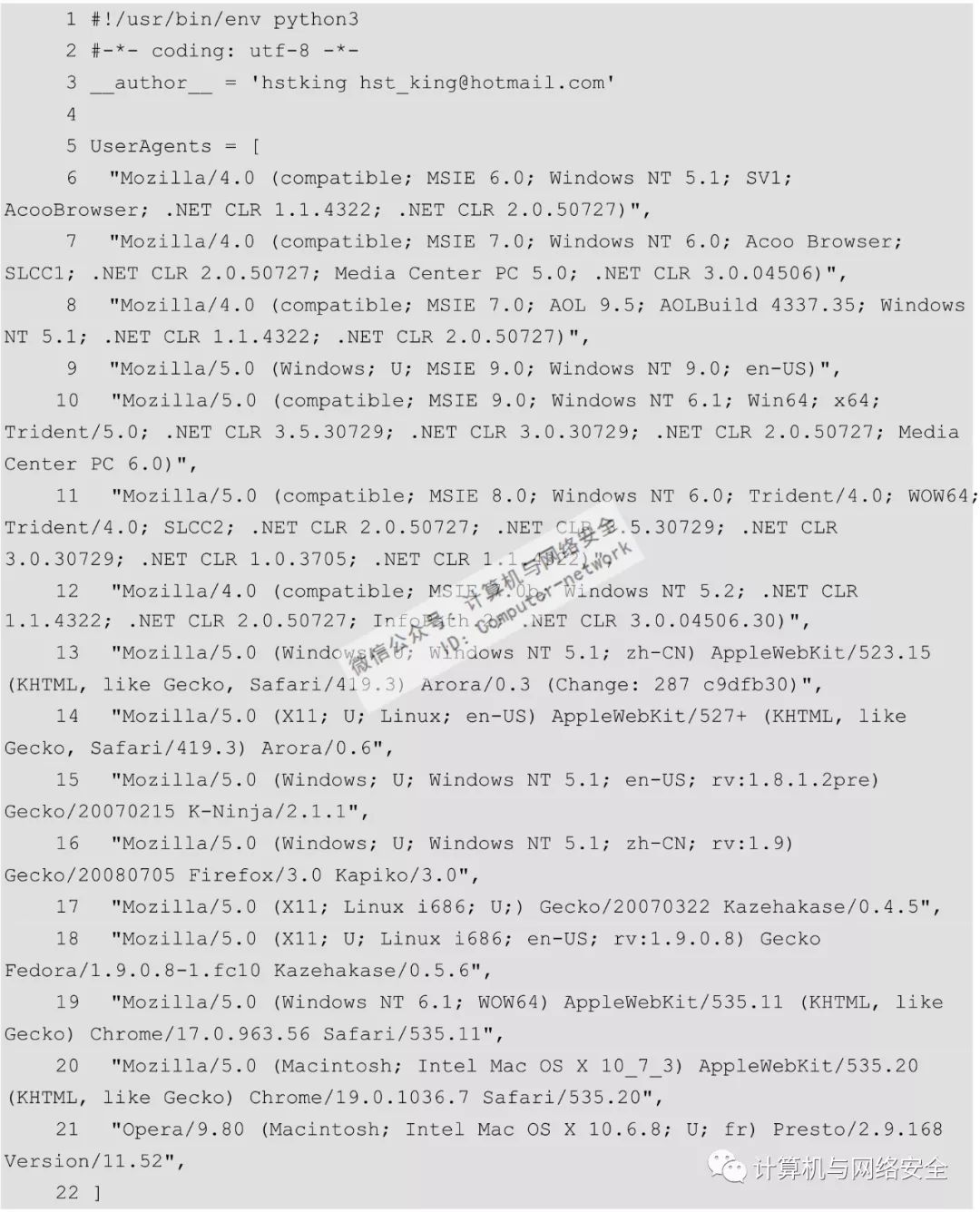

The contents of resource.py are as follows:
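The original listing is not reproduced in the text, so the following is only an illustrative reconstruction. The list name UserAgents is taken from the import in middlewares.py; the strings themselves are sample browser User-Agent values chosen for this sketch, not the author's.

```python
import random

# resource.py (illustrative contents; the author's actual strings are not shown)
UserAgents = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_12_6) AppleWebKit/603.3.8 "
    "(KHTML, like Gecko) Version/10.1.2 Safari/603.3.8",
    "Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:54.0) Gecko/20100101 Firefox/54.0",
]

# Picking one entry at random, as the middleware does.
print(random.choice(UserAgents))
```

Only the UserAgents list belongs in resource.py; the last two lines merely show how an entry is drawn from the pool.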

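When Scrapy created the project it also generated a middlewares.py file. In the earlier run of scrapy crawl meiju100Spider, middlewares.py played no part. To change the User-Agent, we can add a new class directly to middlewares.py (a separate file would work too, but since UserAgentMiddleware is itself a middleware, keeping everything together is more convenient). The new class inherits from UserAgentMiddleware. A setting in settings.py then makes the new class replace the original UserAgentMiddleware. Modify middlewares.py as follows: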

    # -*- coding: utf-8 -*-

    # Define here the models for your spider middleware
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/spider-middleware.html

    import random

    from scrapy import signals
    from scrapy.downloadermiddlewares.useragent import UserAgentMiddleware

    from meiju100.resource import UserAgents


    class Meiju100SpiderMiddleware:
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the spider middleware does not modify the
        # passed objects.

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_spider_input(self, response, spider):
            # Called for each response that goes through the spider
            # middleware and into the spider.

            # Should return None or raise an exception.
            return None

        def process_spider_output(self, response, result, spider):
            # Called with the results returned from the Spider, after
            # it has processed the response.

            # Must return an iterable of Request, dict or Item objects.
            for i in result:
                yield i

        def process_spider_exception(self, response, exception, spider):
            # Called when a spider or process_spider_input() method
            # (from other spider middleware) raises an exception.

            # Should return either None or an iterable of Response, dict
            # or Item objects.
            pass

        def process_start_requests(self, start_requests, spider):
            # Called with the start requests of the spider, and works
            # similarly to the process_spider_output() method, except
            # that it doesn't have a response associated.

            # Must return only requests (not items).
            for r in start_requests:
                yield r

        def spider_opened(self, spider):
            spider.logger.info('Spider opened: %s' % spider.name)


    class Meiju100DownloaderMiddleware:
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the downloader middleware does not modify the
        # passed objects.

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_request(self, request, spider):
            # Called for each request that goes through the downloader
            # middleware.

            # Must either:
            # - return None: continue processing this request
            # - or return a Response object
            # - or return a Request object
            # - or raise IgnoreRequest: process_exception() methods of
            #   installed downloader middleware will be called
            return None

        def process_response(self, request, response, spider):
            # Called with the response returned from the downloader.

            # Must either:
            # - return a Response object
            # - return a Request object
            # - or raise IgnoreRequest
            return response

        def process_exception(self, request, exception, spider):
            # Called when a download handler or a process_request()
            # (from other downloader middleware) raises an exception.

            # Must either:
            # - return None: continue processing this exception
            # - return a Response object: stops process_exception() chain
            # - return a Request object: stops process_exception() chain
            pass

        def spider_opened(self, spider):
            spider.logger.info('Spider opened: %s' % spider.name)


    class CustomUserAgentMiddleware(UserAgentMiddleware):
        def __init__(self, user_agent='Scrapy'):
            # Start with a User-Agent drawn at random from the pool.
            self.user_agent = random.choice(UserAgents)

        def process_request(self, request, spider):
            # Draw a fresh User-Agent for every request, so that the
            # identity changes after each use.
            request.headers['User-Agent'] = random.choice(UserAgents)

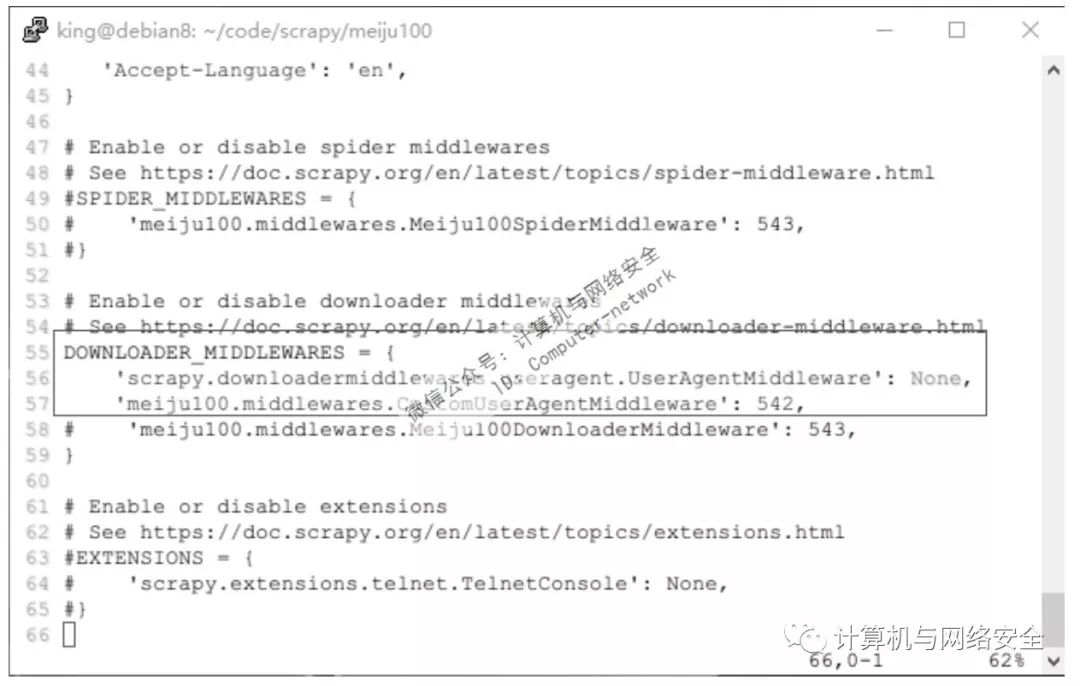

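UserAgentMiddleware is enabled by default. Since the goal is to replace it with CustomUserAgentMiddleware, it has to be switched off, so in settings.py its value is set to None. CustomUserAgentMiddleware is set to 542. This number determines the order in which middlewares run; it is not arbitrary, but chosen with reference to the order of the class being replaced.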
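Based on the description above, the corresponding entry in settings.py would look roughly like the fragment below. This is a reconstruction from the text: the value 542 and the None assignment come from the paragraph, while the dotted path meiju100.middlewares.CustomUserAgentMiddleware is assumed from the project layout.

```python
# settings.py fragment (reconstructed from the description; paths assumed)
DOWNLOADER_MIDDLEWARES = {
    # Switch off Scrapy's built-in User-Agent middleware.
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
    # Enable the replacement at position 542, as stated in the text.
    'meiju100.middlewares.CustomUserAgentMiddleware': 542,
}
```

Setting a built-in middleware to None is Scrapy's documented way of disabling an entry from DOWNLOADER_MIDDLEWARES_BASE.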

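Save and close the file, return to the project directory, and run:

scrapy crawl meiju100Spider

The result is fine. Now look at the spider's log, shown in Figure 5.

The CustomUserAgentMiddleware middleware that we configured has taken effect.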

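5. Getting around IP blocking

In anti-crawling, the IP address is actually what gets noticed most easily. When one IP visits the same site repeatedly in a short period, a small number of requests may be waved through by the administrator as an internet café or a large LAN; a large number is certainly a crawler. Home users can get a new IP by restarting their modem (not a very dependable method, since you can hardly restart the modem every time you are blocked), and leased-line users cannot very well ask their ISP to swap the line. The most convenient approach is therefore to use proxies.

Here we prepare a proxy pool and pick a proxy from it at random, choosing a different one for each request. Open the resource file resource.py created earlier (in the same directory as settings.py) and add an IP pool, that is, a list of proxy servers.

The modified resource.py is as follows:

The proxies in PROXIES were collected from the web and expire over time; supply working proxies of your own when you use this.

Modify the process_request function of the Meiju100DownloaderMiddleware class in middlewares.py, as shown in Figure 6.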
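Since the listing itself is not reproduced in the text, here is an illustrative version of the addition. Only the name PROXIES comes from the article. The entry format (full proxy URLs) is an assumption, and the addresses are placeholders from the documentation-only range 192.0.2.0/24, so they will not work as real proxies.

```python
# Addition to resource.py (illustrative; format and addresses are assumptions)
PROXIES = [
    "http://192.0.2.10:8080",
    "http://192.0.2.11:3128",
    "http://192.0.2.12:8888",
]
```

The existing UserAgents list stays in the same file; only the PROXIES list is new.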
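The figure is not reproduced here, so the following is a minimal sketch of what such a process_request might look like, not the author's exact code. It relies on Scrapy's convention that a request is sent through the proxy URL stored in request.meta['proxy']. To keep the sketch runnable without Scrapy, the pool is defined inline (in the project it would be imported from meiju100.resource) and fake_request is a stand-in for a real Request object.

```python
import random
from types import SimpleNamespace

# Placeholder pool; in the project this would come from meiju100.resource.
PROXIES = ["http://192.0.2.10:8080", "http://192.0.2.11:3128"]


class Meiju100DownloaderMiddleware:
    def process_request(self, request, spider):
        # Route this request through a proxy drawn at random from the pool.
        request.meta["proxy"] = random.choice(PROXIES)
        return None  # let Scrapy continue processing the request


# Stand-in for a scrapy Request: only the meta dict is needed here.
fake_request = SimpleNamespace(meta={})
Meiju100DownloaderMiddleware().process_request(fake_request, spider=None)
print(fake_request.meta["proxy"])
```

Because the choice is made inside process_request, each request draws its proxy independently, which matches the goal of changing the proxy from one request to the next.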

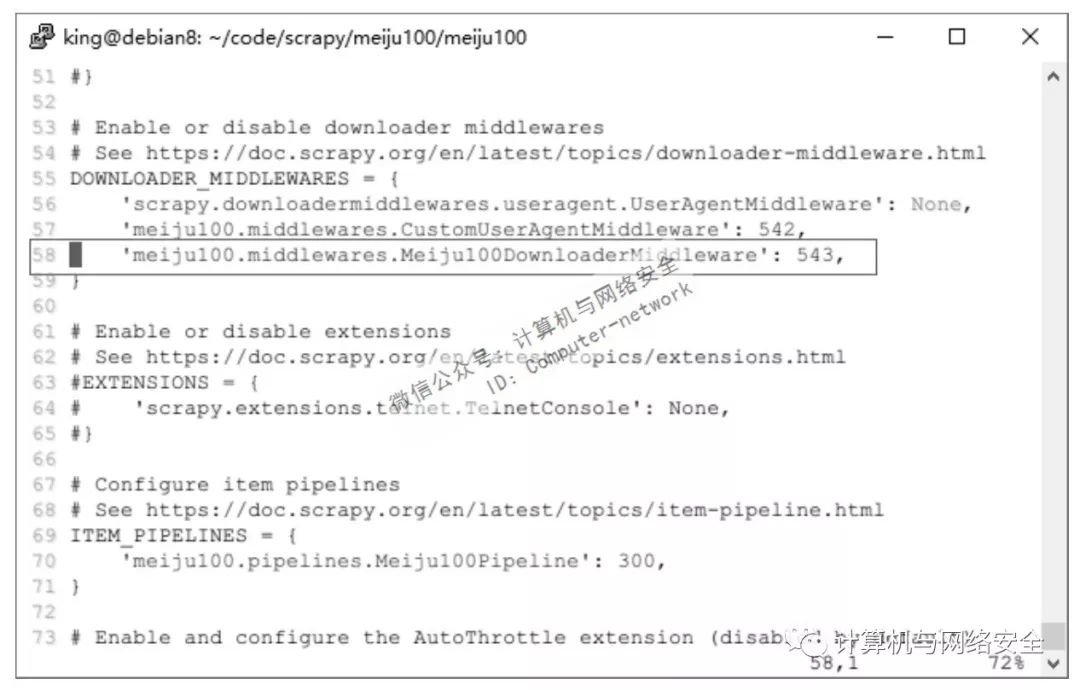

Modify settings.py to add Meiju100DownloaderMiddleware to the enabled middlewares, as shown in Figure 7.
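As the figure is not reproduced, the fragment below is a guess at the resulting setting. The order value 543 is an assumption (it is the number Scrapy's project template suggests for a generated downloader middleware); the text does not state which value the author used.

```python
# settings.py fragment (illustrative; the order value 543 is an assumption)
DOWNLOADER_MIDDLEWARES = {
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
    'meiju100.middlewares.CustomUserAgentMiddleware': 542,
    # Enable the proxy-selecting middleware.
    'meiju100.middlewares.Meiju100DownloaderMiddleware': 543,
}
```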

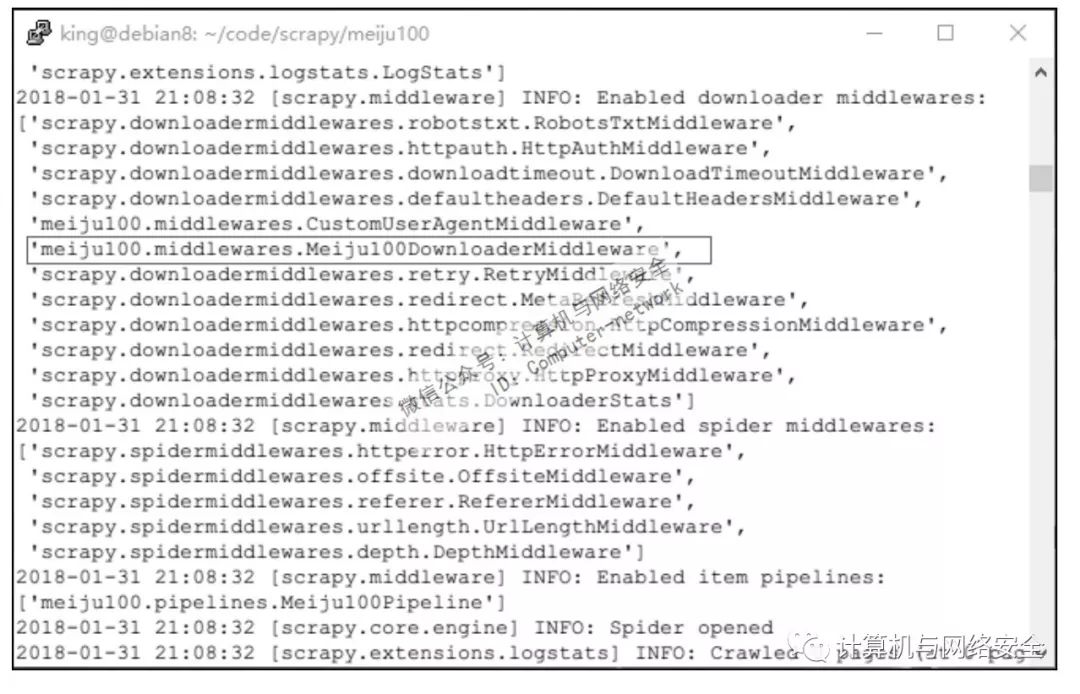

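Save the file, return to the project directory, and run:

scrapy crawl meiju100Spider

Check the Scrapy log, shown in Figure 8.

The program runs as designed: Scrapy now picks a proxy server at random from the pool.

Real-world anti-crawling techniques go well beyond these, but what is covered here is enough for individual users. After all, few individuals routinely crawl thousands or tens of thousands of pages.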
