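[Paper Recommendations] Seven Recent Papers on Person Re-Identification: Deep Ranking, Camera Style Adaptation, Adversarial Binary Coding, Re-Ranking, Multi-Level Similarity, Deep Spatial Feature Reconstruction, and Graph Correspondence Transfer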

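[Overview] Following yesterday's six papers on person re-identification, the Zhuanzhi content team presents seven more recent papers on person re-identification (Re-ID) today. Read on for the details.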

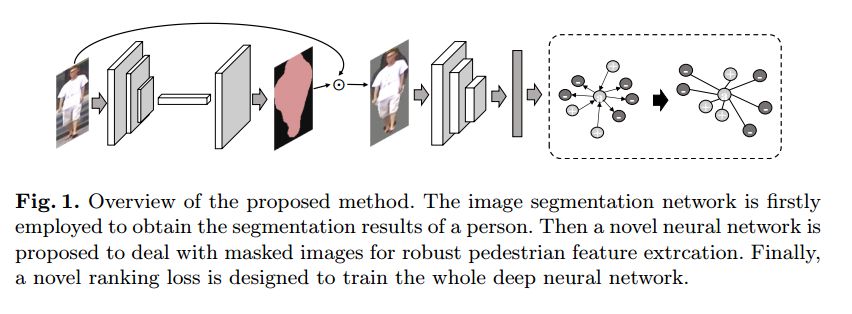

1. MaskReID: A Mask Based Deep Ranking Neural Network for Person Re-identification

Authors: Lei Qi, Jing Huo, Lei Wang, Yinghuan Shi, Yang Gao

Affiliations: Nanjing University, University of Wollongong

Abstract: In this paper, a novel mask based deep ranking neural network with skipped fusing layer (MaskReID) is proposed for person re-identification (Re-ID). For person Re-ID, there are multiple challenges co-exist throughout the re-identification process, including cluttered background, appearance variations (illumination, pose, occlusion, etc.) among different camera views and interference of samples of similar appearance. A compact framework is proposed to address these problems. Firstly, to address the problem of cluttered background, masked images which are the image segmentations of the original images are incorporated as input in the proposed neural network. Then, to remove the appearance variations so as to obtain more discriminative feature, a new network structure is proposed which fuses feature of different layers as the final feature. This makes the final feature a combination of all the low, middle and high level feature, which is more informative. Lastly, as person Re-ID is a special image retrieval task, a novel ranking loss is designed to optimize the whole network. The ranking loss relieved the interference problem of similar samples while producing ranking results. The experimental results demonstrate that the proposed method consistently outperforms the state-of-the-art methods on many person Re-ID datasets, especially large-scale datasets, such as, CUHK03, Market1501 and DukeMTMC-reID.

Venue: arXiv, April 11, 2018

Link:

http://www.zhuanzhi.ai/document/eda020abd6b0838e9e023806052d86a3

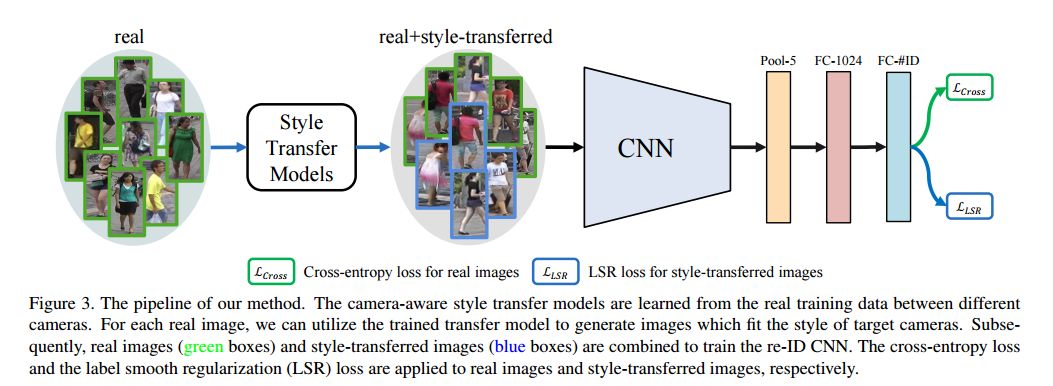

2. Camera Style Adaptation for Person Re-identification

Authors: Zhun Zhong, Liang Zheng, Zhedong Zheng, Shaozi Li, Yi Yang

Affiliations: Xiamen University, University of Technology Sydney

Abstract: Being a cross-camera retrieval task, person re-identification suffers from image style variations caused by different cameras. The art implicitly addresses this problem by learning a camera-invariant descriptor subspace. In this paper, we explicitly consider this challenge by introducing camera style (CamStyle) adaptation. CamStyle can serve as a data augmentation approach that smooths the camera style disparities. Specifically, with CycleGAN, labeled training images can be style-transferred to each camera, and, along with the original training samples, form the augmented training set. This method, while increasing data diversity against over-fitting, also incurs a considerable level of noise. In the effort to alleviate the impact of noise, the label smooth regularization (LSR) is adopted. The vanilla version of our method (without LSR) performs reasonably well on few-camera systems in which over-fitting often occurs. With LSR, we demonstrate consistent improvement in all systems regardless of the extent of over-fitting. We also report competitive accuracy compared with the state of the art.

Venue: arXiv, April 10, 2018

Link:

http://www.zhuanzhi.ai/document/b3726c52dfbc0e7f8b93cb1efe183388

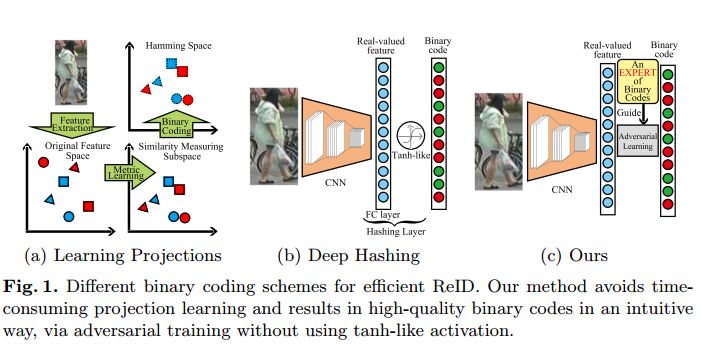
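To make the label smooth regularization (LSR) mentioned in the CamStyle abstract more concrete, here is a minimal Python sketch of generic label smoothing: the true class keeps probability 1 - ε + ε/K and every other class receives ε/K, where K is the number of identities. The function names and the default `epsilon=0.1` are assumptions made for this illustration, and the paper's exact formulation (including which samples it smooths) may differ.

```python
import math


def smoothed_targets(label, num_classes, epsilon=0.1):
    """Return a smoothed target distribution over num_classes classes.

    Every class gets epsilon / num_classes; the true class additionally
    gets 1 - epsilon, so the distribution still sums to 1.
    """
    base = epsilon / num_classes
    return [base + (1.0 - epsilon if k == label else 0.0)
            for k in range(num_classes)]


def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def lsr_cross_entropy(logits, label, epsilon=0.1):
    """Cross-entropy between the smoothed targets and the softmax output."""
    targets = smoothed_targets(label, len(logits), epsilon)
    log_probs = log_softmax(logits)
    return -sum(t * lp for t, lp in zip(targets, log_probs))
```

Setting `epsilon=0.0` recovers the ordinary one-hot cross-entropy, which makes it easy to compare training with and without smoothing.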

3. Adversarial Binary Coding for Efficient Person Re-identification

Authors: Zheng Liu, Jie Qin, Annan Li, Yunhong Wang, Luc Van Gool

Affiliations: Beihang University

Abstract: Person re-identification (ReID) aims at matching persons across different views/scenes. In addition to accuracy, the matching efficiency has received more and more attention because of demanding applications using large-scale data. Several binary coding based methods have been proposed for efficient ReID, which either learn projections to map high-dimensional features to compact binary codes, or directly adopt deep neural networks by simply inserting an additional fully-connected layer with tanh-like activations. However, the former approach requires time-consuming hand-crafted feature extraction and complicated (discrete) optimizations; the latter lacks the necessary discriminative information greatly due to the straightforward activation functions. In this paper, we propose a simple yet effective framework for efficient ReID inspired by the recent advances in adversarial learning. Specifically, instead of learning explicit projections or adding fully-connected mapping layers, the proposed Adversarial Binary Coding (ABC) framework guides the extraction of binary codes implicitly and effectively. The discriminability of the extracted codes is further enhanced by equipping the ABC with a deep triplet network for the ReID task. More importantly, the ABC and triplet network are simultaneously optimized in an end-to-end manner. Extensive experiments on three large-scale ReID benchmarks demonstrate the superiority of our approach over the state-of-the-art methods.

Venue: arXiv, April 6, 2018

Link:

http://www.zhuanzhi.ai/document/b575b56c5d6eb23f01d3c4c38e86be3f

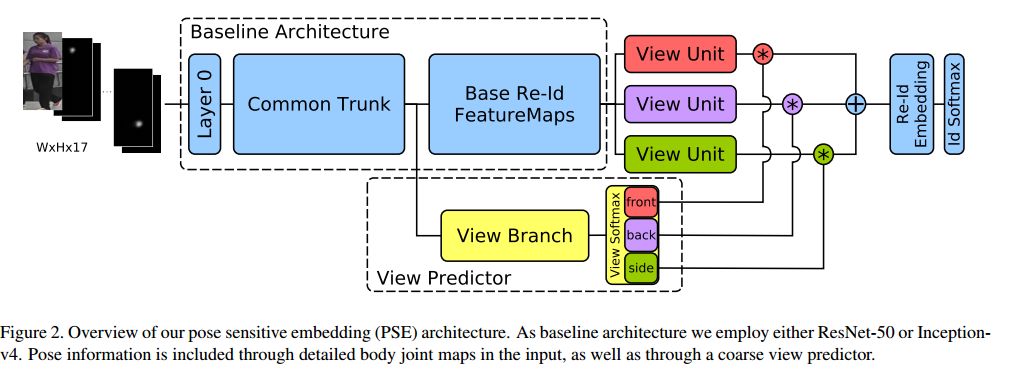
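The efficiency argument in the Adversarial Binary Coding abstract rests on matching compact binary codes rather than real-valued features. The Python sketch below illustrates only that matching step, using Hamming distance on bit-packed integers. The sign thresholding in `binarize` is a stand-in assumption for this example: the ABC framework learns its codes adversarially, and all function names here are hypothetical.

```python
def binarize(features):
    """Pack a real-valued feature vector into an integer bit code.

    Assumption for illustration: positive values map to bit 1 and
    everything else to bit 0. This is not how ABC derives its codes.
    """
    code = 0
    for value in features:
        code = (code << 1) | (1 if value > 0 else 0)
    return code


def hamming_distance(a, b):
    """Number of bit positions in which two codes differ."""
    return bin(a ^ b).count("1")


def rank_gallery(query_code, gallery_codes):
    """Return gallery indices sorted by Hamming distance to the query."""
    return sorted(
        range(len(gallery_codes)),
        key=lambda i: hamming_distance(query_code, gallery_codes[i]),
    )
```

Because each comparison is one XOR plus a bit count, ranking a large gallery this way is much cheaper than computing Euclidean distances between high-dimensional float vectors.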

4. A Pose-Sensitive Embedding for Person Re-Identification with Expanded Cross Neighborhood Re-Ranking

Authors: M. Saquib Sarfraz, Arne Schumann, Andreas Eberle, Rainer Stiefelhagen

Abstract: Person re identification is a challenging retrieval task that requires matching a person's acquired image across non overlapping camera views. In this paper we propose an effective approach that incorporates both the fine and coarse pose information of the person to learn a discriminative embedding. In contrast to the recent direction of explicitly modeling body parts or correcting for misalignment based on these, we show that a rather straightforward inclusion of acquired camera view and/or the detected joint locations into a convolutional neural network helps to learn a very effective representation. To increase retrieval performance, re-ranking techniques based on computed distances have recently gained much attention. We propose a new unsupervised and automatic re-ranking framework that achieves state-of-the-art re-ranking performance. We show that in contrast to the current state-of-the-art re-ranking methods our approach does not require to compute new rank lists for each image pair (e.g., based on reciprocal neighbors) and performs well by using simple direct rank list based comparison or even by just using the already computed euclidean distances between the images. We show that both our learned representation and our re-ranking method achieve state-of-the-art performance on a number of challenging surveillance image and video datasets. The code is available online at: https://github.com/pse-ecn/pose-sensitive-embedding

Venue: arXiv, April 2, 2018

Link:

http://www.zhuanzhi.ai/document/b5f4c0c2ec94708fce9141722bf9b999
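The abstract of paper 4 notes that re-ranking can work directly from already computed Euclidean distances. The sketch below is a deliberately simplified illustration of that general idea, not the authors' expanded cross neighborhood method (their released code is linked in the abstract): it replaces the distance between a probe and a gallery image with the average distance between each image's nearest neighbors and the other image. The neighborhood size `k` and every function name are assumptions made for this example.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pairwise_distances(features):
    """Full distance matrix over all images (probe and gallery together)."""
    n = len(features)
    return [[euclidean(features[i], features[j]) for j in range(n)]
            for i in range(n)]


def top_neighbors(dist, i, k):
    """Indices of the k images closest to image i, excluding i itself."""
    others = (j for j in range(len(dist)) if j != i)
    return sorted(others, key=lambda j: dist[i][j])[:k]


def neighborhood_distance(dist, p, g, k):
    """Average d(neighbor of p, g) and d(neighbor of g, p).

    Only the precomputed matrix `dist` is used; no new rank lists
    are built per image pair.
    """
    p_neighbors = top_neighbors(dist, p, k)
    g_neighbors = top_neighbors(dist, g, k)
    total = (sum(dist[n][g] for n in p_neighbors)
             + sum(dist[m][p] for m in g_neighbors))
    return total / (len(p_neighbors) + len(g_neighbors))
```

The resulting score is symmetric in `p` and `g`, and sorting gallery images by it gives a re-ranked list for each probe.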

5. Efficient and Deep Person Re-Identification using Multi-Level Similarity

Authors: Yiluan Guo, Ngai-Man Cheung

Affiliations: Singapore University of Technology and Design

Abstract: Person Re-Identification (ReID) requires comparing two images of person captured under different conditions. Existing work based on neural networks often computes the similarity of feature maps from one single convolutional layer. In this work, we propose an efficient, end-to-end fully convolutional Siamese network that computes the similarities at multiple levels. We demonstrate that multi-level similarity can improve the accuracy considerably using low-complexity network structures in ReID problem. Specifically, first, we use several convolutional layers to extract the features of two input images. Then, we propose Convolution Similarity Network to compute the similarity score maps for the inputs. We use spatial transformer networks (STNs) to determine spatial attention. We propose to apply efficient depth-wise convolution to compute the similarity. The proposed Convolution Similarity Networks can be inserted into different convolutional layers to extract visual similarities at different levels. Furthermore, we use an improved ranking loss to further improve the performance. Our work is the first to propose to compute visual similarities at low, middle and high levels for ReID. With extensive experiments and analysis, we demonstrate that our system, compact yet effective, can achieve competitive results with much smaller model size and computational complexity.

Venue: arXiv, April 2, 2018

Link:

http://www.zhuanzhi.ai/document/02620b2c806c8b049810a38e23201be9

6. Deep Spatial Feature Reconstruction for Partial Person Re-identification: Alignment-Free Approach

Authors: Lingxiao He, Jian Liang, Haiqing Li, Zhenan Sun

Affiliations: University of Chinese Academy of Sciences

Abstract: Partial person re-identification (re-id) is a challenging problem, where only several partial observations (images) of people are available for matching. However, few studies have provided flexible solutions to identifying a person in an image containing arbitrary part of the body. In this paper, we propose a fast and accurate matching method to address this problem. The proposed method leverages Fully Convolutional Network (FCN) to generate fix-sized spatial feature maps such that pixel-level features are consistent. To match a pair of person images of different sizes, a novel method called Deep Spatial feature Reconstruction (DSR) is further developed to avoid explicit alignment. Specifically, DSR exploits the reconstructing error from popular dictionary learning models to calculate the similarity between different spatial feature maps. In that way, we expect that the proposed FCN can decrease the similarity of coupled images from different persons and increase that from the same person. Experimental results on two partial person datasets demonstrate the efficiency and effectiveness of the proposed method in comparison with several state-of-the-art partial person re-id approaches. Additionally, DSR achieves competitive results on a benchmark person dataset Market1501 with 83.58% Rank-1 accuracy.

Venue: arXiv, April 1, 2018

Link:

http://www.zhuanzhi.ai/document/2be3fa074a04eebde2872787a6572a0a
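The DSR abstract reports its Market1501 result as Rank-1 accuracy, a metric several papers in this list rely on. As a rough guide to what that number means, the Python sketch below counts the fraction of queries whose nearest gallery image shares the query's identity. The function name and input layout are assumptions for this example, and it omits the additional gallery filtering (for instance by camera) that benchmark evaluation protocols typically apply.

```python
def rank1_accuracy(distances, query_ids, gallery_ids):
    """Fraction of queries whose closest gallery item has the same identity.

    distances[q][g] is the distance between query q and gallery item g.
    """
    hits = 0
    for q, row in enumerate(distances):
        # Index of the gallery item with the smallest distance to query q.
        best = min(range(len(row)), key=row.__getitem__)
        if gallery_ids[best] == query_ids[q]:
            hits += 1
    return hits / len(distances)
```

For example, with three queries of which two retrieve the correct identity first, the function returns 2/3.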

7. Graph Correspondence Transfer for Person Re-identification

Authors: Qin Zhou, Heng Fan, Shibao Zheng, Hang Su, Xinzhe Li, Shuang Wu, Haibin Ling

Affiliations: Temple University, Tsinghua University, Shanghai Jiao Tong University

Abstract: In this paper, we propose a graph correspondence transfer (GCT) approach for person re-identification. Unlike existing methods, the GCT model formulates person re-identification as an off-line graph matching and on-line correspondence transferring problem. In specific, during training, the GCT model aims to learn off-line a set of correspondence templates from positive training pairs with various pose-pair configurations via patch-wise graph matching. During testing, for each pair of test samples, we select a few training pairs with the most similar pose-pair configurations as references, and transfer the correspondences of these references to test pair for feature distance calculation. The matching score is derived by aggregating distances from different references. For each probe image, the gallery image with the highest matching score is the re-identifying result. Compared to existing algorithms, our GCT can handle spatial misalignment caused by large variations in view angles and human poses owing to the benefits of patch-wise graph matching. Extensive experiments on five benchmarks including VIPeR, Road, PRID450S, 3DPES and CUHK01 evidence the superior performance of GCT model over other state-of-the-art methods.

Venue: arXiv, April 1, 2018

Link:

http://www.zhuanzhi.ai/document/917e704f70744faf6cc733d7c348d2ab
