Couldn't get a visa or find time to attend? David Abel's 70-page PDF of NeurIPS 2019 conference notes is all you need to read

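[Overview] NeurIPS 2019 is in full swing in Vancouver, Canada, with a record attendance of about 13,000. The conference has announced 7 best papers, along with a range of papers and tutorials covering hot topics such as graph machine learning, meta-learning, kernel methods, and hardware-software co-design. If you cannot attend in person, here is a 70-page set of NeurIPS 2019 conference notes from Brown University PhD student David Abel.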

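David Abel is a PhD student in computer science at Brown University, advised by Michael Littman. His research focuses on abstraction and its role in intelligence. He is also an intern at the Future of Humanity Institute at the University of Oxford.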

Homepage: https://david-abel.github.io/

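The notes walk through the conference highlights, laying out for each talk the research problem and background, the findings and contributions, and the main methods and results. That makes it quick to find the topics you care about. From there you can turn to the talk videos and papers for a deeper understanding of the work.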

Summary of the notes:

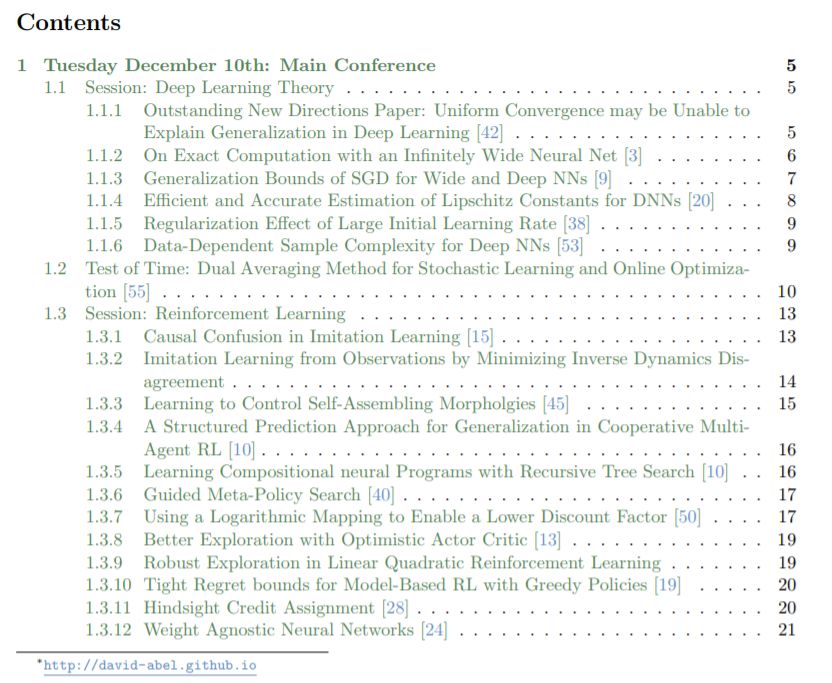

1. Deep Learning Theory

Outstanding New Directions Paper: Uniform Convergence may be Unable to Explain Generalization in Deep Learning

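Paper link (the slug of this URL names a different NeurIPS 2019 paper, Distribution-Independent PAC Learning of Halfspaces with Massart Noise):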

https://papers.nips.cc/paper/8722-distribution-independent-pac-learning-of-halfspaces-with-massart-noise

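Abstract: To explain the surprisingly good generalization of overparameterized deep networks, recent work has developed a variety of generalization bounds for deep learning, all based on the fundamental learning-theoretic technique of uniform convergence.

It is well known that many of these existing bounds are numerically large. Through extensive experiments, the authors bring to light a more concerning aspect of these bounds: in practice, they can increase with the size of the training set. Guided by these observations, they then present examples of overparameterized linear classifiers and neural networks trained by gradient descent (GD) in which uniform convergence provably cannot "explain generalization", even when the implicit bias of GD is taken into account to the fullest extent possible. More precisely, even if one considers only the set of classifiers output by GD, which have test error below some small ε in these settings, applying (two-sided) uniform convergence to this set of classifiers yields only a vacuous generalization guarantee larger than 1-ε. With these findings, the authors cast doubt on the power of uniform-convergence-based generalization bounds to give a complete picture of why overparameterized deep networks generalize well.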
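The last claim can be written out as a rough sketch. The notation here is assumed for illustration and may not match the paper's exact definitions: $h_S$ is the classifier GD outputs on a training set $S$, $L_{\mathcal{D}}$ is the test error, $\hat{L}_S$ is the empirical error on $S$, and $\mathcal{S}_\delta$ is a collection of training sets that occurs with probability at least $1-\delta$. A two-sided uniform convergence bound restricted to GD's outputs is then the smallest $\epsilon_{\text{unif-alg}}$ satisfying

$$
\sup_{S \in \mathcal{S}_\delta}\ \sup_{h \in \mathcal{H}_\delta}
\left| L_{\mathcal{D}}(h) - \hat{L}_S(h) \right| \le \epsilon_{\text{unif-alg}},
\qquad
\mathcal{H}_\delta = \{\, h_{S'} : S' \in \mathcal{S}_\delta \,\}.
$$

In this notation, the abstract's statement is that there are settings where $L_{\mathcal{D}}(h_S) < \epsilon$ for the classifiers GD actually produces, yet $\epsilon_{\text{unif-alg}} > 1 - \epsilon$. Note that the double supremum lets a classifier $h_{S'}$ be paired with a training set $S$ other than the one it was trained on, which is where the gap between the bound and the true generalization error can open up.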

On Exact Computation with an Infinitely Wide Neural Net, https://papers.nips.cc/paper/9025-on-exact-computation-with-an-infinitely-wide-neural-net

Generalization Bounds of SGD for Wide and Deep NNs

Efficient and Accurate Estimation of Lipschitz Constants for DNNs

Regularization Effect of Large Initial Learning Rate

Data-Dependent Sample Complexity for Deep NNs

2. Reinforcement Learning

Causal Confusion in Imitation Learning

Imitation Learning from Observations by Minimizing Inverse Dynamics Disagreement

Learning to Control Self-Assembling Morphologies

A Structured Prediction Approach for Generalization in Cooperative Multi-Agent RL

Learning Compositional Neural Programs with Recursive Tree Search

Guided Meta-Policy Search

Using a Logarithmic Mapping to Enable a Lower Discount Factor

Better Exploration with Optimistic Actor Critic

Robust Exploration in Linear Quadratic Reinforcement Learning

Tight Regret Bounds for Model-Based RL with Greedy Policies

Hindsight Credit Assignment

Weight Agnostic Neural Networks

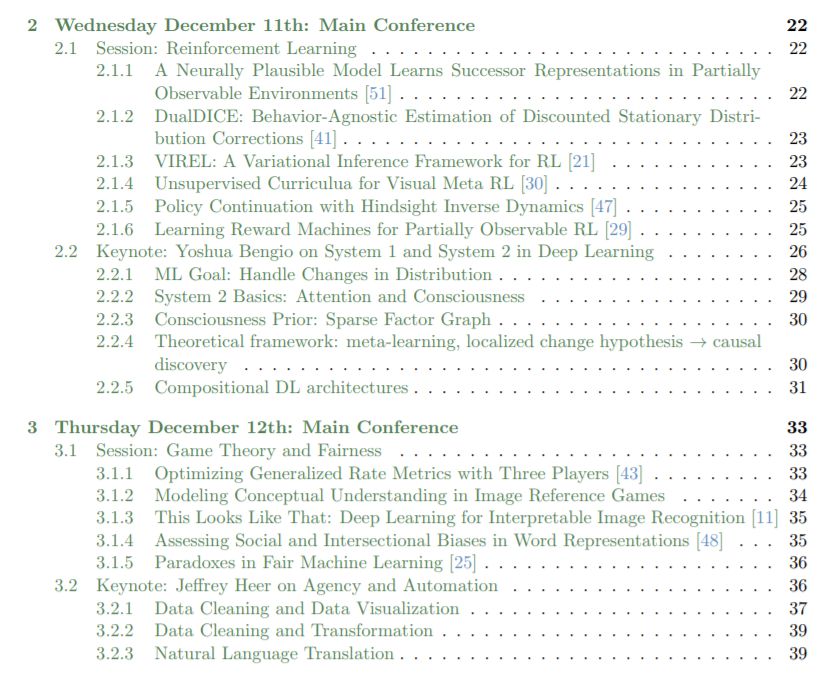

A Neurally Plausible Model Learns Successor Representations in Partially Observable Environments

DualDICE: Behavior-Agnostic Estimation of Discounted Stationary Distribution Corrections

VIREL: A Variational Inference Framework for RL

Unsupervised Curricula for Visual Meta RL

Policy Continuation with Hindsight Inverse Dynamics

Learning Reward Machines for Partially Observable RL

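3. [Yoshua Bengio's NeurIPS 2019 invited talk] From System 1 Deep Learning to System 2 Deep Learning: a 36-slide overview of deep learning progress and future directions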

4. Game Theory and Fairness

Optimizing Generalized Rate Metrics with Three Players

Modeling Conceptual Understanding in Image Reference Games

This Looks Like That: Deep Learning for Interpretable Image Recognition

Assessing Social and Intersectional Biases in Word Representations

Paradoxes in Fair Machine Learning

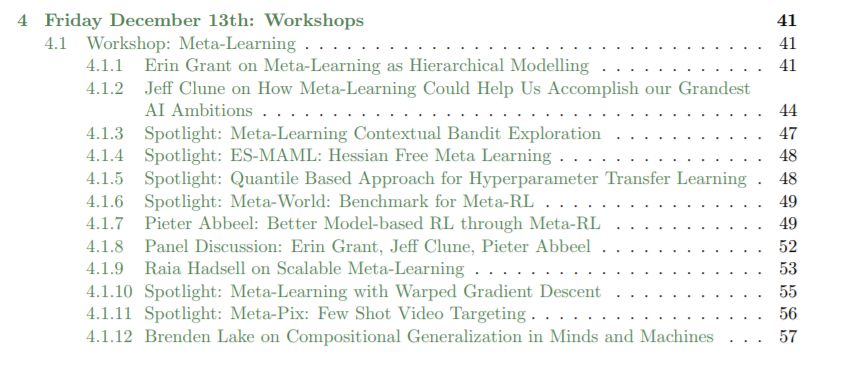

5. Meta-Learning

Workshop: Meta-Learning

Erin Grant on Meta-Learning as Hierarchical Modelling

Uber AI NeurIPS 2019 meta-learning tutorial (92-page slide deck available for download): How Meta-Learning Could Help Us Accomplish our Grandest AI Ambitions

Spotlight: Meta-Learning Contextual Bandit Exploration

Spotlight: ES-MAML: Hessian Free Meta Learning

Spotlight: Quantile Based Approach for Hyperparameter Transfer Learning

Spotlight: Meta-World: Benchmark for Meta-RL

Pieter Abbeel: Better Model-based RL through Meta-RL

Panel Discussion: Erin Grant, Jeff Clune, Pieter Abbeel

Raia Hadsell on Scalable Meta-Learning

Spotlight: Meta-Learning with Warped Gradient Descent

Spotlight: Meta-Pix: Few Shot Video Targeting

Brenden Lake on Compositional Generalization in Minds and Machines


Reference:

https://david-abel.github.io/notes/neurips_2019.pdf