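[Paper Recommendations] Eight Recent Papers on Network Node Representation: Scalable Embeddings, Adversarial Autoencoders, Graph Partitioning, Heterogeneous Information, Explicit Matrix Factorization, Deep Gaussian Embeddings, Graphs, Random Walks

[Overview] The Zhuanzhi content team has compiled eight recent papers on network node representation (network embedding) and introduces them below. Take a look!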

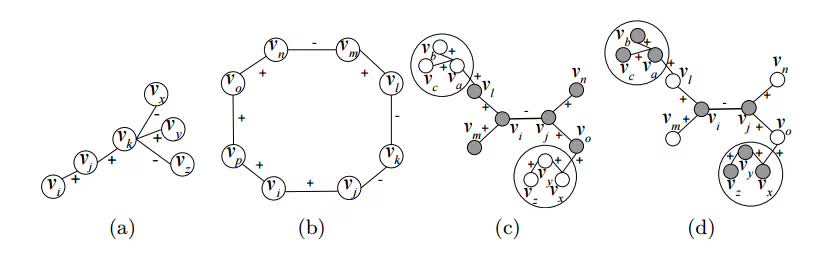

1. SIGNet: Scalable Embeddings for Signed Networks

Authors: Mohammad Raihanul Islam, B. Aditya Prakash, Naren Ramakrishnan

Abstract: Recent successes in word embedding and document embedding have motivated researchers to explore similar representations for networks and to use such representations for tasks such as edge prediction, node label prediction, and community detection. Such network embedding methods are largely focused on finding distributed representations for unsigned networks and are unable to discover embeddings that respect polarities inherent in edges. We propose SIGNet, a fast scalable embedding method suitable for signed networks. Our proposed objective function aims to carefully model the social structure implicit in signed networks by reinforcing the principles of social balance theory. Our method builds upon the traditional word2vec family of embedding approaches and adds a new targeted node sampling strategy to maintain structural balance in higher-order neighborhoods. We demonstrate the superiority of SIGNet over state-of-the-art methods proposed for both signed and unsigned networks on several real world datasets from different domains. In particular, SIGNet offers an approach to generate a richer vocabulary of features of signed networks to support representation and reasoning.

Venue: arXiv, March 23, 2018

URL:

http://www.zhuanzhi.ai/document/1e17f4153baacfc1393d58a30186779c
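The abstract above leans on social balance theory, under which a triangle in a signed network is balanced when the product of its three edge signs is positive. The sketch below only illustrates that definition; it is not the SIGNet objective or sampling strategy, and the input format (a dict keyed by `frozenset` node pairs) and the function names are assumptions made for this example.

```python
from itertools import combinations


def triangle_is_balanced(sign_ab, sign_bc, sign_ac):
    """A triangle is balanced when the product of its edge signs is positive."""
    return sign_ab * sign_bc * sign_ac > 0


def balanced_fraction(signed_edges):
    """Fraction of closed triangles that are balanced.

    signed_edges maps frozenset({u, v}) to +1 or -1 (hypothetical format).
    """
    nodes = sorted({n for edge in signed_edges for n in edge})
    total = balanced = 0
    for a, b, c in combinations(nodes, 3):
        signs = [signed_edges.get(frozenset(p)) for p in ((a, b), (b, c), (a, c))]
        if None in signs:
            continue  # the three nodes do not form a closed triangle
        total += 1
        balanced += triangle_is_balanced(*signs)
    return balanced / total if total else 0.0


# Toy signed network: (a, b, c) is balanced, (b, c, d) is not.
edges = {
    frozenset({"a", "b"}): +1,
    frozenset({"b", "c"}): -1,
    frozenset({"a", "c"}): -1,
    frozenset({"c", "d"}): -1,
    frozenset({"b", "d"}): -1,
}
print(balanced_fraction(edges))
```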

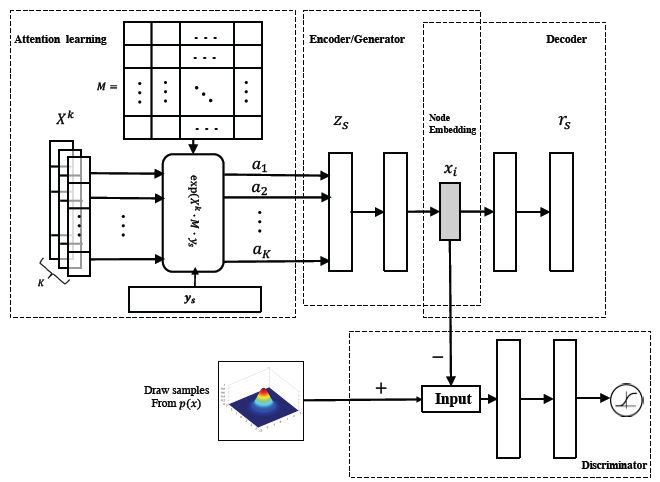

2. AAANE: Attention-based Adversarial Autoencoder for Multi-scale Network Embedding

Authors: Lei Sang, Min Xu, Shengsheng Qian, Xindong Wu

Affiliations: Hefei University of Technology, University of Technology Sydney, Institute of Automation, Chinese Academy of Sciences

Abstract: Network embedding represents nodes in a continuous vector space and preserves structure information from the network. Existing methods usually adopt a "one-size-fits-all" approach when concerning multi-scale structure information, such as first- and second-order proximity of nodes, ignoring the fact that different scales play different roles in the embedding learning. In this paper, we propose an Attention-based Adversarial Autoencoder Network Embedding (AAANE) framework, which promotes the collaboration of different scales and lets them vote for robust representations. The proposed AAANE consists of two components: 1) An attention-based autoencoder effectively captures the highly non-linear network structure and can de-emphasize irrelevant scales during training. 2) An adversarial regularization guides the autoencoder to learn robust representations by matching the posterior distribution of the latent embeddings to a given prior distribution. This is the first attempt to introduce attention mechanisms to multi-scale network embedding. Experimental results on real-world networks show that our learned attention parameters are different for every network and the proposed approach outperforms existing state-of-the-art approaches for network embedding.

Venue: arXiv, March 24, 2018

URL:

http://www.zhuanzhi.ai/document/2eff4f2b546ad1be81c27bc8436fe570

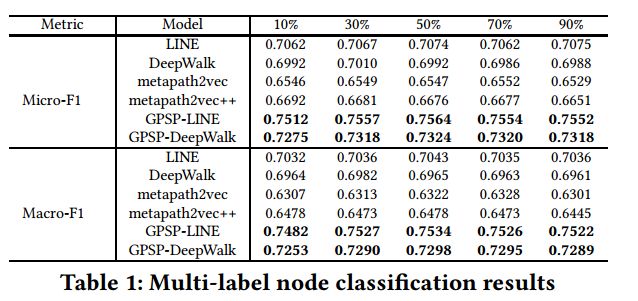

3. GPSP: Graph Partition and Space Projection based Approach for Heterogeneous Network Embedding

Authors: Wenyu Du, Shuai Yu, Min Yang, Qiang Qu, Jia Zhu

Affiliations: South China Normal University

Abstract: In this paper, we propose GPSP, a novel Graph Partition and Space Projection based approach, to learn the representation of a heterogeneous network that consists of multiple types of nodes and links. Concretely, we first partition the heterogeneous network into homogeneous and bipartite subnetworks. Then, the projective relations hidden in bipartite subnetworks are extracted by learning the projective embedding vectors. Finally, we concatenate the projective vectors from bipartite subnetworks with the ones learned from homogeneous subnetworks to form the final representation of the heterogeneous network. Extensive experiments are conducted on a real-life dataset. The results demonstrate that GPSP outperforms the state-of-the-art baselines in two key network mining tasks: node classification and clustering.

Venue: arXiv, March 7, 2018

URL:

http://www.zhuanzhi.ai/document/15a877b3d238077a40a22bfec68951e1
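The first step described in the abstract above, splitting a heterogeneous network into homogeneous and bipartite subnetworks, can be sketched roughly as follows. This is a minimal illustration that assumes edges are grouped purely by the types of their endpoints; the paper may partition differently, and `partition_by_type` and the toy node types are hypothetical names introduced for the example.

```python
from collections import defaultdict


def partition_by_type(node_types, edges):
    """Split edges into homogeneous and bipartite groups by endpoint types.

    Assumption: an edge whose endpoints share a type belongs to a homogeneous
    subnetwork; otherwise it belongs to the bipartite subnetwork for that
    (unordered) pair of types.
    """
    homogeneous = defaultdict(list)
    bipartite = defaultdict(list)
    for u, v in edges:
        type_u, type_v = node_types[u], node_types[v]
        if type_u == type_v:
            homogeneous[type_u].append((u, v))
        else:
            bipartite[tuple(sorted((type_u, type_v)))].append((u, v))
    return dict(homogeneous), dict(bipartite)


# Toy bibliographic network with authors, papers, and a venue.
node_types = {"a1": "author", "a2": "author", "p1": "paper", "p2": "paper", "v1": "venue"}
edges = [("a1", "a2"), ("a1", "p1"), ("a2", "p1"), ("p1", "p2"), ("p1", "v1")]

homogeneous, bipartite = partition_by_type(node_types, edges)
print(homogeneous)
print(bipartite)
```

Each resulting group could then be embedded separately and the vectors concatenated, which is the overall shape of the pipeline the abstract describes.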

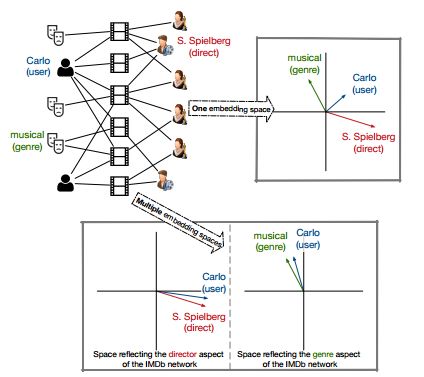

4. AspEm: Embedding Learning by Aspects in Heterogeneous Information Networks

Authors: Yu Shi, Huan Gui, Qi Zhu, Lance Kaplan, Jiawei Han

Affiliations: University of Illinois at Urbana-Champaign

Abstract: Heterogeneous information networks (HINs) are ubiquitous in real-world applications. Due to the heterogeneity in HINs, the typed edges may not fully align with each other. In order to capture the semantic subtlety, we propose the concept of aspects with each aspect being a unit representing one underlying semantic facet. Meanwhile, network embedding has emerged as a powerful method for learning network representation, where the learned embedding can be used as features in various downstream applications. Therefore, we are motivated to propose a novel embedding learning framework, AspEm, to preserve the semantic information in HINs based on multiple aspects. Instead of preserving information of the network in one semantic space, AspEm encapsulates information regarding each aspect individually. In order to select aspects for embedding purposes, we further devise a solution for AspEm based on dataset-wide statistics. To corroborate the efficacy of AspEm, we conducted experiments on two real-world datasets with two types of applications: classification and link prediction. Experiment results demonstrate that AspEm can outperform baseline network embedding learning methods by considering multiple aspects, where the aspects can be selected from the given HIN in an unsupervised manner.

Venue: arXiv, March 6, 2018

URL:

http://www.zhuanzhi.ai/document/0b510266e78c7d329ca206b13f58f235

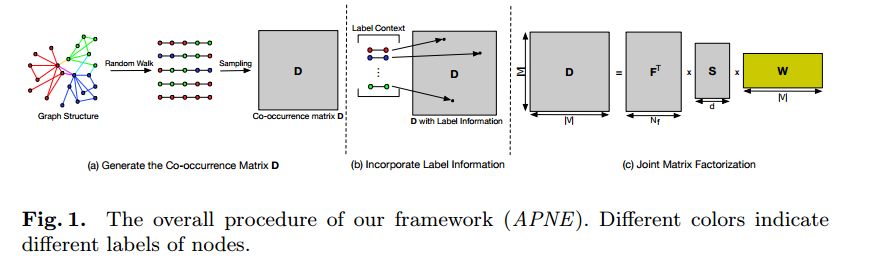

5. Enhancing Network Embedding with Auxiliary Information: An Explicit Matrix Factorization Perspective

Authors: Junliang Guo, Linli Xu, Xunpeng Huang, Enhong Chen

Affiliations: University of Science and Technology of China

Abstract: Recent advances in the field of network embedding have shown that low-dimensional network representation plays a critical role in network analysis. However, most of the existing principles of network embedding do not incorporate auxiliary information such as content and labels of nodes flexibly. In this paper, we take a matrix factorization perspective of network embedding, and incorporate structure, content and label information of the network simultaneously. For structure, we validate that the matrix we construct preserves high-order proximities of the network. Label information can be further integrated into the matrix via the process of random walk sampling to enhance the quality of embedding in an unsupervised manner, i.e., without leveraging downstream classifiers. In addition, we generalize the Skip-Gram Negative Sampling model to integrate the content of the network in a matrix factorization framework. As a consequence, network embedding can be learned in a unified framework integrating network structure and node content as well as label information simultaneously. We demonstrate the efficacy of the proposed model with the tasks of semi-supervised node classification and link prediction on a variety of real-world benchmark network datasets.

Venue: arXiv, March 5, 2018

URL:

http://www.zhuanzhi.ai/document/efb9a8f17eeb72e96cdce3587a438499

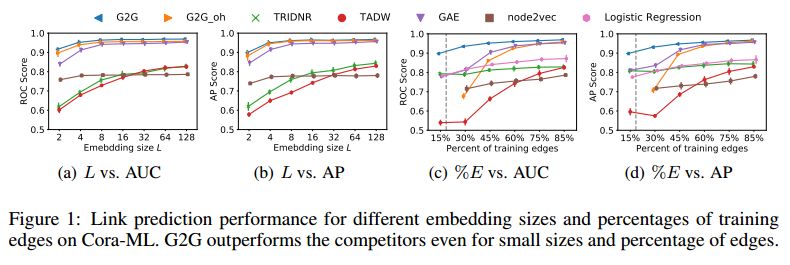

6. Deep Gaussian Embedding of Graphs: Unsupervised Inductive Learning via Ranking

Authors: Aleksandar Bojchevski, Stephan Günnemann

Affiliations: Technical University of Munich

Abstract: Methods that learn representations of nodes in a graph play a critical role in network analysis since they enable many downstream learning tasks. We propose Graph2Gauss - an approach that can efficiently learn versatile node embeddings on large scale (attributed) graphs that show strong performance on tasks such as link prediction and node classification. Unlike most approaches that represent nodes as point vectors in a low-dimensional continuous space, we embed each node as a Gaussian distribution, allowing us to capture uncertainty about the representation. Furthermore, we propose an unsupervised method that handles inductive learning scenarios and is applicable to different types of graphs: plain/attributed, directed/undirected. By leveraging both the network structure and the associated node attributes, we are able to generalize to unseen nodes without additional training. To learn the embeddings we adopt a personalized ranking formulation w.r.t. the node distances that exploits the natural ordering of the nodes imposed by the network structure. Experiments on real world networks demonstrate the high performance of our approach, outperforming state-of-the-art network embedding methods on several different tasks. Additionally, we demonstrate the benefits of modeling uncertainty - by analyzing it we can estimate neighborhood diversity and detect the intrinsic latent dimensionality of a graph.

Venue: arXiv, February 27, 2018

URL:

http://www.zhuanzhi.ai/document/86b45a32e77c7a6cdd84d0057bdcd9eb
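To make the idea of Gaussian node embeddings plus a ranking constraint more concrete, here is a small sketch. It assumes diagonal covariances and uses the KL divergence as the dissimilarity between two node distributions; the abstract only speaks of "node distances", so that choice, the direction of the divergence, and all names below are assumptions for illustration rather than the paper's exact formulation.

```python
import math


def kl_diag_gaussians(mu_p, var_p, mu_q, var_q):
    """KL(P || Q) for two Gaussians with diagonal covariance matrices."""
    total = 0.0
    for mp, vp, mq, vq in zip(mu_p, var_p, mu_q, var_q):
        total += vp / vq + (mq - mp) ** 2 / vq - 1.0 + math.log(vq / vp)
    return 0.5 * total


def respects_ranking(anchor, closer, farther):
    """Check that `closer` is less dissimilar to `anchor` than `farther` is.

    Each node is a (mean, variance) pair of equal-length lists. A ranking loss
    would penalize triplets for which this check fails.
    """
    d_close = kl_diag_gaussians(closer[0], closer[1], anchor[0], anchor[1])
    d_far = kl_diag_gaussians(farther[0], farther[1], anchor[0], anchor[1])
    return d_close < d_far


# Toy embeddings: a 1-hop neighbor should rank ahead of a 2-hop neighbor.
anchor = ([0.0, 0.0], [1.0, 1.0])
one_hop = ([0.1, 0.0], [1.0, 1.0])
two_hop = ([3.0, 3.0], [2.0, 2.0])
print(respects_ranking(anchor, one_hop, two_hop))
```

The per-dimension variances are what carry the uncertainty mentioned in the abstract: a node with large variances is one whose position in the embedding space is less certain.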

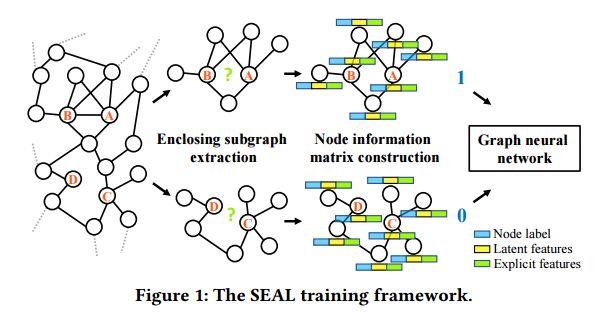

7. Link Prediction Based on Graph Neural Networks

Authors: Muhan Zhang, Yixin Chen

Affiliations: Washington University in St. Louis

Abstract: Traditional methods for link prediction can be categorized into three main types: graph structure feature-based, latent feature-based, and explicit feature-based. Graph structure feature methods leverage some handcrafted node proximity scores, e.g., common neighbors, to estimate the likelihood of links. Latent feature methods rely on factorizing networks' matrix representations to learn an embedding for each node. Explicit feature methods train a machine learning model on two nodes' explicit attributes. Each of the three types of methods has its unique merits. In this paper, we propose SEAL (learning from Subgraphs, Embeddings, and Attributes for Link prediction), a new framework for link prediction which combines the power of all the three types into a single graph neural network (GNN). GNN is a new type of neural network which directly accepts graphs as input and outputs their labels. In SEAL, the input to the GNN is a local subgraph around each target link. We prove theoretically that our local subgraphs also preserve a great deal of high-order graph structure features related to link existence. Another key feature is that our GNN can naturally incorporate latent features and explicit features. It is achieved by concatenating node embeddings (latent features) and node attributes (explicit features) in the node information matrix for each subgraph, thus combining the three types of features to enhance GNN learning. Through extensive experiments, SEAL shows unprecedentedly strong performance against a wide range of baseline methods, including various link prediction heuristics and network embedding methods.

Venue: arXiv, February 27, 2018

URL:

http://www.zhuanzhi.ai/document/355a8e110b7f29ac60cc2a87004887b0
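The "local subgraph around each target link" mentioned in the abstract above can be pictured with a short sketch. The code below collects every node within `k` hops of either endpoint and keeps the edges among them. Hiding the target link itself, the adjacency-dict input, and the function names are assumptions made for this illustration; the GNN that consumes the subgraph is not shown.

```python
from collections import deque


def k_hop_neighbors(adj, source, k):
    """Return all nodes within k hops of source (including source) via BFS."""
    seen = {source}
    frontier = deque([(source, 0)])
    while frontier:
        node, dist = frontier.popleft()
        if dist == k:
            continue  # do not expand beyond k hops
        for neighbor in adj.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                frontier.append((neighbor, dist + 1))
    return seen


def enclosing_subgraph(adj, u, v, k=1):
    """Subgraph induced by the k-hop neighborhoods of u and v."""
    nodes = k_hop_neighbors(adj, u, k) | k_hop_neighbors(adj, v, k)
    sub = {}
    for n in nodes:
        kept = [nb for nb in adj.get(n, ()) if nb in nodes]
        # Assumption: drop the target link so its presence cannot leak the label.
        if n == u:
            kept = [nb for nb in kept if nb != v]
        elif n == v:
            kept = [nb for nb in kept if nb != u]
        sub[n] = sorted(kept)
    return sub


# Toy path graph 0-1-2-3-4-5 with target link (2, 3).
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}
sub = enclosing_subgraph(adj, 2, 3, k=1)
print(sorted(sub.items()))
```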

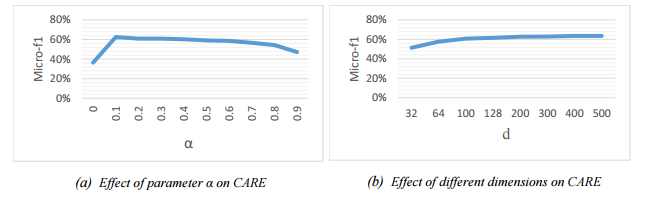

8. Community Aware Random Walk for Network Embedding

Authors: Mohammad Mehdi Keikha, Maseud Rahgozar, Masoud Asadpour

Affiliations: Carnegie Mellon University

Abstract: Social network analysis provides meaningful information about the behavior of network members that can be used for diverse applications such as classification and link prediction. However, network analysis is computationally expensive because of feature learning for different applications. In recent years, much research has focused on feature learning methods in social networks. Network embedding represents the network in a lower-dimensional representation space with the same properties, which presents a compressed representation of the network. In this paper, we introduce a novel algorithm named "CARE" for network embedding that can be used for different types of networks including weighted, directed and complex. Current methods try to preserve local neighborhood information of nodes, whereas the proposed method utilizes local neighborhood and community information of network nodes to cover both local and global structure of social networks. CARE builds customized paths, which consist of local and global structure of network nodes, as a basis for network embedding and uses the Skip-gram model to learn the representation vectors of nodes. Subsequently, stochastic gradient descent is applied to optimize our objective function and learn the final representation of nodes. Our method can be scalable when new nodes are appended to the network without information loss. Parallelized generation of customized random walks is also used for speeding up CARE. We evaluate the performance of CARE on multi-label classification and link prediction tasks. Experimental results on various networks indicate that the proposed method outperforms others in both Micro-F1 and Macro-F1 measures for different sizes of training data.

Venue: arXiv, February 19, 2018

URL:

http://www.zhuanzhi.ai/document/5ab58901591c5adfd4943acbd1a8d4fa
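The abstract above does not spell out how the customized paths are built, so the following is only one plausible sketch of a walk that mixes local and community information. It assumes that at each step the walker moves to a direct neighbor with probability `p_local` and otherwise jumps to another member of the current node's community. The parameter `p_local`, the precomputed community assignment, and all names here are hypothetical, not taken from the paper.

```python
import random


def community_aware_walk(adj, community_of, members, start, length, p_local=0.7, rng=None):
    """Generate one walk that mixes neighbor steps with same-community jumps.

    adj: node -> list of neighbors
    community_of: node -> community id (assumed to be computed beforehand)
    members: community id -> list of nodes in that community
    """
    rng = rng or random.Random()
    walk = [start]
    while len(walk) < length:
        current = walk[-1]
        neighbors = adj.get(current, [])
        peers = [n for n in members[community_of[current]] if n != current]
        if neighbors and (not peers or rng.random() < p_local):
            walk.append(rng.choice(neighbors))  # local step
        elif peers:
            walk.append(rng.choice(peers))  # community-level jump
        else:
            break  # isolated node in a singleton community
    return walk


# Toy graph: two communities joined by the edge 2-3.
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}
community_of = {0: "A", 1: "A", 2: "A", 3: "B", 4: "B", 5: "B"}
members = {"A": [0, 1, 2], "B": [3, 4, 5]}

walk = community_aware_walk(adj, community_of, members, start=0, length=6, rng=random.Random(0))
print(walk)
```

Walks generated this way would then serve as the "sentences" passed to a Skip-gram trainer, in the same way the abstract describes for CARE's customized paths.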
