UC Berkeley CS189 lecture-note textbook: "A Comprehensive Guide to Machine Learning", 185-page PDF

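[Overview] UC Berkeley CS189, "Introduction to Machine Learning", is a machine learning course for beginners. In this guide, we have created a comprehensive companion to the course to share our knowledge with students and the public, and we hope it will interest students at other universities in Berkeley's machine learning course.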

Course homepage: https://www.eecs189.

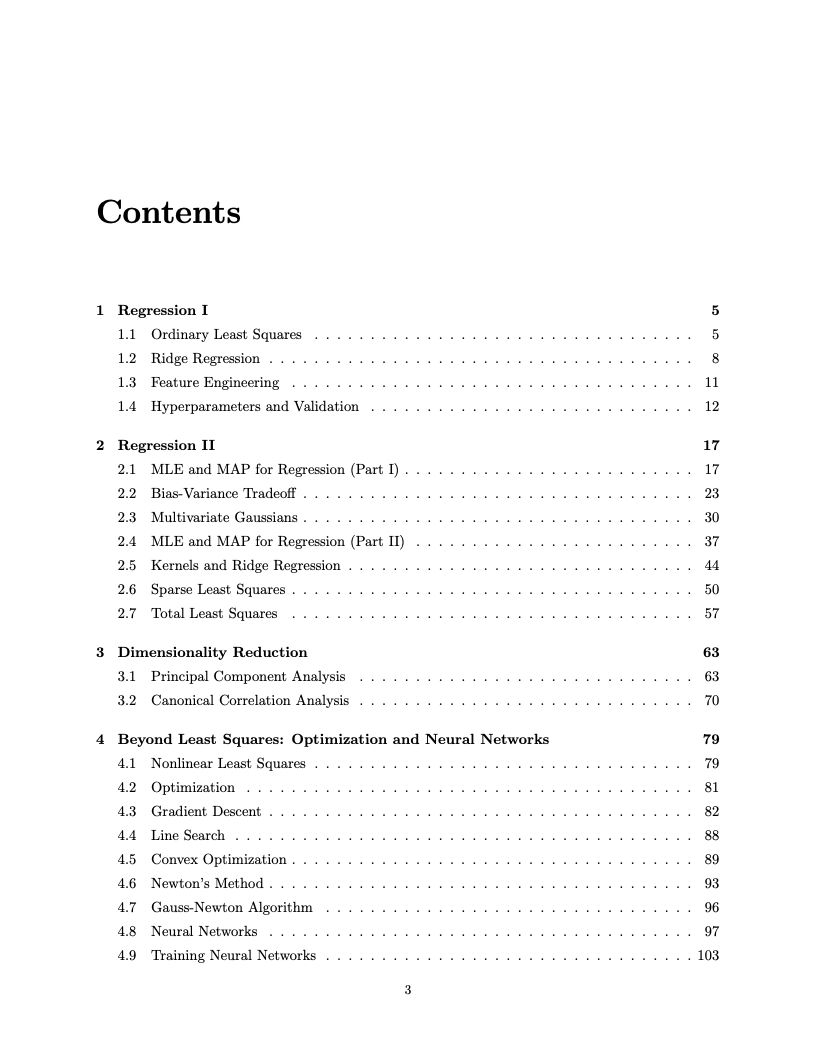

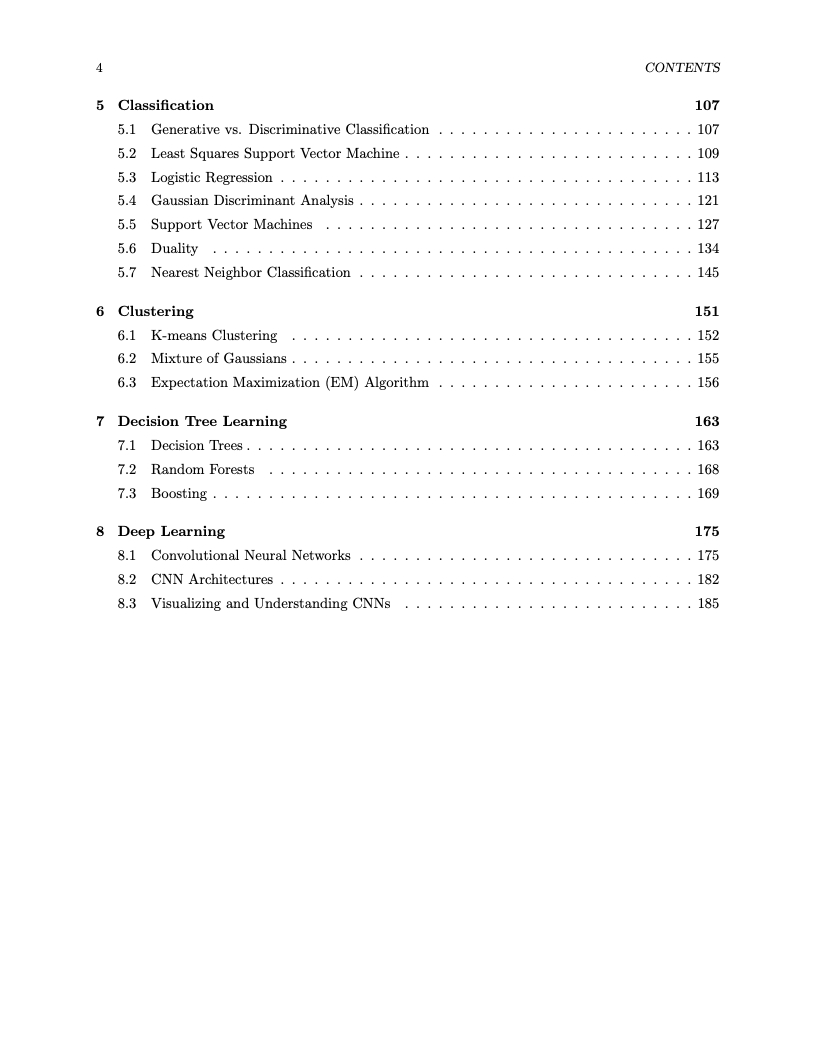

Lecture notes:

Note 1: Introduction

Note 2: Linear Regression

Note 3: Features, Hyperparameters, Validation

Note 4: MLE and MAP for Regression (Part I)

Note 5: Bias-Variance Tradeoff

Note 6: Multivariate Gaussians

Note 7: MLE and MAP for Regression (Part II)

Note 8: Kernels, Kernel Ridge Regression

Note 9: Total Least Squares

Note 10: Principal Component Analysis (PCA)

Note 11: Canonical Correlation Analysis (CCA)

Note 12: Nonlinear Least Squares, Optimization

Note 13: Gradient Descent Extensions

Note 14: Neural Networks

Note 15: Training Neural Networks

Note 16: Discriminative vs. Generative Classification, LS-SVM

Note 17: Logistic Regression

Note 18: Gaussian Discriminant Analysis

Note 19: Expectation-Maximization (EM) Algorithm, k-means Clustering

Note 20: Support Vector Machines (SVM)

Note 21: Generalization and Stability

Note 22: Duality

Note 23: Nearest Neighbor Classification

Note 24: Sparsity

Note 25: Decision Trees and Random Forests

Note 26: Boosting

Note 27: Convolutional Neural Networks (CNN)

Discussion sections:

Discussion 0: Vector Calculus, Linear Algebra (solution)

Discussion 1: Optimization, Least Squares, and Convexity (solution)

Discussion 2: Ridge Regression and Multivariate Gaussians (solution)

Discussion 3: Multivariate Gaussians and Kernels (solution)

Discussion 4: Principal Component Analysis (solution)

Discussion 5: Least Squares and Kernels (solution)

Discussion 6: Optimization and Reviewing Linear Methods (solution)

Discussion 7: Backpropagation and Computation Graphs (solution)

Discussion 8: QDA and Logistic Regression (solution)

Discussion 9: EM (solution)

Discussion 10: SVMs and KNN (solution)

Discussion 11: Decision Trees (solution)

Discussion 12: LASSO, Sparsity, Feature Selection, Auto-ML (solution)

Textbook:

For a convenient download, follow the Zhuanzhi (专知) WeChat official account and reply "UCBML" to receive the download link for the book.

For the lecture notes and discussion materials, please visit the course homepage.