Five Recent Papers on Generative Adversarial Networks: FusedGAN, DeblurGAN, AdvGAN, CipherGAN, MMD GANs

[Overview] The Zhuanzhi content team has collected several recent papers on generative adversarial networks and introduces them below.

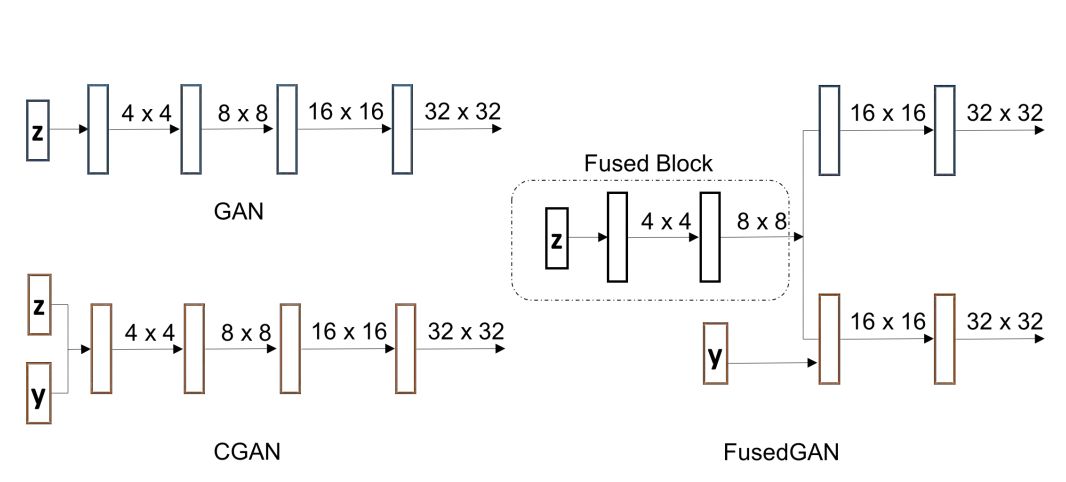

1. Semi-supervised FusedGAN for Conditional Image Generation

Authors: Navaneeth Bodla, Gang Hua, Rama Chellappa

Abstract: We present FusedGAN, a deep network for conditional image synthesis with controllable sampling of diverse images. Fidelity, diversity and controllable sampling are the main quality measures of a good image generation model. Most existing models are insufficient in all three aspects. The FusedGAN can perform controllable sampling of diverse images with very high fidelity. We argue that controllability can be achieved by disentangling the generation process into various stages. In contrast to stacked GANs, where multiple stages of GANs are trained separately with full supervision of labeled intermediate images, the FusedGAN has a single-stage pipeline with a built-in stacking of GANs. Unlike existing methods, which require full supervision with paired conditions and images, the FusedGAN can effectively leverage more abundant images without corresponding conditions in training, to produce more diverse samples with high fidelity. We achieve this by fusing two generators: one for unconditional image generation, and the other for conditional image generation, where the two partly share a common latent space, thereby disentangling the generation. We demonstrate the efficacy of the FusedGAN in fine-grained image generation tasks such as text-to-image and attribute-to-face generation.

Source: arXiv, January 17, 2018

URL:

http://www.zhuanzhi.ai/document/c9fca526062fb81b8a9480de826aba04

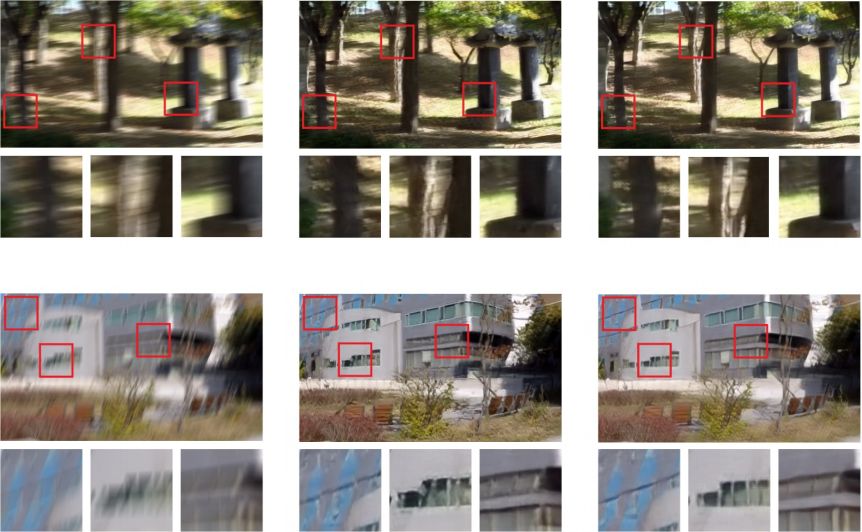

2. DeblurGAN: Blind Motion Deblurring Using Conditional Adversarial Networks

Authors: Orest Kupyn, Volodymyr Budzan, Mykola Mykhailych, Dmytro Mishkin, Jiri Matas

Abstract: We present an end-to-end learning approach for motion deblurring, which is based on conditional GAN and content loss. It improves the state of the art in terms of peak signal-to-noise ratio, structural similarity measure and visual appearance. The quality of the deblurring model is also evaluated in a novel way on a real-world problem: object detection on (de-)blurred images. The method is 5 times faster than the closest competitor. We also present a novel method of generating synthetic motion-blurred images from sharp ones, which allows realistic dataset augmentation. Model, training code and dataset are available at https://github.com/KupynOrest/DeblurGAN

Source: arXiv, January 16, 2018

URL:

http://www.zhuanzhi.ai/document/80f3a483eb19f882dd5a4f309db6407a
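The DeblurGAN abstract reports results in terms of peak signal-to-noise ratio (PSNR). As a rough illustration of what that metric measures, here is a minimal pure-Python sketch using the standard definition, PSNR = 10 · log10(MAX² / MSE). It is not taken from the DeblurGAN code base; the function name `psnr`, the nested-list image representation, and the default `max_value=255.0` are assumptions made for this example.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two grayscale images.

    Images are nested lists of pixel intensities (rows of numbers); this
    representation is an assumption of the sketch, not the paper's format.
    """
    if len(reference) != len(restored) or any(
        len(row_r) != len(row_s) for row_r, row_s in zip(reference, restored)
    ):
        raise ValueError("images must have the same shape")

    # Mean squared error over all pixels.
    squared_errors = [
        (a - b) ** 2
        for row_r, row_s in zip(reference, restored)
        for a, b in zip(row_r, row_s)
    ]
    if not squared_errors:
        raise ValueError("images must contain at least one pixel")
    mse = sum(squared_errors) / len(squared_errors)

    # Identical images have zero error, so the ratio is unbounded.
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)
```

A higher value means the restored image is closer to the sharp reference, so a deblurring method that raises PSNR has reduced the pixel-wise error. PSNR does not capture perceptual quality on its own, which is presumably why the abstract also cites structural similarity and visual appearance.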

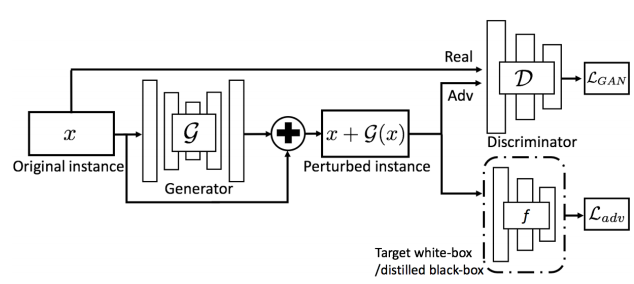

3. Generating Adversarial Examples with Adversarial Networks

Authors: Chaowei Xiao, Bo Li, Jun-Yan Zhu, Warren He, Mingyan Liu, Dawn Song

Abstract: Deep neural networks (DNNs) have been found to be vulnerable to adversarial examples resulting from adding small-magnitude perturbations to inputs. Such adversarial examples can mislead DNNs to produce adversary-selected results. Different attack strategies have been proposed to generate adversarial examples, but how to produce them with high perceptual quality and more efficiently requires further research. In this paper, we propose AdvGAN to generate adversarial examples with generative adversarial networks (GANs), which can learn and approximate the distribution of original instances. For AdvGAN, once the generator is trained, it can generate adversarial perturbations efficiently for any instance, so as to potentially accelerate adversarial training as defenses. We apply AdvGAN in both semi-whitebox and black-box attack settings. In semi-whitebox attacks, there is no need to access the original target model after the generator is trained, in contrast to traditional white-box attacks. In black-box attacks, we dynamically train a distilled model for the black-box model and optimize the generator accordingly. Adversarial examples generated by AdvGAN on different target models have a high attack success rate under state-of-the-art defenses compared to other attacks. Our attack placed first with 92.76% accuracy on a public MNIST black-box attack challenge.

Source: arXiv, January 16, 2018

URL:

http://www.zhuanzhi.ai/document/41c7a0468e5580f292b38a04e4faa8c9

4. Unsupervised Cipher Cracking Using Discrete GANs

Authors: Aidan N. Gomez, Sicong Huang, Ivan Zhang, Bryan M. Li, Muhammad Osama, Lukasz Kaiser

Abstract: This work details CipherGAN, an architecture inspired by CycleGAN used for inferring the underlying cipher mapping given banks of unpaired ciphertext and plaintext. We demonstrate that CipherGAN is capable of cracking language data enciphered using shift and Vigenère ciphers to a high degree of fidelity and for vocabularies much larger than previously achieved. We present how CycleGAN can be made compatible with discrete data and train in a stable way. We then prove that the technique used in CipherGAN avoids the common problem of uninformative discrimination associated with GANs applied to discrete data.

Source: arXiv, January 16, 2018

URL:

http://www.zhuanzhi.ai/document/3fc349ad9957de9f15abf37f59cd0b72
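For readers unfamiliar with the two ciphers named in the CipherGAN abstract, the following sketch shows how shift and Vigenère encipherment work on a lowercase alphabet. It illustrates only the mappings that the model is asked to infer, not CipherGAN itself; the function names, the 26-letter default alphabet, and the choice to pass unknown characters through unchanged are assumptions of this example.

```python
import string

ALPHABET = string.ascii_lowercase  # assumed vocabulary for this sketch


def shift_encrypt(plaintext, shift, alphabet=ALPHABET):
    """Shift cipher: move every symbol `shift` positions along the alphabet."""
    n = len(alphabet)
    index = {ch: i for i, ch in enumerate(alphabet)}
    return "".join(
        alphabet[(index[ch] + shift) % n] if ch in index else ch
        for ch in plaintext
    )


def vigenere_encrypt(plaintext, key, alphabet=ALPHABET):
    """Vigenère cipher: a shift cipher whose shift cycles through the key."""
    if not key or any(ch not in alphabet for ch in key):
        raise ValueError("key must be a non-empty string over the alphabet")
    n = len(alphabet)
    index = {ch: i for i, ch in enumerate(alphabet)}
    out = []
    key_pos = 0  # advances only when a symbol is actually enciphered
    for ch in plaintext:
        if ch in index:
            shift = index[key[key_pos % len(key)]]
            out.append(alphabet[(index[ch] + shift) % n])
            key_pos += 1
        else:
            out.append(ch)  # symbols outside the alphabet pass through
    return "".join(out)


if __name__ == "__main__":
    print(shift_encrypt("abc xyz", 3))
    print(vigenere_encrypt("attackatdawn", "lemon"))
```

Both ciphers are fixed substitutions over the vocabulary: a shift cipher has one offset, while a Vigenère cipher has one offset per key position. The unsupervised setting in the abstract means the model sees only unpaired banks of plaintext and ciphertext, never the `shift` or `key` arguments used here.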

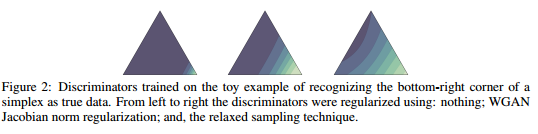

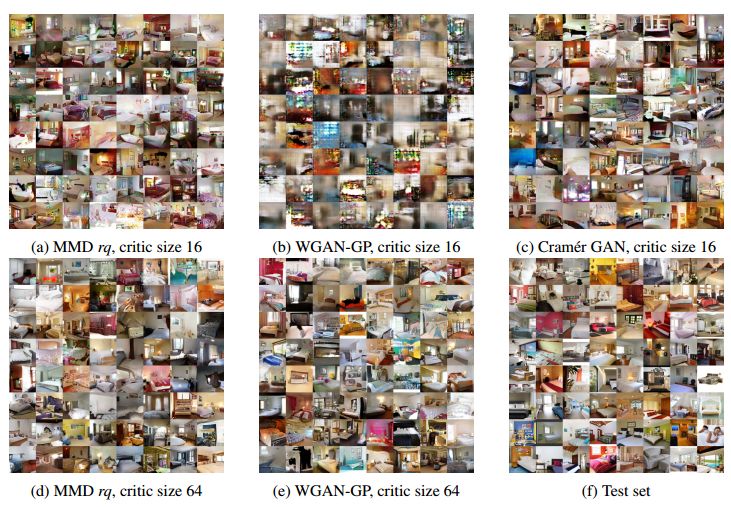

5. Demystifying MMD GANs

Authors: Mikołaj Bińkowski, Dougal J. Sutherland, Michael Arbel, Arthur Gretton

Abstract: We investigate the training and performance of generative adversarial networks using the Maximum Mean Discrepancy (MMD) as critic, termed MMD GANs. As our main theoretical contribution, we clarify the situation with bias in GAN loss functions raised by recent work: we show that gradient estimators used in the optimization process for both MMD GANs and Wasserstein GANs are unbiased, but learning a discriminator based on samples leads to biased gradients for the generator parameters. We also discuss the issue of kernel choice for the MMD critic, and characterize the kernel corresponding to the energy distance used for the Cramér GAN critic. Being an integral probability metric, the MMD benefits from training strategies recently developed for Wasserstein GANs. In experiments, the MMD GAN is able to employ a smaller critic network than the Wasserstein GAN, resulting in a simpler and faster-training algorithm with matching performance. We also propose an improved measure of GAN convergence, the Kernel Inception Distance, and show how to use it to dynamically adapt learning rates during GAN training.

Source: arXiv, January 13, 2018

URL:

http://www.zhuanzhi.ai/document/7b3d27c7e5f08949ffbc0bbbc7d375c4
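The MMD GAN abstract centers on the Maximum Mean Discrepancy between two sets of samples. The sketch below computes the standard unbiased (U-statistic) estimate of squared MMD in pure Python. It is an illustration under stated assumptions, not the authors' implementation: samples are plain lists of floats rather than network features, and the cubic polynomial kernel used as the default is an illustrative choice for this example, with the kernel left as a parameter.

```python
def polynomial_kernel(x, y, degree=3, coef0=1.0):
    """k(x, y) = (<x, y> / d + coef0) ** degree, with d the vector length."""
    d = len(x)
    dot = sum(a * b for a, b in zip(x, y))
    return (dot / d + coef0) ** degree


def mmd2_unbiased(xs, ys, kernel=polynomial_kernel):
    """Unbiased estimate of squared MMD between sample sets xs and ys.

    Within-set sums skip the i == j terms, which is what removes the bias
    of the plain plug-in estimate.
    """
    m, n = len(xs), len(ys)
    if m < 2 or n < 2:
        raise ValueError("each sample set needs at least two points")

    k_xx = sum(kernel(xs[i], xs[j]) for i in range(m) for j in range(m) if i != j)
    k_yy = sum(kernel(ys[i], ys[j]) for i in range(n) for j in range(n) if i != j)
    k_xy = sum(kernel(x, y) for x in xs for y in ys)

    return k_xx / (m * (m - 1)) + k_yy / (n * (n - 1)) - 2.0 * k_xy / (m * n)


if __name__ == "__main__":
    real = [[0.0], [0.0]]
    fake = [[1.0], [1.0]]
    print(mmd2_unbiased(real, fake))
```

Because the estimate is unbiased rather than constrained to be non-negative, it can come out slightly below zero when the two sample sets are drawn from the same distribution. In a GAN setting the inputs would be learned feature vectors of real and generated images instead of the toy vectors used here.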
