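【Paper Recommendations】Eight Recent Papers on Machine Translation: Self-Attentive Residual Decoder, Conditional Sequence GANs, Retrieved Translation Pieces, Domain Adaptation, and Fine-Grained Attention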

【Introduction】Two days ago we presented twenty papers on machine translation (MT). Today the Zhuanzhi content team introduces eight more recent MT papers. Enjoy!

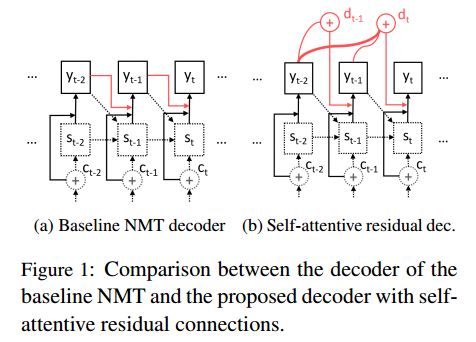

21. Self-Attentive Residual Decoder for Neural Machine Translation

Authors: Lesly Miculicich Werlen, Nikolaos Pappas, Dhananjay Ram, Andrei Popescu-Belis

Institution: George Mason University

Abstract: Neural sequence-to-sequence networks with attention have achieved remarkable performance for machine translation. One of the reasons for their effectiveness is their ability to capture relevant source-side contextual information at each time-step prediction through an attention mechanism. However, the target-side context is solely based on the sequence model which, in practice, is prone to a recency bias and lacks the ability to effectively capture non-sequential dependencies among words. To address this limitation, we propose a target-side-attentive residual recurrent network for decoding, where attention over previous words contributes directly to the prediction of the next word. The residual learning facilitates the flow of information from the distant past and is able to emphasize any of the previously translated words, hence it gains access to a wider context. The proposed model outperforms a neural MT baseline as well as a memory and self-attention network on three language pairs. The analysis of the attention learned by the decoder confirms that it emphasizes a wider context, and that it captures syntactic-like structures.

Source: arXiv, March 23, 2018

URL:

http://www.zhuanzhi.ai/document/dbc697ffcb43eb2a4b64fc55d86a543f
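To make the decoder idea concrete, here is a minimal sketch. At each step the decoder attends over the embeddings of the previously generated target words, and the resulting summary is added residually to the recurrent state before the next word is predicted. The dot-product scoring, the plain element-wise addition, and all function names are our own assumptions for illustration, not the authors' exact formulation.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    # Subtract the maximum score for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def target_side_attention(state: Vector, prev_embeddings: List[Vector]) -> Vector:
    """Weighted summary of the embeddings of all previously generated target words."""
    weights = softmax([dot(state, e) for e in prev_embeddings])
    return [sum(w * e[d] for w, e in zip(weights, prev_embeddings))
            for d in range(len(state))]


def residual_decoder_output(state: Vector, prev_embeddings: List[Vector]) -> Vector:
    """Hypothetical residual combination: recurrent state + target-side summary.
    The result would feed the output layer that predicts the next word."""
    if not prev_embeddings:  # first step: nothing has been translated yet
        return list(state)
    summary = target_side_attention(state, prev_embeddings)
    return [s + c for s, c in zip(state, summary)]


# One decoding step with a 2-dimensional toy state and two previous words.
print(residual_decoder_output([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))
```

Because the attention can place weight on any earlier word rather than only the most recent state, this residual path is one way to counter the recency bias the abstract describes.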

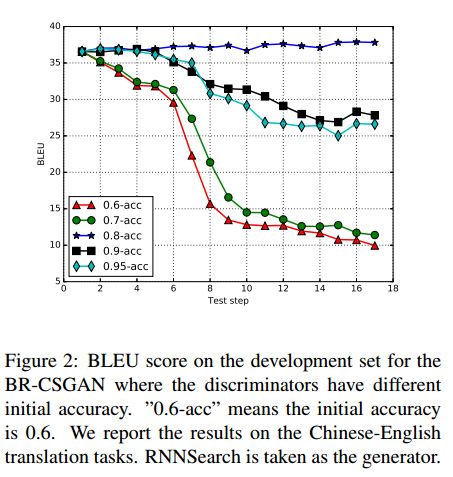

22. Improving Neural Machine Translation with Conditional Sequence Generative Adversarial Nets

Authors: Zhen Yang, Wei Chen, Feng Wang, Bo Xu

Institution: University of Chinese Academy of Sciences

Abstract: This paper proposes an approach for applying GANs to NMT. We build a conditional sequence generative adversarial net which consists of two adversarial submodels, a generator and a discriminator. The generator aims to generate sentences that are hard to distinguish from human-translated sentences (i.e., the golden target sentences), and the discriminator tries to distinguish the machine-generated sentences from human-translated ones. The two submodels play a mini-max game and achieve a win-win situation when they reach a Nash equilibrium. Additionally, the static sentence-level BLEU is utilized as the reinforced objective for the generator, which biases the generation towards high BLEU points. During training, both the dynamic discriminator and the static BLEU objective are employed to evaluate the generated sentences and feed the evaluations back to guide the learning of the generator. Experimental results show that the proposed model consistently outperforms the traditional RNNSearch and the newly emerged state-of-the-art Transformer on English-German and Chinese-English translation tasks.

Source: arXiv, April 8, 2018

URL:

http://www.zhuanzhi.ai/document/c19bb78ee31a946aed7a98ca760bc7ee
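The abstract combines two signals to train the generator: the discriminator's judgment and a static sentence-level BLEU score. The sketch below shows one plausible way to wire them together. We assume a linear interpolation weighted by a hypothetical `lam`, BLEU+1-style smoothing (add one to matches and totals for n > 1), and a REINFORCE-style loss; the abstract does not specify any of these details.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(hyp: List[str], ref: List[str], max_n: int = 4) -> float:
    """Sentence-level BLEU with BLEU+1-style smoothing for n > 1 (an assumption)."""
    if not hyp:
        return 0.0
    log_prec = 0.0
    for n in range(1, max_n + 1):
        hyp_counts, ref_counts = ngrams(hyp, n), ngrams(ref, n)
        matches = sum(min(c, ref_counts[g]) for g, c in hyp_counts.items())
        total = max(len(hyp) - n + 1, 0)
        if n == 1:
            if matches == 0:  # no unigram overlap at all
                return 0.0
            log_prec += math.log(matches / total)
        else:
            log_prec += math.log((matches + 1) / (total + 1))
    # Brevity penalty for hypotheses that are not longer than the reference.
    bp = 1.0 if len(hyp) > len(ref) else math.exp(1 - len(ref) / len(hyp))
    return bp * math.exp(log_prec / max_n)


def mixed_reward(d_prob: float, bleu: float, lam: float = 0.7) -> float:
    """Interpolate the discriminator's 'human-like' probability with BLEU.
    lam = 0.7 is an arbitrary illustrative value."""
    return lam * d_prob + (1 - lam) * bleu


def policy_gradient_loss(token_log_probs: List[float], reward: float) -> float:
    """REINFORCE-style loss: minimizing it raises the log-likelihood of a
    sampled sentence in proportion to its reward."""
    return -reward * sum(token_log_probs)


hyp = "the cat sits on the mat".split()
ref = "the cat sat on the mat".split()
r = mixed_reward(d_prob=0.4, bleu=sentence_bleu(hyp, ref))
print(r, policy_gradient_loss([-0.2, -0.9, -1.1], r))
```

The discriminator term changes as the discriminator is trained, while the BLEU term is fixed, which matches the abstract's "dynamic" versus "static" distinction.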

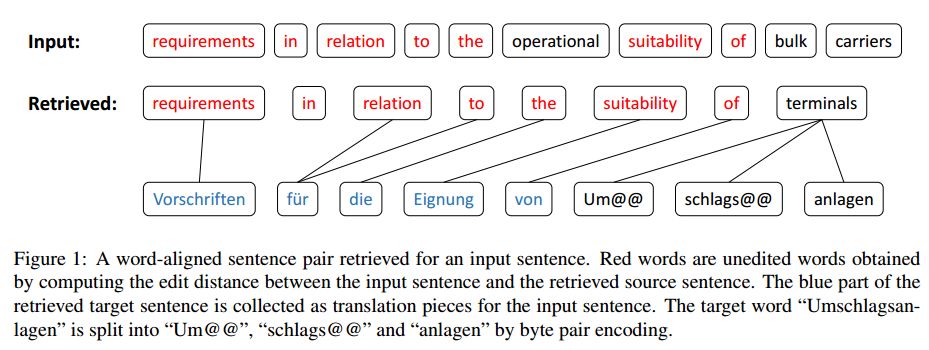

23. Guiding Neural Machine Translation with Retrieved Translation Pieces

Authors: Jingyi Zhang, Masao Utiyama, Eiichiro Sumita, Graham Neubig, Satoshi Nakamura

Institution: Carnegie Mellon University

Abstract: One of the difficulties of neural machine translation (NMT) is the recall and appropriate translation of low-frequency words or phrases. In this paper, we propose a simple, fast, and effective method for recalling previously seen translation examples and incorporating them into the NMT decoding process. Specifically, for an input sentence, we use a search engine to retrieve sentence pairs whose source sides are similar to the input sentence, and then collect $n$-grams that are both in the retrieved target sentences and aligned with words that match in the source sentences, which we call "translation pieces". We compute pseudo-probabilities for each retrieved sentence based on similarities between the input sentence and the retrieved source sentences, and use these to weight the retrieved translation pieces. Finally, an existing NMT model is used to translate the input sentence, with an additional bonus given to outputs that contain the collected translation pieces. We show our method improves NMT translation results by up to 6 BLEU points on three narrow-domain translation tasks where repetitiveness of the target sentences is particularly salient. It also adds little to the translation time, and compares favorably to another alternative retrieval-based method with respect to accuracy, speed, and simplicity of implementation.

Source: arXiv, April 7, 2018

URL:

http://www.zhuanzhi.ai/document/3ec2bce8a428ac5e93a8ce51d0469269
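The retrieve, weight, and reward pipeline above can be sketched in a few functions. This is an illustration under stated assumptions: similarity is a word-level fuzzy-match score (1 minus edit distance over the longer length), each piece keeps the best similarity of any retrieved pair containing it, and the bonus sums piece weights for every n-gram the new word completes. Unlike the paper, the sketch skips the word-alignment filter, and `lam` is a hypothetical scaling factor.

```python
from typing import Dict, List, Sequence, Tuple

Piece = Tuple[str, ...]


def edit_distance(a: Sequence[str], b: Sequence[str]) -> int:
    """Word-level Levenshtein distance using a single-row dynamic program."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        cur = [i]
        for j, y in enumerate(b, 1):
            cur.append(min(prev[j] + 1,              # deletion
                           cur[j - 1] + 1,           # insertion
                           prev[j - 1] + (x != y)))  # substitution or match
        prev = cur
    return prev[-1]


def fuzzy_similarity(src: Sequence[str], retrieved_src: Sequence[str]) -> float:
    longest = max(len(src), len(retrieved_src), 1)
    return 1.0 - edit_distance(src, retrieved_src) / longest


def collect_pieces(src: List[str], retrieved_pairs, max_n: int = 4) -> Dict[Piece, float]:
    """Weight each target n-gram by the best similarity of any retrieved pair
    containing it (simplification: no alignment filtering)."""
    pieces: Dict[Piece, float] = {}
    for r_src, r_tgt in retrieved_pairs:
        sim = fuzzy_similarity(src, r_src)
        for n in range(1, max_n + 1):
            for i in range(len(r_tgt) - n + 1):
                g = tuple(r_tgt[i:i + n])
                pieces[g] = max(pieces.get(g, 0.0), sim)
    return pieces


def bonus(prefix: List[str], next_word: str, pieces: Dict[Piece, float],
          lam: float = 1.0, max_n: int = 4) -> float:
    """Extra score added to log P(next_word | prefix) for every piece the word completes."""
    hyp = prefix + [next_word]
    total = sum(pieces.get(tuple(hyp[-n:]), 0.0)
                for n in range(1, min(max_n, len(hyp)) + 1))
    return lam * total


src = "the cat sat".split()
retrieved = [("the cat ran".split(), "le chat a couru".split())]
pieces = collect_pieces(src, retrieved)
print(bonus(["le"], "chat", pieces))  # rewards both "chat" and "le chat"
```

During beam search, the bonus would simply be added to each candidate's model score, which is why the method leaves the NMT model itself untouched.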

24. Domain Adaptation for Statistical Machine Translation

Author: Longyue Wang

Institution: Faculty of Science and Technology, University of Macau

Abstract: Statistical machine translation (SMT) systems perform poorly when they are applied to new target domains. Our goal is to explore domain adaptation approaches and techniques for improving the translation quality of domain-specific SMT systems. However, translating texts from a specific domain (e.g., medicine) is full of challenges. The first challenge is ambiguity: words or phrases can have different meanings in different contexts. The second is language style, because texts from different genres are always presented with different syntax, length, and structural organization. The third is the out-of-vocabulary words (OOVs) problem: in-domain training data are often scarce, with low terminology coverage. In this thesis, we explore the state-of-the-art domain adaptation approaches and propose effective solutions to address those problems.

Source: arXiv, April 5, 2018

URL:

http://www.zhuanzhi.ai/document/9110fd93f60a2255627bde53d87ff67f
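The third challenge, OOVs caused by low terminology coverage, is easy to quantify. The sketch below computes the token-level OOV rate of in-domain text against the vocabulary of the training data. Lowercased whitespace tokenization and the toy sentences are our assumptions; a real SMT pipeline would use its own tokenizer.

```python
from typing import Iterable, Set


def build_vocab(sentences: Iterable[str]) -> Set[str]:
    """Vocabulary of the (general-domain) training text."""
    return {tok for s in sentences for tok in s.lower().split()}


def oov_rate(sentences: Iterable[str], vocab: Set[str]) -> float:
    """Fraction of running tokens in `sentences` that are missing from `vocab`."""
    tokens = [tok for s in sentences for tok in s.lower().split()]
    if not tokens:
        return 0.0
    return sum(tok not in vocab for tok in tokens) / len(tokens)


general = ["the weather is nice today"]
medical = ["the patient is feverish"]
print(oov_rate(medical, build_vocab(general)))  # "patient" and "feverish" are unseen
```

A high OOV rate on in-domain text is one symptom of the domain mismatch the thesis targets.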

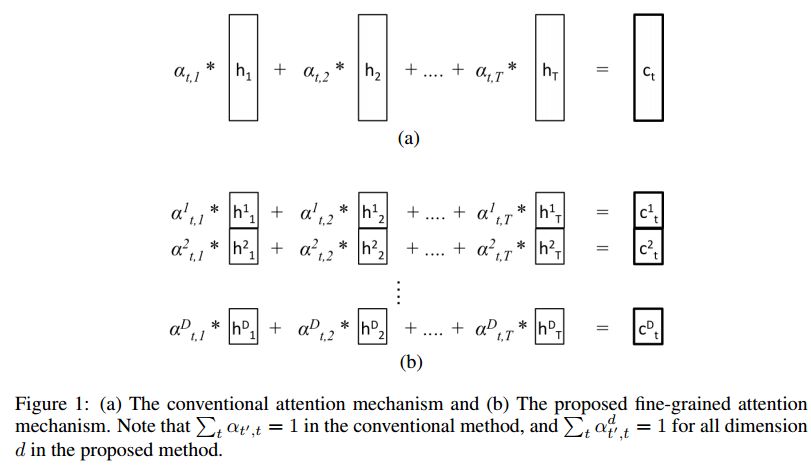

25. Fine-Grained Attention Mechanism for Neural Machine Translation

Authors: Heeyoul Choi, Kyunghyun Cho, Yoshua Bengio

Institutions: Handong Global University, New York University, University of Montreal

Abstract: Neural machine translation (NMT) has become a new paradigm in machine translation, and the attention mechanism has become the dominant approach, achieving state-of-the-art results on many language pairs. While there are variants of the attention mechanism, all of them use only temporal attention, where one scalar value is assigned to one context vector corresponding to a source word. In this paper, we propose a fine-grained (or 2D) attention mechanism where each dimension of a context vector receives a separate attention score. In experiments on En-De and En-Fi translation, the fine-grained attention method improves the translation quality in terms of BLEU score. In addition, our alignment analysis reveals how the fine-grained attention mechanism exploits the internal structure of context vectors.

Source: arXiv, April 3, 2018

URL:

http://www.zhuanzhi.ai/document/a40b0be3439cc550ca779540ccc32d23
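The difference between temporal and fine-grained attention comes down to whether one softmax over source positions is shared by all dimensions or computed separately for each dimension. The sketch below contrasts the two. The element-wise score `query[d] * h_i[d]` is a hypothetical stand-in for the learned scoring network described in the paper.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(query: Vector, annotations: List[Vector]) -> Vector:
    """Standard attention: one scalar weight per source position, shared by all dimensions."""
    weights = softmax([sum(q * h for q, h in zip(query, h_i)) for h_i in annotations])
    return [sum(w * h_i[d] for w, h_i in zip(weights, annotations))
            for d in range(len(query))]


def fine_grained_attention(query: Vector, annotations: List[Vector]) -> Vector:
    """2D attention: every dimension d has its own distribution over source positions."""
    context = []
    for d in range(len(query)):
        weights_d = softmax([query[d] * h_i[d] for h_i in annotations])
        context.append(sum(w * h_i[d] for w, h_i in zip(weights_d, annotations)))
    return context


annotations = [[1.0, 0.0], [0.0, 1.0]]  # two source positions, two dimensions
query = [10.0, 10.0]
print(temporal_attention(query, annotations))      # both positions weighted equally
print(fine_grained_attention(query, annotations))  # each dimension picks its own position
```

In this toy case temporal attention can only blend the two positions, while fine-grained attention lets dimension 0 read from the first source word and dimension 1 from the second.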

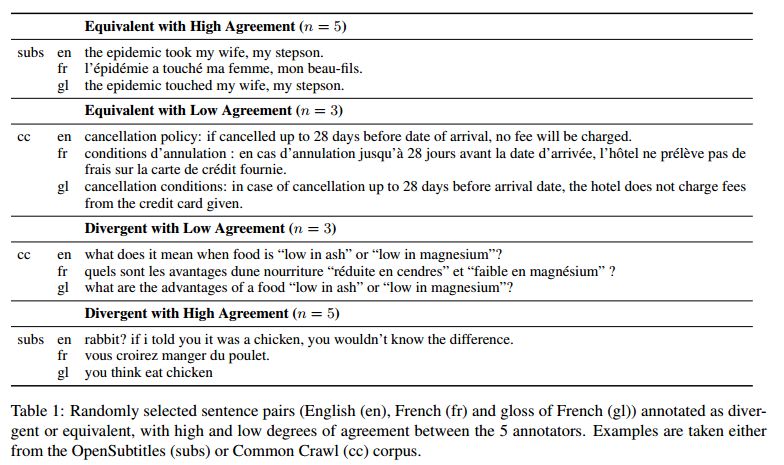

26. Identifying Semantic Divergences in Parallel Text without Annotations

Authors: Yogarshi Vyas, Xing Niu, Marine Carpuat

Institution: University of Maryland

Abstract: Recognizing that even correct translations are not always semantically equivalent, we automatically detect meaning divergences in parallel sentence pairs with a deep neural model of bilingual semantic similarity which can be trained for any parallel corpus without any manual annotation. We show that our semantic model detects divergences more accurately than models based on surface features derived from word alignments, and that these divergences matter for neural machine translation.

Source: arXiv, March 29, 2018

URL:

http://www.zhuanzhi.ai/document/0ba474013d27be65b1fe47c829bf6d81
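Training "without any manual annotation" needs some source of negative examples. The sketch below assumes a common trick that may differ from the paper's actual procedure: true parallel pairs are positives, and each source sentence re-paired with another pair's target is a negative. It also shows a hypothetical decision rule that flags a pair as divergent when the cosine similarity of its bilingual sentence embeddings falls below a threshold. The embeddings and the 0.5 threshold are illustrative assumptions, not the paper's deep similarity model.

```python
import math
from typing import List, Sequence, Tuple


def make_training_pairs(pairs: List[Tuple[str, str]]) -> List[Tuple[str, str, int]]:
    """Positives: the given parallel pairs (label 1). Negatives: each source paired
    with the next pair's target (label 0), so no source keeps its own target."""
    n = len(pairs)
    positives = [(s, t, 1) for s, t in pairs]
    negatives = [(pairs[i][0], pairs[(i + 1) % n][1], 0) for i in range(n)] if n > 1 else []
    return positives + negatives


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def is_divergent(src_vec: Sequence[float], tgt_vec: Sequence[float],
                 threshold: float = 0.5) -> bool:
    """Flag the pair when its bilingual similarity is below the threshold."""
    return cosine(src_vec, tgt_vec) < threshold


print(make_training_pairs([("I am hungry", "J'ai faim"), ("Good night", "Bonne nuit")]))
print(is_divergent([0.9, 0.1], [0.1, 0.9]))
```

A classifier trained on such pairs learns what "equivalent" looks like from the corpus itself, which is what makes annotation unnecessary.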

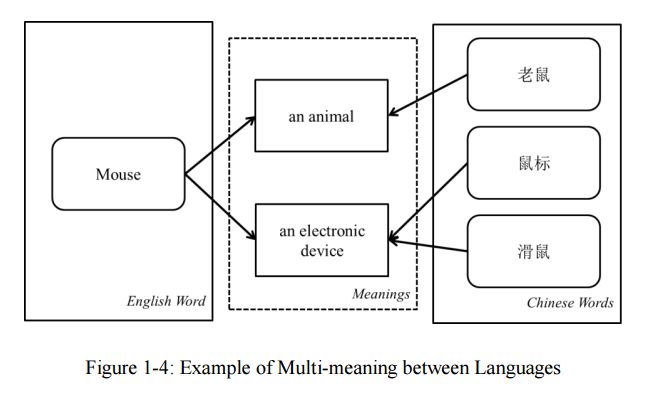

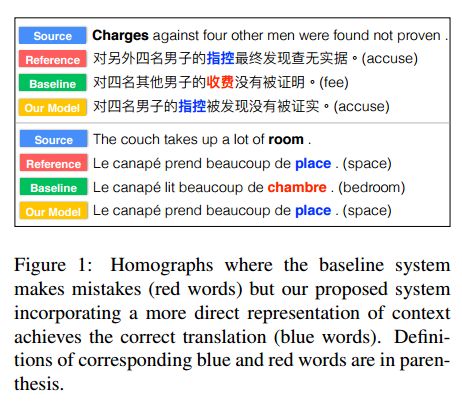

27. Handling Homographs in Neural Machine Translation

Authors: Frederick Liu, Han Lu, Graham Neubig

Institution: Carnegie Mellon University

Abstract: Homographs, words with different meanings but the same surface form, have long caused difficulty for machine translation systems, as it is difficult to select the correct translation based on the context. However, with the advent of neural machine translation (NMT) systems, which can theoretically take into account global sentential context, one may hypothesize that this problem has been alleviated. In this paper, we first provide empirical evidence that existing NMT systems in fact still have significant problems in properly translating ambiguous words. We then proceed to describe methods, inspired by the word sense disambiguation literature, that model the context of the input word with context-aware word embeddings that help to differentiate the word sense before feeding it into the encoder. Experiments on three language pairs demonstrate that such models improve the performance of NMT systems both in terms of BLEU score and in the accuracy of translating homographs.

Source: arXiv, March 28, 2018

URL:

http://www.zhuanzhi.ai/document/4646d99b36ed3195b9d2d59d82b16c46
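A context-aware word embedding lets the same surface form enter the encoder as different vectors in different sentences. The sketch below illustrates the idea under simplifying assumptions: the context is a neural-bag-of-words average of neighbouring embeddings within a window, and a fixed scalar `gate` (hypothetical) stands in for whatever learned combination the paper uses. The toy embeddings are made up.

```python
from typing import Dict, List

Embedding = Dict[str, List[float]]


def context_vector(tokens: List[str], position: int, emb: Embedding,
                   window: int = 2) -> List[float]:
    """Bag-of-words context: mean embedding of neighbours within `window`
    tokens of `position`, excluding the word itself."""
    dim = len(next(iter(emb.values())))
    lo, hi = max(0, position - window), min(len(tokens), position + window + 1)
    neighbours = [emb[t] for i, t in enumerate(tokens[lo:hi], lo)
                  if i != position and t in emb]
    if not neighbours:
        return [0.0] * dim
    return [sum(v[d] for v in neighbours) / len(neighbours) for d in range(dim)]


def context_aware_embedding(tokens: List[str], position: int, emb: Embedding,
                            gate: float = 0.5, window: int = 2) -> List[float]:
    """Mix the static embedding with its context; `gate` is a fixed stand-in
    for a learned gating function."""
    word = emb[tokens[position]]
    ctx = context_vector(tokens, position, emb, window)
    return [gate * w + (1 - gate) * c for w, c in zip(word, ctx)]


emb = {"bank": [1.0, 1.0], "river": [0.0, 2.0], "money": [2.0, 0.0]}
print(context_aware_embedding(["river", "bank"], 1, emb))  # pulled toward "river"
print(context_aware_embedding(["money", "bank"], 1, emb))  # pulled toward "money"
```

The two outputs for "bank" differ, so the encoder receives a sense-dependent input even though the surface word is identical.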

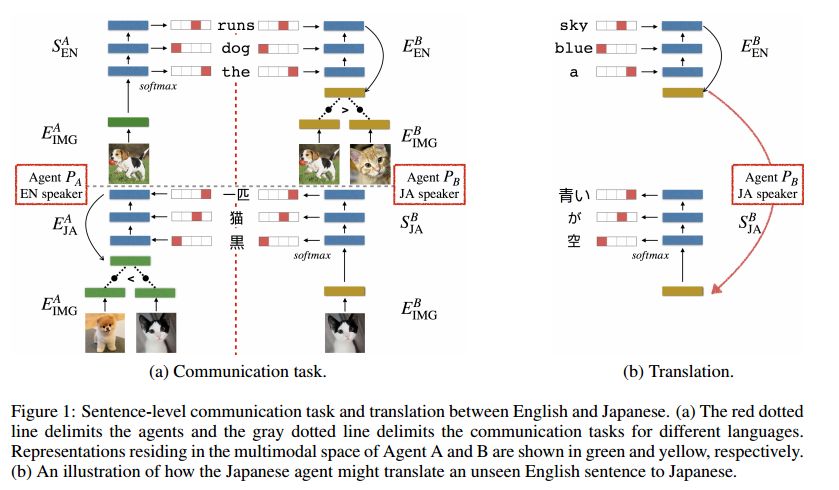

28. Emergent Translation in Multi-Agent Communication

Authors: Jason Lee, Kyunghyun Cho, Jason Weston, Douwe Kiela

Institution: New York University

Abstract: While most machine translation systems to date are trained on large parallel corpora, humans learn language in a different way: by being grounded in an environment and interacting with other humans. In this work, we propose a communication game where two agents, native speakers of their own respective languages, jointly learn to solve a visual referential task. We find that the ability to understand and translate a foreign language emerges as a means to achieve shared goals. The emergent translation is interactive and multimodal, and crucially does not require parallel corpora, but only monolingual, independent text and corresponding images. Our proposed translation model achieves this by grounding the source and target languages into a shared visual modality, and outperforms several baselines on both word-level and sentence-level translation tasks. Furthermore, we show that agents in a multilingual community learn to translate better and faster than in a bilingual communication setting.

Source: arXiv, April 11, 2018

URL:

http://www.zhuanzhi.ai/document/c1ecc57fbfae66df7b253944369bd78b
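Grounding both languages in a shared visual space is what makes translation possible without parallel text. The sketch below shows two consequences of that idea. A listener picks the candidate image closest to a message, as in a referential game. Translation is done by nearest-neighbour lookup across vocabularies that live in the same visual space. The embeddings are hypothetical hand-picked vectors; in the paper they would be learned by the agents through play.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def pick_image(message_vec: Sequence[float], candidate_images: List[Sequence[float]]) -> int:
    """Listener's move: index of the candidate image most similar to the message."""
    return max(range(len(candidate_images)),
               key=lambda i: cosine(message_vec, candidate_images[i]))


def translate_word(word: str, src_space: Dict[str, List[float]],
                   tgt_space: Dict[str, List[float]]) -> str:
    """Nearest-neighbour translation through the shared visual space."""
    v = src_space[word]
    return max(tgt_space, key=lambda w: cosine(v, tgt_space[w]))


src_space = {"dog": [1.0, 0.1], "cat": [0.1, 1.0]}      # English agent
tgt_space = {"Hund": [0.9, 0.2], "Katze": [0.2, 0.9]}   # German agent
print(translate_word("dog", src_space, tgt_space))
print(pick_image([1.0, 0.0], [[0.0, 1.0], [1.0, 0.1]]))
```

Neither agent ever sees an English-German sentence pair here; the alignment comes entirely from both vocabularies pointing at the same images.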

-END-

Zhuanzhi


Visit www.zhuanzhi.ai on a PC and register to access more AI knowledge resources.
