[ACM MM2018] ACM Multimedia 2018, the Top Multimedia Conference, Opens in Seoul

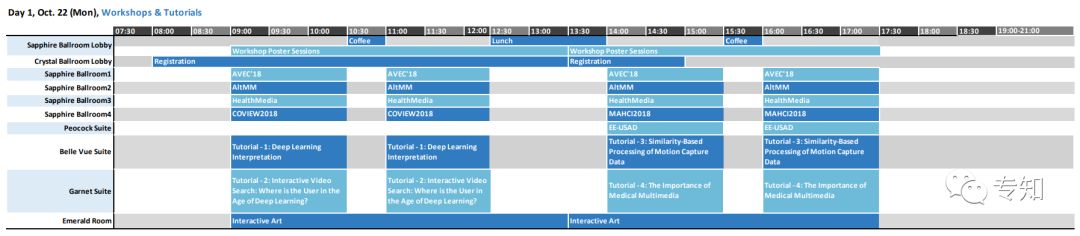

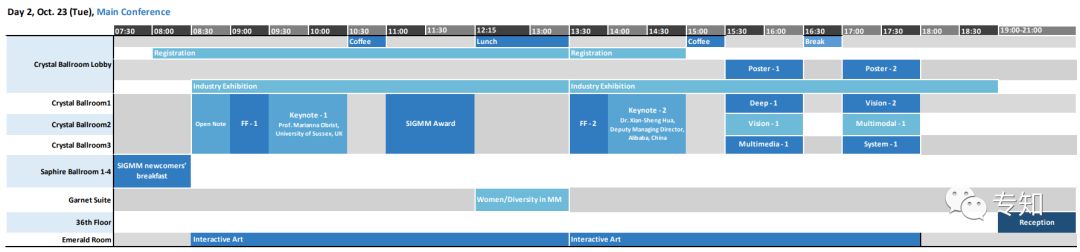

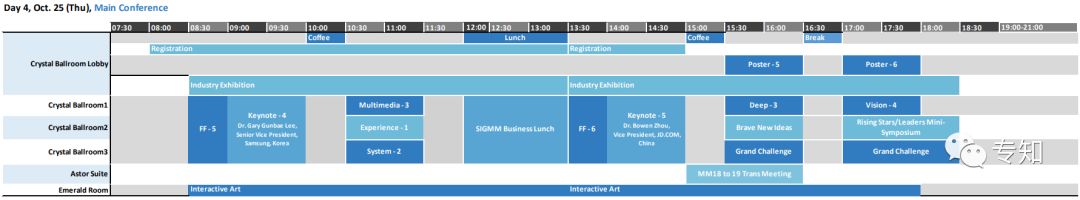

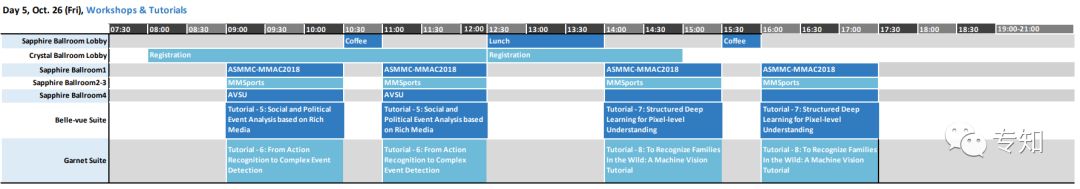

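[Overview] ACM Multimedia, the top conference in the multimedia field, officially opened today at the Lotte Hotel in Seoul. ACM MM covers research across the multimedia field, from underlying technologies to applications and from theory to practice. The conference runs for five days, from the 22nd to the 26th.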

Conference Schedule


Keynote Talks

Title: Don’t just Look – Smell, Taste, and Feel the Interaction

Speaker: Marianna Obrist, University of Sussex, Brighton, UK

Abstract: While our understanding of the sensory modalities for human-computer interaction (HCI) is advancing, there is still a huge gap in our understanding of ‘how’ to best integrate different modalities into the interaction with technology. While we have established a rich ecosystem for visual and auditory design, our senses of touch, taste, and smell are not yet supported with dedicated software tools, interaction techniques, and design tools. Hence, we are missing out on the opportunity to exploit the power of those sensory modalities, their strong link to emotions and memory, and their ability to facilitate recall and recognition in information processing and decision making. In this keynote, Dr Obrist presents an overview of scientific and technological developments in multisensory research and design, with an emphasis on an experience-centered design approach that bridges and integrates knowledge of human sensory perception and advances in computing technology.

Bio: Marianna Obrist is Professor of Multisensory Experiences and head of the Sussex Computer Human Interaction (SCHI ‘sky’) Lab at the School of Engineering and Informatics at the University of Sussex in the UK. Her research ambition is to establish touch, taste, and smell as interaction modalities in HCI. This research is mainly supported by an ERC starting grant, SenseX, and an ERC proof-of-concept award, OWidgets. She has established dedicated experimental spaces for multisensory research at Sussex, including a dedicated olfactory interaction room equipped with a custom-built, fully controllable scent-delivery device. Before joining Sussex, Dr Obrist was a Marie Curie Fellow at Newcastle University and, prior to this, an Assistant Professor at the University of Salzburg, Austria. Dr Obrist has co-authored over 70 research articles in peer-reviewed journals and conferences.

Title: Challenges and Practices of Large Scale Visual Intelligence in the Real-World

Speaker: Xian-Sheng Hua, Distinguished Engineer/VP, Alibaba Group

Abstract: Visual intelligence is one of the key parts of Artificial Intelligence. Considerable technological progress in this direction has been made in the past few years. However, how to incubate the right technologies and convert them into real business value in the real world remains a challenge. In this talk, we will analyze current challenges of visual intelligence and try to summarize a few key points that may help us successfully develop and apply technologies to solve real-world problems. In particular, we will introduce a few successful examples, including “City Brain”, “Luban (visual design)”, “Jianyuan (industrial vision)”, “Pailitao (product search)”, etc., from problem definition/discovery, to technology development, to product design, and to realizing business value.

Bio: Xian-Sheng Hua is now a Distinguished Engineer/VP of Alibaba Group, leading a team working on large-scale visual intelligence on the cloud. Dr. Hua is an IEEE Fellow and an ACM Distinguished Scientist. He received the B.S. degree in 1996 and the Ph.D. degree in applied mathematics in 2001, both from Peking University, Beijing, China. He joined Microsoft Research Asia, Beijing, China, in 2001, as a Researcher. He was a Principal Research and Development Lead in Multimedia Search for the Microsoft search engine, Bing, Redmond, WA, USA, from 2011 to 2013. He was a Senior Researcher with Microsoft Research Redmond, Redmond, WA, USA, from 2013 to 2015. He became a Researcher and Senior Director of the Alibaba Group, Hangzhou, China, in April of 2015, leading the Visual Computing Team in Search Division, Alibaba Cloud and then DAMO Academy. He has authored or coauthored more than 200 research papers and has filed more than 90 patents. His research interests include big multimedia data search, advertising, understanding, and mining, as well as pattern recognition and machine learning. Dr. Hua served or is now serving as an Associate Editor for the IEEE Trans. on Multimedia and ACM Transactions on Intelligent Systems and Technology. He served as a Program Co-Chair for IEEE ICME 2013, ACM Multimedia 2012, and IEEE ICME 2012. He was one of the recipients of the 2008 MIT Technology Review TR35 Young Innovator Award for his outstanding contributions to video search. He was the recipient of the Best Paper Award at ACM Multimedia 2007 and the Best Paper Award of the IEEE Trans. on CSVT in 2014. Dr. Hua will be serving as general co-chair of ACM Multimedia 2020.

Title: What has art got to do with it?

Speaker: Ernest Edmonds, De Montfort University, Leicester, UK

Abstract: What can multi-media systems design learn from art? How can the research agenda be advanced by looking at art? How can we improve creativity support and the amplification of that important human capability? Interactive art has become a common part of life as a result of the many ways in which the computer and the Internet have facilitated it. Multi-media computing is as important to interactive art as mixing the colors of paint is to painting. This talk reviews recent work that looks at these issues through art research. In interactive digital art, the artist is concerned with how the artwork behaves, how the audience interacts with it, and, ultimately, how participants experience art as well as their degree of engagement. The talk examines these issues and brings together a collection of research results from art practice that illuminates this significant new and expanding area. In particular, this work points towards a much-needed critical language that can be used to describe, compare and frame research into the support of creativity.

Bio: Ernest Edmonds is a pioneering computer artist and HCI innovator for whom combining creative arts practice with creative technologies has been a life-long pursuit. He is a member of both the SIGCHI Academy and the SIGGRAPH Academy. In 2017 he won both the ACM SIGCHI Lifetime Achievement Award for Practice in Human-Computer Interaction and the ACM SIGGRAPH Distinguished Artist Award for Lifetime Achievement in Digital Art. He is Professor of Computational Art at De Montfort University, Leicester, UK, and Chairman of the Board of ISEA International. He is an Honorary Editor of Leonardo and Editor-in-Chief of Springer’s Cultural Computing book series. His work was recently described in the book by Francesca Franco, “Generative Systems Art: The Work of Ernest Edmonds”.

Title: Living with Artificial Intelligence Technology in Connected Devices around Us

Speaker: Gary Geunbae Lee, Senior Vice President, Samsung

Abstract: This talk describes how AI technology can be used in multiple connected devices around us, such as smartphones, TVs, refrigerators, and other consumer electronic devices, thus giving customers new and exciting experiences and value. The talk starts with Samsung’s AI vision as a device company and introduces 5 strategic principles along with industrial uses of AI technology, including speech and natural language, visual understanding, data intelligence, and autonomous driving, all with deep learning techniques heavily involved. These applications naturally form a platform for on-device/edge, cloud, and machine learning services for various current and future Samsung devices.

Bio: Gary Geunbae Lee is a senior vice president and director of the AI Center, Samsung Research, at Samsung Electronics. He leads Samsung’s AI research and strategy for application to various consumer electronics devices. He was a professor in the CSE department of POSTECH from 1991 and director of the Intelligent Software Laboratory, which focuses on natural language research including spoken dialog processing, intelligent computer-assisted language learning, speech synthesis, and web/text mining. Dr. Lee has authored more than 250 papers in international journals and conferences and has more than 35 registered patents in the speech and language technology area. Dr. Lee has served as a technical committee member for international conferences such as ACL, COLING, IJCAI, SIGIR, IUI, Interspeech, ASRU/SLT, and ICASSP. He was on the steering committees of the Asian Information Retrieval Symposium (AIRS) and the International Workshop on Spoken Dialog Systems (IWSDS), and has been an executive board member of the Asia Federation of NLP. He has served as an associate editor of IEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP) and ACM Transactions on Asian Language Information Processing (TALIP). He has been a PC co-chair for AIRS 2009 and AIRS 2005, workshop chair for IUI 2007, a PC area chair for IJCNLP 2004, 2005, and 2008 and for COLING 2010, and a senior program committee member for IJCAI 2011 and ACM IUI 2011. He was a local organization co-chair of ACL 2012 (50th anniversary meeting) and general chair of SIGDIAL 2012 and IWSDS 2015. He has edited several special issues of journals, including ACM Trans. on Asian Language Information Processing and Information Processing & Management. He co-founded DiQuest, now one of the major search engine companies in Korea, where he has served as CTO, and has been a technical advisor for SK Telecom, Samsung Electronics, and many start-up companies. He won the IR52 Jangyoungsil award with DiQuest, one of the most prestigious technology innovation awards in Korea. He has led several national and industry projects on robust spoken dialog systems, dialog-based English tutoring agents, multimedia search/recommendation systems, and expressive TTS. Dr. Lee holds a Ph.D. in computer science from UCLA and BS/MS degrees in computer engineering from Seoul National University.

Title: Transforming Retailing Experiences with Artificial Intelligence

Speaker: Bowen Zhou, Vice President of JD.com, Head of AI Research & Platform

Abstract: Artificial Intelligence (AI) is having a big impact on our daily lives. In this talk, we will show how AI is transforming the retail industry. In particular, we propose the brand-new concept of Retail as a Service (RaaS), where retail is redefined as the natural combination of content and interaction. With the capability of knowing more about consumers, products, and retail scenarios that integrate online and offline, AI is providing more personalized and comprehensive multimodal content and enabling more natural interactions between consumers and services, through the innovative technologies we invented at JD.com. We will show 1) how computer vision techniques can better understand consumers, help consumers easily discover products, and support multimodal content generation, 2) how natural language processing techniques can be used to support intelligent customer services through emotion computing, and 3) how AI is building the very fundamental technology infrastructure for RaaS.

Bio: Dr. Bowen Zhou is Vice President of JD.com and the Head of JD’s AI Platform & Research. Since he joined JD.com in Sept. 2017, Dr. Zhou has been overseeing JD’s overall artificial intelligence strategy, technology and execution. He helped JD establish a world-class artificial intelligence team spanning research, platform, development and business, including JD’s Artificial Intelligence Research (JDAI), JD’s AI Platform Division, and a business innovation department centered on AI. Prior to joining JD.com, Dr. Zhou had been with IBM for almost 15 years, where he was most recently the Director of the AI Foundations lab at IBM Research in New York and, at the same time, the Chief Scientist of IBM Watson Group and a Distinguished Engineer of IBM. Having published more than 100 papers in top peer-reviewed journals and conference proceedings and been awarded over 10 patents, Dr. Zhou is a leading researcher in the areas of deep natural language understanding and speech translation. He previously served as a member of the IEEE Speech and Language Technical Committee and as an Associate Editor of IEEE Transactions, and frequently served as an ICASSP Area Chair (2011-2015) and NAACL Area Chair for speech and language processing, machine translation, question answering, machine learning, information extraction, etc.

List of Accepted Papers

Conference proceedings: http://www.sigmm.org/opentoc/MM2018-TOC
