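[Paper Recommendations] Six Recent Papers on Object Tracking: Dynamic Memory Networks, Correlation Filters, One-Shot Learning, Correlation Features, Recurrent Autoregressive Networks, 3D Multi-Object Tracking

[Overview] The Zhuanzhi content team has compiled six recent papers on object tracking and introduces them below.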

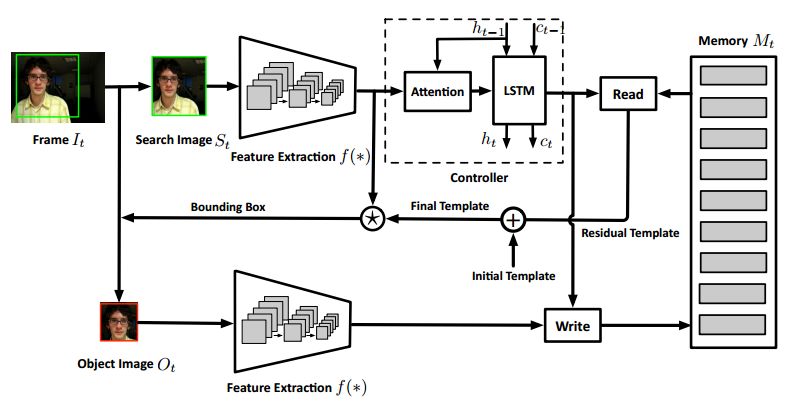

1. Learning Dynamic Memory Networks for Object Tracking

Authors: Tianyu Yang, Antoni B. Chan

Affiliation: City University of Hong Kong

Abstract: Template-matching methods for visual tracking have gained popularity recently due to their comparable performance and fast speed. However, they lack effective ways to adapt to changes in the target object's appearance, making their tracking accuracy still far from state-of-the-art. In this paper, we propose a dynamic memory network to adapt the template to the target's appearance variations during tracking. An LSTM is used as a memory controller, where the input is the search feature map and the outputs are the control signals for the reading and writing process of the memory block. As the location of the target is at first unknown in the search feature map, an attention mechanism is applied to concentrate the LSTM input on the potential target. To prevent aggressive model adaptivity, we apply gated residual template learning to control the amount of retrieved memory that is used to combine with the initial template. Unlike tracking-by-detection methods where the object's information is maintained by the weight parameters of neural networks, which requires expensive online fine-tuning to be adaptable, our tracker runs completely feed-forward and adapts to the target's appearance changes by updating the external memory. Moreover, the capacity of our model is not determined by the network size as with other trackers; the capacity can be easily enlarged as the memory requirements of a task increase, which is favorable for memorizing long-term object information. Extensive experiments on OTB and VOT demonstrate that our tracker MemTrack performs favorably against state-of-the-art tracking methods while retaining real-time speed of 50 fps.

Source: arXiv, March 20, 2018

URL:

http://www.zhuanzhi.ai/document/b64a9ef8a24e5a2d1f8eceef44fd4133
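
The memory read and the gated residual template update described in the MemTrack abstract can be illustrated with a minimal sketch. This is not the authors' implementation: in the paper the read key and the gate come from an LSTM controller, whereas here they are passed in as plain lists, and the function names `read_memory` and `gated_residual_template` are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def read_memory(memory, read_key):
    """Attention-style read: weight each memory slot by its dot-product
    similarity to the read key, then return the weighted sum of slots.
    (Assumption: the paper's controller produces the key; here it is an input.)"""
    scores = [sum(k * v for k, v in zip(read_key, slot)) for slot in memory]
    weights = softmax(scores)
    dim = len(memory[0])
    return [sum(w * slot[i] for w, slot in zip(weights, memory)) for i in range(dim)]

def gated_residual_template(initial, retrieved, gate):
    """final = initial + gate * retrieved, element-wise.
    Gate values are assumed to lie in [0, 1]; a gate of 0 keeps the
    initial template unchanged."""
    return [t0 + g * r for t0, g, r in zip(initial, gate, retrieved)]
```

With a gate of all zeros the tracker falls back to the initial template, which is one way to read the abstract's point about preventing overly aggressive adaptation.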

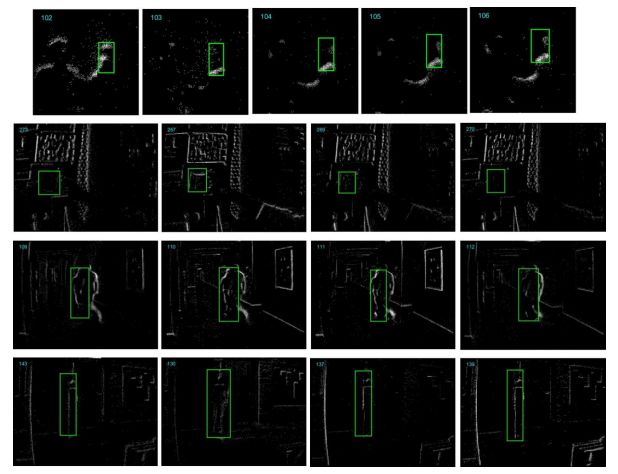

2. Robust event-stream pattern tracking based on correlative filter

Authors: Hongmin Li, Luping Shi

Abstract: Object tracking based on the retina-inspired, event-based dynamic vision sensor (DVS) is challenging because of noise events, rapid changes in event-stream shape, clutter from complex background textures, and occlusion. To address these challenges, this paper presents a robust event-stream pattern tracking method based on a correlative filter mechanism. In the proposed method, rate coding is used to encode the event-stream object in each segment. Feature representations from hierarchical convolutional layers of a deep convolutional neural network (CNN) are used to represent the appearance of the rate encoded event-stream object. The results show that our method not only achieves good tracking performance in many complicated scenes with noise events, complex background textures, occlusion, and intersected trajectories, but is also robust to variable scale, variable pose, and non-rigid deformations. In addition, this correlative filter based event-stream tracking has the advantage of high speed. The proposed approach will promote the potential applications of these event-based vision sensors in self-driving, robotics and many other high-speed scenes.

Source: arXiv, March 17, 2018

URL:

http://www.zhuanzhi.ai/document/2e0499e60ec9d6d73afdeb3387308f9f
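
The rate-coding step mentioned in the abstract above can be sketched roughly as follows. The abstract does not give the exact encoding, so this assumes a simple scheme: each event is a hypothetical `(x, y, t)` tuple (polarity is ignored), and a segment is encoded as the per-pixel event count divided by the segment duration.

```python
def rate_code(events, width, height, t_start, t_end):
    """Encode one segment of an event stream as a per-pixel event rate.

    events: iterable of (x, y, t) tuples (an assumed format).
    Returns a height x width nested list of rates in events per time unit.
    """
    duration = t_end - t_start
    if duration <= 0:
        raise ValueError("t_end must be greater than t_start")
    frame = [[0.0] * width for _ in range(height)]
    for x, y, t in events:
        # Keep only events inside the segment and inside the sensor area.
        if t_start <= t < t_end and 0 <= x < width and 0 <= y < height:
            frame[y][x] += 1.0
    return [[count / duration for count in row] for row in frame]
```

The resulting frame is an ordinary 2D array, which is what makes it possible to pass it to a CNN for feature extraction as the abstract describes.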

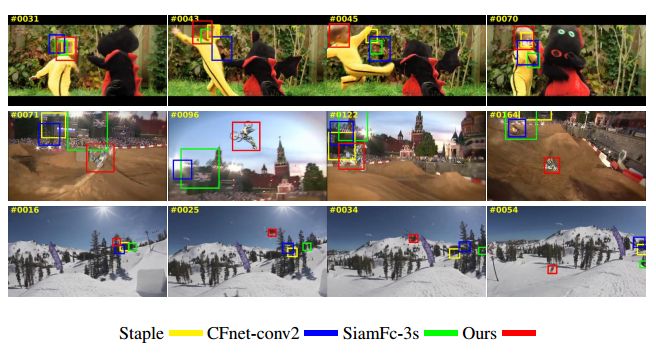

3. Quadruplet Network with One-Shot Learning for Fast Visual Object Tracking

Authors: Xingping Dong, Jianbing Shen, Yu Liu, Wenguan Wang, Fatih Porikli

Affiliations: Beijing Institute of Technology, Australian National University

Abstract: In the same vein as discriminative one-shot learning, Siamese networks allow recognizing an object from a single exemplar with the same class label. However, they do not take advantage of the underlying structure of the data and the relationship among the multitude of samples as they only rely on pairs of instances for training. In this paper, we propose a new quadruplet deep network to examine the potential connections among the training instances, aiming to achieve a more powerful representation. We design four shared networks that receive multi-tuples of instances as inputs and are connected by a novel loss function consisting of pair-loss and triplet-loss. According to the similarity metric, we select the most similar and the most dissimilar instances as the positive and negative inputs of triplet loss from each multi-tuple. We show that this scheme improves the training performance. Furthermore, we introduce a new weight layer to automatically select suitable combination weights, which will avoid the conflict between triplet and pair loss leading to worse performance. We evaluate our quadruplet framework by model-free tracking-by-detection of objects from a single initial exemplar in several Visual Object Tracking benchmarks. Our extensive experimental analysis demonstrates that our tracker achieves superior performance with a real-time processing speed of 78 frames-per-second (fps).

Source: arXiv, March 17, 2018

URL:

http://www.zhuanzhi.ai/document/3d101933b7fad05e3eb9f77e2ffad76e
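
To make the idea of combining a pair loss with a triplet loss more concrete, here is a generic sketch. It is not the loss from the paper: the logistic pair loss, the hinge triplet loss, the margin, and the fixed weights `w_pair` and `w_triplet` are all assumptions chosen for illustration, and the paper learns its combination weights with a weight layer rather than fixing them.

```python
import math

def pair_loss(score, label):
    """Logistic loss on a similarity score; label is +1 (same object) or -1."""
    return math.log1p(math.exp(-label * score))

def triplet_loss(pos_score, neg_score, margin=1.0):
    """Hinge loss: the positive score should exceed the negative by `margin`."""
    return max(0.0, margin - pos_score + neg_score)

def combined_loss(pos_score, neg_score, w_pair=0.5, w_triplet=0.5, margin=1.0):
    """Weighted sum of both terms (weights are fixed here, learned in the paper)."""
    pairs = pair_loss(pos_score, +1) + pair_loss(neg_score, -1)
    return w_pair * pairs + w_triplet * triplet_loss(pos_score, neg_score, margin)
```

The pair term scores each instance on its own, while the triplet term only cares about the gap between positive and negative, which is why the two can pull in different directions and need balancing.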

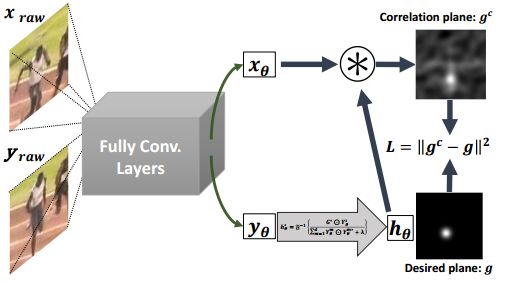

4. Good Features to Correlate for Visual Tracking

Authors: Erhan Gundogdu, A. Aydin Alatan

Affiliation: IEEE

Abstract: In recent years, correlation filters have shown dominant and spectacular results for visual object tracking. The types of features that are employed in this family of trackers significantly affect the performance of visual tracking. The ultimate goal is to utilize robust features invariant to any kind of appearance change of the object, while predicting the object location as properly as in the case of no appearance change. As deep learning based methods have emerged, the study of learning features for specific tasks has accelerated. For instance, discriminative visual tracking methods based on deep architectures have been studied with promising performance. Nevertheless, correlation filter based (CFB) trackers confine themselves to pre-trained networks that are trained for the object classification problem. To this end, in this manuscript the problem of learning deep fully convolutional features for CFB visual tracking is formulated. In order to learn the proposed model, a novel and efficient backpropagation algorithm is presented based on the loss function of the network. The proposed learning framework enables the network model to be flexible for a custom design. Moreover, it alleviates the dependency on the network trained for classification. Extensive performance analysis shows the efficacy of the proposed custom design in the CFB tracking framework. By fine-tuning the convolutional parts of a state-of-the-art network and integrating this model into a CFB tracker, which is the top performing one of VOT2016, an 18% increase is achieved in terms of expected average overlap, and tracking failures are decreased by 25%, while maintaining the superiority over the state-of-the-art methods in the OTB-2013 and OTB-2015 tracking datasets.

Source: arXiv, March 10, 2018

URL:

http://www.zhuanzhi.ai/document/4dd70054b9d6ccb1d8c88caec1425a95

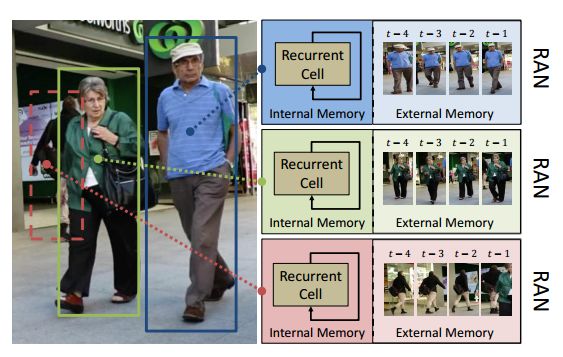

5. Recurrent Autoregressive Networks for Online Multi-Object Tracking

Authors: Kuan Fang, Yu Xiang, Xiaocheng Li, Silvio Savarese

Affiliations: Stanford University, University of Washington

Abstract: The main challenge of online multi-object tracking is to reliably associate object trajectories with detections in each video frame based on their tracking history. In this work, we propose the Recurrent Autoregressive Network (RAN), a temporal generative modeling framework to characterize the appearance and motion dynamics of multiple objects over time. The RAN couples an external memory and an internal memory. The external memory explicitly stores previous inputs of each trajectory in a time window, while the internal memory learns to summarize long-term tracking history and associate detections by processing the external memory. We conduct experiments on the MOT 2015 and 2016 datasets to demonstrate the robustness of our tracking method in highly crowded and occluded scenes. Our method achieves top-ranked results on the two benchmarks.

Source: arXiv, March 4, 2018

URL:

http://www.zhuanzhi.ai/document/701b011b92974f886a2c9e0cb43aaf91
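
The external memory described in the RAN abstract, a fixed time window of previous inputs per trajectory, can be sketched as below. This is only an illustration under simplifying assumptions: the autoregressive weights are passed in by hand (in the paper the internal memory, a recurrent network, is what processes the external memory), association is a greedy nearest-neighbour lookup rather than the paper's procedure, and the names `ExternalMemory` and `associate` are hypothetical.

```python
from collections import deque

class ExternalMemory:
    """Fixed-length window of the most recent inputs for one trajectory."""

    def __init__(self, window):
        # deque with maxlen drops the oldest entry automatically when full.
        self.slots = deque(maxlen=window)

    def write(self, feature):
        self.slots.append(list(feature))

    def predict(self, weights):
        """Autoregressive estimate: weighted sum of the stored inputs."""
        if not self.slots:
            raise ValueError("memory is empty")
        if len(weights) != len(self.slots):
            raise ValueError("need one weight per stored input")
        dim = len(self.slots[0])
        return [sum(w * slot[i] for w, slot in zip(weights, self.slots))
                for i in range(dim)]

def associate(prediction, detections):
    """Return the index of the detection closest to the prediction
    (squared Euclidean distance; a stand-in for a learned score)."""
    def sq_dist(det):
        return sum((p - x) ** 2 for p, x in zip(prediction, det))
    return min(range(len(detections)), key=lambda i: sq_dist(detections[i]))
```

A short usage example: after writing three features into a window of size 2, only the last two remain, and equal weights predict their mean.

```python
mem = ExternalMemory(window=2)
for feature in ([0.0, 0.0], [2.0, 2.0], [4.0, 4.0]):
    mem.write(feature)
pred = mem.predict([0.5, 0.5])  # mean of the two most recent inputs
best = associate(pred, [[0.0, 0.0], [3.1, 2.9], [10.0, 10.0]])
```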

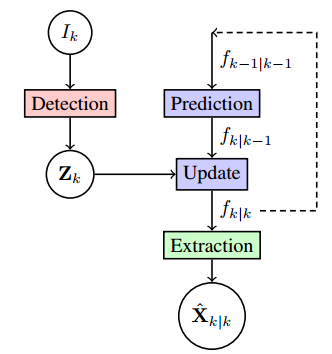

6. Mono-Camera 3D Multi-Object Tracking Using Deep Learning Detections and PMBM Filtering

Authors: Samuel Scheidegger, Joachim Benjaminsson, Emil Rosenberg, Amrit Krishnan, Karl Granstrom

Affiliation: Chalmers University of Technology

Abstract: Monocular cameras are one of the most commonly used sensors in the automotive industry for autonomous vehicles. One major drawback of using a monocular camera is that it only makes observations in the two-dimensional image plane and cannot directly measure the distance to objects. In this paper, we aim at filling this gap by developing a multi-object tracking algorithm that takes an image as input and produces trajectories of detected objects in a world coordinate system. We solve this by using a deep neural network trained to detect and estimate the distance to objects from a single input image. The detections from a sequence of images are fed into a state-of-the-art Poisson multi-Bernoulli mixture tracking filter. The combination of the learned detector and the PMBM filter results in an algorithm that achieves 3D tracking using only mono-camera images as input. The performance of the algorithm is evaluated both in 3D world coordinates and in 2D image coordinates, using the publicly available KITTI object tracking dataset. The algorithm shows the ability to accurately track objects and correctly handle data associations, even when there is a big overlap of the objects in the image, and is one of the top performing algorithms on the KITTI object tracking benchmark. Furthermore, the algorithm is efficient, running on average close to 20 frames per second.

Source: arXiv, February 27, 2018

URL:

http://www.zhuanzhi.ai/document/ae22a341058e2b202fd2afaf34125cd1
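
The abstract above relies on turning a 2D detection plus an estimated distance into a 3D position. One common way to do this, shown here as a sketch and not as the paper's method, is pinhole back-projection: the pixel defines a viewing ray, and the point lies along that ray at the estimated range. The intrinsics `fx`, `fy`, `cx`, `cy` and the interpretation of `distance` as Euclidean range from the camera centre are assumptions; converting from camera to world coordinates would additionally need the camera pose, which is omitted.

```python
import math

def back_project(u, v, distance, fx, fy, cx, cy):
    """Map pixel (u, v) and a range estimate to a 3D point in camera coordinates.

    Assumes an ideal pinhole camera (no lens distortion) with focal lengths
    fx, fy and principal point (cx, cy), all in pixels.
    """
    # Direction of the viewing ray through the pixel, with z = 1.
    ray = ((u - cx) / fx, (v - cy) / fy, 1.0)
    norm = math.sqrt(sum(c * c for c in ray))
    # Scale the unit ray so the point lies `distance` away from the camera.
    return tuple(distance * c / norm for c in ray)
```

A detection at the principal point lands straight ahead on the optical axis, so its depth equals the estimated distance.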
