CMU Professor Eric Xing's Spring 2019 "Probabilistic Graphical Models" course is underway: learn PGMs with lecture slides and videos

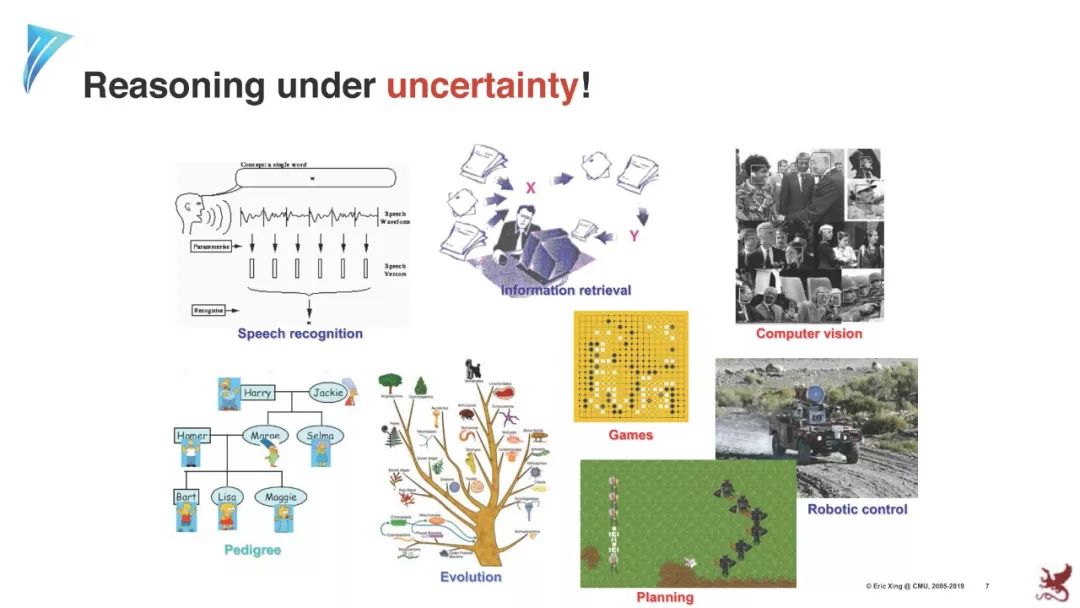

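[Overview] Reasoning under uncertainty is a problem that many applications face. Professor Eric Xing of Carnegie Mellon University has long worked on probabilistic graphical models, and he has recently launched the 2019 edition of his Probabilistic Graphical Models course, an excellent resource for learning PGMs systematically.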

https://sailinglab.github.io/pgm-spring-2019/

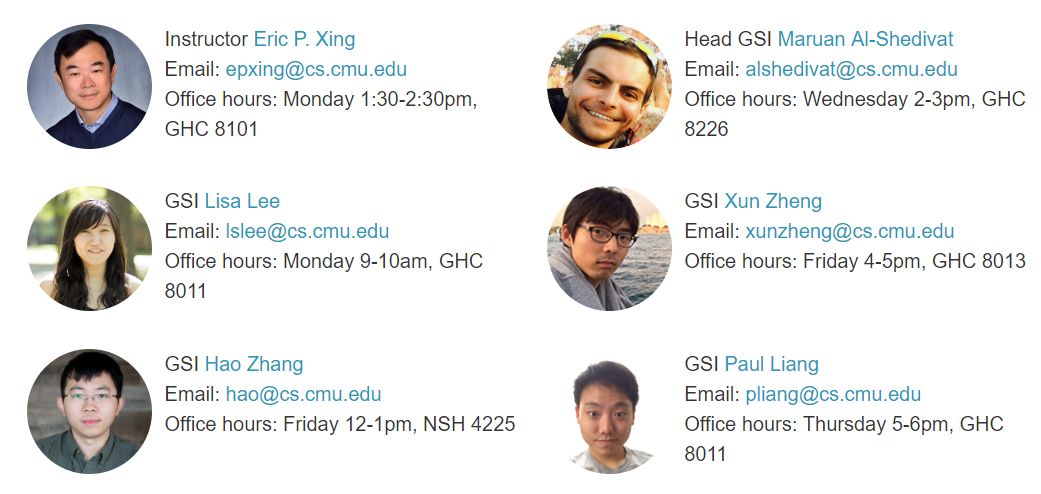

Instructor

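Eric Xing (邢波) is a professor at Carnegie Mellon University and served as chair of the International Conference on Machine Learning (ICML) in 2014. His research focuses on developing methodology and theory for machine learning and statistical learning, and on building large-scale computing systems and architectures. He founded Petuum, a company that develops artificial intelligence and machine learning solutions; Tencent has invested in the company.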

Homepage: http://www.cs.cmu.edu/~epxing/

Probabilistic Graphical Models

Course Overview

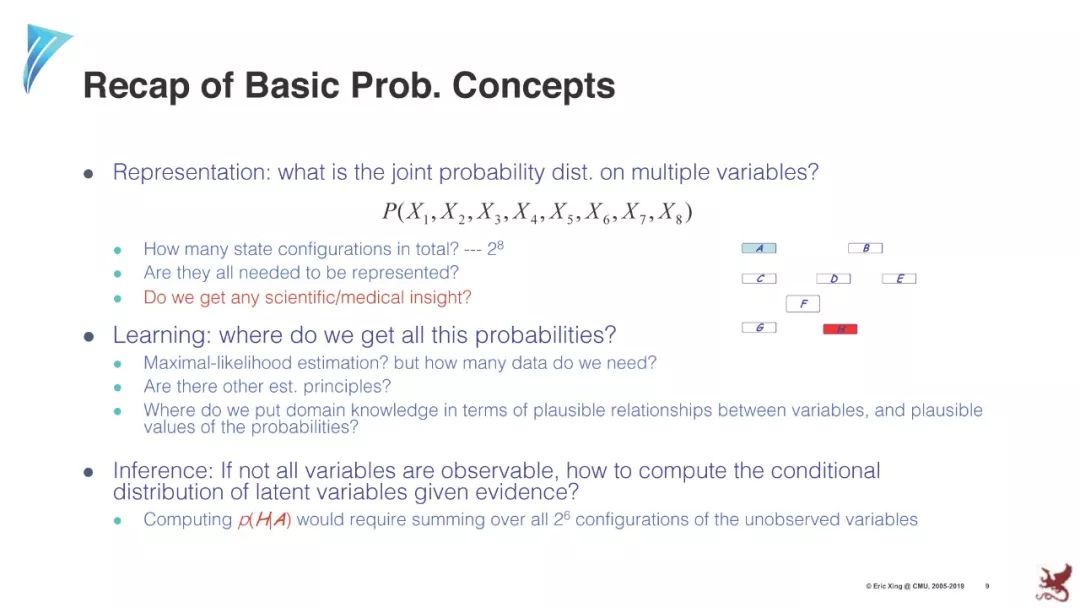

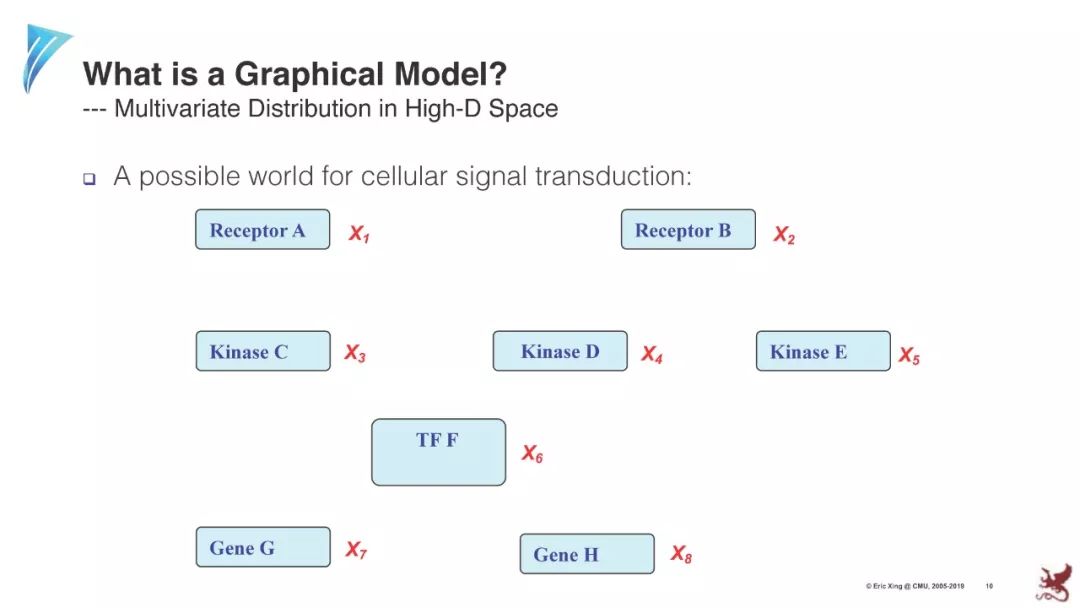

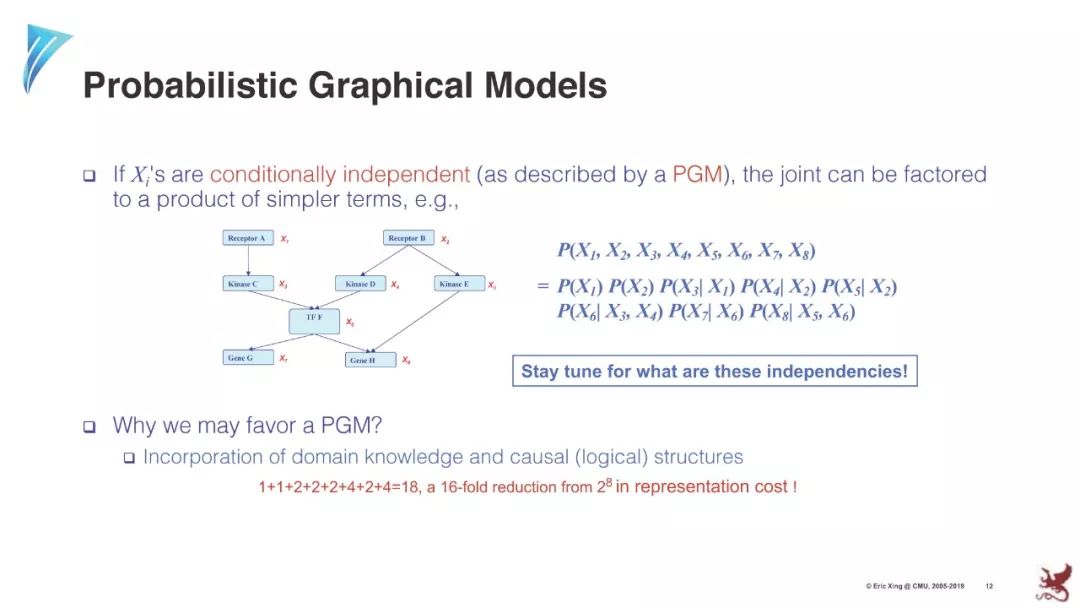

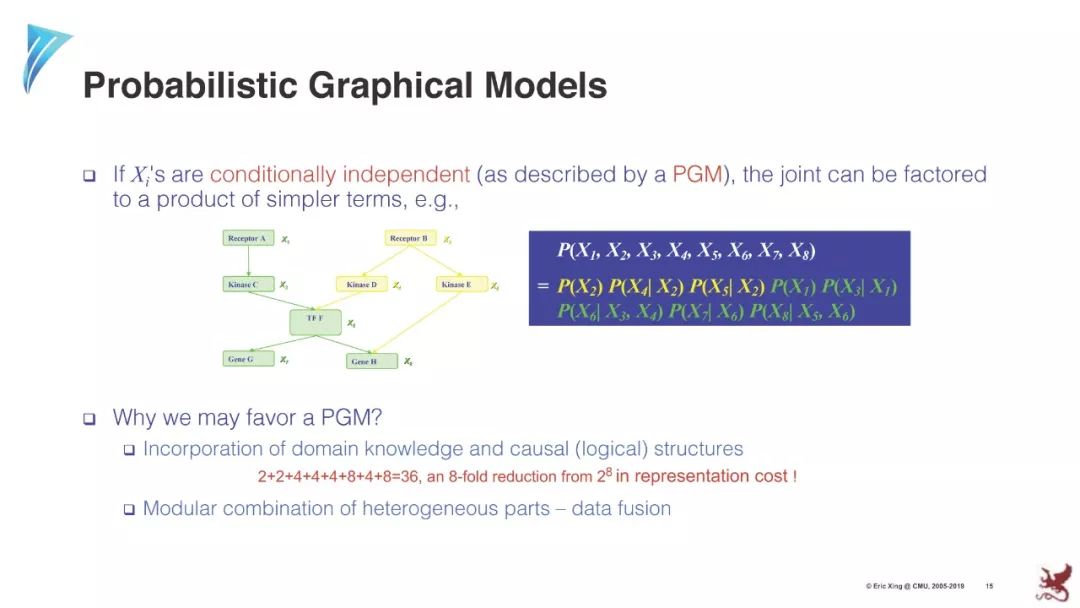

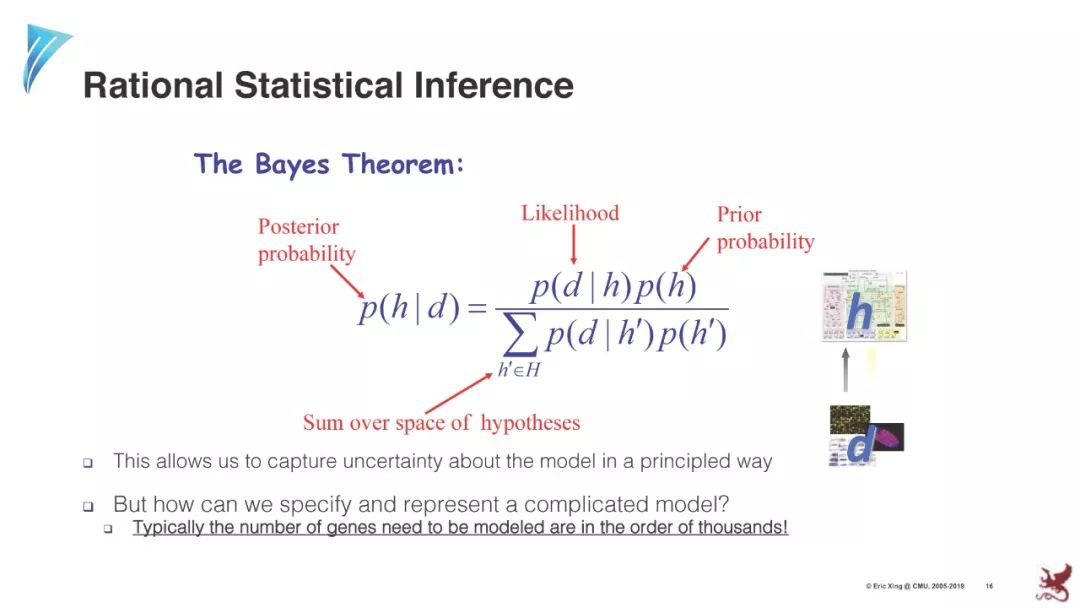

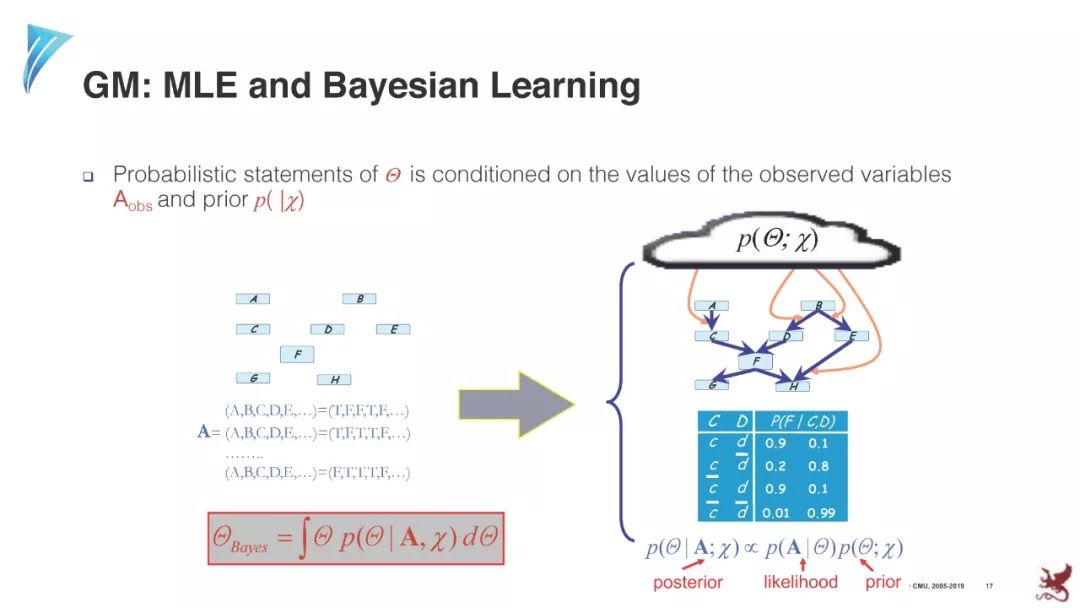

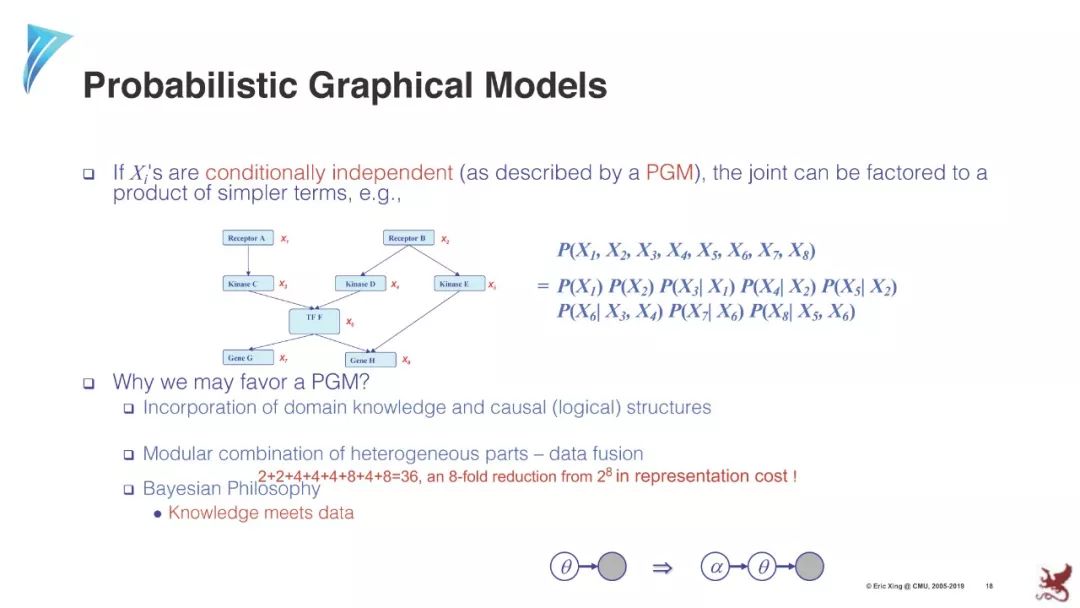

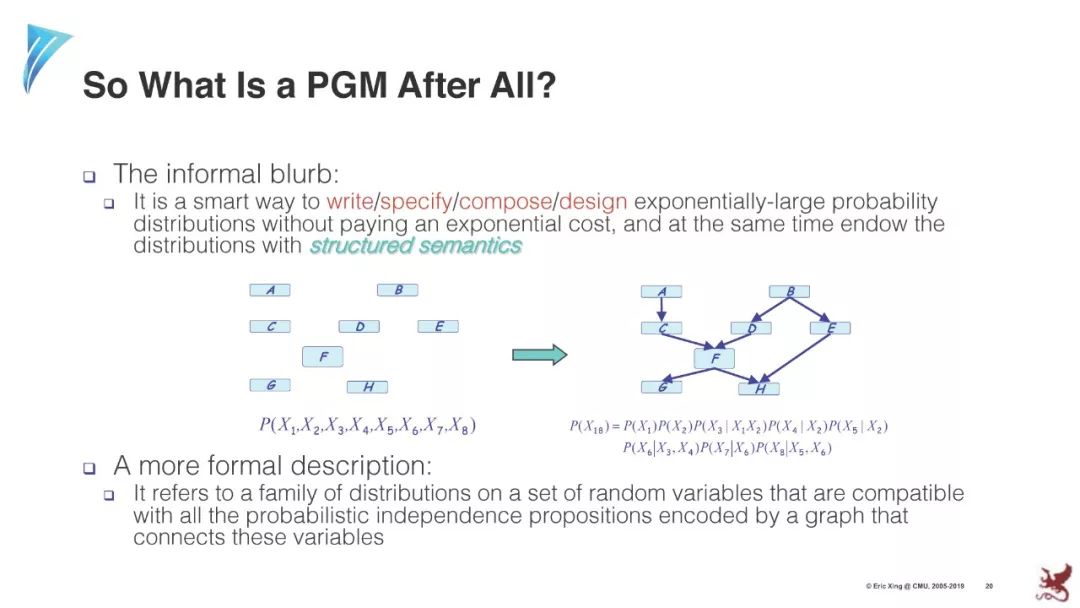

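Many problems in artificial intelligence, statistics, computer systems, computer vision, natural language processing, computational biology, and other fields can be viewed as the search for a coherent global conclusion from local information. The probabilistic graphical model framework offers a unified view of these problems and a way to solve them, supporting efficient inference, decision-making, and learning in problems with a very large number of attributes and huge datasets. This graduate course gives you a solid foundation both for applying graphical models to complex problems and for tackling core research topics in graphical models.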
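To make "a coherent global conclusion from local information" concrete, here is a toy sketch that is not taken from the course materials. It assumes a hypothetical chain of three binary variables A - B - C whose only "local information" is two pairwise factors, `phi_ab` and `phi_bc`, with made-up values. The marginal of C is computed twice: by brute-force enumeration of every joint assignment, and by variable elimination (summing out A, then B), which is the chain special case of the elimination and message-passing ideas listed under Exact inference below.

```python
import itertools

# Hypothetical pairwise factors (local compatibilities) on a chain A - B - C.
# Every variable is binary; the potentials are unnormalized, illustrative values.
phi_ab = {(0, 0): 3.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 2.0}
phi_bc = {(0, 0): 2.0, (0, 1): 1.0, (1, 0): 1.0, (1, 1): 4.0}


def marginal_c_brute_force():
    """Sum the product of local factors over all 2**3 joint assignments, then normalize."""
    scores = [0.0, 0.0]
    for a, b, c in itertools.product((0, 1), repeat=3):
        scores[c] += phi_ab[(a, b)] * phi_bc[(b, c)]
    z = sum(scores)  # partition function
    return [s / z for s in scores]


def marginal_c_elimination():
    """Eliminate A, then B; each step sums out one variable using only local factors."""
    # Message from A to B: m_ab(b) = sum_a phi_ab(a, b)
    m_ab = [sum(phi_ab[(a, b)] for a in (0, 1)) for b in (0, 1)]
    # Message from B to C: m_bc(c) = sum_b m_ab(b) * phi_bc(b, c)
    m_bc = [sum(m_ab[b] * phi_bc[(b, c)] for b in (0, 1)) for c in (0, 1)]
    z = sum(m_bc)  # same partition function as brute force
    return [m / z for m in m_bc]


if __name__ == "__main__":
    # Both give [11/27, 16/27], roughly [0.407, 0.593].
    print(marginal_c_brute_force())
    print(marginal_c_elimination())
```

The two functions agree, but the elimination version touches each factor only once, so its cost grows linearly with the length of the chain, whereas enumeration grows exponentially with the number of variables.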

Syllabus

Module 1: Introduction, Representation, and Exact Inference

Introduction to GM

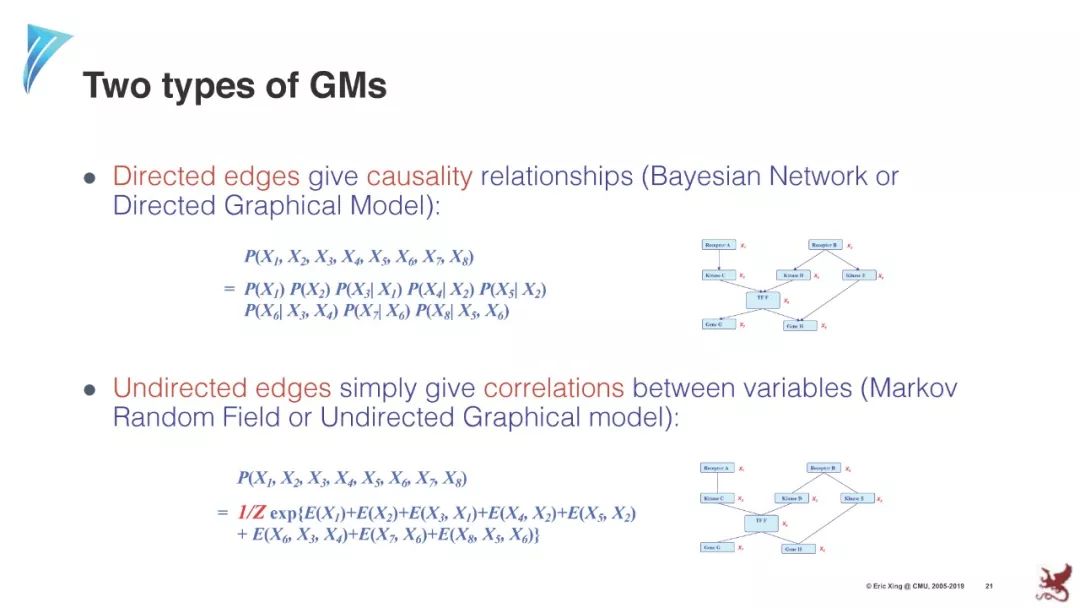

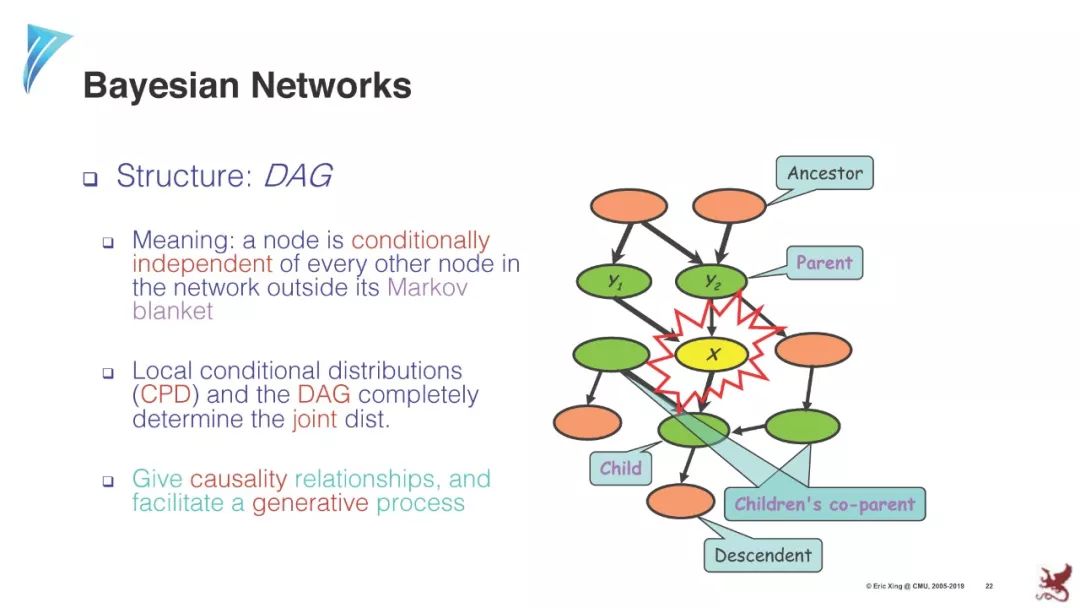

Representation: Directed GMs (BNs)

Representation: Undirected GMs (MRFs)

Exact inference

- Elimination

- Message passing

- Sum product algorithm

Parameter learning in fully observable Bayesian Networks

- Generalized Linear Models (GLIMs)

- Maximum Likelihood Estimation (MLE)

- Markov Models

Parameter Learning of partially observed BN

- Mixture models

- Hidden Markov Models (HMMs)

- The EM algorithm

Parameter learning in fully observable Markov networks (CRF)

Causal discovery and inference

Gaussian graphical models, Ising model, Modeling networks

Sequential models

- Discrete Hidden State (HMM vs. CRF)

- Continuous Hidden State (Kalman Filter)

Module 2: Approximate Inference

Approximate Inference: Mean Field (MF) and loopy Belief Propagation (BP) approximations

Theory of Variational Inference: Inner and Outer Approximations

Approximate Inference: Monte Carlo and Sequential Monte Carlo methods

Markov Chain Monte Carlo

- Metropolis-Hastings

- Hamiltonian Monte Carlo

- Langevin Dynamics

Module 3: Deep Learning & Generative Models

Statistical and Algorithmic Foundations of Deep Learning

- Insight into DL

- Connections to GM

Building blocks of DL

- RNN and LSTM

- CNN, Transformers

- Attention mechanisms

- (Case studies in NLP)

Deep generative models (part 1):

Overview of advances and theoretical basis of deep generative models

- Wake sleep algorithm

- Variational autoencoders

- Generative adversarial networks

Deep generative models (part 2)

- Variational Autoencoders (VAE)

- Normalizing Flows

- Inverse Autoregressive Flows

- GANs and Implicit Models

A unified view of deep generative models

- New formulations of deep generative models

- Symmetric modeling of latent and visible variables

- Evaluation of Deep Generative Models

Module 4: Reinforcement Learning & Control Through Inference in GM

Sequential decision making (part 1): The framework

- Brief introduction to reinforcement learning (RL)

- Connections to GM: RL and control as inference

Sequential decision making (part 2): The algorithms

- Maximum entropy RL and inverse RL

- Max-entropy policy gradient algorithms

- Soft Q-learning algorithms

- Some open questions/challenges

- Applications/case studies (games, robotics, etc.)

Module 5: Nonparametric methods

Bayesian nonparametrics

- Dirichlet process (DP)

- Hierarchical Dirichlet Process (HDP)

- Chinese Restaurant Process (CRP)

- Indian Buffet Process (IBP)

Gaussian processes (GPs) and elements of meta-learning

- GPs and (deep) kernel learning

- Meta-learning formulation as learning a process

- Hypernetworks and contextual networks

- Neural processes (NPs) as an approximation to GPs

Regularized Bayesian GMs (structured sparsity, diversity, etc.)

Elements of Spectral & Kernel GMs

Module 6: Modular and scalable algorithms and systems

Automated black-box variational inference and elements of probabilistic programming

Scalable algorithms and systems for learning, inference, and prediction

Industrialization of AI: standards, modules, building blocks, and platforms

Appendix: Chapter 1

[Slides download]

Follow the Zhuanzhi (专知) WeChat official account and reply "PGM2019" to receive the download link for this article.
