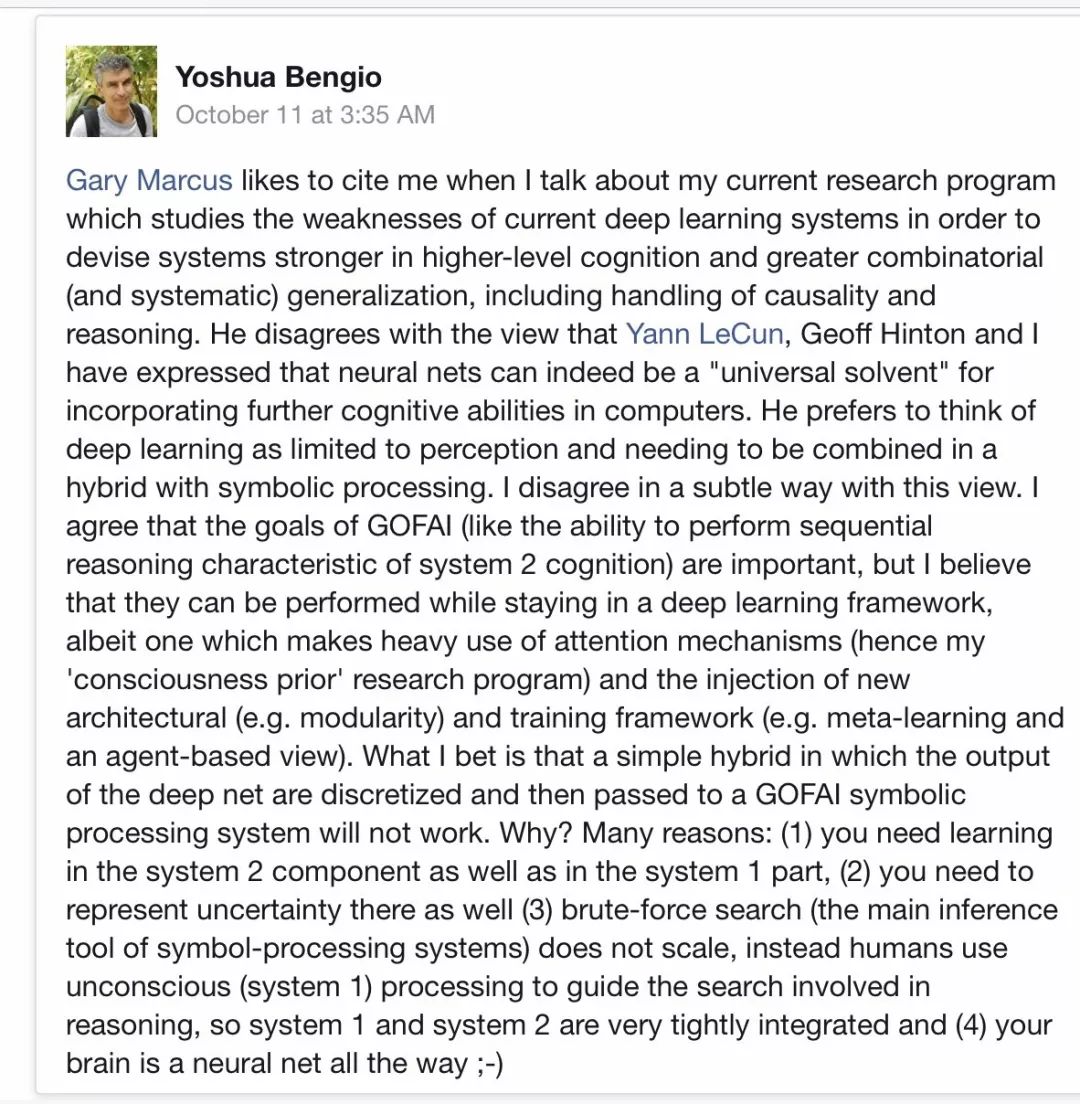

Gary Marcus and Yoshua Bengio continue their “war of words” over the current state of AI and deep learning: are neural networks the road to general AI?

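[Editor’s note] Gary Marcus is locking horns with Yoshua Bengio once again! The well-known NYU psychologist and neuroscientist has been taking aim at deep learning for a long time. Let’s see what they have to say.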

The current state of AI and Deep Learning: A reply to Yoshua Bengio

Dear Yoshua,

Thanks for your note on Facebook, which I reprint below, followed by some thoughts of my own. I appreciate your taking the time to consider these issues.

I concur that you and I agree more than we disagree, and, like you, I hope that the field might benefit from an articulation of both our agreements and our disagreements.

Agreements

Deep learning, as it has been practiced, is a valuable tool, but on its own and in its current form it is not enough to get us to general intelligence.

Current approaches to deep learning often yield superficial results with poor generalizability. I have been arguing this since my first publication in 1992, and I made this specific point with respect to deep learning in 2012, in my first public comment on deep learning per se, in a New Yorker post. It was also the focus of my 2001 book on cognitive science. The introduction of your recent arXiv article on causality echoes almost exactly the central point of The Algebraic Mind: generalizing outside the training space is challenging for many common neural networks.

We both also agree on the importance of bringing causality into the mix. Judea Pearl has been stressing this for decades; I believe I may have been the first to specifically stress this with respect to deep learning, in 2012, again in the linked New Yorker article.

I agree with you that it is vital to understand how to incorporate sequential “System II” (Kahneman’s term) reasoning, which I like to call deliberative reasoning, into the workflow of artificial intelligence. Classical AI offers one approach, but one with its own significant limitations; it’s certainly interesting to explore whether there are alternatives.

Many or all of the things that you propose to incorporate (particularly attention, modularity, and metalearning) are likely to be useful. My previous company (I am sorry that the results are unpublished and under NDA) had a significant interest in metalearning, and I am a firm believer in modularity and in building more structured models; to a large degree my campaign over the years has been for adding more structure (Ernest Davis and I explicitly endorse this in our new book). I am not entirely sure what you have in mind with an agent-based view, but that too sounds reasonable to me.

Disagreements

You seem to think that I am advocating a “simple hybrid in which the output of the deep net are discretized and then passed to a GOFAI symbolic processing system”, but I have never proposed any such thing. I am all for hybrids, but I think we need something a lot more subtle, and I have never endorsed the notion that all core processing must be straight GOFAI. On the contrary, Davis and I challenge GOFAI as well in our recent book, and in the common sense and cognitive science chapters we call for a hybrid that extends beyond a simple concatenation of extant GOFAI and deep learning. I entirely agree that we need richer notions of uncertainty than are typically found in GOFAI. We also explicitly lobby for incorporating more learning into System II, as you do, though I personally don’t think that deep learning is suitable for that purpose.

That said, I do think that symbol manipulation (a core commitment of GOFAI) is critical, and that you significantly underestimate its value.

To begin with, a large fraction of the world’s knowledge is expressed symbolically (e.g., in unstructured text throughout the internet), and current deep-learning-based systems lack adequate ways to leverage that knowledge. I can tell a child that a zebra is a horse with stripes, and they can acquire that knowledge in a single trial and integrate it with their perceptual systems. Current systems can’t do anything of the sort reliably.

I see no way to do robust natural language understanding in the absence of some sort of symbol-manipulating system; the very idea of doing so seems to dismiss an entire field of cognitive science (linguistics). Yes, deep learning has made progress on translation, but on robust conversational interpretation, it has not.

I honestly see no principled reason for excluding symbol systems from the tools of general artificial intelligence; certainly you express none above. The vast preponderance of the world’s software still consists of symbol-manipulating code; why would you wish to exclude such demonstrably valuable tools from a comprehensive approach to general intelligence?

I think that you overvalue the notion of one-stop shopping; sure, it would be great to have a single architecture to capture all of cognition, but I think it’s unrealistic to expect this. Cognition, or general intelligence, is a multidimensional thing that consists of many different challenges. Off-the-shelf deep learning is great at perceptual classification, which is one thing any intelligent creature might do, but it is not (as currently constituted) well suited to other problems that have a very different character. Mapping a set of entities onto a set of predetermined categories (as deep learning does well) is not the same as generating novel interpretations of an infinite number of sentences, or formulating a plan that crosses multiple time scales. There is no particular reason to think that deep learning can do the latter two sorts of problems well, nor to think that all of these problems are identical. In biology, in a complex creature such as a human, one finds many different brain areas, with subtly different patterns of gene expression; most problem-solving draws on different subsets of neural architecture, exquisitely tuned to the nature of those problems. Gating between systems with differing computational strengths seems to be the essence of human intelligence; expecting a monolithic architecture to replicate that seems to me deeply unrealistic.

Above, at the close of your post, you seem to suggest that because the brain is a neural network, we can infer that it is not a symbol-manipulating system. However, we have no idea what sort of neural network the brain is, and we know from various proofs that neural networks can, e.g., directly implement (symbol-manipulating) Turing machines. We also know that humans can be trained to be symbol manipulators; whenever a trained person does logic or algebra or physics, it’s clear that the human brain (a neural network of unknown architecture) can do some symbol manipulation. The real questions are how central that capacity is, and how it is implemented in the brain.

There is some equivocation in what you write between “neural networks” and deep learning. I don’t actually think that the two are the same; I think deep learning (as currently practiced) is ONE way of building and training neural networks, but not the only way. It may or may not relate to the ways in which human brains work, and it may or may not relate to the ways in which some future class of synthetic neural networks will work. Fodor and Pylyshyn made an important distinction between implementational connectionism, which would use neural networks to, e.g., build things like Turing machines, and eliminative connectionism, which aimed to build neural networks that don’t simply implement symbol manipulation. I don’t doubt that whatever ultimately works for AI can be implemented in a neural network; the question is what the nature of that neural network is. My consistent claim, for nearly 30 years, has been that a successful neural network for capturing general intelligence needs to include operations over variables, as some recent work in differentiable programming does, but as a simple multilayer perceptron does not.
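The contrast between an operation over variables and a simple multilayer perceptron can be made concrete with a small experiment. What follows is a minimal sketch in Python, assuming a toy setup chosen purely for illustration (4-bit vectors, six hidden units, an arbitrary learning rate and epoch count); it is not a reproduction of any simulation in The Algebraic Mind, and the names `TinyMLP` and `identity_rule` are hypothetical. A one-hidden-layer perceptron is trained by backpropagation on the identity function using only vectors whose last bit is 0, and is then asked to copy a vector whose last bit is 1. Because that input is 0 in every training example, the weights leaving it never receive a gradient, and the last output unit is only ever taught to produce 0; the rule stated over a variable, f(x) = x, has no such blind spot.

```python
import math
import random

# Toy hyperparameters: all arbitrary assumptions chosen for illustration.
N_BITS = 4
N_HIDDEN = 6
LR = 0.5
EPOCHS = 2000


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def identity_rule(x):
    # An operation over a variable: f(x) = x holds for every x, trained or not.
    return list(x)


class TinyMLP:
    """Hypothetical one-hidden-layer perceptron trained by plain backpropagation."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # Input-to-hidden weights: small random values to break symmetry.
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(N_BITS)] for _ in range(N_HIDDEN)]
        self.b1 = [0.0] * N_HIDDEN
        # Hidden-to-output weights start at zero.
        self.w2 = [[0.0] * N_HIDDEN for _ in range(N_BITS)]
        self.b2 = [0.0] * N_BITS

    def forward(self, x):
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = [sigmoid(sum(w * hj for w, hj in zip(row, h)) + b)
             for row, b in zip(self.w2, self.b2)]
        return h, y

    def train_step(self, x, target):
        h, y = self.forward(x)
        # With sigmoid outputs and cross-entropy loss, the output error is y - t.
        d_out = [yk - tk for yk, tk in zip(y, target)]
        # Backpropagate to the hidden layer, using the weights before this update.
        d_hid = [hj * (1.0 - hj) * sum(d_out[k] * self.w2[k][j] for k in range(N_BITS))
                 for j, hj in enumerate(h)]
        for k in range(N_BITS):
            for j in range(N_HIDDEN):
                self.w2[k][j] -= LR * d_out[k] * h[j]
            self.b2[k] -= LR * d_out[k]
        for j in range(N_HIDDEN):
            for i in range(N_BITS):
                # If x[i] is 0, this gradient is exactly 0 and the weight never moves.
                self.w1[j][i] -= LR * d_hid[j] * x[i]
            self.b1[j] -= LR * d_hid[j]

    def predict(self, x):
        return [1 if yk > 0.5 else 0 for yk in self.forward(x)[1]]


# Training space: every 4-bit vector whose last bit is 0 (even numbers in binary).
train = [[(n >> s) & 1 for s in range(N_BITS - 1, -1, -1)] for n in range(0, 2 ** N_BITS, 2)]

net = TinyMLP()
untouched = [row[-1] for row in net.w1]  # weights leaving the always-zero input
for _ in range(EPOCHS):
    for x in train:
        net.train_step(x, x)  # the target is the input itself

probe = [1, 0, 1, 1]  # last bit is 1: outside the training space
print(identity_rule(probe))    # → [1, 0, 1, 1]
print(net.predict(probe)[-1])  # → 0: the last output unit was only ever trained toward 0
```

Changing the sizes or the learning rate should not affect the zero-gradient part of the argument: under plain backpropagation, any weight attached to an input that is always 0 has a gradient of exactly zero.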

I think you are focusing on too narrow a slice of causality; it’s important to have a quantitative estimate of how strongly one factor influences another, but also to have mechanisms with which to draw causal inferences. How, for example, does a person understand which part of a cheese grater does the cutting, and how the shape of the holes in the grater relates to the cheese shavings that ensue? It’s not enough just to specify some degree of relatedness between holes and grated cheese. A richer marriage between symbol manipulation, which can represent abstract notions such as function, and the sort of work you are embarking on may be required here.

Gaps

You don’t really say what you think about the notion of building in prior knowledge; to me, that issue is absolutely central, and neglected in most current work on deep learning. I am curious about your views of innateness, and whether you see adding more prior knowledge to ML as an important part of moving forward.

Bones to Pick

At times you misrepresent me, and I think that the conversation would be improved if you would respond to my actual position rather than a misinterpretation. To take one example, you seem unaware that Rebooting AI calls for many of the same things you do. Where you say

“is that a simple hybrid in which the output of the deep net are discretized and then passed to a GOFAI symbolic processing system will not work. Why? Many reasons: (1) you need learning in the system 2 component as well as in the system 1 part, (2) you need to represent uncertainty there as well…”

Ernie Davis and I actually make the same points:

“… it’s probably not realistic to encode by hand everything that machines need to know. Machines are going to need to learn lots of things on their own. We might want to hand-code the fact that sharp hard blades can cut soft material, but then an AI should be able to build on that knowledge and learn how knives, cheese graters, lawn mowers, and blenders work, without having each of these mechanisms coded by hand.”

And on point (2), we too emphasize uncertainty and GOFAI’s weaknesses in handling it:

“formal logic of the sort we have been talking about does only one thing well: it allows us to take knowledge of which we are certain and apply rules that are always valid to deduce new knowledge of which we are also certain. If we are entirely sure that Ida owns an iPhone, and we are sure that Apple makes iPhones, then we can be sure that Ida owns something made by Apple. But what in life is absolutely certain? As Bertrand Russell once wrote, ‘All human knowledge is uncertain, inexact, and partial.’ Yet somehow we humans manage. When machines can finally do the same, representing and reasoning about that sort of knowledge (uncertain, inexact, and partial) with the fluidity of human beings, the age of flexible and powerful, broad AI will finally be in sight.”

As you can see, we are actually on the same side on questions like these; in your post above you are criticizing a strawperson rather than our actual position.

At the same time, I don’t think that you have acknowledged that your own views have changed somewhat; your 2015 Nature paper was far more strident than your current views, and acknowledged far fewer limits on deep learning.

I genuinely appreciate your engagement in your Facebook post; I do wish at times that you would cite my work when it clearly prefigures your own. As a case in point, you open a recent arXiv paper, without citation, by focusing on this problem:

“Current machine learning methods seem weak when they are required to generalize beyond the training distribution… It is not enough to obtain good generalization on a test set sampled from the same distribution as the training data”.

This challenge is precisely what I showed in 1998 when I wrote:

“the class of eliminative connectionist models that is currently popular cannot learn to extend universals outside the training space”

and it was the central focus of Chapter 3 of The Algebraic Mind, in 2001:

“multilayer perceptron[s] cannot generalize [a certain class of universally quantified function] outside the training space. … In some cases it appears that humans can freely generalize from restricted data, [in these cases a certain class of] multilayer perceptrons that are trained by back-propagation are inappropriate”

I have tried to call your attention to this prefiguring multiple times, in public and in private, and you have never responded or cited the work, even though the point I tried to call attention to has become increasingly central to the framing of your research.

Despite the disagreements, I remain a fan of yours, both because of the consistent, exceptional quality of your work, and because of the honesty and integrity with which you have acknowledged the limitations of deep learning in recent years. I also adore the way in which you work to apply AI to the greater good of humanity, and genuinely wish more people would take you as a role model.

If you can bring causality, in something like the rich form in which it is expressed in humans, into deep learning, it will be a real and lasting contribution to general artificial intelligence. I look forward to seeing what you develop next, and would welcome a chance to visit you and your lab when I am next in Montreal.

Best regards,

Gary Marcus

Original article:

https://medium.com/@GaryMarcus/the-current-state-of-ai-and-deep-learning-a-reply-to-yoshua-bengio-77952ead7970