用Kubernetes(k8s)构建企业容器云

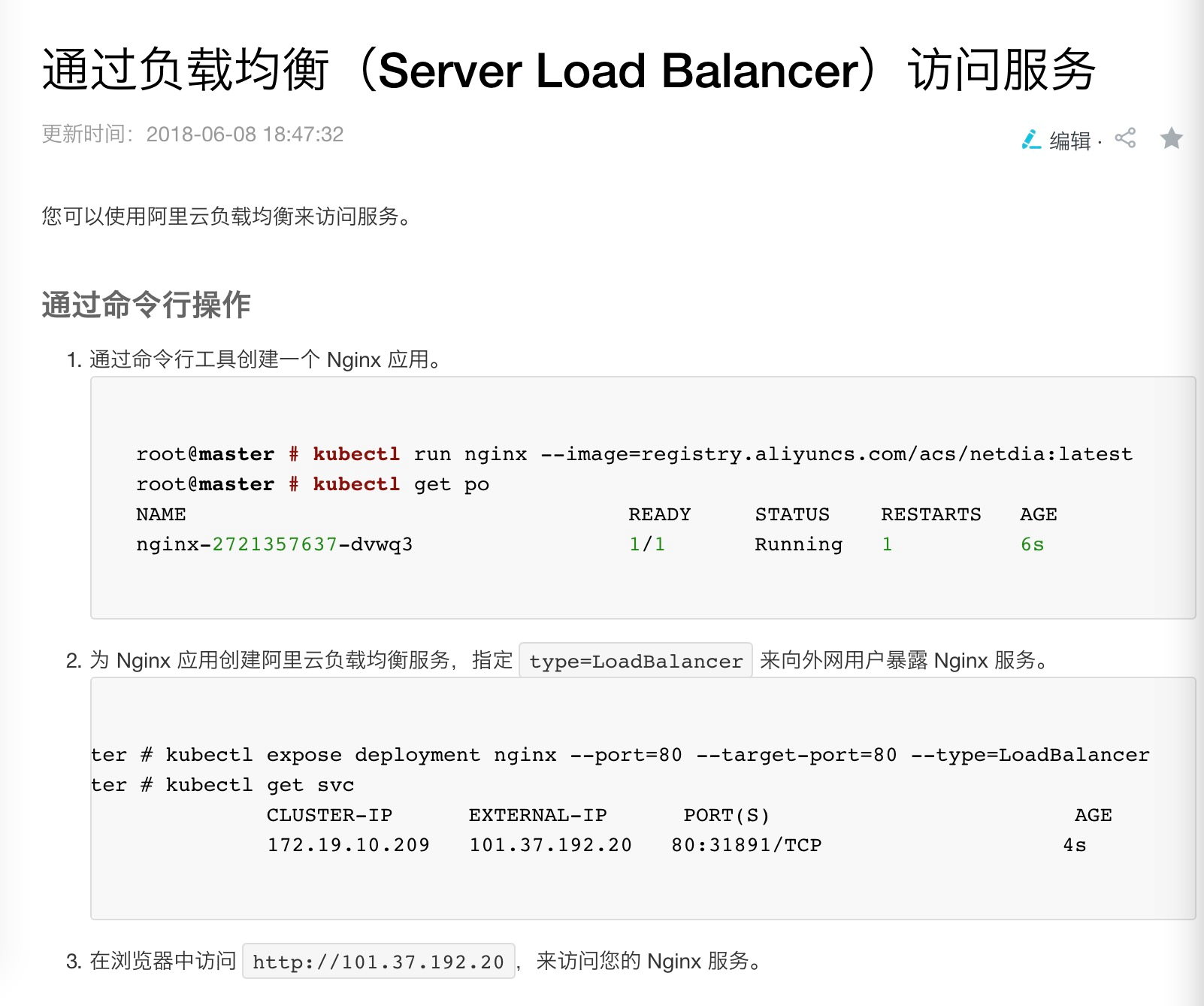

1、Kubernetes架构

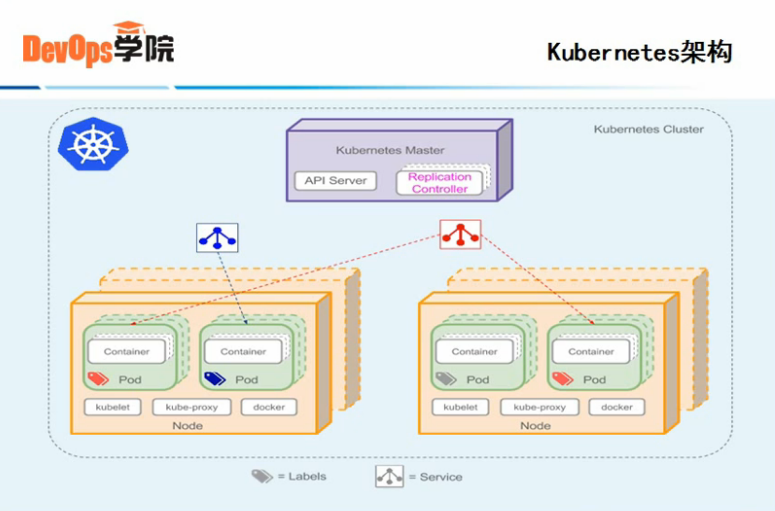

2、k8s架构图

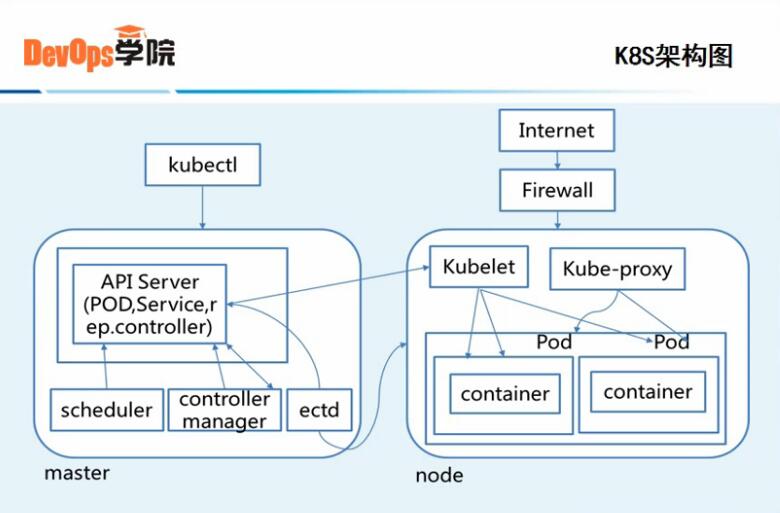

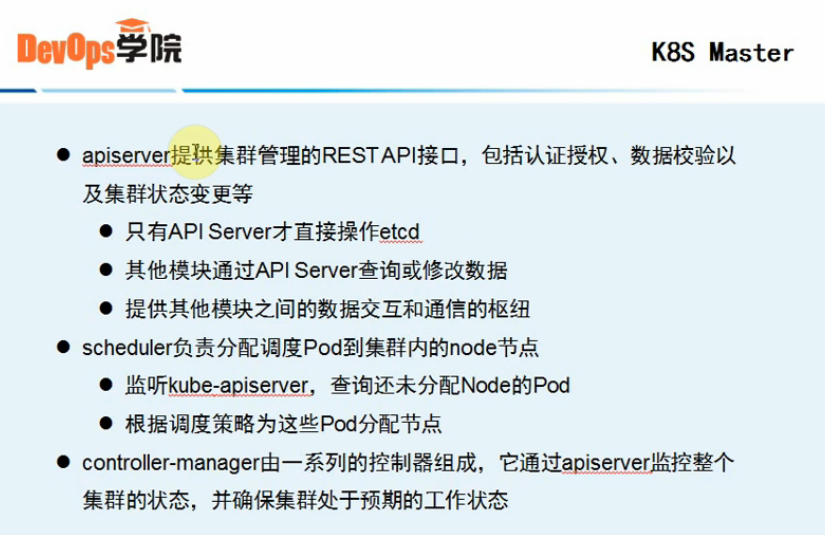

3、k8s Master节点

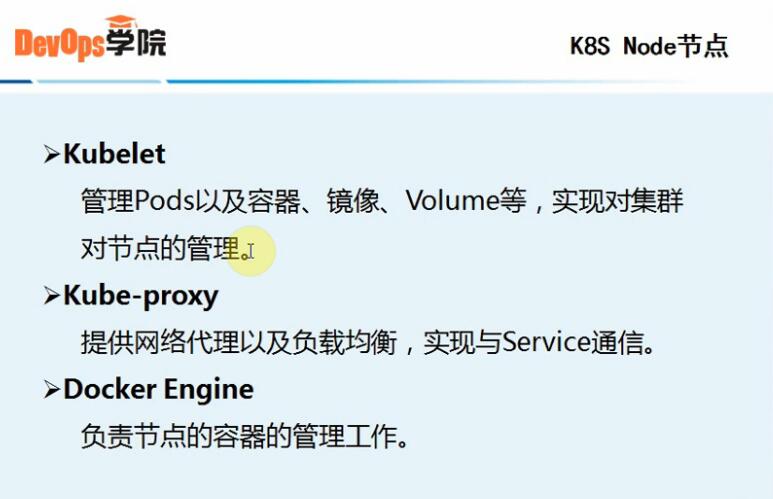

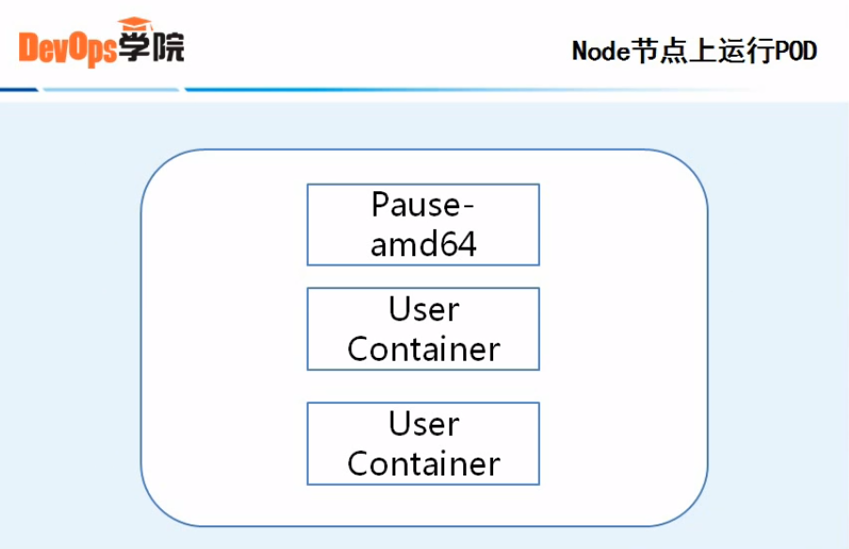

4、k8s Node节点

5、课程目标

二、环境准备

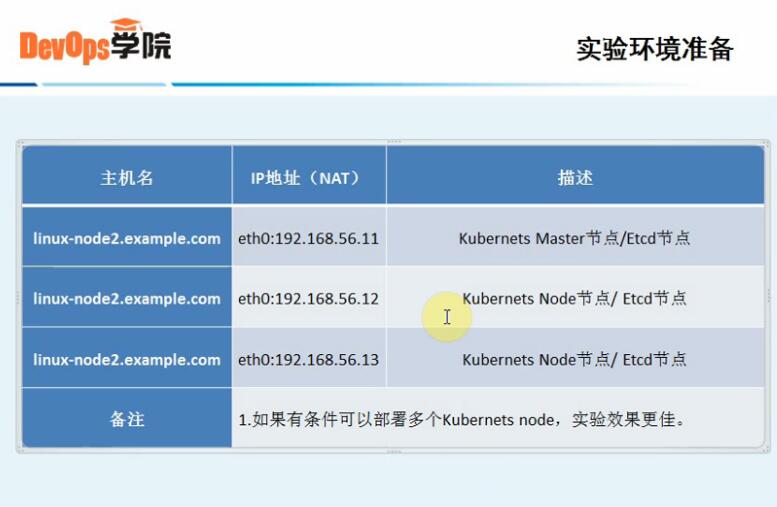

1、实验环境准备

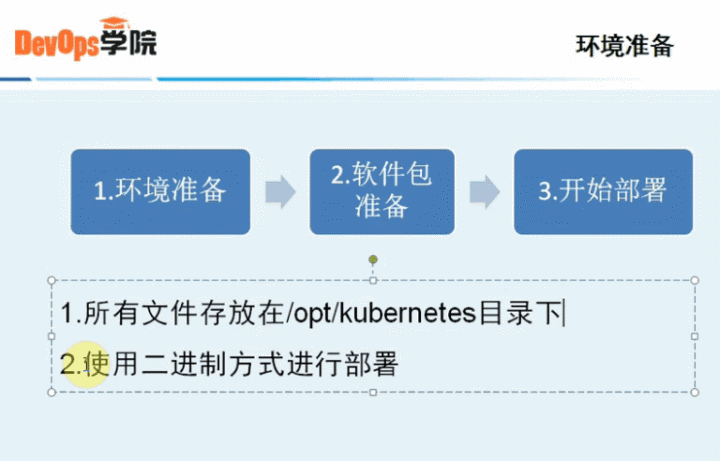

2、环境准备

3、实验环境

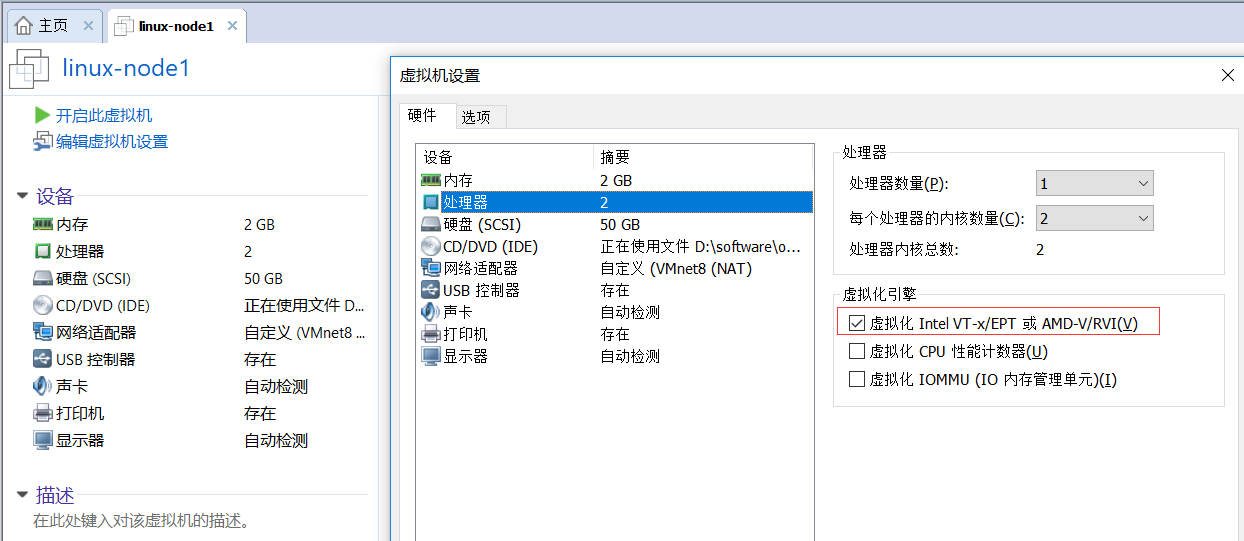

4、硬件要求

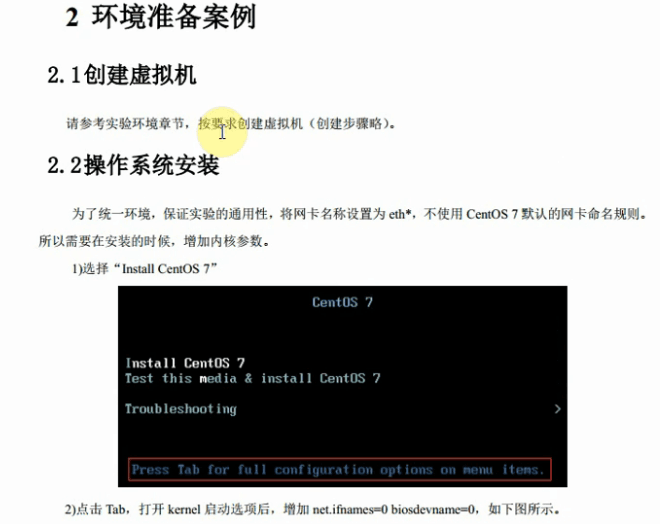

5、环境准备案例

3、网络配置

主机:linux-node1

4、网络地址规划

主机名称:linux-node1 ip:192.168.56.11 gateway:192.168.56.2

#网卡信息

[root@linux-node1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0
TYPE=Ethernet
BOOTPROTO=static
DEFROUTE=yes
PEERDNS=no
PEERROUTES=yes
IPV4_FAILURE_FATAL=no
NAME=eth0
#UUID=3bd0b7db-a40b-4ac0-a65f-f6b3895b5717
DEVICE=eth0
ONBOOT=yes
IPADDR=192.168.56.11
NETMASK=255.255.255.0
GATEWAY=192.168.56.2

#Restart the network

[root@linux-node1 ~]# systemctl restart network

#Set the hostname

[root@linux-node1 ~]# hostnamectl set-hostname linux-node1.example.com

linux-node1.example.com

[root@linux-node1 ~]# hostname linux-node1.example.com

linux-node1.example.com

or

[root@linux-node1 ~]# vi /etc/hostname

linux-node1.example.com

#Disable firewalld and NetworkManager

[root@linux-node1 ~]# systemctl disable firewalld

[root@linux-node1 ~]# systemctl disable NetworkManager

#Disable SELinux

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

setenforce 0
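The two commands act at different times: setenforce 0 switches the running system to permissive mode, while the sed edit of /etc/selinux/config only takes effect after the next reboot. As a minimal sketch, the substitution can be tried on a scratch copy first. The temporary file and its two sample lines are assumptions for illustration, not the real configuration file.

```shell
# Work on a scratch file so the real /etc/selinux/config is untouched.
tmp_cfg=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$tmp_cfg"

# Same substitution as in the step above, applied to the scratch copy.
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' "$tmp_cfg"

# Show the resulting mode line, then clean up.
selinux_mode=$(grep '^SELINUX=' "$tmp_cfg")
echo "$selinux_mode"
rm -f "$tmp_cfg"
```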

#Configure DNS resolution

[root@linux-node1 ~]# vi /etc/resolv.conf
# Generated by NetworkManager
nameserver 192.168.56.2
# No nameservers found; try putting DNS servers into your
# ifcfg files in /etc/sysconfig/network-scripts like so:
#
# DNS1=xxx.xxx.xxx.xxx
# DNS2=xxx.xxx.xxx.xxx
# DOMAIN=lab.foo.com bar.foo.com

#Configure /etc/hosts name resolution

[root@linux-node1 ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.56.11 linux-node1 linux-node1.example.com
192.168.56.12 linux-node2 linux-node2.example.com
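When entries are copied from one node to the next, it is easy to leave the same name attached to two addresses. The following is a small sketch of a check for that, not part of the original procedure; the function name find_duplicate_names and the sample input are hypothetical.

```shell
# Print every hostname that appears on more than one non-loopback line.
# Reads hosts-format text on stdin; loopback and comment lines are skipped.
find_duplicate_names() {
    awk '$1 !~ /^(#|127\.|::1)/ {
        for (i = 2; i <= NF; i++)
            if (++seen[$i] == 2) print $i
    }'
}

# Sample input with a deliberate slip: the second line reuses node1's FQDN.
printf '%s\n' \
    '192.168.56.11 linux-node1 linux-node1.example.com' \
    '192.168.56.12 linux-node2 linux-node1.example.com' | find_duplicate_names
```

On a real host the same function can be fed the live file with find_duplicate_names < /etc/hosts; no output means no name is mapped twice.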

#Configure the EPEL repository for CentOS 7.x

[root@linux-node1 ~]# rpm -ivh https://mirrors.aliyun.com/epel/epel-release-latest-7.noarch.rpm
Retrieving https://mirrors.aliyun.com/epel/epel-release-latest-7.noarch.rpm
warning: /var/tmp/rpm-tmp.KDisBz: Header V3 RSA/SHA256 Signature, key ID 352c64e5: NOKEY
Preparing... ################################# [100%]
Updating / installing...
 1:epel-release-7-11 ################################# [100%]

#Install commonly used tools

yum install net-tools vim lrzsz screen lsof tcpdump nc mtr nmap -y

#Update and reboot the system

yum update -y && reboot

III. Miscellaneous

IV. System Environment Initialization

Step 1: Use a Docker repository mirror hosted in China

[root@linux-node1 ~]# cd /etc/yum.repos.d/

[root@linux-node1 yum.repos.d]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Step 2: Install Docker:

[root@linux-node1 ~]# yum install -y docker-ce

Step 3: Start the Docker daemon:

[root@linux-node1 ~]# systemctl start docker

mkdir -p /opt/kubernetes/{cfg,bin,ssl,log}

Official site: https://github.com/kubernetes/kubernetes

or

download the package from the QQ group

4. Extract the packages

unzip k8s-v1.10.1-manual.zip

Enter the extracted directory, then unpack the archives:

tar zxf kubernetes.tar.gz

tar zxf kubernetes-server-linux-amd64.tar.gz

tar zxf kubernetes-client-linux-amd64.tar.gz

tar zxf kubernetes-node-linux-amd64.tar.gz

5. Configure environment variables

[root@linux-node1 ~]# vim .bash_profile

# .bash_profile
# Get the aliases and functions
if [ -f ~/.bashrc ]; then
    . ~/.bashrc
fi
# User specific environment and startup programs
PATH=$PATH:$HOME/bin:/opt/kubernetes/bin
export PATH

#Apply the change to the current shell

[root@linux-node1 ~]# source .bash_profile
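Sourcing .bash_profile more than once appends /opt/kubernetes/bin to PATH each time. A minimal sketch of an idempotent alternative is shown here; the helper name path_append is hypothetical and is not part of the original profile.

```shell
# Append a directory to PATH only if it is not already present.
path_append() {
    case ":$PATH:" in
        *":$1:"*) ;;                 # already on PATH, nothing to do
        *) PATH="$PATH:$1" ;;
    esac
}

path_append /opt/kubernetes/bin
path_append /opt/kubernetes/bin      # second call is a no-op
export PATH
```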

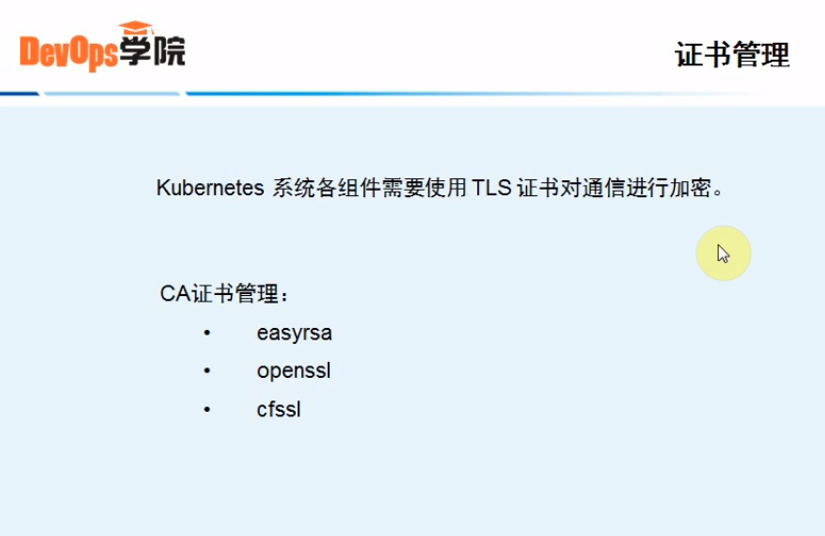

V. Creating and Distributing the CA Certificate

Certificate management

#Create the CA certificate manually

cfssl download address: https://pkg.cfssl.org/

#Set up SSH key authentication

[root@linux-node1 bin]# ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Created directory '/root/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:V0GMqskLf1IFyyotCJr9kA7QSKuOGNEnRwPdNUf6+bo root@linux-node1.example.com
The key's randomart image is:
+---[RSA 2048]----+
| .o . .o.o+o |
| . + . .+. .. |
|.+.. . ..+ . |
|=.+ o +..o |
|+= * o +S.+ |
|* = + * .. . |
|++ o = o . |
|o.. . + . . |
| o E. |
+----[SHA256]-----+
#Send the public key to this node
[root@linux-node1 bin]# ssh-copy-id linux-node1
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
The authenticity of host 'linux-node1 (192.168.56.11)' can't be established.
ECDSA key fingerprint is SHA256:oZh5LUJsx3rzHUVvAS+9q8r+oYDNjNIVS7CKxZZhXQY.
ECDSA key fingerprint is MD5:6c:0c:ca:73:ad:66:9d:ce:4c:1d:88:27:4e:d1:81:3a.
Are you sure you want to continue connecting (yes/no)? yes
/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
root@linux-node1's password:
Number of key(s) added: 1
Now try logging into the machine, with: "ssh 'linux-node1'"
and check to make sure that only the key(s) you wanted were added.
#Send the public key to the other nodes
[root@linux-node1 bin]# ssh-copy-id linux-node2
[root@linux-node1 bin]# ssh-copy-id linux-node3

#Test


[root@linux-node1 bin]# ssh linux-node2
Last login: Sun Jun 3 20:41:27 2018 from 192.168.56.1
[root@linux-node2 ~]# exit
logout
Connection to linux-node2 closed.
[root@linux-node1 bin]# ssh linux-node3
Last failed login: Mon Jun 4 06:00:20 CST 2018 from linux-node2 on ssh:notty
There was 1 failed login attempt since the last successful login.
Last login: Mon Jun 4 05:53:44 2018 from 192.168.56.1
[root@linux-node3 ~]# exit
logout
Connection to linux-node3 closed.

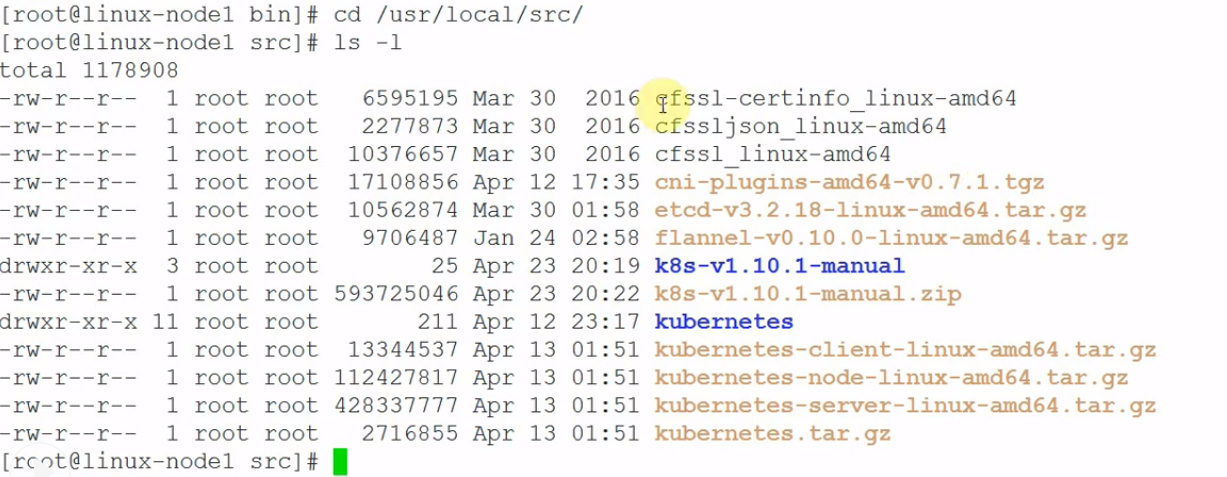

1. Install CFSSL

[root@linux-node1 ~]# cd /usr/local/src
[root@linux-node1 src]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
[root@linux-node1 src]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
[root@linux-node1 src]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
[root@linux-node1 src]# chmod +x cfssl*
[root@linux-node1 src]# mv cfssl-certinfo_linux-amd64 /opt/kubernetes/bin/cfssl-certinfo
[root@linux-node1 src]# mv cfssljson_linux-amd64 /opt/kubernetes/bin/cfssljson
[root@linux-node1 src]# mv cfssl_linux-amd64 /opt/kubernetes/bin/cfssl
Copy the cfssl binaries to the other two nodes (192.168.56.12 and 192.168.56.13 here). In a real deployment with more nodes, copy them to every node.
[root@linux-node1 ~]# scp /opt/kubernetes/bin/cfssl* 192.168.56.12:/opt/kubernetes/bin
[root@linux-node1 ~]# scp /opt/kubernetes/bin/cfssl* 192.168.56.13:/opt/kubernetes/bin

2. Initialize the cfssl working directory

[root@linux-node1 bin]# cd /usr/local/src/
[root@linux-node1 src]# mkdir ssl && cd ssl

3. Create the JSON configuration file used to generate the CA files

[root@linux-node1 ssl]# vim ca-config.json
{
  "signing": {
    "default": {
      "expiry": "8760h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "8760h"
      }
    }
  }
}
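The expiry values in ca-config.json are given in hours. As a quick arithmetic check, 8760h corresponds to 365 days, so certificates signed with the kubernetes profile need to be renewed after about one year. The helper name expiry_to_days is hypothetical and only illustrates the conversion.

```shell
# Convert a cfssl-style expiry such as "8760h" into whole days.
expiry_to_days() {
    hours=${1%h}              # strip the trailing "h"
    echo $((hours / 24))
}

expiry_to_days 8760h          # prints 365
```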

4. Create the JSON configuration file for the CA certificate signing request (CSR)


[root@linux-node1 ssl]# vim ca-csr.json
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

5. Generate the CA certificate (ca.pem) and private key (ca-key.pem)

[root@linux-node1 ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca
[root@linux-node1 ssl]# ls -l ca*
-rw-r--r-- 1 root root 290 Mar 4 13:45 ca-config.json
-rw-r--r-- 1 root root 1001 Mar 4 14:09 ca.csr
-rw-r--r-- 1 root root 208 Mar 4 13:51 ca-csr.json
-rw------- 1 root root 1679 Mar 4 14:09 ca-key.pem
-rw-r--r-- 1 root root 1359 Mar 4 14:09 ca.pem

6. Distribute the certificates

# cp ca.csr ca.pem ca-key.pem ca-config.json /opt/kubernetes/ssl
Copy the certificates to the other two nodes with scp:
# scp ca.csr ca.pem ca-key.pem ca-config.json 192.168.56.12:/opt/kubernetes/ssl
# scp ca.csr ca.pem ca-key.pem ca-config.json 192.168.56.13:/opt/kubernetes/ssl
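With more than two remote nodes, repeating the scp line by hand becomes error-prone. The following is a minimal sketch of the same distribution as a loop. The variable names NODES, CA_FILES and DRY_RUN and the function name distribute are assumptions for illustration; by default the commands are only printed, so the sketch can be run safely.

```shell
# Remote nodes and files, taken from the commands above.
NODES="192.168.56.12 192.168.56.13"
CA_FILES="ca.csr ca.pem ca-key.pem ca-config.json"
DRY_RUN=${DRY_RUN:-1}     # 1 = print the commands, 0 = actually copy

distribute() {
    for node in $NODES; do
        cmd="scp $CA_FILES $node:/opt/kubernetes/ssl"
        if [ "$DRY_RUN" = "1" ]; then
            echo "$cmd"
        else
            $cmd
        fi
    done
}

distribute
```

Setting DRY_RUN=0 before calling distribute performs the copies; this relies on the SSH key authentication configured earlier.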

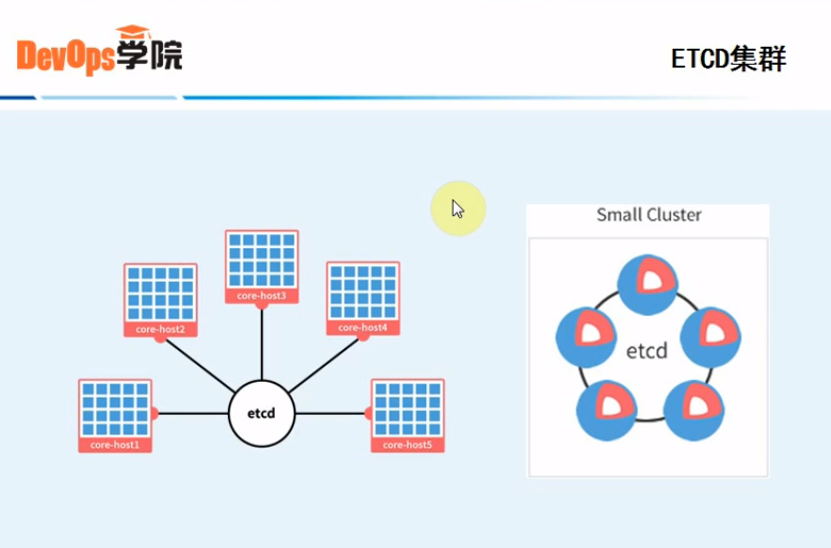

VI. Deploying the etcd Cluster Manually

Official site: https://github.com/coreos/etcd/

0. Prepare the etcd package

wget https://github.com/coreos/etcd/releases/download/v3.2.18/etcd-v3.2.18-linux-amd64.tar.gz
[root@linux-node1 src]# tar zxf etcd-v3.2.18-linux-amd64.tar.gz
[root@linux-node1 src]# cd etcd-v3.2.18-linux-amd64
[root@linux-node1 etcd-v3.2.18-linux-amd64]# cp etcd etcdctl /opt/kubernetes/bin/
[root@linux-node1 etcd-v3.2.18-linux-amd64]# scp etcd etcdctl 192.168.56.12:/opt/kubernetes/bin/
[root@linux-node1 etcd-v3.2.18-linux-amd64]# scp etcd etcdctl 192.168.56.13:/opt/kubernetes/bin/

1. Create the etcd certificate signing request:

The certificates are kept in the following directory:

[root@linux-node1 etcd-v3.2.18-linux-amd64]# cd /usr/local/src/ssl/

[root@linux-node1 ssl]# ll

total 20

-rw-r--r-- 1 root root 290 Jun 4 07:49 ca-config.json

-rw-r--r-- 1 root root 1001 Jun 4 07:53 ca.csr

-rw-r--r-- 1 root root 208 Jun 4 07:50 ca-csr.json

-rw------- 1 root root 1679 Jun 4 07:53 ca-key.pem

-rw-r--r-- 1 root root 1359 Jun 4 07:53 ca.pem

#Create the etcd certificate signing request

#Note the IP addresses (192.168.56.11 and so on): if you add members or run this on other machines, replace them with your own IP addresses.

[root@linux-node1 ~]# vim etcd-csr.json
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "192.168.56.11",
    "192.168.56.12",
    "192.168.56.13"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

2. Generate the etcd certificate and private key:

[root@linux-node1 ~]# cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem \
  -ca-key=/opt/kubernetes/ssl/ca-key.pem \
  -config=/opt/kubernetes/ssl/ca-config.json \
  -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
The following certificate files are generated:
[root@k8s-master ~]# ls -l etcd*
-rw-r--r-- 1 root root 1045 Mar 5 11:27 etcd.csr
-rw-r--r-- 1 root root 257 Mar 5 11:25 etcd-csr.json
-rw------- 1 root root 1679 Mar 5 11:27 etcd-key.pem
-rw-r--r-- 1 root root 1419 Mar 5 11:27 etcd.pem

3. Copy the certificates to the /opt/kubernetes/ssl directory

[root@k8s-master ~]# cp etcd*.pem /opt/kubernetes/ssl
[root@linux-node1 ~]# scp etcd*.pem 192.168.56.12:/opt/kubernetes/ssl
[root@linux-node1 ~]# scp etcd*.pem 192.168.56.13:/opt/kubernetes/ssl

4. Create the etcd configuration file

[root@linux-node1 ~]# vim /opt/kubernetes/cfg/etcd.conf
#[member]
ETCD_NAME="etcd-node1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_SNAPSHOT_COUNTER="10000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
ETCD_LISTEN_PEER_URLS="https://192.168.56.11:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.56.11:2379,https://127.0.0.1:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
#ETCD_CORS=""
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.56.11:2380"
# if you use different ETCD_NAME (e.g. test),
# set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."
ETCD_INITIAL_CLUSTER="etcd-node1=https://192.168.56.11:2380,etcd-node2=https://192.168.56.12:2380,etcd-node3=https://192.168.56.13:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.56.11:2379"
#[security]
CLIENT_CERT_AUTH="true"
ETCD_CA_FILE="/opt/kubernetes/ssl/ca.pem"
ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
PEER_CLIENT_CERT_AUTH="true"
ETCD_PEER_CA_FILE="/opt/kubernetes/ssl/ca.pem"
ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

#Notes:

ETCD_NAME="etcd-node1" #change this to the matching name on each of the other nodes

ETCD_LISTEN_PEER_URLS="https://192.168.56.11:2380" #note: peer traffic listens on port 2380

ETCD_LISTEN_CLIENT_URLS="https://192.168.56.11:2379,https://127.0.0.1:2379" #note: client traffic uses port 2379

ETCD_INITIAL_CLUSTER="etcd-node1=https://192.168.56.11:2380,etcd-node2=https://192.168.56.12:2380,etcd-node3=https://192.168.56.13:2380" #when a member is added, it must be added here as well
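Because ETCD_INITIAL_CLUSTER must list every member and be identical on all nodes, it can help to build the value from one member list instead of typing it by hand. This is a minimal sketch under that assumption; the variable name MEMBERS and the function name initial_cluster are hypothetical.

```shell
# name=ip pairs for every etcd member (values taken from the configuration above).
MEMBERS="etcd-node1=192.168.56.11 etcd-node2=192.168.56.12 etcd-node3=192.168.56.13"

# Join the members into the comma-separated name=https://ip:2380 form.
initial_cluster() {
    result=""
    for member in $MEMBERS; do
        name=${member%%=*}
        ip=${member#*=}
        entry="$name=https://$ip:2380"
        if [ -z "$result" ]; then
            result="$entry"
        else
            result="$result,$entry"
        fi
    done
    echo "$result"
}

initial_cluster
```

Adding a fourth pair to MEMBERS extends the generated value without touching the rest of the script.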

5. Create the etcd systemd service

[root@linux-node1 ~]# vim /etc/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
[Service]
Type=simple
WorkingDirectory=/var/lib/etcd
EnvironmentFile=-/opt/kubernetes/cfg/etcd.conf
# set GOMAXPROCS to number of processors
ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /opt/kubernetes/bin/etcd"
Type=notify
[Install]
WantedBy=multi-user.target

6. Reload the systemd services

[root@linux-node1 ~]# systemctl daemon-reload
[root@linux-node1 ~]# systemctl enable etcd
# scp /opt/kubernetes/cfg/etcd.conf 192.168.56.12:/opt/kubernetes/cfg/
# scp /etc/systemd/system/etcd.service 192.168.56.12:/etc/systemd/system/
# scp /opt/kubernetes/cfg/etcd.conf 192.168.56.13:/opt/kubernetes/cfg/
# scp /etc/systemd/system/etcd.service 192.168.56.13:/etc/systemd/system/


#Edit the configuration on linux-node2
[root@linux-node2 ~]# cat /opt/kubernetes/cfg/etcd.conf
#[member]
ETCD_NAME="etcd-node2"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_SNAPSHOT_COUNTER="10000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
ETCD_LISTEN_PEER_URLS="https://192.168.56.12:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.56.12:2379,https://127.0.0.1:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
#ETCD_CORS=""
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.56.12:2380"
# if you use different ETCD_NAME (e.g. test),
# set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."
ETCD_INITIAL_CLUSTER="etcd-node1=https://192.168.56.11:2380,etcd-node2=https://192.168.56.12:2380,etcd-node3=https://192.168.56.13:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.56.12:2379"
#[security]
CLIENT_CERT_AUTH="true"
ETCD_CA_FILE="/opt/kubernetes/ssl/ca.pem"
ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
PEER_CLIENT_CERT_AUTH="true"
ETCD_PEER_CA_FILE="/opt/kubernetes/ssl/ca.pem"
ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

#Then create the data directory and reload systemd

[root@linux-node2 ~]# mkdir /var/lib/etcd

[root@linux-node2 ~]# systemctl daemon-reload

[root@linux-node2 ~]# systemctl enable etcd

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /etc/systemd/system/etcd.service.
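The per-node files differ from the linux-node1 copy only in ETCD_NAME and in the node's own address, while the ETCD_INITIAL_CLUSTER line must stay unchanged. As a sketch under that assumption, the edit can be expressed as a sed filter that skips that one line. The function name retarget_etcd_conf and the three-line sample are hypothetical; they are not part of the original procedure.

```shell
# Rewrite a node1 etcd.conf (read from stdin) for another member.
# $1 = new member name, $2 = new member IP address.
# The ETCD_INITIAL_CLUSTER= line is left alone because it lists all members.
retarget_etcd_conf() {
    sed -e 's/^ETCD_NAME=.*/ETCD_NAME="'"$1"'"/' \
        -e '/^ETCD_INITIAL_CLUSTER=/!s/192\.168\.56\.11/'"$2"'/g'
}

# Demo on a shortened sample of the node1 file.
sample='ETCD_NAME="etcd-node1"
ETCD_LISTEN_PEER_URLS="https://192.168.56.11:2380"
ETCD_INITIAL_CLUSTER="etcd-node1=https://192.168.56.11:2380,etcd-node2=https://192.168.56.12:2380"'

printf '%s\n' "$sample" | retarget_etcd_conf etcd-node2 192.168.56.12
```

A plain global replace of the address would also rewrite the etcd-node1 entry inside ETCD_INITIAL_CLUSTER, which is why that line is excluded.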

#Edit the configuration on linux-node3
[root@linux-node3 ~]# cat /opt/kubernetes/cfg/etcd.conf
#[member]
ETCD_NAME="etcd-node3"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_SNAPSHOT_COUNTER="10000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
ETCD_LISTEN_PEER_URLS="https://192.168.56.13:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.56.13:2379,https://127.0.0.1:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
#ETCD_CORS=""
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.56.13:2380"
# if you use different ETCD_NAME (e.g. test),
# set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."
ETCD_INITIAL_CLUSTER="etcd-node1=https://192.168.56.11:2380,etcd-node2=https://192.168.56.12:2380,etcd-node3=https://192.168.56.13:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.56.13:2379"
#[security]
CLIENT_CERT_AUTH="true"
ETCD_CA_FILE="/opt/kubernetes/ssl/ca.pem"
ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"
PEER_CLIENT_CERT_AUTH="true"
ETCD_PEER_CA_FILE="/opt/kubernetes/ssl/ca.pem"
ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"
ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

#Then create the data directory and reload systemd

[root@linux-node3 ~]# mkdir /var/lib/etcd

[root@linux-node3 ~]# systemctl daemon-reload

[root@linux-node3 ~]# systemctl enable etcd

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /etc/systemd/system/etcd.service.

#Create the etcd data directory and start etcd on all nodes (note: run these commands on all three nodes)
[root@linux-node1 ~]# mkdir /var/lib/etcd
[root@linux-node1 ~]# systemctl start etcd

#Check the status

[root@linux-node1 ssl]# systemctl status etcd
● etcd.service - Etcd Server
   Loaded: loaded (/etc/systemd/system/etcd.service; enabled; vendor preset: disabled)
   Active: active (running) since Wed 2018-06-06 10:11:53 CST; 14s ago
 Main PID: 11198 (etcd)
    Tasks: 7
   Memory: 7.2M
   CGroup: /system.slice/etcd.service
           └─11198 /opt/kubernetes/bin/etcd
Jun 06 10:11:53 linux-node1.example.com etcd[11198]: set the initial cluster version to 3.0
Jun 06 10:11:53 linux-node1.example.com etcd[11198]: enabled capabilities for version 3.0
Jun 06 10:11:55 linux-node1.example.com etcd[11198]: peer ce7b884e428b6c8c became active
Jun 06 10:11:55 linux-node1.example.com etcd[11198]: established a TCP streaming connection with pe...er)
Jun 06 10:11:55 linux-node1.example.com etcd[11198]: established a TCP streaming connection with pe...er)
Jun 06 10:11:55 linux-node1.example.com etcd[11198]: established a TCP streaming connection with pe...er)
Jun 06 10:11:55 linux-node1.example.com etcd[11198]: established a TCP streaming connection with pe...er)
Jun 06 10:11:57 linux-node1.example.com etcd[11198]: updating the cluster version from 3.0 to 3.2
Jun 06 10:11:57 linux-node1.example.com etcd[11198]: updated the cluster version from 3.0 to 3.2
Jun 06 10:11:57 linux-node1.example.com etcd[11198]: enabled capabilities for version 3.2
Hint: Some lines were ellipsized, use -l to show in full.
#Check ports 2379 and 2380
[root@linux-node1 ssl]# netstat -ntlp
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 192.168.56.11:2379 0.0.0.0:* LISTEN 11198/etcd
tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 11198/etcd
tcp 0 0 192.168.56.11:2380 0.0.0.0:* LISTEN 11198/etcd
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 874/sshd
tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 974/master
tcp6 0 0 :::22 :::* LISTEN 874/sshd
tcp6 0 0 ::1:25 :::* LISTEN 974/master

Repeat the steps above on every etcd node until the etcd service is running on all machines.

7. Verify the cluster

[root@linux-node1 ~]# etcdctl --endpoints=https://192.168.56.11:2379 \
  --ca-file=/opt/kubernetes/ssl/ca.pem \
  --cert-file=/opt/kubernetes/ssl/etcd.pem \
  --key-file=/opt/kubernetes/ssl/etcd-key.pem cluster-health
member 435fb0a8da627a4c is healthy: got healthy result from https://192.168.56.12:2379
member 6566e06d7343e1bb is healthy: got healthy result from https://192.168.56.11:2379
member ce7b884e428b6c8c is healthy: got healthy result from https://192.168.56.13:2379
cluster is healthy
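For scripted checks, the cluster-health output can be reduced to a member count and a pass/fail result. The following is a minimal sketch that parses text in the format shown above; the function names count_healthy_members and cluster_is_healthy are hypothetical, and the sample is the output copied from this step rather than a live query.

```shell
# Both helpers read etcdctl cluster-health output on stdin.
count_healthy_members() {
    grep -c '^member [0-9a-f]* is healthy'
}
cluster_is_healthy() {
    grep -q '^cluster is healthy$'
}

# Sample output copied from the verification step.
sample='member 435fb0a8da627a4c is healthy: got healthy result from https://192.168.56.12:2379
member 6566e06d7343e1bb is healthy: got healthy result from https://192.168.56.11:2379
member ce7b884e428b6c8c is healthy: got healthy result from https://192.168.56.13:2379
cluster is healthy'

healthy=$(printf '%s\n' "$sample" | count_healthy_members)
if printf '%s\n' "$sample" | cluster_is_healthy && [ "$healthy" -eq 3 ]; then
    echo "etcd: $healthy members healthy"
fi
```

Against a running cluster, the etcdctl command from this step would be piped into the helpers in place of the sample.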

VII. Deploying the K8S Master Node

Deploying the Kubernetes API server

0. Prepare the binaries

#Copy the binaries (note: on linux-node1)

[root@linux-node1 bin]# cd /usr/local/src/kubernetes/server/bin
[root@linux-node1 bin]# ll
total 2016824
-rwxr-xr-x 1 root root 58245918 Apr 12 23:16 apiextensions-apiserver
-rwxr-xr-x 1 root root 131966577 Apr 12 23:16 cloud-controller-manager
-rw-r--r-- 1 root root 8 Apr 12 23:16 cloud-controller-manager.docker_tag
-rw-r--r-- 1 root root 133343232 Apr 12 23:16 cloud-controller-manager.tar
-rwxr-xr-x 1 root root 266422752 Apr 12 23:16 hyperkube
-rwxr-xr-x 1 root root 156493057 Apr 12 23:16 kubeadm
-rwxr-xr-x 1 root root 57010027 Apr 12 23:16 kube-aggregator
-rw-r--r-- 1 root root 8 Apr 12 23:16 kube-aggregator.docker_tag
-rw-r--r-- 1 root root 58386432 Apr 12 23:16 kube-aggregator.tar
-rwxr-xr-x 1 root root 223882554 Apr 12 23:16 kube-apiserver
-rw-r--r-- 1 root root 8 Apr 12 23:16 kube-apiserver.docker_tag
-rw-r--r-- 1 root root 225259008 Apr 12 23:16 kube-apiserver.tar
-rwxr-xr-x 1 root root 146695941 Apr 12 23:16 kube-controller-manager
-rw-r--r-- 1 root root 8 Apr 12 23:16 kube-controller-manager.docker_tag
-rw-r--r-- 1 root root 148072448 Apr 12 23:16 kube-controller-manager.tar
-rwxr-xr-x 1 root root 54277604 Apr 12 23:17 kubectl
-rwxr-xr-x 1 root root 152789584 Apr 12 23:16 kubelet
-rwxr-xr-x 1 root root 51343381 Apr 12 23:16 kube-proxy
-rw-r--r-- 1 root root 8 Apr 12 23:16 kube-proxy.docker_tag
-rw-r--r-- 1 root root 98919936 Apr 12 23:16 kube-proxy.tar
-rwxr-xr-x 1 root root 49254848 Apr 12 23:16 kube-scheduler
-rw-r--r-- 1 root root 8 Apr 12 23:16 kube-scheduler.docker_tag
-rw-r--r-- 1 root root 50631168 Apr 12 23:16 kube-scheduler.tar
-rwxr-xr-x 1 root root 2165591 Apr 12 23:16 mounter
[root@linux-node1 bin]# cp kube-apiserver kube-controller-manager kube-scheduler /opt/kubernetes/bin/

1. Create the JSON configuration file used to generate the CSR

[root@linux-node1 bin]# cd /usr/local/src/ssl/
[root@linux-node1 ssl]# ll
total 599132
-rw-r--r-- 1 root root 290 Jun 6 09:15 ca-config.json
-rw-r--r-- 1 root root 1001 Jun 6 09:17 ca.csr
-rw-r--r-- 1 root root 208 Jun 6 09:16 ca-csr.json
-rw------- 1 root root 1679 Jun 6 09:17 ca-key.pem
-rw-r--r-- 1 root root 1359 Jun 6 09:17 ca.pem
-rw-r--r-- 1 root root 6595195 Mar 30 2016 cfssl-certinfo_linux-amd64
-rw-r--r-- 1 root root 2277873 Mar 30 2016 cfssljson_linux-amd64
-rw-r--r-- 1 root root 10376657 Mar 30 2016 cfssl_linux-amd64
-rw-r--r-- 1 root root 17108856 Apr 12 17:35 cni-plugins-amd64-v0.7.1.tgz
-rw-r--r-- 1 root root 1062 Jun 6 09:59 etcd.csr
-rw-r--r-- 1 root root 287 Jun 6 09:59 etcd-csr.json
-rw------- 1 root root 1675 Jun 6 09:59 etcd-key.pem
-rw-r--r-- 1 root root 1436 Jun 6 09:59 etcd.pem
drwxr-xr-x 3 478493 89939 117 Mar 30 01:49 etcd-v3.2.18-linux-amd64
-rw-r--r-- 1 root root 10562874 Mar 30 01:58 etcd-v3.2.18-linux-amd64.tar.gz
-rw-r--r-- 1 root root 9706487 Jan 24 02:58 flannel-v0.10.0-linux-amd64.tar.gz
-rw-r--r-- 1 root root 13344537 Apr 13 01:51 kubernetes-client-linux-amd64.tar.gz
-rw-r--r-- 1 root root 112427817 Apr 13 01:51 kubernetes-node-linux-amd64.tar.gz
-rw-r--r-- 1 root root 428337777 Apr 13 01:51 kubernetes-server-linux-amd64.tar.gz
-rw-r--r-- 1 root root 2716855 Apr 13 01:51 kubernetes.tar.gz

[root@linux-node1 ssl]# vim kubernetes-csr.json
{
  "CN": "kubernetes",
  "hosts": [
    "127.0.0.1",
    "192.168.56.11",
    "10.1.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
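The hosts list contains 10.1.0.1 because Kubernetes assigns the first address of the service range to the built-in kubernetes service, and the API server in this guide is started with --service-cluster-ip-range=10.1.0.0/16. As a sketch of that arithmetic, the function below derives the first address from a CIDR; the name first_service_ip is hypothetical.

```shell
# Print the first usable address (network address + 1) of an IPv4 CIDR.
first_service_ip() {
    cidr=$1
    ip=${cidr%/*}
    prefix=${cidr#*/}
    # Split the dotted quad into the positional parameters $1..$4.
    old_ifs=$IFS
    IFS=.
    set -- $ip
    IFS=$old_ifs
    addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    first=$(( (addr & mask) + 1 ))
    echo "$(( (first >> 24) & 255 )).$(( (first >> 16) & 255 )).$(( (first >> 8) & 255 )).$(( first & 255 ))"
}

first_service_ip 10.1.0.0/16      # prints 10.1.0.1
```

If a different service range is chosen, the matching first address has to replace 10.1.0.1 in the hosts list before the certificate is generated.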

2. Generate the kubernetes certificate and private key

[root@linux-node1 src]# cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem \
  -ca-key=/opt/kubernetes/ssl/ca-key.pem \
  -config=/opt/kubernetes/ssl/ca-config.json \
  -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes
[root@linux-node1 src]# cp kubernetes*.pem /opt/kubernetes/ssl/
[root@linux-node1 ~]# scp kubernetes*.pem 192.168.56.12:/opt/kubernetes/ssl/
[root@linux-node1 ~]# scp kubernetes*.pem 192.168.56.13:/opt/kubernetes/ssl/

3. Create the client token file used by kube-apiserver

[root@linux-node1 ~]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '
ad6d5bb607a186796d8861557df0d17f
[root@linux-node1 ~]# vim /opt/kubernetes/ssl/bootstrap-token.csv
ad6d5bb607a186796d8861557df0d17f,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
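The token is 16 random bytes printed as 32 hexadecimal characters, and the CSV line has the form token,user,uid,"group". The two manual steps can be combined as in the following sketch; the function name make_bootstrap_token and the variable csv_line are hypothetical, and the sketch prints the line instead of writing the real file.

```shell
# Generate 16 random bytes and print them as 32 lowercase hex characters.
make_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

token=$(make_bootstrap_token)

# Same field layout as the bootstrap-token.csv line above.
csv_line="$token,kubelet-bootstrap,10001,\"system:kubelet-bootstrap\""
echo "$csv_line"
```

Every run produces a different token, so the value written to bootstrap-token.csv must be the same one used later when the kubelet bootstrap configuration is created.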

4. Create the basic username/password authentication file

[root@linux-node1 ~]# vim /opt/kubernetes/ssl/basic-auth.csv
admin,admin,1
readonly,readonly,2

5. Deploy the Kubernetes API Server

[root@linux-node1 ~]# vim /usr/lib/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target
[Service]
ExecStart=/opt/kubernetes/bin/kube-apiserver \
  --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota,NodeRestriction \
  --bind-address=192.168.56.11 \
  --insecure-bind-address=127.0.0.1 \
  --authorization-mode=Node,RBAC \
  --runtime-config=rbac.authorization.k8s.io/v1 \
  --kubelet-https=true \
  --anonymous-auth=false \
  --basic-auth-file=/opt/kubernetes/ssl/basic-auth.csv \
  --enable-bootstrap-token-auth \
  --token-auth-file=/opt/kubernetes/ssl/bootstrap-token.csv \
  --service-cluster-ip-range=10.1.0.0/16 \
  --service-node-port-range=20000-40000 \
  --tls-cert-file=/opt/kubernetes/ssl/kubernetes.pem \
  --tls-private-key-file=/opt/kubernetes/ssl/kubernetes-key.pem \
  --client-ca-file=/opt/kubernetes/ssl/ca.pem \
  --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
  --etcd-cafile=/opt/kubernetes/ssl/ca.pem \
  --etcd-certfile=/opt/kubernetes/ssl/kubernetes.pem \
  --etcd-keyfile=/opt/kubernetes/ssl/kubernetes-key.pem \
  --etcd-servers=https://192.168.56.11:2379,https://192.168.56.12:2379,https://192.168.56.13:2379 \
  --enable-swagger-ui=true \
  --allow-privileged=true \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-path=/opt/kubernetes/log/api-audit.log \
  --event-ttl=1h \
  --v=2 \
  --logtostderr=false \
  --log-dir=/opt/kubernetes/log
Restart=on-failure
RestartSec=5
Type=notify
LimitNOFILE=65536
[Install]
WantedBy=multi-user.target

6. Start the API Server service

[root@linux-node1 ~]# systemctl daemon-reload
[root@linux-node1 ~]# systemctl enable kube-apiserver
[root@linux-node1 ~]# systemctl start kube-apiserver
Check the API Server service status:
[root@linux-node1 ~]# systemctl status kube-apiserver

#Check port 6443 (note: kube-apiserver)

[root@linux-node1 ssl]# netstat -lntup
Active Internet connections (only servers)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 192.168.56.11:6443 0.0.0.0:* LISTEN 11331/kube-apiserve
tcp 0 0 192.168.56.11:2379 0.0.0.0:* LISTEN 11198/etcd
tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 11198/etcd
tcp 0 0 192.168.56.11:2380 0.0.0.0:* LISTEN 11198/etcd
tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 11331/kube-apiserve
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 874/sshd
tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 974/master
tcp6 0 0 :::22 :::* LISTEN 874/sshd
tcp6 0 0 ::1:25 :::* LISTEN 974/master
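The API server should be listening on two sockets: the secure port 192.168.56.11:6443 and the local insecure port 127.0.0.1:8080 that the controller manager and scheduler use. As a minimal sketch, the check can be automated by filtering netstat-style output; the function name port_is_listening is hypothetical and the sample rows are copied from the listing above.

```shell
# Succeeds if netstat -lnt style output on stdin contains a LISTEN socket
# bound to the given local address ($1, in address:port form).
port_is_listening() {
    awk -v target="$1" '
        $6 == "LISTEN" && $4 == target { found = 1 }
        END { exit !found }
    '
}

# Two rows from the listing in this step.
sample='tcp 0 0 192.168.56.11:6443 0.0.0.0:* LISTEN 11331/kube-apiserve
tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 11331/kube-apiserve'

for addr in 192.168.56.11:6443 127.0.0.1:8080; do
    if printf '%s\n' "$sample" | port_is_listening "$addr"; then
        echo "$addr is listening"
    fi
done
```

On the master itself, the live output of netstat -lnt can be piped into the function in place of the sample.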

Deploying the Controller Manager service

[root@linux-node1 ~]# vim /usr/lib/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
[Service]
ExecStart=/opt/kubernetes/bin/kube-controller-manager \
  --address=127.0.0.1 \
  --master=http://127.0.0.1:8080 \
  --allocate-node-cidrs=true \
  --service-cluster-ip-range=10.1.0.0/16 \
  --cluster-cidr=10.2.0.0/16 \
  --cluster-name=kubernetes \
  --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \
  --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \
  --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \
  --root-ca-file=/opt/kubernetes/ssl/ca.pem \
  --leader-elect=true \
  --v=2 \
  --logtostderr=false \
  --log-dir=/opt/kubernetes/log
Restart=on-failure
RestartSec=5
[Install]
WantedBy=multi-user.target

3. Start the Controller Manager

[root@linux-node1 ~]# systemctl daemon-reload
[root@linux-node1 scripts]# systemctl enable kube-controller-manager
[root@linux-node1 scripts]# systemctl start kube-controller-manager

4. Check the service status

[root@linux-node1 scripts]# systemctl status kube-controller-manager

Deploying the Kubernetes Scheduler

[root@linux-node1 ~]# vim /usr/lib/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
[Service]
ExecStart=/opt/kubernetes/bin/kube-scheduler \
  --address=127.0.0.1 \
  --master=http://127.0.0.1:8080 \
  --leader-elect=true \
  --v=2 \
  --logtostderr=false \
  --log-dir=/opt/kubernetes/log
Restart=on-failure
RestartSec=5
[Install]
WantedBy=multi-user.target

2. Enable and start the service

[root@linux-node1 ~]# systemctl daemon-reload
[root@linux-node1 scripts]# systemctl enable kube-scheduler
[root@linux-node1 scripts]# systemctl start kube-scheduler
[root@linux-node1 scripts]# systemctl status kube-scheduler

Deploying the kubectl command-line tool

1. Prepare the binary

[root@linux-node1 ~]# cd /usr/local/src/kubernetes/client/bin
[root@linux-node1 bin]# cp kubectl /opt/kubernetes/bin/

2. Create the admin certificate signing request

[root@linux-node1 ~]# cd /usr/local/src/ssl/
[root@linux-node1 ssl]# vim admin-csr.json
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}

3. Generate the admin certificate and private key

[root@linux-node1 ssl]# cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem \
  -ca-key=/opt/kubernetes/ssl/ca-key.pem \
  -config=/opt/kubernetes/ssl/ca-config.json \
  -profile=kubernetes admin-csr.json | cfssljson -bare admin
[root@linux-node1 ssl]# ls -l admin*
-rw-r--r-- 1 root root 1009 Mar 5 12:29 admin.csr
-rw-r--r-- 1 root root 229 Mar 5 12:28 admin-csr.json
-rw------- 1 root root 1675 Mar 5 12:29 admin-key.pem
-rw-r--r-- 1 root root 1399 Mar 5 12:29 admin.pem
[root@linux-node1 src]# mv admin*.pem /opt/kubernetes/ssl/

4. Set the cluster parameters

[root@linux-node1 ssl]# cd /usr/local/src/ssl

[root@linux-node1 src]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://192.168.56.11:6443
Cluster "kubernetes" set.

5. Set the client authentication parameters

[root@linux-node1 ssl]# kubectl config set-credentials admin \
  --client-certificate=/opt/kubernetes/ssl/admin.pem \
  --embed-certs=true \
  --client-key=/opt/kubernetes/ssl/admin-key.pem
User "admin" set.

6. Set the context parameters

[root@linux-node1 ssl]# kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=admin
Context "kubernetes" created.

7. Set the default context

[root@linux-node1 ssl]# kubectl config use-context kubernetes
Switched to context "kubernetes".

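#This creates the file .kube/config in the home directory. (Note: kubectl reads its cluster, user and context settings from this file. To use kubectl on another node, copy this file there; the steps above do not need to be repeated.)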

[root@linux-node1 ssl]# cd

[root@linux-node1 ~]# cat .kube/config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR2akNDQXFhZ0F3SUJBZ0lVZEZWY3lsY2ZNYWh6KzlJZ0dLNTBzc1pwbEJjd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pURUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFVcHBibWN4RURBT0JnTlZCQWNUQjBKbAphVXBwYm1jeEREQUtCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByCmRXSmxjbTVsZEdWek1CNFhEVEU0TURZd05qQXhNVEl3TUZvWERUSXpNRFl3TlRBeE1USXdNRm93WlRFTE1Ba0cKQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFVcHBibWN4RURBT0JnTlZCQWNUQjBKbGFVcHBibWN4RERBSwpCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByZFdKbGNtNWxkR1Z6Ck1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBN21taitneStOZklrTVFCYUZ1L2sKYWdFZVI5LzRrQ21ZZjJQdFl2U0lBeVNtRVNxa0pCY0t6Qk5FN0hDSis2cXQ5T3NIUG1RMC81OTNaODNTVlVNZQpSdWR3ano5d3orT2xHeGVnc1BmYlVIbUtudU1TUGQxU2YreitCOHpTUTQrY0ZrVEdzdEFUSURWU0hCQTJ3c25yCmx1S25hb0QxWnhTd3VOUUpmazVMYmtzZ0tLd3N1ZG9KbVZuZWNiVG9SVncyUmFDalRpRUx6ZksyWHVLNjlsMjUKK3g0VGlVZHl2cGdvc1dsVE9JTW5mN2JGdUJZeXlyeXUvN2gwZFdKdDljS25GVUt4cU9jS0dqSnlpUW9QQjl4WQpWaUxTcUJ2WEkvZk1CaFFKWDUyYkdhSEVMdzBvQnR2UXRqUVM0OXVJSEY0NmNMeGdiMkNUVGRkbUZVSUZVd0NvCjdRSURBUUFCbzJZd1pEQU9CZ05WSFE4QkFmOEVCQU1DQVFZd0VnWURWUjBUQVFIL0JBZ3dCZ0VCL3dJQkFqQWQKQmdOVkhRNEVGZ1FVcWVMbGdIVGdiN1JGMzNZZm8rS0h6QjhUWmc4d0h3WURWUjBqQkJnd0ZvQVVxZUxsZ0hUZwpiN1JGMzNZZm8rS0h6QjhUWmc4d0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFGVDc5ZnlOekdBUlNrVlI0bHV6ClpDblRRTloyMVAxeTRVTlhXOXQ2VUw5aC9GYXZxdnNEMlRkQmsyaFZGR1lkbVpCcXQ0ejlyWkREZk5aWnBkUXEKdXczcjdMdWNpSDVGWGFqRGFMNGN5VUROMUVDVjB2d0dsUTUycTZyeTFaMmFOSmlPdnNZL0h5MW5mdFJuV0k5aQp2QVBVcXRNeUx4NFN2WjlXUGtaZnZVRVV6NVVROXFyYmRHeHViMzdQZ2JmRURZcWh3SzJmQkJSMGR4RjRhN3hpClVFaVJXUTNtajNhMmpRZVdhK2JZVU10S204WHY0eFBQZ1FTMWdMWFZoOVN0QTROdXRHTVBuT3lkZmorM0Rab2oKbGU4SHZsaTYwT3FkVDJsaUhyam1Objh5MENxS0RxV3VEb2lsSFRtTXl1NHFGeHlOUG0yUDl2NTRHSnhPcjFhMApTN2c9Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

server: https://192.168.56.11:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: admin

name: kubernetes

current-context: kubernetes

kind: Config

preferences: {}

users:

- name: admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQzVENDQXNXZ0F3SUJBZ0lVT3UrbFBUWjhEWGJRaDNML2NKUTlTL3pmNTk4d0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pURUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFVcHBibWN4RURBT0JnTlZCQWNUQjBKbAphVXBwYm1jeEREQUtCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByCmRXSmxjbTVsZEdWek1CNFhEVEU0TURZd05qQXpNak13TUZvWERURTVNRFl3TmpBek1qTXdNRm93YXpFTE1Ba0cKQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFVcHBibWN4RURBT0JnTlZCQWNUQjBKbGFVcHBibWN4RnpBVgpCZ05WQkFvVERuTjVjM1JsYlRwdFlYTjBaWEp6TVE4d0RRWURWUVFMRXdaVGVYTjBaVzB4RGpBTUJnTlZCQU1UCkJXRmtiV2x1TUlJQklqQU5CZ2txaGtpRzl3MEJBUUVGQUFPQ0FROEFNSUlCQ2dLQ0FRRUF5RmExSGpaUHhvY3gKdlFuL1ZuQkcwN2RHWVJmTTljRm9EcGFnYnV6TElLa1gzS29hWm41cXVBWTJaVlZnZENySDhCNHFVaytiMlZwKwo5OW5xNCtMWlVpY2xGVkhET2dKME5ZR3o1QkJscCs5c1FkZ1QvdEgvWTRzbEtsOUNzSnluazBJZ0JIbStJTERJCkNxL0hiUUJGbnN6VTBlVy9GeTIvV0tDMXBEbndpdk1WMmdGYzFWSm52Mk9sVUhMZ2dHc2NKMldRTnpQVUN0a0oKbnJtNzBKVzJmODJyeUFVSmxwWTZlYWVHZVBjbWk3TXVOZUtRVnVtM3F2bnZkTEVxVWxZdGM3eHI1Qy9GVEhwQwp5eUhtUWpydVpzc3RHb0pBanJOaXQrbU9seW9UaHh6UDVkT2J4cnZXandYd3BDL0lRSE1Ic2xVU0tXOC9aaFZJClkreHBvakFMNHdJREFRQUJvMzh3ZlRBT0JnTlZIUThCQWY4RUJBTUNCYUF3SFFZRFZSMGxCQll3RkFZSUt3WUIKQlFVSEF3RUdDQ3NHQVFVRkJ3TUNNQXdHQTFVZEV3RUIvd1FDTUFBd0hRWURWUjBPQkJZRUZFcjdPYkZCem5QOQpXTjFxY1pPTEZVYVFzUWJMTUI4R0ExVWRJd1FZTUJhQUZLbmk1WUIwNEcrMFJkOTJINlBpaDh3ZkUyWVBNQTBHCkNTcUdTSWIzRFFFQkN3VUFBNElCQVFDRVAyK202NXJiaEQwbHBTMGVLKzBrZG8ySTk4dWpqaWw5UjJGL1UzYXYKeXEyR2FxL2l2YXg4WVdKVXhTUWpDczZwbmkvd05SelFBdEVRazI5M0FVcUNiNUhzWkx5dHMvQTlmTWpSWFpiQgpaTWtnaDN6L2Rxb1JuTmRKVmV3WmlsTW9UNWZrNDdRU0xFbi80MXIyTmJxRXovdUlIWHZuOFVscnZwNFdabGJLCnFZUDFrWnBJZVJmVHB3STViNkZOTWdLcUl0VktmVnk4MnhVcHJFamZaTkJrbnh6WituNytyQkgrdE5waWttS2QKU2UrdG5nbysrcjFOdFVVK3RMelBkLzFFZnNmUG1qZEtOYUxMKzhsZjkrejNkTXptSlJBSUYvY0poSFVLVXFJagoyUEZpT1ZmeHNxV2htZ1pTWXEzNm5QdlAvb0RXWFlZSXRVRHNVMUxzSWxLTQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb2dJQkFBS0NBUUVBeUZhMUhqWlB4b2N4dlFuL1ZuQkcwN2RHWVJmTTljRm9EcGFnYnV6TElLa1gzS29hClpuNXF1QVkyWlZWZ2RDckg4QjRxVWsrYjJWcCs5OW5xNCtMWlVpY2xGVkhET2dKME5ZR3o1QkJscCs5c1FkZ1QKL3RIL1k0c2xLbDlDc0p5bmswSWdCSG0rSUxESUNxL0hiUUJGbnN6VTBlVy9GeTIvV0tDMXBEbndpdk1WMmdGYwoxVkpudjJPbFVITGdnR3NjSjJXUU56UFVDdGtKbnJtNzBKVzJmODJyeUFVSmxwWTZlYWVHZVBjbWk3TXVOZUtRClZ1bTNxdm52ZExFcVVsWXRjN3hyNUMvRlRIcEN5eUhtUWpydVpzc3RHb0pBanJOaXQrbU9seW9UaHh6UDVkT2IKeHJ2V2p3WHdwQy9JUUhNSHNsVVNLVzgvWmhWSVkreHBvakFMNHdJREFRQUJBb0lCQUM2RnpDdUc2MEdzRllYVApzNExzTWRacWdpSjc5M0craHg2VUpnOThWN051OFFhaVRkMHRVRFVKNUVubDZLOHhYQnJMdG9KRTBHbEtGYUFTCjcvUVpzdVBjQ1VXSkppL3JiL2NZOXFCb21tTEVPN3lTcEJvUnhCL21xU3ZNMFZ6WUZDWWpQZklzSDFYU0Y3STcKbmJFWFZoT0pkNGFDdHJ4NE9DNHBxK1RHTzdEWVZuUW9ONGdac2x3NnZMWDRyRG84V2RsU2xiMnNxMS9LcDNpRwpNcEhOR1NQUHN3eWtyNG1hckxBUnJqb0ZmcDdoVWFiREtjT1lGT1IrMHZOa3cxbzUrRXdqVDYwK3VjdFZ3WnRLCjdxZG9pWTBnZ1IvNllPOFNZUmRtWXJMOE5MTlJ6U2JJUTJkTnZjbHY5WFJtYk5zK1BSSThMRE1PREMreElSL2cKWHlXblNva0NnWUVBLzBPV2VOL0lUeFR1ZEJVaERBYW5LSk1HSmcvd29RZHRUY0FRQS9vb2FpZzNEMmp5aUM1MApiYnBRdkJwcVVDWjJzL0NPUU9NM1Z5cllWR2tybGF3VVFsSGpPR0piVm9yNjZKNTMvSnBhbm5PSDNoelR6TWh5Cis3NTA3alNNcHZGclJWS0d2dmlwQ01uRkVrSHAzaXdqbzF4UWRiS085dzBSRUJkWEtFMHI4T2NDZ1lFQXlPcVUKTlZFK2ZxTHY1ekN5OStjQ05ISjRqNlNvSC9zZ3FDK2xMTUxOWmVVWEJua1ZCbzY3bTFrbEQvcmZoT1FhRWMrQQpORnQxMEJsQ0lVNmtpU1RkUk9lTm5FSnNwVnNtRlhGVnlwa3IzNDYwb01BR0V5RTI5bjdmVWlrMHlSZUNta0ozCnpibEh1S3lEakp4T3p2UFlCamJQa1NnaDZoWTI5SlBQM0FTd0lhVUNnWUFLSW5yVTdiMmNOaTZKZVZWSWp2TVEKRDFaTktKRGJNOXBKSGZrRXoyZlBYeTFnZFVBNzIreFBkdmhCbjZMYzc4b0N0dWhPOXpaNVJZQTFTYityUDV2RwpUazRCTFJhSFJ4bFRKd2VJaGZCWEhpc2t6R3cwVXprTmViQld6TXRRellEK3pabi85d3R2QitQRko4ekxQMkZpCjJRVnd4dGdhUXZDTWZRQyszdUdCdlFLQmdERURxU3hvcVlwVFRadGs4Z1F3UXdWd2Q2RHpWbUNXN3h5WW92OE0KZHZkSXNCbFFLS1QwNVNlODA2SFdYZmtaZkpLandHOEZjUFJYZFI2VEJPakFLWXJKd201QWRpalExN1diZElaOApYNHVtVU1KMmxnVE1zWS9vMjZvN2l6a1RselR5eWk5UjZBRlJkTFkwMjdUNUg5WkVRTmIwcDNGb0FmZ2dwekRSCm8vWlJBb0dBV3RaTHFRVEJhN25xa1Nza2Z
2Y1BLQlRNTExXNEprdkQ4ZUYwUFplOFY5ajJxNWRZeHNKUXA4Q1cKUGl0S0QyZW0yYjJPdDV2UkhZdFh3bE9NQ1VIc3VpTHNRZzdwZ0cwVUo4NWdsb3E0SkdaRmFZblcxZHNicVUvQQpyT1pERCt1akgzRHhqUG1xWCtsNE9ZN3ZHa2ZrdVkvYlM3Z1ptNjAyQlZlK0dQRS9lMUE9Ci0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

8. Use kubectl to check the status of the cluster components

[root@linux-node1 ~]# kubectl get cs
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-1               Healthy   {"health":"true"}
etcd-2               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}

IX. Node Deployment

Deploy kubelet

1. Prepare the binaries: copy the packages from linux-node1 to linux-node2 and linux-node3.

[root@linux-node1 ~]# cd /usr/local/src/kubernetes/server/bin/
[root@linux-node1 bin]# cp kubelet kube-proxy /opt/kubernetes/bin/
[root@linux-node1 bin]# scp kubelet kube-proxy 192.168.56.12:/opt/kubernetes/bin/
[root@linux-node1 bin]# scp kubelet kube-proxy 192.168.56.13:/opt/kubernetes/bin/

2. Create the role binding

[root@linux-node1 ~]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap
clusterrolebinding "kubelet-bootstrap" created

3. Create the kubelet bootstrapping kubeconfig file

First cd into the /usr/local/src/ssl/ directory. Every kubectl config command in this step writes to the relative path bootstrap.kubeconfig, so all of them must be run from the same directory.

Set the cluster parameters

[root@linux-node1 ~]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://192.168.56.11:6443 \
  --kubeconfig=bootstrap.kubeconfig
Cluster "kubernetes" set.

Set the client authentication parameters

[root@linux-node1 ~]# kubectl config set-credentials kubelet-bootstrap \
  --token=ad6d5bb607a186796d8861557df0d17f \
  --kubeconfig=bootstrap.kubeconfig
User "kubelet-bootstrap" set.

Set the context parameters


[root@linux-node1 ~]# kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig
Context "default" created.

Select the default context

[root@linux-node1 ~]# kubectl config use-context default --kubeconfig=bootstrap.kubeconfig
Switched to context "default".
# Copy the generated bootstrap.kubeconfig to /opt/kubernetes/cfg
[root@linux-node1 ssl]# cd /usr/local/src/ssl
[root@linux-node1 ssl]# ll
total 55376
-rw-r--r-- 1 root root 1009 Jun 6 11:27 admin.csr
-rw-r--r-- 1 root root 229 Jun 6 11:27 admin-csr.json
-rw------- 1 root root 256 Jun 6 11:52 bootstrap.kubeconfig
[root@linux-node1 ssl]# cp bootstrap.kubeconfig /opt/kubernetes/cfg
[root@linux-node1 ssl]# scp bootstrap.kubeconfig 192.168.56.12:/opt/kubernetes/cfg
[root@linux-node1 ssl]# scp bootstrap.kubeconfig 192.168.56.13:/opt/kubernetes/cfg
# Whenever a node is added later, this file must also be copied into that node's /opt/kubernetes/cfg directory, for example:
scp bootstrap.kubeconfig 192.168.56.13:/opt/kubernetes/cfg
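Since the same file has to reach every node, and again for each node added later, the copy can be wrapped in a small loop. The following is only a sketch: the function name distribute and the DRY_RUN switch are hypothetical and not part of the original procedure. By default it prints the scp commands instead of running them.

```shell
# Hypothetical helper (not from the original tutorial): copy one file to the
# same destination directory on several nodes.
# DRY_RUN defaults to 1, which only prints the scp commands; set DRY_RUN=0
# to actually copy.
distribute() {
  local file="$1" dest="$2"
  shift 2
  local node
  for node in "$@"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "scp $file $node:$dest"
    else
      scp "$file" "$node:$dest"
    fi
  done
}
```

For example, distribute bootstrap.kubeconfig /opt/kubernetes/cfg 192.168.56.12 192.168.56.13 prints the two scp commands used above.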

Deploy kubelet

1. Set up CNI support (Note: every node needs this. linux-node1 does not strictly need it; we only prepare the configuration there and then send it to linux-node2 and linux-node3, where the IP addresses must be changed to match each node.)

# Note: run on linux-node1

# Note: CNI is the network plugin interface used by k8s

[root@linux-node1 ~]# mkdir -p /etc/cni/net.d
[root@linux-node1 ~]# vim /etc/cni/net.d/10-default.conf
{
    "name": "flannel",
    "type": "flannel",
    "delegate": {
        "bridge": "docker0",
        "isDefaultGateway": true,
        "mtu": 1400
    }
}
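Instead of typing the file in vim on each node, the same content could be written non-interactively. This is a minimal sketch; the function name write_cni_conf and its optional directory argument are hypothetical, and the JSON body is copied from the step above.

```shell
# Hypothetical helper: write the flannel CNI configuration shown above.
# The target directory defaults to /etc/cni/net.d; passing another
# directory makes it easy to try the function without touching the system.
write_cni_conf() {
  local dir="${1:-/etc/cni/net.d}"
  mkdir -p "$dir"
  cat > "$dir/10-default.conf" <<'EOF'
{
    "name": "flannel",
    "type": "flannel",
    "delegate": {
        "bridge": "docker0",
        "isDefaultGateway": true,
        "mtu": 1400
    }
}
EOF
}
```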

2. Create the kubelet directory (Note: do this on all three nodes; linux-node1 may be skipped.)

[root@linux-node1 ~]# mkdir /var/lib/kubelet

3. Create the kubelet service configuration (Note: this service does not need to be started on linux-node1, only on linux-node2 and linux-node3.)

[root@linux-node1 ~]# vim /usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/opt/kubernetes/bin/kubelet \
  --address=192.168.56.11 \
  --hostname-override=192.168.56.11 \
  --pod-infra-container-image=mirrorgooglecontainers/pause-amd64:3.0 \
  --experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
  --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
  --cert-dir=/opt/kubernetes/ssl \
  --network-plugin=cni \
  --cni-conf-dir=/etc/cni/net.d \
  --cni-bin-dir=/opt/kubernetes/bin/cni \
  --cluster-dns=10.1.0.2 \
  --cluster-domain=cluster.local. \
  --hairpin-mode hairpin-veth \
  --allow-privileged=true \
  --fail-swap-on=false \
  --logtostderr=true \
  --v=2 \
  --logtostderr=false \
  --log-dir=/opt/kubernetes/log
Restart=on-failure
RestartSec=5

# Copy the file to the other two nodes (Note: run on linux-node1)

[root@linux-node1 ~]# scp /usr/lib/systemd/system/kubelet.service 192.168.56.12:/usr/lib/systemd/system/kubelet.service
kubelet.service 100% 911 1.2MB/s 00:00
[root@linux-node1 ~]# scp /usr/lib/systemd/system/kubelet.service 192.168.56.13:/usr/lib/systemd/system/kubelet.service
kubelet.service 100% 911 871.2KB/s 00:00

# Change the IP addresses in the unit file

# On linux-node2

[root@linux-node2 ~]# cat /usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/opt/kubernetes/bin/kubelet \
  --address=192.168.56.12 \
  --hostname-override=192.168.56.12 \
  --pod-infra-container-image=mirrorgooglecontainers/pause-amd64:3.0 \
  --experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
  --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
  --cert-dir=/opt/kubernetes/ssl \
  --network-plugin=cni \
  --cni-conf-dir=/etc/cni/net.d \
  --cni-bin-dir=/opt/kubernetes/bin/cni \
  --cluster-dns=10.1.0.2 \
  --cluster-domain=cluster.local. \
  --hairpin-mode hairpin-veth \
  --allow-privileged=true \
  --fail-swap-on=false \
  --logtostderr=true \
  --v=2 \
  --logtostderr=false \
  --log-dir=/opt/kubernetes/log
Restart=on-failure
RestartSec=5

# On linux-node3

[root@linux-node3 ~]# cat /usr/lib/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=/var/lib/kubelet
ExecStart=/opt/kubernetes/bin/kubelet \
  --address=192.168.56.13 \
  --hostname-override=192.168.56.13 \
  --pod-infra-container-image=mirrorgooglecontainers/pause-amd64:3.0 \
  --experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \
  --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \
  --cert-dir=/opt/kubernetes/ssl \
  --network-plugin=cni \
  --cni-conf-dir=/etc/cni/net.d \
  --cni-bin-dir=/opt/kubernetes/bin/cni \
  --cluster-dns=10.1.0.2 \
  --cluster-domain=cluster.local. \
  --hairpin-mode hairpin-veth \
  --allow-privileged=true \
  --fail-swap-on=false \
  --logtostderr=true \
  --v=2 \
  --logtostderr=false \
  --log-dir=/opt/kubernetes/log
Restart=on-failure
RestartSec=5
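The only difference between the three unit files is the node IP in two flags, so the manual edit can be replaced by a substitution. The sketch below is an assumption-laden illustration: set_node_ip is a hypothetical name, and it relies on sed -E (available in GNU and BSD sed). It also rewrites --bind-address so that the same function could be reused for the kube-proxy unit.

```shell
# Hypothetical helper: read a unit file on stdin and write it to stdout with
# the IP in --address, --bind-address and --hostname-override replaced.
# Every other line (for example --cluster-dns) is passed through unchanged.
set_node_ip() {
  local ip="$1"
  sed -E "s/(--(address|bind-address|hostname-override)=)[0-9.]+/\1${ip}/"
}
```

A possible use on linux-node1 would be: set_node_ip 192.168.56.12 < /usr/lib/systemd/system/kubelet.service > kubelet.service.node2, followed by copying the result to the node.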

4. Start kubelet (Note: do this only on linux-node2 and linux-node3, not on linux-node1. Remember this!)

[root@linux-node2 ~]# systemctl daemon-reload
[root@linux-node2 ~]# systemctl enable kubelet
[root@linux-node2 ~]# systemctl start kubelet

5. Check the service status

[root@linux-node2 kubernetes]# systemctl status kubelet

6. View the CSR requests (note: run this on linux-node1)

[root@linux-node1 kubelet]# kubectl get csr
NAME                                                   AGE   REQUESTOR           CONDITION
node-csr-1Rk_X4QeuIoxV9HSFkFPZ0QYRQbVBjakoKWvQgyMok4   2h    kubelet-bootstrap   Pending
node-csr-4ZD5a2sCg4bNPnqVP3rfDgB0HdDxLEoX68xgSgq8ZGw   18m   kubelet-bootstrap   Pending
node-csr-5eqgT8WQ3TyTmugHsNWgw8fhaiweNjyYsFIit1QqTYE   2h    kubelet-bootstrap   Pending
node-csr-Kd2ucPEd3vhd28B3E-sT5AmPR0GBIMTiPPDwmJGXk2s   1h    kubelet-bootstrap   Pending
node-csr-oIt1v6SLET2anyA81iH6SK8G_s_lKN_bKhaxl4gXJ1A   1h    kubelet-bootstrap   Pending
node-csr-rXgzaG0yW6CWLfr-wR-5nWbU6Qh-sid3ErY4JhrKajY   1h    kubelet-bootstrap   Pending
node-csr-vzJDdTnDUMqA6j7VPe_gLWLfIepokV7aGx2I5ctliIE   47m   kubelet-bootstrap   Pending
node-csr-yfcnMD70P6K-yscOZ9URmrwqRPvFqWGGJtuSrqixFTE   1h    kubelet-bootstrap   Pending

7. Approve the kubelet TLS certificate requests (Note: run on linux-node1)

[root@linux-node1 ~]# kubectl get csr|grep 'Pending' | awk 'NR>0{print $1}'| xargs kubectl certificate approve
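The pipeline above selects every line that contains the word Pending. A slightly stricter variant, sketched here under the assumption that the output has the four columns shown in step 6, matches only on the last column and skips the header row. The name pending_csrs is hypothetical.

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# NAME of each row whose last column (CONDITION) is exactly "Pending".
# NR > 1 skips the header line.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Possible usage on linux-node1 (not executed here):
#   kubectl get csr | pending_csrs | xargs kubectl certificate approve
```

Rows that are already approved show a different CONDITION value and are left out, so running the command again only touches new requests.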

# On linux-node2, the approved certificates are now generated

[root@linux-node2 ssl]# ll
total 48
-rw-r--r-- 1 root root 290 Jun 6 09:18 ca-config.json
-rw-r--r-- 1 root root 1001 Jun 6 09:18 ca.csr
-rw------- 1 root root 1679 Jun 6 09:18 ca-key.pem
-rw-r--r-- 1 root root 1359 Jun 6 09:18 ca.pem
-rw------- 1 root root 1675 Jun 6 10:01 etcd-key.pem
-rw-r--r-- 1 root root 1436 Jun 6 10:01 etcd.pem
-rw-r--r-- 1 root root 1046 Jun 6 15:35 kubelet-client.crt
-rw------- 1 root root 227 Jun 6 15:35 kubelet-client.key
-rw-r--r-- 1 root root 2185 Jun 6 12:28 kubelet.crt
-rw------- 1 root root 1679 Jun 6 12:28 kubelet.key
-rw------- 1 root root 1679 Jun 6 10:58 kubernetes-key.pem
-rw-r--r-- 1 root root 1610 Jun 6 10:58 kubernetes.pem

Once this is done, the nodes show the Ready status (Note: run on linux-node1)

[root@linux-node1 kubelet]# kubectl get node
NAME            STATUS    ROLES     AGE       VERSION
192.168.56.12   Ready     <none>    52s       v1.10.1
192.168.56.13   Ready     <none>    52s       v1.10.1

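Deploy Kubernetes Proxy

1. Configure kube-proxy to use LVS (Note: we install on linux-node1, generate the certificate there, and then copy it to the other nodes. The packages below are needed on every node that runs kube-proxy.)

[root@linux-node2 ~]# yum install -y ipvsadm ipset conntrack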

2. Create the kube-proxy certificate signing request

[root@linux-node1 ~]# cd /usr/local/src/ssl/
[root@linux-node1 ~]# vim kube-proxy-csr.json
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

3. Generate the certificate

[root@linux-node1 ~]# cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem \
  -ca-key=/opt/kubernetes/ssl/ca-key.pem \
  -config=/opt/kubernetes/ssl/ca-config.json \
  -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

4. Distribute the certificates to all Node machines

[root@linux-node1 ssl]# cp kube-proxy*.pem /opt/kubernetes/ssl/
[root@linux-node1 ssl]# scp kube-proxy*.pem 192.168.56.12:/opt/kubernetes/ssl/
[root@linux-node1 ssl]# scp kube-proxy*.pem 192.168.56.13:/opt/kubernetes/ssl/

5. Create the kube-proxy configuration file

[root@linux-node1 ssl]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://192.168.56.11:6443 \
  --kubeconfig=kube-proxy.kubeconfig
# Output
Cluster "kubernetes" set.
[root@linux-node1 ssl]# kubectl config set-credentials kube-proxy \
  --client-certificate=/opt/kubernetes/ssl/kube-proxy.pem \
  --client-key=/opt/kubernetes/ssl/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig
# Output
User "kube-proxy" set.
[root@linux-node1 ssl]# kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig
# Output
Context "default" created.
[root@linux-node1 ssl]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
# Output
Switched to context "default".

6. Distribute the kubeconfig file

[root@linux-node1 ssl]# cp kube-proxy.kubeconfig /opt/kubernetes/cfg/
[root@linux-node1 ~]# scp kube-proxy.kubeconfig 192.168.56.12:/opt/kubernetes/cfg/
[root@linux-node1 ~]# scp kube-proxy.kubeconfig 192.168.56.13:/opt/kubernetes/cfg/

7. Create the kube-proxy service configuration (Note: do this on all three nodes)

[root@linux-node1 bin]# mkdir /var/lib/kube-proxy
[root@linux-node1 ~]# vim /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy \
  --bind-address=192.168.56.11 \
  --hostname-override=192.168.56.11 \
  --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig \
  --masquerade-all \
  --feature-gates=SupportIPVSProxyMode=true \
  --proxy-mode=ipvs \
  --ipvs-min-sync-period=5s \
  --ipvs-sync-period=5s \
  --ipvs-scheduler=rr \
  --logtostderr=true \
  --v=2 \
  --logtostderr=false \
  --log-dir=/opt/kubernetes/log
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

# Distribute to the other nodes and change the IP addresses

[root@linux-node1 ssl]# scp /usr/lib/systemd/system/kube-proxy.service 192.168.56.12:/usr/lib/systemd/system/kube-proxy.service

kube-proxy.service 100% 699 258.2KB/s 00:00

[root@linux-node1 ssl]# scp /usr/lib/systemd/system/kube-proxy.service 192.168.56.13:/usr/lib/systemd/system/kube-proxy.service

kube-proxy.service 100% 699 509.9KB/s 00:00

# Change the IP address on linux-node2

[root@linux-node2 ssl]# vi /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy \
  --bind-address=192.168.56.12 \
  --hostname-override=192.168.56.12 \
  --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig \
  --masquerade-all \
  --feature-gates=SupportIPVSProxyMode=true \
  --proxy-mode=ipvs \
  --ipvs-min-sync-period=5s \
  --ipvs-sync-period=5s \
  --ipvs-scheduler=rr \
  --logtostderr=true \
  --v=2 \
  --logtostderr=false \
  --log-dir=/opt/kubernetes/log
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

# Change the IP address on linux-node3

[root@linux-node3 ~]# vi /usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy \
  --bind-address=192.168.56.13 \
  --hostname-override=192.168.56.13 \
  --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig \
  --masquerade-all \
  --feature-gates=SupportIPVSProxyMode=true \
  --proxy-mode=ipvs \
  --ipvs-min-sync-period=5s \
  --ipvs-sync-period=5s \
  --ipvs-scheduler=rr \
  --logtostderr=true \
  --v=2 \
  --logtostderr=false \
  --log-dir=/opt/kubernetes/log
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

8. Start Kubernetes Proxy (Note: only on linux-node2 and linux-node3)

[root@linux-node2 ~]# systemctl daemon-reload
[root@linux-node2 ~]# systemctl enable kube-proxy
[root@linux-node2 ~]# systemctl start kube-proxy

9. Check the kube-proxy service status (Note: only on linux-node2 and linux-node3)

[root@linux-node2 ssl]# systemctl status kube-proxy
● kube-proxy.service - Kubernetes Kube-Proxy Server
   Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)
   Active: active (running) since Wed 2018-06-06 16:07:20 CST; 15s ago
     Docs: https://github.com/GoogleCloudPlatform/kubernetes
 Main PID: 16526 (kube-proxy)
    Tasks: 0
   Memory: 8.2M
   CGroup: /system.slice/kube-proxy.service
           ‣ 16526 /opt/kubernetes/bin/kube-proxy --bind-address=192.168.56.12 --hostname-override=19...

Jun 06 16:07:20 linux-node2.example.com systemd[1]: Started Kubernetes Kube-Proxy Server.
Jun 06 16:07:20 linux-node2.example.com systemd[1]: Starting Kubernetes Kube-Proxy Server...

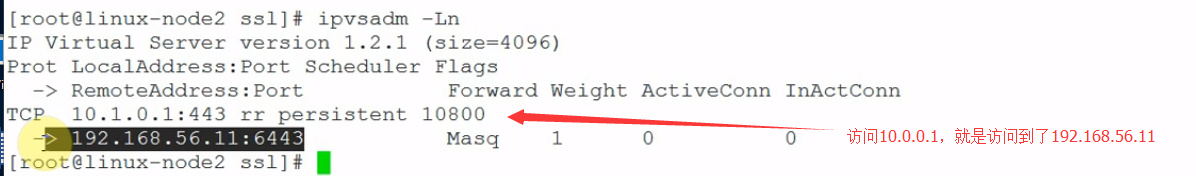

# Check the LVS status (Note: only on linux-node2)

[root@linux-node2 ~]# ipvsadm -L -n
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.1.0.1:443 rr persistent 10800
  -> 192.168.56.11:6443           Masq    1      0          0

# If kubelet and kube-proxy are installed on both machines, the following command checks their status. (Note: only on linux-node1)

[root@linux-node1 ssl]# kubectl get node
NAME            STATUS    ROLES     AGE       VERSION
192.168.56.12   Ready     <none>    34m       v1.10.1
192.168.56.13   Ready     <none>    34m       v1.10.1

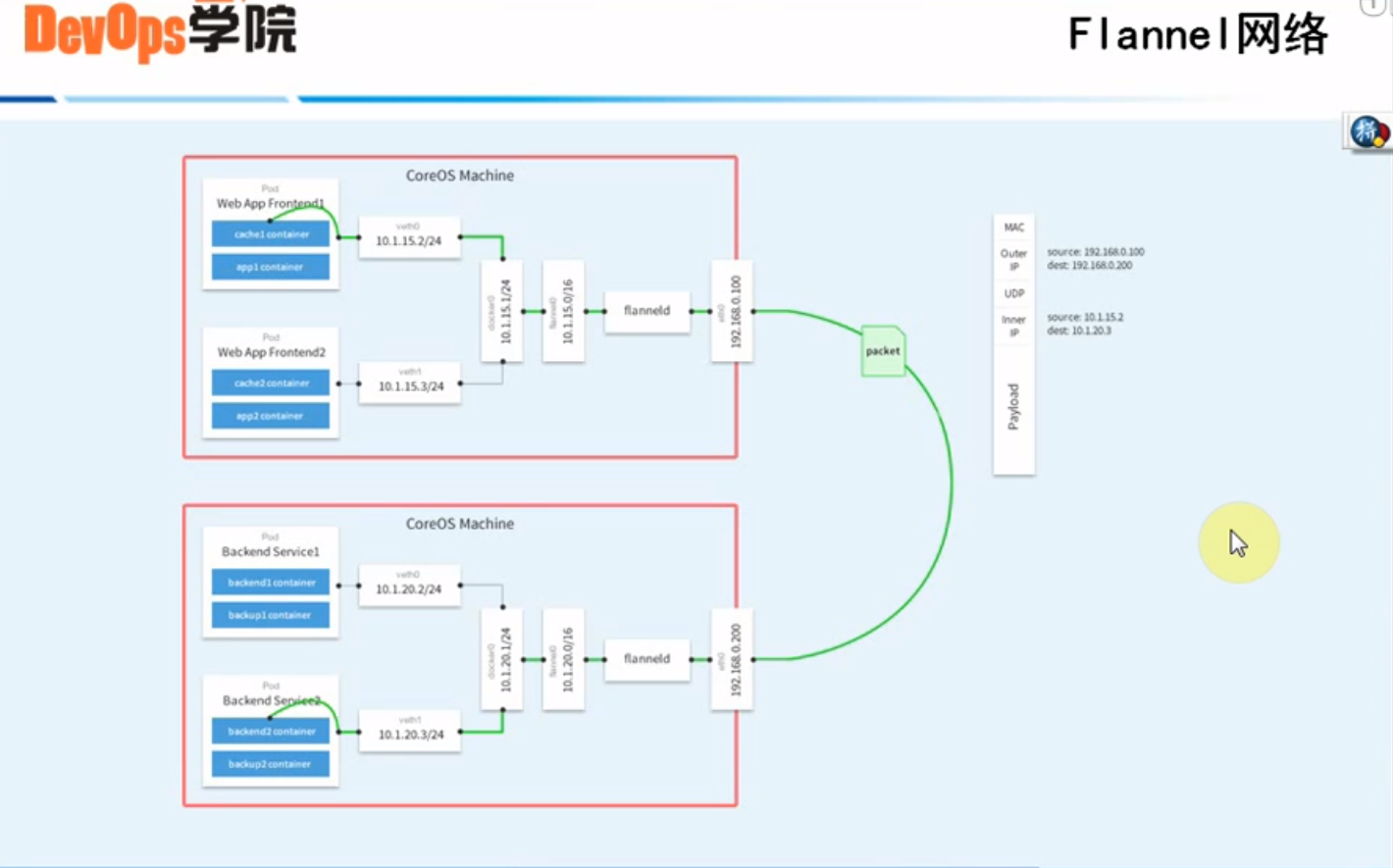

X. Flannel Network Deployment

1. Create the certificate signing request for Flannel

[root@linux-node1 ssl]# cd /usr/local/src/ssl
[root@linux-node1 ssl]# vim flanneld-csr.json
{
  "CN": "flanneld",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

2. Generate the certificate

[root@linux-node1 ~]# cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem \
  -ca-key=/opt/kubernetes/ssl/ca-key.pem \
  -config=/opt/kubernetes/ssl/ca-config.json \
  -profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld

# View the generated certificates


[root@linux-node1 ssl]# ll
-rw-r--r-- 1 root root 997 Jun 6 16:51 flanneld.csr
-rw-r--r-- 1 root root 221 Jun 6 16:50 flanneld-csr.json
-rw------- 1 root root 1675 Jun 6 16:51 flanneld-key.pem
-rw-r--r-- 1 root root 1391 Jun 6 16:51 flanneld.pem

3. Distribute the certificates

[root@linux-node1 ~]# cp flanneld*.pem /opt/kubernetes/ssl/
[root@linux-node1 ~]# scp flanneld*.pem 192.168.56.12:/opt/kubernetes/ssl/
[root@linux-node1 ~]# scp flanneld*.pem 192.168.56.13:/opt/kubernetes/ssl/

4. Download the Flannel package

Download address: https://github.com/coreos/flannel/releases

[root@linux-node1 ~]# cd /usr/local/src
# wget https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz
[root@linux-node1 src]# tar zxf flannel-v0.10.0-linux-amd64.tar.gz
[root@linux-node1 src]# cp flanneld mk-docker-opts.sh /opt/kubernetes/bin/
# Copy to the linux-node2 and linux-node3 nodes
[root@linux-node1 src]# scp flanneld mk-docker-opts.sh 192.168.56.12:/opt/kubernetes/bin/
[root@linux-node1 src]# scp flanneld mk-docker-opts.sh 192.168.56.13:/opt/kubernetes/bin/
# Copy the helper script into /opt/kubernetes/bin
[root@linux-node1 ~]# cd /usr/local/src/kubernetes/cluster/centos/node/bin/
[root@linux-node1 bin]# cp remove-docker0.sh /opt/kubernetes/bin/
[root@linux-node1 bin]# scp remove-docker0.sh 192.168.56.12:/opt/kubernetes/bin/
[root@linux-node1 bin]# scp remove-docker0.sh 192.168.56.13:/opt/kubernetes/bin/

5. Configure Flannel

[root@linux-node1 ~]# vim /opt/kubernetes/cfg/flannel
FLANNEL_ETCD="-etcd-endpoints=https://192.168.56.11:2379,https://192.168.56.12:2379,https://192.168.56.13:2379"
FLANNEL_ETCD_KEY="-etcd-prefix=/kubernetes/network"
FLANNEL_ETCD_CAFILE="--etcd-cafile=/opt/kubernetes/ssl/ca.pem"
FLANNEL_ETCD_CERTFILE="--etcd-certfile=/opt/kubernetes/ssl/flanneld.pem"
FLANNEL_ETCD_KEYFILE="--etcd-keyfile=/opt/kubernetes/ssl/flanneld-key.pem"
# Copy the configuration to the other nodes
[root@linux-node1 ~]# scp /opt/kubernetes/cfg/flannel 192.168.56.12:/opt/kubernetes/cfg/
[root@linux-node1 ~]# scp /opt/kubernetes/cfg/flannel 192.168.56.13:/opt/kubernetes/cfg/

6. Set up the Flannel system service

[root@linux-node1 ~]# vim /usr/lib/systemd/system/flannel.service
[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
Before=docker.service

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/flannel
ExecStartPre=/opt/kubernetes/bin/remove-docker0.sh
ExecStart=/opt/kubernetes/bin/flanneld ${FLANNEL_ETCD} ${FLANNEL_ETCD_KEY} ${FLANNEL_ETCD_CAFILE} ${FLANNEL_ETCD_CERTFILE} ${FLANNEL_ETCD_KEYFILE}
ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -d /run/flannel/docker
Type=notify

[Install]
WantedBy=multi-user.target
RequiredBy=docker.service
# Copy the service unit to the other nodes
# scp /usr/lib/systemd/system/flannel.service 192.168.56.12:/usr/lib/systemd/system/
# scp /usr/lib/systemd/system/flannel.service 192.168.56.13:/usr/lib/systemd/system/

Flannel CNI integration

Download the CNI plugins (Note: only on linux-node1)

https://github.com/containernetworking/plugins/releases
wget https://github.com/containernetworking/plugins/releases/download/v0.7.1/cni-plugins-amd64-v0.7.1.tgz
[root@linux-node1 ~]# mkdir /opt/kubernetes/bin/cni
[root@linux-node1 bin]# cd /usr/local/src/
[root@linux-node1 src]# ll
total 615788
-rw-r--r-- 1 root root 17108856 Apr 12 17:35 cni-plugins-amd64-v0.7.1.tgz
[root@linux-node1 src]# tar zxf cni-plugins-amd64-v0.7.1.tgz -C /opt/kubernetes/bin/cni
# scp -r /opt/kubernetes/bin/cni/* 192.168.56.12:/opt/kubernetes/bin/cni/
# scp -r /opt/kubernetes/bin/cni/* 192.168.56.13:/opt/kubernetes/bin/cni/

Create the etcd key (Note: only on linux-node1)

/opt/kubernetes/bin/etcdctl --ca-file /opt/kubernetes/ssl/ca.pem --cert-file /opt/kubernetes/ssl/flanneld.pem --key-file /opt/kubernetes/ssl/flanneld-key.pem \
  --no-sync -C https://192.168.56.11:2379,https://192.168.56.12:2379,https://192.168.56.13:2379 \
  mk /kubernetes/network/config '{ "Network": "10.2.0.0/16", "Backend": { "Type": "vxlan", "VNI": 1 }}' >/dev/null 2>&1
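The value stored under /kubernetes/network/config is a small JSON document. If the pod network or backend needs to differ between environments, the string can be built from parameters rather than edited by hand. This is a minimal sketch; flannel_net_config is a hypothetical name, and the defaults (vxlan, VNI 1) simply mirror the command above.

```shell
# Hypothetical helper: print the flannel network configuration JSON.
#   $1 = pod network CIDR (required)
#   $2 = backend type, defaults to vxlan
#   $3 = VNI, defaults to 1
flannel_net_config() {
  local network="$1" backend="${2:-vxlan}" vni="${3:-1}"
  printf '{ "Network": "%s", "Backend": { "Type": "%s", "VNI": %s }}\n' \
    "$network" "$backend" "$vni"
}
```

Calling flannel_net_config 10.2.0.0/16 reproduces the JSON passed to etcdctl mk above. Because that command sends its output to /dev/null, reading the key back with etcdctl get and the same TLS options is one way to confirm that it was stored.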

Start flannel (Note: do this on all three nodes)

[root@linux-node1 ~]# systemctl daemon-reload
[root@linux-node1 ~]# systemctl enable flannel
[root@linux-node1 ~]# chmod +x /opt/kubernetes/bin/*
[root@linux-node1 ~]# systemctl start flannel
# Check that it started
[root@linux-node1 src]# systemctl status flannel
● flannel.service - Flanneld overlay address etcd agent
   Loaded: loaded (/usr/lib/systemd/system/flannel.service; enabled; vendor preset: disabled)
   Active: active (running) since Wed 2018-06-06 17:11:07 CST; 10s ago
  Process: 12241 ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -d /run/flannel/docker (code=exited, status=0/SUCCESS)
  Process: 12222 ExecStartPre=/opt/kubernetes/bin/remove-docker0.sh (code=exited, status=0/SUCCESS)
 Main PID: 12229 (flanneld)
    Tasks: 8
   Memory: 6.8M
   CGroup: /system.slice/flannel.service
           ├─12229 /opt/kubernetes/bin/flanneld -etcd-endpoints=https://192.168.56.11:2379,https://19...
           └─12269 /usr/sbin/iptables -t filter -C FORWARD -s 10.2.0.0/16 -j ACCEPT --wait

Jun 06 17:11:07 linux-node1.example.com flanneld[12229]: I0606 17:11:07.154891 12229 main.go:300]...nv
Jun 06 17:11:07 linux-node1.example.com flanneld[12229]: I0606 17:11:07.154919 12229 main.go:304]...d.
Jun 06 17:11:07 linux-node1.example.com flanneld[12229]: I0606 17:11:07.155314 12229 vxlan_networ...es
Jun 06 17:11:07 linux-node1.example.com flanneld[12229]: I0606 17:11:07.165985 12229 main.go:396]...se
Jun 06 17:11:07 linux-node1.example.com flanneld[12229]: I0606 17:11:07.182140 12229 iptables.go:...es
Jun 06 17:11:07 linux-node1.example.com flanneld[12229]: I0606 17:11:07.182172 12229 iptables.go:...PT
Jun 06 17:11:07 linux-node1.example.com systemd[1]: Started Flanneld overlay address etcd agent.
Jun 06 17:11:07 linux-node1.example.com flanneld[12229]: I0606 17:11:07.185239 12229 iptables.go:...PT
Jun 06 17:11:07 linux-node1.example.com flanneld[12229]: I0606 17:11:07.187837 12229 iptables.go:...PT
Jun 06 17:11:07 linux-node1.example.com flanneld[12229]: I0606 17:11:07.198623 12229 iptables.go:...PT
Hint: Some lines were ellipsized, use -l to show in full.

# Check the network

# On linux-node1, the flannel.1 interface is in a different subnet from the ones on linux-node2 and linux-node3. This is the result of the Flannel overlay network, which gives each node its own subnet.

[root@linux-node1 src]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    inet 192.168.56.11 netmask 255.255.255.0 broadcast 192.168.56.255
    inet6 fe80::20c:29ff:fef0:1471 prefixlen 64 scopeid 0x20<link>
    ether 00:0c:29:f0:14:71 txqueuelen 1000 (Ethernet)
    RX packets 2556030 bytes 1567940286 (1.4 GiB)
    RX errors 0 dropped 0 overruns 0 frame 0
    TX packets 2303039 bytes 1516846451 (1.4 GiB)
    TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450
    inet 10.2.17.0 netmask 255.255.255.255 broadcast 0.0.0.0
    inet6 fe80::84f5:daff:fe22:4e34 prefixlen 64 scopeid 0x20<link>
    ether 86:f5:da:22:4e:34 txqueuelen 0 (Ethernet)
    RX packets 0 bytes 0 (0.0 B)
    RX errors 0 dropped 0 overruns 0 frame 0
    TX packets 0 bytes 0 (0.0 B)
    TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0
lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
    inet 127.0.0.1 netmask 255.0.0.0
    inet6 ::1 prefixlen 128 scopeid 0x10<host>
    loop txqueuelen 1000 (Local Loopback)
    RX packets 619648 bytes 160911710 (153.4 MiB)
    RX errors 0 dropped 0 overruns 0 frame 0
    TX packets 619648 bytes 160911710 (153.4 MiB)
    TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

# linux-node2

[root@linux-node2 ssl]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    inet 192.168.56.12 netmask 255.255.255.0 broadcast 192.168.56.255
    inet6 fe80::20c:29ff:fe48:f776 prefixlen 64 scopeid 0x20<link>
    ether 00:0c:29:48:f7:76 txqueuelen 1000 (Ethernet)
    RX packets 1959862 bytes 616981595 (588.3 MiB)
    RX errors 0 dropped 0 overruns 0 frame 0
    TX packets 1734270 bytes 251652241 (239.9 MiB)
    TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450
    inet 10.2.89.0 netmask 255.255.255.255 broadcast 0.0.0.0
    inet6 fe80::58f4:1eff:fe30:70d3 prefixlen 64 scopeid 0x20<link>
    ether 5a:f4:1e:30:70:d3 txqueuelen 0 (Ethernet)
    RX packets 0 bytes 0 (0.0 B)
    RX errors 0 dropped 0 overruns 0 frame 0
    TX packets 0 bytes 0 (0.0 B)
    TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0
lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
    inet 127.0.0.1 netmask 255.0.0.0
    inet6 ::1 prefixlen 128 scopeid 0x10<host>
    loop txqueuelen 1000 (Local Loopback)
    RX packets 3383 bytes 182706 (178.4 KiB)
    RX errors 0 dropped 0 overruns 0 frame 0
    TX packets 3383 bytes 182706 (178.4 KiB)
    TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

# linux-node3

[root@linux-node3 ~]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
    inet 192.168.56.13 netmask 255.255.255.0 broadcast 192.168.56.255
    inet6 fe80::20c:29ff:fe11:e6df prefixlen 64 scopeid 0x20<link>
    ether 00:0c:29:11:e6:df txqueuelen 1000 (Ethernet)
    RX packets 1387972 bytes 553335974 (527.7 MiB)
    RX errors 0 dropped 0 overruns 0 frame 0
    TX packets 1130801 bytes 152441609 (145.3 MiB)
    TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450
    inet 10.2.15.0 netmask 255.255.255.255 broadcast 0.0.0.0
    inet6 fe80::10d1:d1ff:fee8:b46a prefixlen 64 scopeid 0x20<link>
    ether 12:d1:d1:e8:b4:6a txqueuelen 0 (Ethernet)
    RX packets 0 bytes 0 (0.0 B)
    RX errors 0 dropped 0 overruns 0 frame 0
    TX packets 0 bytes 0 (0.0 B)
    TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0
lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
    inet 127.0.0.1 netmask 255.0.0.0
    inet6 ::1 prefixlen 128 scopeid 0x10<host>
    loop txqueuelen 1000 (Local Loopback)
    RX packets 3384 bytes 182758 (178.4 KiB)
    RX errors 0 dropped 0 overruns 0 frame 0
    TX packets 3384 bytes 182758 (178.4 KiB)
    TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Configure Docker to use Flannel

[root@linux-node1 ~]# vim /usr/lib/systemd/system/docker.service
[Unit]
# Under [Unit], add flannel.service to After and add a Requires line
After=network-online.target firewalld.service flannel.service
Wants=network-online.target
Requires=flannel.service

[Service]
Type=notify
# Docker waits until the flannel service is up and then loads DOCKER_OPTS
# Add this line:
EnvironmentFile=-/run/flannel/docker
# Add $DOCKER_OPTS to ExecStart:
ExecStart=/usr/bin/dockerd $DOCKER_OPTS

# What the settings above do: DOCKER_OPT_BIP sets the subnet of the docker bridge

[root@linux-node3 ~]# cat /run/flannel/docker
DOCKER_OPT_BIP="--bip=10.2.15.1/24"
DOCKER_OPT_IPMASQ="--ip-masq=true"
DOCKER_OPT_MTU="--mtu=1450"
DOCKER_OPTS=" --bip=10.2.15.1/24 --ip-masq=true --mtu=1450"
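To see what dockerd will receive through $DOCKER_OPTS on a given node, the file can be read directly. The sketch below sources the file in a subshell; this is an approximation of what systemd does with EnvironmentFile, which works here because the file consists of plain KEY="value" lines. The name docker_opts_from is hypothetical.

```shell
# Hypothetical helper: print the DOCKER_OPTS value defined in a file such as
# /run/flannel/docker. The parentheses run the body in a subshell so that the
# sourced variables do not leak into the calling shell.
docker_opts_from() {
  ( . "$1" && printf '%s\n' "$DOCKER_OPTS" )
}
```

On a node where flannel is running, docker_opts_from /run/flannel/docker should print the --bip, --ip-masq and --mtu options that flannel generated for that node.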

# The finished configuration file

[root@linux-node1 src]# cat /usr/lib/systemd/system/docker.service
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service flannel.service
Wants=network-online.target
Requires=flannel.service

[Service]
Type=notify
# the default is not to use systemd for cgroups because the delegate issues still
# exists and systemd currently does not support the cgroup feature set required
# for containers run by docker
EnvironmentFile=-/run/flannel/docker
ExecStart=/usr/bin/dockerd $DOCKER_OPTS
ExecReload=/bin/kill -s HUP $MAINPID
# Having non-zero Limit*s causes performance problems due to accounting overhead
# in the kernel. We recommend using cgroups to do container-local accounting.
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
# Uncomment TasksMax if your systemd version supports it.
# Only systemd 226 and above support this version.
#TasksMax=infinity
TimeoutStartSec=0
# set delegate yes so that systemd does not reset the cgroups of docker containers
Delegate=yes
# kill only the docker process, not all processes in the cgroup
KillMode=process
# restart the docker process if it exits prematurely
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target

Note: with this in place, docker and flannel are in the same subnet on each node.

# Note: run on linux-node1

[root@linux-node1 src]# scp /usr/lib/systemd/system/docker.service 192.168.56.12:/usr/lib/systemd/system/

# Output:

docker.service 100% 1231 600.5KB/s 00:00

[root@linux-node1 src]# scp /usr/lib/systemd/system/docker.service 192.168.56.13:/usr/lib/systemd/system/

# Output:

docker.service 100% 1231 409.2KB/s 00:00

# Restart Docker (Note: do this on all three nodes)

[root@linux-node1 ~]# systemctl daemon-reload
[root@linux-node1 ~]# systemctl restart docker

#查看网络,看docker和flannel是否在同一网络

#linux-node1

[root@linux-node1 src]# ifconfigdocker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500 inet 10.2.17.1 netmask 255.255.255.0 broadcast 10.2.17.255 ether 02:42:a2:76:9f:4e txqueuelen 0 (Ethernet) RX packets 0 bytes 0 (0.0 B) RX errors 0 dropped 0 overruns 0 frame 0 TX packets 0 bytes 0 (0.0 B) TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500 inet 192.168.56.11 netmask 255.255.255.0 broadcast 192.168.56.255 inet6 fe80::20c:29ff:fef0:1471 prefixlen 64 scopeid 0x20<link> ether 00:0c:29:f0:14:71 txqueuelen 1000 (Ethernet) RX packets 2641888 bytes 1581734791 (1.4 GiB) RX errors 0 dropped 0 overruns 0 frame 0 TX packets 2387326 bytes 1530381125 (1.4 GiB) TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450 inet 10.2.17.0 netmask 255.255.255.255 broadcast 0.0.0.0 inet6 fe80::84f5:daff:fe22:4e34 prefixlen 64 scopeid 0x20<link> ether 86:f5:da:22:4e:34 txqueuelen 0 (Ethernet) RX packets 0 bytes 0 (0.0 B) RX errors 0 dropped 0 overruns 0 frame 0 TX packets 0 bytes 0 (0.0 B) TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536 inet 127.0.0.1 netmask 255.0.0.0 inet6 ::1 prefixlen 128 scopeid 0x10<host> loop txqueuelen 1000 (Local Loopback) RX packets 654028 bytes 170396840 (162.5 MiB) RX errors 0 dropped 0 overruns 0 frame 0 TX packets 654028 bytes 170396840 (162.5 MiB) TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0 |

#linux-node2

[root@linux-node2 ssl]# ifconfigdocker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500 inet 10.2.89.1 netmask 255.255.255.0 broadcast 10.2.89.255 ether 02:42:95:ce:36:75 txqueuelen 0 (Ethernet) RX packets 0 bytes 0 (0.0 B) RX errors 0 dropped 0 overruns 0 frame 0 TX packets 0 bytes 0 (0.0 B) TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500 inet 192.168.56.12 netmask 255.255.255.0 broadcast 192.168.56.255 inet6 fe80::20c:29ff:fe48:f776 prefixlen 64 scopeid 0x20<link> ether 00:0c:29:48:f7:76 txqueuelen 1000 (Ethernet) RX packets 2081588 bytes 632483927 (603.1 MiB) RX errors 0 dropped 0 overruns 0 frame 0 TX packets 1857039 bytes 269722165 (257.2 MiB) TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450 inet 10.2.89.0 netmask 255.255.255.255 broadcast 0.0.0.0 inet6 fe80::58f4:1eff:fe30:70d3 prefixlen 64 scopeid 0x20<link> ether 5a:f4:1e:30:70:d3 txqueuelen 0 (Ethernet) RX packets 0 bytes 0 (0.0 B) RX errors 0 dropped 0 overruns 0 frame 0 TX packets 0 bytes 0 (0.0 B) TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536 inet 127.0.0.1 netmask 255.0.0.0 inet6 ::1 prefixlen 128 scopeid 0x10<host> loop txqueuelen 1000 (Local Loopback) RX packets 3543 bytes 191058 (186.5 KiB) RX errors 0 dropped 0 overruns 0 frame 0 TX packets 3543 bytes 191058 (186.5 KiB) TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0 |

#linux-node3

    [root@linux-node3 ~]# ifconfig
    docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
            inet 10.2.15.1 netmask 255.255.255.0 broadcast 10.2.15.255
            ether 02:42:cb:c9:d0:2c txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
    eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 192.168.56.13 netmask 255.255.255.0 broadcast 192.168.56.255
            inet6 fe80::20c:29ff:fe11:e6df prefixlen 64 scopeid 0x20<link>
            ether 00:0c:29:11:e6:df txqueuelen 1000 (Ethernet)
            RX packets 1459132 bytes 563513163 (537.4 MiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 1202411 bytes 163564565 (155.9 MiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
    flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450
            inet 10.2.15.0 netmask 255.255.255.255 broadcast 0.0.0.0
            inet6 fe80::10d1:d1ff:fee8:b46a prefixlen 64 scopeid 0x20<link>
            ether 12:d1:d1:e8:b4:6a txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0
    lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
            inet 127.0.0.1 netmask 255.0.0.0
            inet6 ::1 prefixlen 128 scopeid 0x10<host>
            loop txqueuelen 1000 (Local Loopback)
            RX packets 3544 bytes 191110 (186.6 KiB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 3544 bytes 191110 (186.6 KiB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

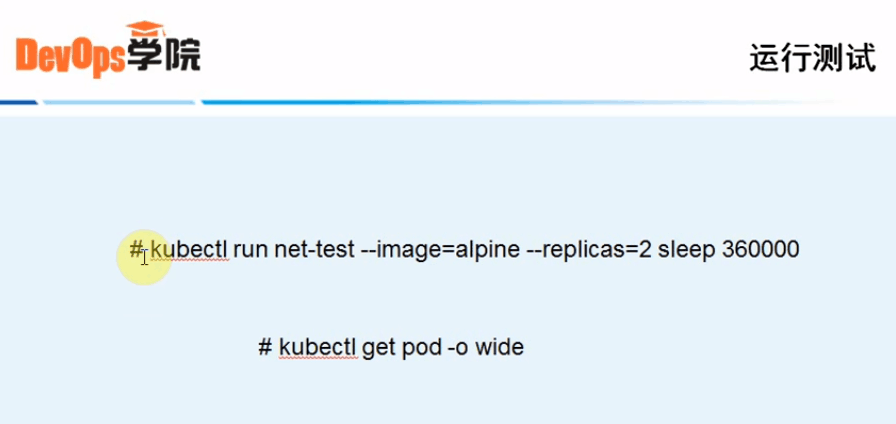

XI. Creating the First K8s Application

1. Create a test deployment

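    [root@linux-node1 ~]# kubectl run net-test --image=alpine --replicas=2 sleep 360000

#Note: this form works with the kubectl version used in this course. From kubectl v1.18 on, kubectl run creates a single Pod and no longer accepts --replicas; use kubectl create deployment or a Deployment manifest there.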

2. Check the assigned IPs

#On the first check the pods have no IP yet because the image is still being pulled. Wait a moment and check again.

    [root@linux-node1 ~]# kubectl get pod -o wide
    NAME                        READY   STATUS              RESTARTS   AGE   IP       NODE
    net-test-5767cb94df-f46xh   0/1     ContainerCreating   0          16s   <none>   192.168.56.13
    net-test-5767cb94df-hk68l   0/1     ContainerCreating   0          16s   <none>   192.168.56.12

#Check again; the pods now have IPs

    [root@linux-node1 ~]# kubectl get pod -o wide
    NAME                        READY   STATUS    RESTARTS   AGE   IP          NODE
    net-test-5767cb94df-f46xh   1/1     Running   0          48s   10.2.15.2   192.168.56.13
    net-test-5767cb94df-hk68l   1/1     Running   0          48s   10.2.89.2   192.168.56.12
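The pod IPs above line up with the per-node subnets visible in the earlier `ifconfig` output: each node's `docker0` bridge holds one /24, and a pod scheduled on that node receives an address from it. The following is a minimal Python sketch of that check, using only addresses that appear in this walkthrough. The helper name `node_for_pod_ip` is made up for illustration and is not part of any Kubernetes or flannel tooling.

```python
import ipaddress

# docker0 subnets read from the ifconfig output on each node
# (inet address combined with netmask 255.255.255.0).
NODE_SUBNETS = {
    "192.168.56.11": ipaddress.ip_network("10.2.17.0/24"),  # linux-node1
    "192.168.56.12": ipaddress.ip_network("10.2.89.0/24"),  # linux-node2
    "192.168.56.13": ipaddress.ip_network("10.2.15.0/24"),  # linux-node3
}


def node_for_pod_ip(pod_ip):
    """Return the node whose docker0 subnet contains pod_ip, or None."""
    addr = ipaddress.ip_address(pod_ip)
    for node, subnet in NODE_SUBNETS.items():
        if addr in subnet:
            return node
    return None


if __name__ == "__main__":
    # The two net-test pod IPs reported by `kubectl get pod -o wide`.
    for pod_ip in ("10.2.15.2", "10.2.89.2"):
        print(pod_ip, "->", node_for_pod_ip(pod_ip))
```

If a pod ever reports an IP outside the subnet of the node it runs on, that usually points at a flannel or docker0 configuration mismatch on that node.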

3. Test connectivity

#First docker container

    [root@linux-node1 ~]# ping 10.2.15.2
    PING 10.2.15.2 (10.2.15.2) 56(84) bytes of data.
    64 bytes from 10.2.15.2: icmp_seq=1 ttl=63 time=0.800 ms
    64 bytes from 10.2.15.2: icmp_seq=2 ttl=63 time=0.755 ms

#Second docker container

    [root@linux-node1 ~]# ping 10.2.89.2
    PING 10.2.89.2 (10.2.89.2) 56(84) bytes of data.
    64 bytes from 10.2.89.2: icmp_seq=1 ttl=63 time=0.785 ms
    64 bytes from 10.2.89.2: icmp_seq=2 ttl=63 time=0.698 ms

4. Test nginx

Download: https://github.com/unixhot/salt-kubernetes/tree/master/addons

#Write the nginx-deployment.yaml file

    [root@linux-node1 ~]# vim nginx-deployment.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.10.3
            ports:
            - containerPort: 80

#Create the deployment from nginx-deployment.yaml

    [root@linux-node1 ~]# kubectl create -f nginx-deployment.yaml
    deployment.apps "nginx-deployment" created

#Check the status: kubectl get deployment

    [root@linux-node1 ~]# kubectl get deployment
    NAME               DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    net-test           2         2         2            2           9m
    nginx-deployment   3         3         3            0           41s

#View the details of nginx-deployment

    [root@linux-node1 ~]# kubectl describe deployment nginx-deployment
    Name:                   nginx-deployment
    Namespace:              default
    CreationTimestamp:      Wed, 06 Jun 2018 17:48:01 +0800
    Labels:                 app=nginx
    Annotations:            deployment.kubernetes.io/revision=1
    Selector:               app=nginx
    Replicas:               3 desired | 3 updated | 3 total | 2 available | 1 unavailable
    StrategyType:           RollingUpdate
    MinReadySeconds:        0
    RollingUpdateStrategy:  25% max unavailable, 25% max surge
    Pod Template:
      Labels:  app=nginx
      Containers:
       nginx:
        Image:        nginx:1.10.3
        Port:         80/TCP
        Host Port:    0/TCP
        Environment:  <none>
        Mounts:       <none>
      Volumes:        <none>
    Conditions:
      Type           Status  Reason
      ----           ------  ------
      Available      False   MinimumReplicasUnavailable
      Progressing    True    ReplicaSetUpdated
    OldReplicaSets:  <none>
    NewReplicaSet:   nginx-deployment-75d56bb955 (3/3 replicas created)
    Events:
      Type    Reason             Age   From                   Message
      ----    ------             ----  ----                   -------
      Normal  ScalingReplicaSet  4m    deployment-controller  Scaled up replica set nginx-deployment-75d56bb955 to 3
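The `RollingUpdateStrategy: 25% max unavailable, 25% max surge` line in the output above is given in percentages, which Kubernetes resolves to whole pod counts: max unavailable is rounded down and max surge is rounded up. A short worked sketch of that arithmetic in Python follows. The function name `rolling_update_bounds` is an illustrative assumption, and the sketch covers only the percentage case shown here.

```python
def rolling_update_bounds(replicas, max_unavailable_pct=25, max_surge_pct=25):
    """Resolve percentage-based rolling-update limits to pod counts.

    max unavailable is rounded down, max surge is rounded up.
    Integer arithmetic avoids floating-point rounding surprises.
    """
    max_unavailable = (replicas * max_unavailable_pct) // 100
    max_surge = -((-replicas * max_surge_pct) // 100)  # ceiling division
    return max_unavailable, max_surge


if __name__ == "__main__":
    replicas = 3  # value from nginx-deployment.yaml
    unavailable, surge = rolling_update_bounds(replicas)
    print("pods that may be unavailable during an update:", unavailable)
    print("extra pods that may be created during an update:", surge)
    print("minimum available pods:", replicas - unavailable)
    print("maximum total pods:", replicas + surge)
```

For the 3-replica deployment in this section, 25% of 3 is 0.75, so no pod may be taken down before its replacement is ready, and at most one extra pod is created at a time.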

#View its pods

    [root@linux-node1 ~]# kubectl get pod
    NAME                                READY   STATUS    RESTARTS   AGE
    net-test-5767cb94df-f46xh           1/1     Running   0          14m
    net-test-5767cb94df-hk68l           1/1     Running   0          14m
    nginx-deployment-75d56bb955-l9ffw   1/1     Running   0          5m
    nginx-deployment-75d56bb955-tdf6w   1/1     Running   0          5m
    nginx-deployment-75d56bb955-xjxq5   1/1     Running   0          5m

#View the details of a pod

    [root@linux-node1 ~]# kubectl describe pod nginx-deployment-75d56bb955-l9ffw
    Name:           nginx-deployment-75d56bb955-l9ffw
    Namespace:      default
    Node:           192.168.56.12/192.168.56.12
    Start Time:     Wed, 06 Jun 2018 17:48:06 +0800
    Labels:         app=nginx
                    pod-template-hash=3181266511
    Annotations:    <none>
    Status:         Running
    IP:             10.2.89.3
    Controlled By:  ReplicaSet/nginx-deployment-75d56bb955
    Containers:
      nginx:
        Container ID:   docker://9e966e891c95c1cf7a09e4d1e89c7fb3fca0538a5fa3c52e68607c4bfb84a88e
        Image:          nginx:1.10.3
        Image ID:       docker-pullable://nginx@sha256:6202beb06ea61f44179e02ca965e8e13b961d12640101fca213efbfd145d7575
        Port:           80/TCP
        Host Port:      0/TCP
        State:          Running
          Started:      Wed, 06 Jun 2018 17:53:37 +0800
        Ready:          True
        Restart Count:  0
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-hph7x (ro)
    Conditions:
      Type           Status
      Initialized    True
      Ready          True
      PodScheduled   True
    Volumes:
      default-token-hph7x:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-hph7x
        Optional:    false
    QoS Class:       BestEffort
    Node-Selectors:  <none>
    Tolerations:     <none>
    Events:
      Type    Reason                 Age   From                    Message
      ----    ------                 ----  ----                    -------
      Normal  Scheduled              6m    default-scheduler       Successfully assigned nginx-deployment-75d56bb955-l9ffw to 192.168.56.12
      Normal  SuccessfulMountVolume  6m    kubelet, 192.168.56.12  MountVolume.SetUp succeeded for volume "default-token-hph7x"
      Normal  Pulling                6m    kubelet, 192.168.56.12  pulling image "nginx:1.10.3"
      Normal  Pulled                 1m    kubelet, 192.168.56.12  Successfully pulled image "nginx:1.10.3"
      Normal  Created                1m    kubelet, 192.168.56.12  Created container
      Normal  Started                1m    kubelet, 192.168.56.12  Started container

#View the IP addresses assigned to the pods

    [root@linux-node1 ~]# kubectl get pod -o wide
    NAME                                READY   STATUS    RESTARTS   AGE   IP          NODE
    net-test-5767cb94df-f46xh           1/1     Running   0          16m   10.2.15.2   192.168.56.13
    net-test-5767cb94df-hk68l           1/1     Running   0          16m   10.2.89.2   192.168.56.12
    nginx-deployment-75d56bb955-l9ffw   1/1     Running   0          8m    10.2.89.3   192.168.56.12
    nginx-deployment-75d56bb955-tdf6w   1/1     Running   0          8m    10.2.15.4   192.168.56.13
    nginx-deployment-75d56bb955-xjxq5   1/1     Running   0          8m    10.2.15.3   192.168.56.13

#Access nginx

#First nginx

    [root@linux-node1 ~]# curl --head http://10.2.89.3
    HTTP/1.1 200 OK
    Server: nginx/1.10.3
    Date: Wed, 06 Jun 2018 09:57:08 GMT
    Content-Type: text/html
    Content-Length: 612
    Last-Modified: Tue, 31 Jan 2017 15:01:11 GMT
    Connection: keep-alive
    ETag: "5890a6b7-264"
    Accept-Ranges: bytes

#Second nginx

    [root@linux-node1 ~]# curl --head http://10.2.15.4
    HTTP/1.1 200 OK
    Server: nginx/1.10.3
    Date: Wed, 06 Jun 2018 09:58:23 GMT
    Content-Type: text/html
    Content-Length: 612
    Last-Modified: Tue, 31 Jan 2017 15:01:11 GMT
    Connection: keep-alive
    ETag: "5890a6b7-264"
    Accept-Ranges: bytes

#Third nginx

    [root@linux-node1 ~]# curl --head http://10.2.15.3
    HTTP/1.1 200 OK
    Server: nginx/1.10.3
    Date: Wed, 06 Jun 2018 09:58:26 GMT
    Content-Type: text/html
    Content-Length: 612
    Last-Modified: Tue, 31 Jan 2017 15:01:11 GMT
    Connection: keep-alive
    ETag: "5890a6b7-264"
    Accept-Ranges: bytes

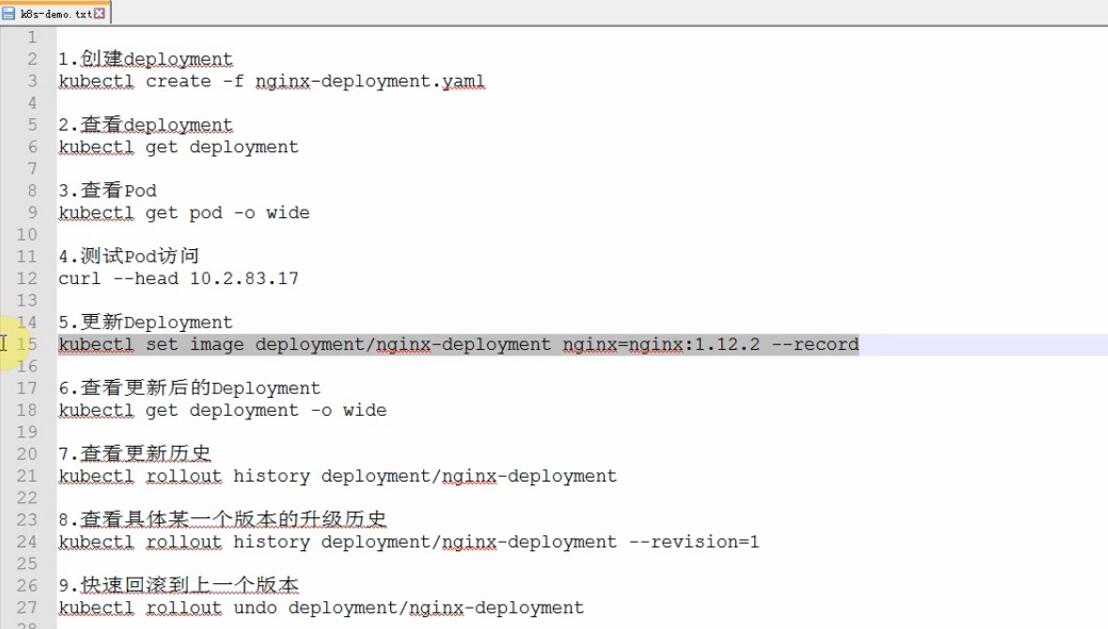

#Common commands

#Upgrade the nginx version of the deployment

Purpose: update the container image. --record: record the command as the change cause in the rollout history.

    [root@linux-node1 ~]# kubectl set image deployment/nginx-deployment nginx=nginx:1.12.2 --record
    #Output
    deployment.apps "nginx-deployment" image updated

#View the updated deployment

    [root@linux-node1 ~]# kubectl get deployment -o wide
    NAME               DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS   IMAGES         SELECTOR
    net-test           2         2         2            2           26m   net-test     alpine         run=net-test
    nginx-deployment   3         3         3            3           18m   nginx        nginx:1.12.2   app=nginx

#View the pod IP addresses

    [root@linux-node1 ~]# kubectl get pod -o wide
    NAME                                READY   STATUS    RESTARTS   AGE   IP          NODE
    net-test-5767cb94df-f46xh           1/1     Running   0          29m   10.2.15.2   192.168.56.13
    net-test-5767cb94df-hk68l           1/1     Running   0          29m   10.2.89.2   192.168.56.12
    nginx-deployment-7498dc98f8-8jft4   1/1     Running   0          3m    10.2.89.5   192.168.56.12
    nginx-deployment-7498dc98f8-fpv7g   1/1     Running   0          3m    10.2.15.5   192.168.56.13
    nginx-deployment-7498dc98f8-psvtr   1/1     Running   0          4m    10.2.89.4   192.168.56.12

#Access a pod to confirm that the version was updated

    [root@linux-node1 ~]# curl --head http://10.2.89.5
    HTTP/1.1 200 OK
    Server: nginx/1.12.2    #version upgraded successfully
    Date: Wed, 06 Jun 2018 10:09:01 GMT
    Content-Type: text/html
    Content-Length: 612
    Last-Modified: Tue, 11 Jul 2017 13:29:18 GMT
    Connection: keep-alive
    ETag: "5964d2ae-264"
    Accept-Ranges: bytes

#View the rollout history

    [root@linux-node1 ~]# kubectl rollout history deployment/nginx-deployment
    deployments "nginx-deployment"
    REVISION  CHANGE-CAUSE
    1         <none>    #revision 1 was created without --record=true, so no change cause was recorded
    2         kubectl set image deployment/nginx-deployment nginx=nginx:1.12.2 --record=true

#View the history of a specific revision (here, revision 1)

    [root@linux-node1 ~]# kubectl rollout history deployment/nginx-deployment --revision=1
    deployments "nginx-deployment" with revision #1
    Pod Template:
      Labels:       app=nginx
                    pod-template-hash=3181266511
      Containers:
       nginx:
        Image:        nginx:1.10.3
        Port:         80/TCP
        Host Port:    0/TCP
        Environment:  <none>
        Mounts:       <none>
      Volumes:        <none>

#Quickly roll back to the previous revision

    [root@linux-node1 ~]# kubectl rollout undo deployment/nginx-deployment
    deployment.apps "nginx-deployment"

#View the pod IP addresses; they change every time the pods are recreated

    [root@linux-node1 ~]# kubectl get pod -o wide
    NAME                                READY   STATUS    RESTARTS   AGE   IP          NODE
    net-test-5767cb94df-f46xh           1/1     Running   0          1h    10.2.15.2   192.168.56.13
    net-test-5767cb94df-hk68l           1/1     Running   0          1h    10.2.89.2   192.168.56.12
    nginx-deployment-75d56bb955-d8wr5   1/1     Running   0          2m    10.2.15.6   192.168.56.13
    nginx-deployment-75d56bb955-dvrgn   1/1     Running   0          1m    10.2.89.6   192.168.56.12
    nginx-deployment-75d56bb955-g9xtq   1/1     Running   0          1m    10.2.15.7   192.168.56.13

#Access one of the new IPs; the server is back on nginx 1.10.3

    [root@linux-node1 ~]# curl --head http://10.2.89.6
    HTTP/1.1 200 OK
    Server: nginx/1.10.3
    Date: Wed, 06 Jun 2018 10:53:25 GMT
    Content-Type: text/html
    Content-Length: 612
    Last-Modified: Tue, 31 Jan 2017 15:01:11 GMT
    Connection: keep-alive
    ETag: "5890a6b7-264"
    Accept-Ranges: bytes

#Create the nginx service (note: the service provides a VIP in front of the pods)

Write the nginx-service.yaml file:

    [root@linux-node1 ~]# cat nginx-service.yaml
    kind: Service
    apiVersion: v1
    metadata:
      name: nginx-service
    spec:
      selector:
        app: nginx
      ports:
      - protocol: TCP
        port: 80
        targetPort: 80
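The `selector: app: nginx` field in nginx-service.yaml is what ties the service to its pods: a pod becomes a backend when its labels contain every key/value pair of the selector. The Python sketch below illustrates that matching rule only; it does not talk to a cluster. The pod names and the `app`, `pod-template-hash` and `run` labels are taken from the outputs in this section, while the function names are made up for illustration.

```python
def selector_matches(selector, labels):
    """True when every key/value pair of the selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Selector from nginx-service.yaml.
SERVICE_SELECTOR = {"app": "nginx"}

# Pod labels as seen in this walkthrough: nginx pods carry app=nginx,
# the net-test pods are selected by run=net-test.
POD_LABELS = {
    "nginx-deployment-75d56bb955-d8wr5": {"app": "nginx", "pod-template-hash": "3181266511"},
    "nginx-deployment-75d56bb955-dvrgn": {"app": "nginx", "pod-template-hash": "3181266511"},
    "nginx-deployment-75d56bb955-g9xtq": {"app": "nginx", "pod-template-hash": "3181266511"},
    "net-test-5767cb94df-f46xh": {"run": "net-test"},
    "net-test-5767cb94df-hk68l": {"run": "net-test"},
}


def select_pods(selector, pod_labels):
    """Return the sorted names of pods whose labels satisfy the selector."""
    return sorted(
        name for name, labels in pod_labels.items()
        if selector_matches(selector, labels)
    )


if __name__ == "__main__":
    for name in select_pods(SERVICE_SELECTOR, POD_LABELS):
        print(name)
```

Because matching is by label rather than by pod name or IP, the service keeps working when a rolling update or rollback replaces the pods and their IP addresses change.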

#Create the nginx-service service (note: run on linux-node1)

    [root@linux-node1 ~]# kubectl create -f nginx-service.yaml
    service "nginx-service" created

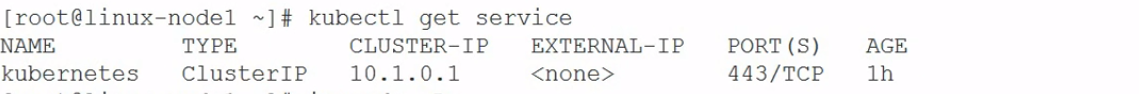

#View the service; its ClusterIP is 10.1.156.120 (note: this is the VIP). Run on linux-node1.

    [root@linux-node1 ~]# kubectl get service
    NAME            TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
    kubernetes      ClusterIP   10.1.0.1       <none>        443/TCP   7h
    nginx-service   ClusterIP   10.1.156.120   <none>        80/TCP    13s

#Access the VIP (note: do this from linux-node2 or linux-node3, because kube-proxy is not installed on linux-node1)

    [root@linux-node2 ~]# curl --head http://10.1.156.120
    HTTP/1.1 200 OK
    Server: nginx/1.10.3
    Date: Wed, 06 Jun 2018 11:01:03 GMT
    Content-Type: text/html
    Content-Length: 612
    Last-Modified: Tue, 31 Jan 2017 15:01:11 GMT
    Connection: keep-alive
    ETag: "5890a6b7-264"
    Accept-Ranges: bytes

#View the LVS (IPVS) rules

    [root@linux-node2 ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  10.1.0.1:443 rr persistent 10800
      -> 192.168.56.11:6443           Masq    1      0          0
    #Requests to the following IP are forwarded to the three IPs listed under it.
    TCP  10.1.156.120:80 rr
      -> 10.2.15.6:80                 Masq    1      0          1
      -> 10.2.15.7:80                 Masq    1      0          1
      -> 10.2.89.6:80                 Masq    1      0          0
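The `rr` flag in the IPVS table above stands for round-robin scheduling: with equal weights, successive new connections to the VIP are handed to the backends in turn. The sketch below is a simplified Python model of that behaviour, not the kernel implementation. The backend addresses are copied from the output above, and the function name `round_robin` is an illustrative assumption.

```python
from itertools import cycle

# VIP and real servers as listed by `ipvsadm -Ln` (all with weight 1).
VIP = "10.1.156.120:80"
BACKENDS = ["10.2.15.6:80", "10.2.15.7:80", "10.2.89.6:80"]


def round_robin(backends, connections):
    """Return the backend picked for each of `connections` new connections."""
    picker = cycle(backends)  # endlessly repeats the backend list in order
    return [next(picker) for _ in range(connections)]


if __name__ == "__main__":
    for number, backend in enumerate(round_robin(BACKENDS, 6), start=1):
        print(f"connection {number} to {VIP} -> {backend}")
```

Over six connections each of the three backends is chosen twice, which is why the connection counters in the `ipvsadm` output stay roughly even across the pods.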

#Quickly scale the deployment out to 5 replicas

    [root@linux-node1 ~]# kubectl scale deployment nginx-deployment --replicas 5
    #Output
    deployment.extensions "nginx-deployment" scaled

#View the pods (note: run on linux-node1)

    [root@linux-node1 ~]# kubectl get pod -o wide
    NAME                                READY   STATUS    RESTARTS   AGE   IP          NODE
    net-test-5767cb94df-f46xh           1/1     Running   0          1h    10.2.15.2   192.168.56.13
    net-test-5767cb94df-hk68l           1/1     Running   0          1h    10.2.89.2   192.168.56.12
    nginx-deployment-75d56bb955-d8wr5   1/1     Running   0          15m   10.2.15.6   192.168.56.13    #the 5 replicas start here
    nginx-deployment-75d56bb955-dvrgn   1/1     Running   0          15m   10.2.89.6   192.168.56.12
    nginx-deployment-75d56bb955-g9xtq   1/1     Running   0          15m   10.2.15.7   192.168.56.13
    nginx-deployment-75d56bb955-wxbv7   1/1     Running   0          1m    10.2.15.8   192.168.56.13
    nginx-deployment-75d56bb955-x9qlf   1/1     Running   0          1m    10.2.89.7   192.168.56.12

#Check that LVS now lists the 5 backends (note: run on linux-node2 or linux-node3)

    [root@linux-node2 ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  10.1.0.1:443 rr persistent 10800
      -> 192.168.56.11:6443           Masq    1      0          0
    TCP  10.1.156.120:80 rr
      -> 10.2.15.6:80                 Masq    1      0          0
      -> 10.2.15.7:80                 Masq    1      0          0
      -> 10.2.15.8:80                 Masq    1      0          0
      -> 10.2.89.6:80                 Masq    1      0          0
      -> 10.2.89.7:80                 Masq    1      0          0

XII. Deploying Kubernetes CoreDNS and Dashboard

1. Install CoreDNS

#Write the script that creates CoreDNS: